Manoj Goyal

TeLCoS: OnDevice Text Localization with Clustering of Script

Apr 21, 2021

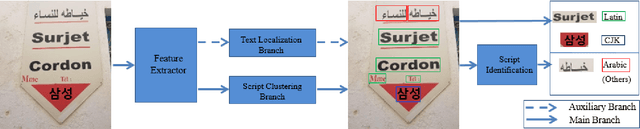

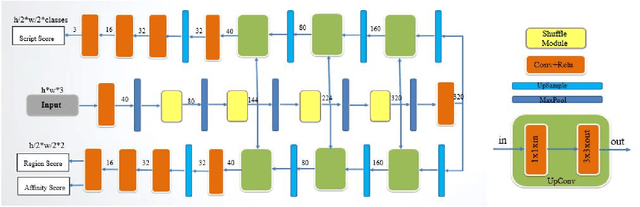

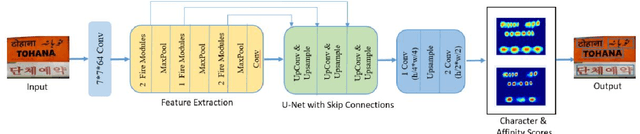

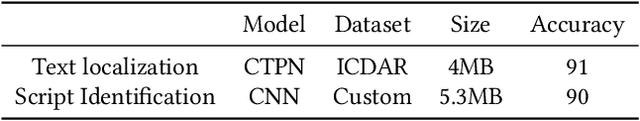

Abstract:Recent research in the field of text localization in a resource constrained environment has made extensive use of deep neural networks. Scene text localization and recognition on low-memory mobile devices have a wide range of applications including content extraction, image categorization and keyword based image search. For text recognition of multi-lingual localized text, the OCR systems require prior knowledge of the script of each text instance. This leads to word script identification being an essential step for text recognition. Most existing methods treat text localization, script identification and text recognition as three separate tasks. This makes script identification an overhead in the recognition pipeline. To reduce this overhead, we propose TeLCoS: OnDevice Text Localization with Clustering of Script, a multi-task dual branch lightweight CNN network that performs real-time on device Text Localization and High-level Script Clustering simultaneously. The network drastically reduces the number of calls to a separate script identification module, by grouping and identifying some majorly used scripts through a single feed-forward pass over the localization network. We also introduce a novel structural similarity based channel pruning mechanism to build an efficient network with only 1.15M parameters. Experiments on benchmark datasets suggest that our method achieves state-of-the-art performance, with execution latency of 60 ms for the entire pipeline on the Exynos 990 chipset device.

ScreenSeg: On-Device Screenshot Layout Analysis

Apr 21, 2021

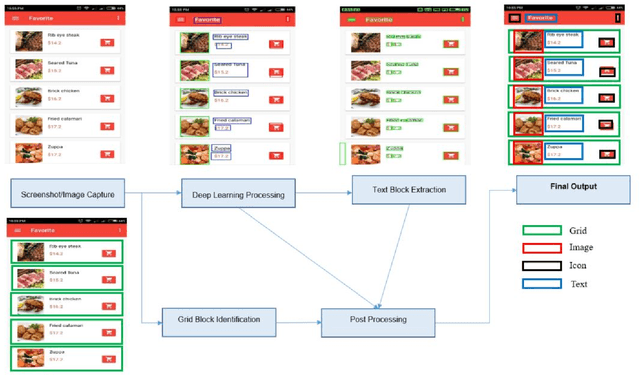

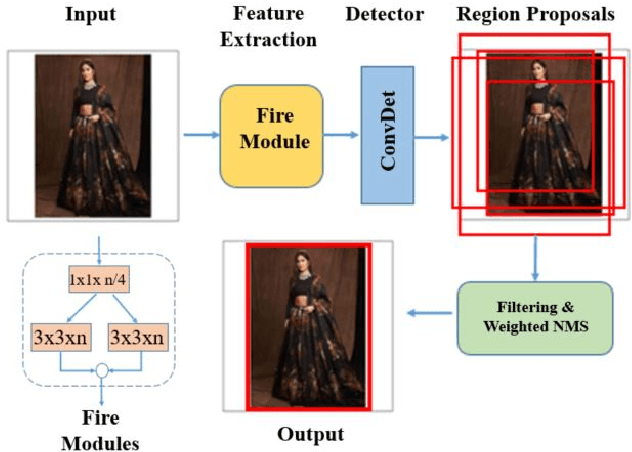

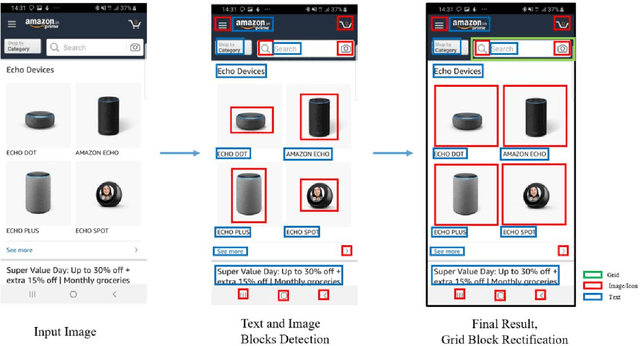

Abstract:We propose a novel end-to-end solution that performs a Hierarchical Layout Analysis of screenshots and document images on resource constrained devices like mobilephones. Our approach segments entities like Grid, Image, Text and Icon blocks occurring in a screenshot. We provide an option for smart editing by auto highlighting these entities for saving or sharing. Further this multi-level layout analysis of screenshots has many use cases including content extraction, keyword-based image search, style transfer, etc. We have addressed the limitations of known baseline approaches, supported a wide variety of semantically complex screenshots, and developed an approach which is highly optimized for on-device deployment. In addition, we present a novel weighted NMS technique for filtering object proposals. We achieve an average precision of about 0.95 with a latency of around 200ms on Samsung Galaxy S10 Device for a screenshot of 1080p resolution. The solution pipeline is already commercialized in Samsung Device applications i.e. Samsung Capture, Smart Crop, My Filter in Camera Application, Bixby Touch.

On-Device Text Image Super Resolution

Nov 20, 2020

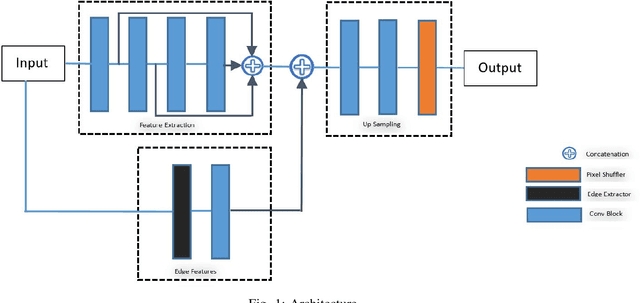

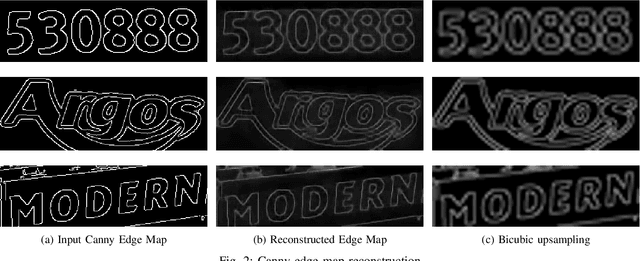

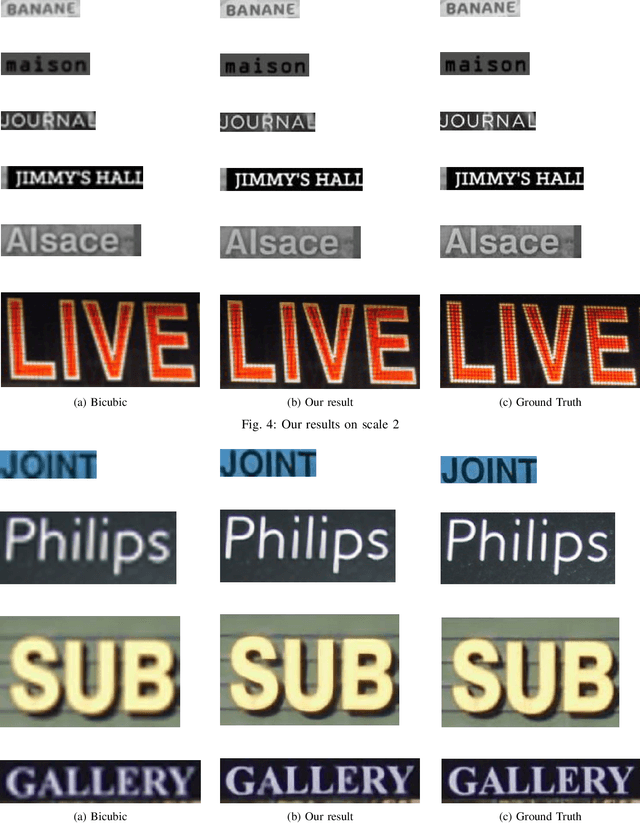

Abstract:Recent research on super-resolution (SR) has witnessed major developments with the advancements of deep convolutional neural networks. There is a need for information extraction from scenic text images or even document images on device, most of which are low-resolution (LR) images. Therefore, SR becomes an essential pre-processing step as Bicubic Upsampling, which is conventionally present in smartphones, performs poorly on LR images. To give the user more control over his privacy, and to reduce the carbon footprint by reducing the overhead of cloud computing and hours of GPU usage, executing SR models on the edge is a necessity in the recent times. There are various challenges in running and optimizing a model on resource-constrained platforms like smartphones. In this paper, we present a novel deep neural network that reconstructs sharper character edges and thus boosts OCR confidence. The proposed architecture not only achieves significant improvement in PSNR over bicubic upsampling on various benchmark datasets but also runs with an average inference time of 11.7 ms per image. We have outperformed state-of-the-art on the Text330 dataset. We also achieve an OCR accuracy of 75.89% on the ICDAR 2015 TextSR dataset, where ground truth has an accuracy of 78.10%.

On- Device Information Extraction from Screenshots in form of tags

Jan 11, 2020

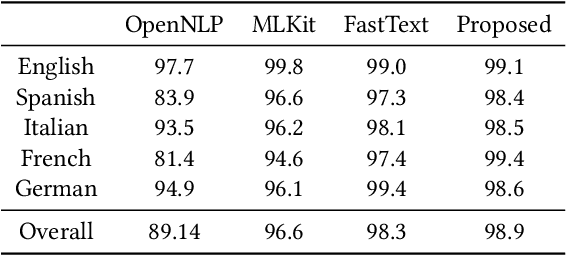

Abstract:We propose a method to make mobile screenshots easily searchable. In this paper, we present the workflow in which we: 1) preprocessed a collection of screenshots, 2) identified script presentin image, 3) extracted unstructured text from images, 4) identifiedlanguage of the extracted text, 5) extracted keywords from the text, 6) identified tags based on image features, 7) expanded tag set by identifying related keywords, 8) inserted image tags with relevant images after ranking and indexed them to make it searchable on device. We made the pipeline which supports multiple languages and executed it on-device, which addressed privacy concerns. We developed novel architectures for components in the pipeline, optimized performance and memory for on-device computation. We observed from experimentation that the solution developed can reduce overall user effort and improve end user experience while searching, whose results are published.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge