Rutika Moharir

On-Device Spatial Attention based Sequence Learning Approach for Scene Text Script Identification

Dec 01, 2021

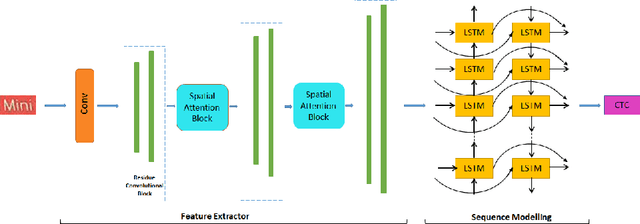

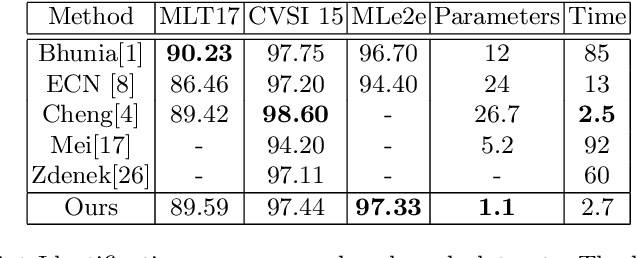

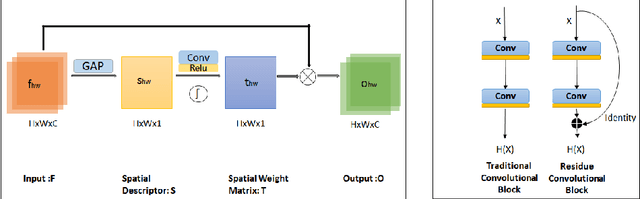

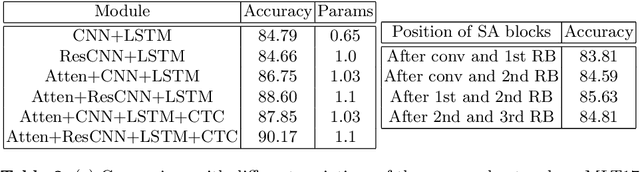

Abstract: Automatic script identification is an essential component of a multilingual OCR engine. In this paper, we present an efficient, lightweight, real-time, on-device spatial attention based CNN-LSTM network for scene text script identification that is feasible to deploy on resource-constrained mobile devices. Our network consists of a CNN equipped with a spatial attention module, which helps reduce the spatial distortions present in natural images. This allows the feature extractor to generate rich image representations while ignoring the deformities, thereby enhancing performance on this fine-grained classification task. The network also employs residue convolutional blocks to build a deep network that focuses on the discriminative features of a script. The CNN learns the text feature representation by identifying each character as belonging to a particular script, and the long-term spatial dependencies within the text are captured by the sequence learning capabilities of the LSTM layers. By combining the spatial attention mechanism with the residue convolutional blocks, we enhance the performance of the baseline CNN and build an end-to-end trainable network for script identification. Experimental results on several standard benchmarks demonstrate the effectiveness of our method. The network achieves accuracy competitive with state-of-the-art methods and is superior in terms of network size, with a total of just 1.1 million parameters and an inference time of 2.7 milliseconds.
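To put the 1.1 million parameter figure in context, the following is a minimal sketch of how parameter counts for convolutional and LSTM layers are typically computed. The layer sizes used here are hypothetical and are not taken from the paper; the LSTM formula assumes one bias vector per gate (some frameworks store two).

```python
def conv2d_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus one bias per output channel for a square 2D convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


def lstm_params(input_size: int, hidden_size: int) -> int:
    """Four gates, each with input weights, recurrent weights and one bias vector."""
    per_gate = hidden_size * (input_size + hidden_size) + hidden_size
    return 4 * per_gate


# Hypothetical layer sizes, chosen only to illustrate the arithmetic.
conv_count = conv2d_params(kernel=3, in_ch=3, out_ch=64)
lstm_count = lstm_params(input_size=256, hidden_size=128)
print(conv_count, lstm_count)
```

Summing such counts over every layer gives the total that a size budget like the one quoted in the abstract would be compared against.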

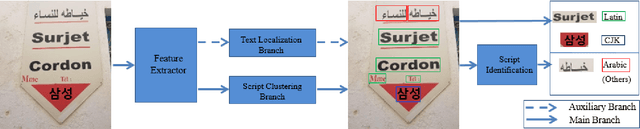

TeLCoS: OnDevice Text Localization with Clustering of Script

Apr 21, 2021

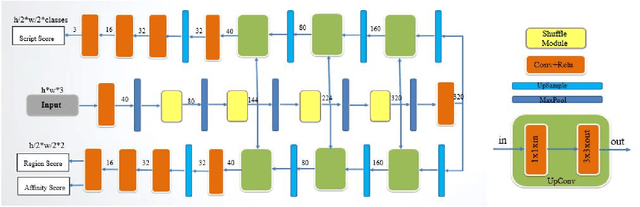

Abstract: Recent research on text localization in resource-constrained environments has made extensive use of deep neural networks. Scene text localization and recognition on low-memory mobile devices have a wide range of applications, including content extraction, image categorization and keyword-based image search. To recognize multilingual localized text, OCR systems require prior knowledge of the script of each text instance, which makes word-level script identification an essential step in text recognition. Most existing methods treat text localization, script identification and text recognition as three separate tasks, so script identification becomes an overhead in the recognition pipeline. To reduce this overhead, we propose TeLCoS: OnDevice Text Localization with Clustering of Script, a multi-task, dual-branch, lightweight CNN that performs real-time on-device text localization and high-level script clustering simultaneously. The network drastically reduces the number of calls to a separate script identification module by grouping and identifying several widely used scripts in a single feed-forward pass over the localization network. We also introduce a novel structural similarity based channel pruning mechanism to build an efficient network with only 1.15M parameters. Experiments on benchmark datasets suggest that our method achieves state-of-the-art performance, with an execution latency of 60 ms for the entire pipeline on a device with the Exynos 990 chipset.
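The saving described in the abstract can be illustrated with a minimal routing sketch: words whose cluster already determines the script skip the separate identification module, and only the rest trigger a call. The function name `route_words`, the cluster labels and the script names are all hypothetical; the abstract does not specify which clusters or scripts the network uses.

```python
from typing import Callable, Dict, List, Tuple


def route_words(
    words: List[Tuple[str, str]],
    resolved: Dict[str, str],
    identify_script: Callable[[str], str],
) -> Tuple[List[Tuple[str, str]], int]:
    """Assign a script to each localized word and count fallback calls.

    words: (word_id, cluster_label) pairs from the localization pass.
    resolved: clusters that map directly to a single script (hypothetical).
    identify_script: stand-in for the separate script identification module.
    """
    results = []
    calls = 0
    for word_id, cluster in words:
        if cluster in resolved:
            # The cluster label alone settles the script; no extra call.
            script = resolved[cluster]
        else:
            # Ambiguous cluster: fall back to the dedicated module.
            script = identify_script(word_id)
            calls += 1
        results.append((word_id, script))
    return results, calls


# Hypothetical data for illustration only.
words = [("w1", "cluster_a"), ("w2", "cluster_other"), ("w3", "cluster_a")]
resolved = {"cluster_a": "Latin"}
results, calls = route_words(words, resolved, lambda word_id: "Devanagari")
print(results, calls)
```

In this toy run, only one of the three words reaches the fallback module, which is the kind of reduction in calls the abstract describes.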
