Lutz Lampe

Neural Probabilistic Amplitude Shaping for Nonlinear Fiber Channels

Feb 02, 2026

Abstract:We introduce neural probabilistic amplitude shaping, a joint-distribution learning framework for coherent fiber systems. The proposed scheme provides a 0.5 dB signal-to-noise ratio gain over sequence selection for dual-polarized 64-QAM transmission across a single-span 205 km link.

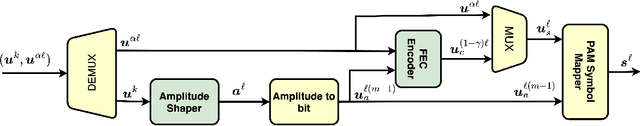

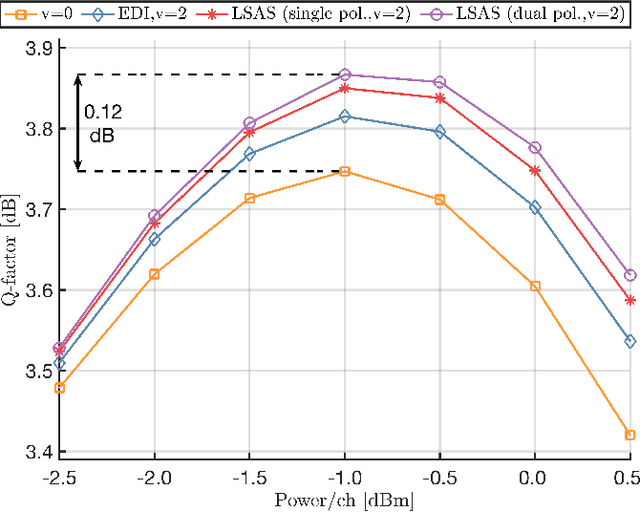

Probabilistic Shaping for Nonlinearity Tolerance

Dec 12, 2024

Abstract:Optimizing the input probability distribution of a discrete-time channel is a standard step in the information-theoretic analysis of digital communication systems. Nevertheless, many practical communication systems transmit uniformly and independently distributed symbols drawn from regular constellation sets. The introduction of the probabilistic amplitude shaping architecture has renewed interest in using optimized probability distributions, i.e., probabilistic shaping. Traditionally, probabilistic shaping has been employed to reduce the transmit power required for a given information rate over additive noise channels. While this translates into substantive performance gains for optical fiber communication systems, the interaction of shaping and fiber nonlinearity has posed intriguing questions. At first glance, probabilistic shaping seems to exacerbate nonlinear interference noise (NLIN) due to larger higher-order standardized moments. Therefore, shaping distributions for the fiber channel must be optimized differently from those used for linear channels. Secondly, finite-length effects related to the memory of the nonlinear fiber channel have been observed. This suggests that the marginal input-symbol distribution is not the only consideration. This paper provides a tutorial-style discussion of probabilistic shaping for optical fiber communication. Since the distinguishing property of the channel is the signal-dependent NLIN, we speak of probabilistic shaping for nonlinearity tolerance. Our analysis builds on the first-order time-domain perturbation approximation of the nonlinear fiber channel and revisits the notion of linear and nonlinear shaping gain. We largely focus on probabilistic amplitude shaping with popular shaping methods. The concept of shaping via sequence selection is given special consideration, as it inherently optimizes a multivariate distribution for shaped constellations.
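The remark about larger higher-order standardized moments can be illustrated with a small numerical sketch. It compares the standardized fourth moment (kurtosis) of uniform 64-QAM with that of a Maxwell-Boltzmann-shaped 64-QAM. The Maxwell-Boltzmann family, the shaping parameter `nu = 0.03`, and all function names are assumptions chosen for illustration; the abstract does not specify them.

```python
import math

def qam_points(m_side=8):
    """Square QAM grid with odd-integer levels, e.g. 8 x 8 = 64-QAM."""
    levels = [2 * i - (m_side - 1) for i in range(m_side)]
    return [complex(a, b) for a in levels for b in levels]

def maxwell_boltzmann(points, nu):
    """Probabilities proportional to exp(-nu * |x|^2) (assumed shaping family)."""
    weights = [math.exp(-nu * abs(x) ** 2) for x in points]
    total = sum(weights)
    return [w / total for w in weights]

def mean_power(points, probs):
    """Second moment E[|x|^2]."""
    return sum(p * abs(x) ** 2 for x, p in zip(points, probs))

def kurtosis(points, probs):
    """Standardized fourth moment E[|x|^4] / (E[|x|^2])^2."""
    m4 = sum(p * abs(x) ** 4 for x, p in zip(points, probs))
    return m4 / mean_power(points, probs) ** 2

points = qam_points()
uniform = [1 / len(points)] * len(points)
shaped = maxwell_boltzmann(points, nu=0.03)  # nu is a hypothetical value

k_uniform = kurtosis(points, uniform)
k_shaped = kurtosis(points, shaped)
print(f"kurtosis uniform: {k_uniform:.3f}, shaped: {k_shaped:.3f}")
print(f"mean power uniform: {mean_power(points, uniform):.1f}, "
      f"shaped: {mean_power(points, shaped):.1f}")
```

For this moderate value of `nu`, the shaped constellation has a lower mean power but a higher kurtosis than the uniform one, which is the tension between linear shaping gain and NLIN that the abstract describes.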

Perturbation-based Sequence Selection for Probabilistic Amplitude Shaping

Jul 12, 2024

Abstract:We introduce a practical sign-dependent sequence selection metric for probabilistic amplitude shaping and propose a simple method to predict the gains in signal-to-noise ratio (SNR) for sequence selection. The proposed metric provides a $0.5$ dB SNR gain for single-polarized 256-QAM transmission over a long-haul fiber link.

Bayesian Phase Search for Probabilistic Amplitude Shaping

Sep 08, 2023

Abstract:We introduce a Bayesian carrier phase recovery (CPR) algorithm which is robust against low signal-to-noise ratio scenarios. It is therefore effective for phase recovery for probabilistic amplitude shaping (PAS). Results validate that the new algorithm overcomes the degradation experienced by blind phase-search CPR for PAS.

SmartPUR: An Autonomous PUR Transmission Solution for Mobile C-IoT Devices

Jun 21, 2023

Abstract:Cellular Internet-of-things (C-IoT) user equipments (UEs) typically transmit frequent but small amounts of uplink data to the base station. Undergoing a traditional random access procedure (RAP) to transmit small but frequent data presents a considerable overhead. As a remedy, preconfigured uplink resources (PURs) are typically used in newer UEs, where the devices are allocated uplink resources beforehand to transmit on without following the RAP. A prerequisite for transmitting on PURs is that the UEs must use a valid timing advance (TA) so that they do not interfere with transmissions of other nodes in adjacent resources. One solution to this end is for the UE to validate its previously held TA to ensure that it is still valid. While this validation is trivial for stationary UEs, mobile UEs often encounter conditions where the previous TA is no longer valid and a new one must be requested by falling back on the legacy RAP. This limits the applicability of PURs in mobile UEs. To counter this drawback and ensure a near-universal adoption of transmitting on PURs, we propose new machine learning aided solutions for validation and prediction of TA for UEs of any type of mobility. We conduct comprehensive simulation evaluations across different types of communication environments to demonstrate that our proposed solutions provide up to 98.7% accuracy in predicting the TA.

Enhanced Hybrid Automatic Repeat Request Scheduling for Non-Terrestrial IoT Networks

May 11, 2023

Abstract:Non-terrestrial networks (NTNs) complement their terrestrial counterparts in enabling ubiquitous connectivity globally by serving unserved and/or underserved areas of the world. While supporting enhanced mobile broadband (eMBB) data over NTNs has been extensively studied in the past, focus on massive machine type communication (mMTC) over NTNs is currently growing, as also witnessed by the new study and work items included in the 3rd generation partnership project (3GPP) agenda for commissioning specifications for Internet-of-Things (IoT) communications over NTNs. Supporting mMTC in non-terrestrial cellular IoT (C-IoT) networks requires jointly addressing the unique challenges introduced in NTNs and C-IoT communications. In this paper, we tackle one such issue: the extended round-trip time and increased path loss in NTNs, which result in degraded network throughput. We propose smarter transport block scheduling methods that can increase the efficiency of resource utilization. We conduct end-to-end link-level simulations of C-IoT traffic over NTNs and present numerical results of the data rate gains achieved to show the performance of our proposed solutions against legacy scheduling methods.

Deep Learning-Aided Perturbation Model-Based Fiber Nonlinearity Compensation

Nov 19, 2022

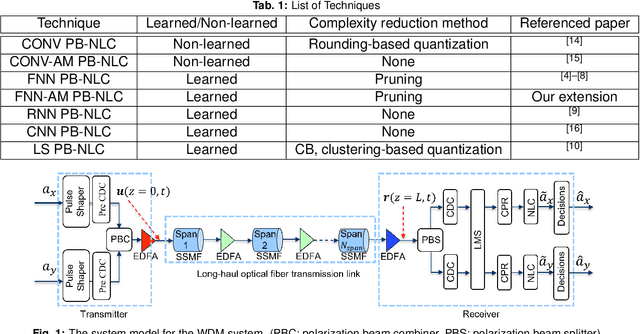

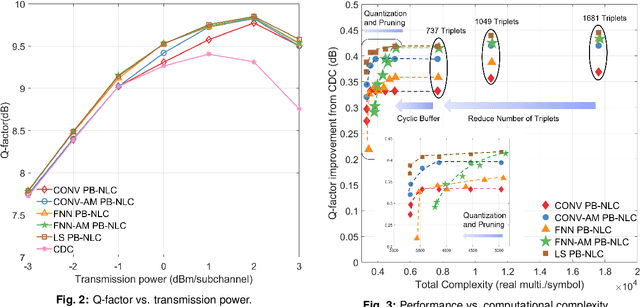

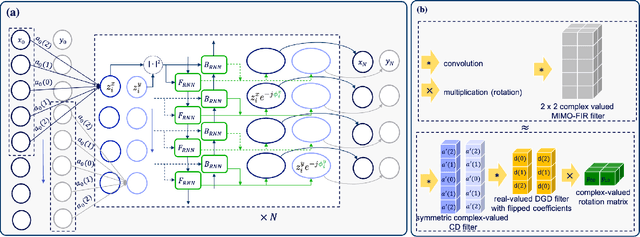

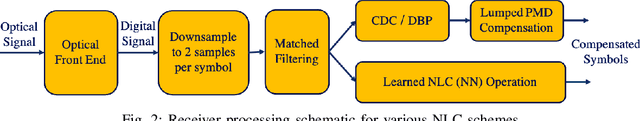

Abstract:Fiber nonlinearity effects cap achievable rates and ranges in long-haul optical fiber communication links. Conventional nonlinearity compensation methods, such as perturbation theory-based nonlinearity compensation (PB-NLC), attempt to compensate for the nonlinearity by approximating analytical solutions to the signal propagation over optical fibers. However, their practical usability is limited by model mismatch and the immense computational complexity associated with the analytical computation of perturbation triplets and the nonlinearity distortion field. Recently, machine learning techniques have been used to optimize parameters of PB-based approaches, which traditionally have been determined analytically from physical models. It has been claimed in the literature that the learned PB-NLC approaches have improved performance and/or reduced computational complexity over their non-learned counterparts. In this paper, we first revisit the claimed benefits of the learned PB-NLC approaches by carefully carrying out a comprehensive performance-complexity analysis utilizing state-of-the-art complexity reduction methods. Interestingly, our results show that least squares-based PB-NLC with clustering quantization has the best performance-complexity trade-off among the learned PB-NLC approaches. Second, we advance the state-of-the-art of learned PB-NLC by proposing and designing a fully learned structure. We apply a bi-directional recurrent neural network for learning perturbation triplets that are similar to those obtained from the analytical computation and are used as input features for the neural network to estimate the nonlinearity distortion field. Finally, we demonstrate through numerical simulations that our proposed fully learned approach achieves an improved performance-complexity trade-off compared to the existing learned and non-learned PB-NLC techniques.

Learning for Perturbation-Based Fiber Nonlinearity Compensation

Oct 07, 2022

Abstract:Several machine learning inspired methods for perturbation-based fiber nonlinearity compensation (PB-NLC) have been presented in recent literature. We critically revisit the claimed benefits of those over non-learned methods. Numerical results suggest that learned linear processing of perturbation triplets of PB-NLC is preferable over feedforward neural-network solutions.

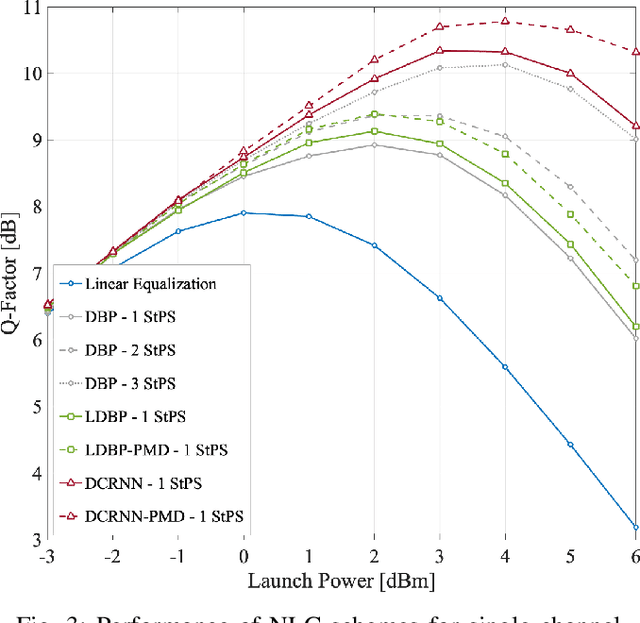

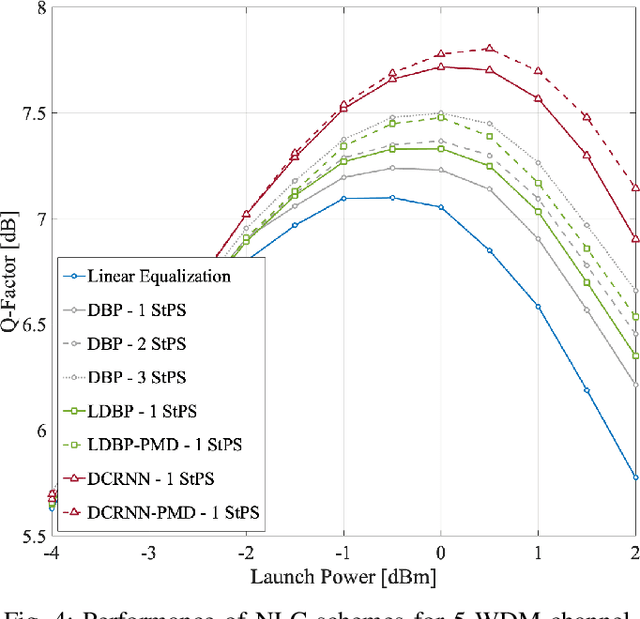

Joint PMD Tracking and Nonlinearity Compensation with Deep Neural Networks

Sep 21, 2022

Abstract:Overcoming fiber nonlinearity is one of the core challenges limiting the capacity of optical fiber communication systems. Machine learning based solutions such as learned digital backpropagation (LDBP) and the recently proposed deep convolutional recurrent neural network (DCRNN) have been shown to be effective for fiber nonlinearity compensation (NLC). Incorporating distributed compensation of polarization mode dispersion (PMD) within the learned models can improve their performance even further but at the same time, it also couples the compensation of nonlinearity and PMD. Consequently, it is important to consider the time variation of PMD for such a joint compensation scheme. In this paper, we investigate the impact of PMD drift on the DCRNN model with distributed compensation of PMD. We propose a transfer learning based selective training scheme to adapt the learned neural network model to changes in PMD. We demonstrate that fine-tuning only a small subset of weights as per the proposed method is sufficient for adapting the model to PMD drift. Using decision directed feedback for online learning, we track continuous PMD drift resulting from a time-varying rotation of the state of polarization (SOP). We show that transferring knowledge from a pre-trained base model using the proposed scheme significantly reduces the re-training efforts for different PMD realizations. Applying the hinge model for SOP rotation, our simulation results show that the learned models maintain their performance gains while tracking the PMD.

Probabilistic Amplitude Shaping and Nonlinearity Tolerance: Analysis and Sequence Selection Method

Aug 06, 2022

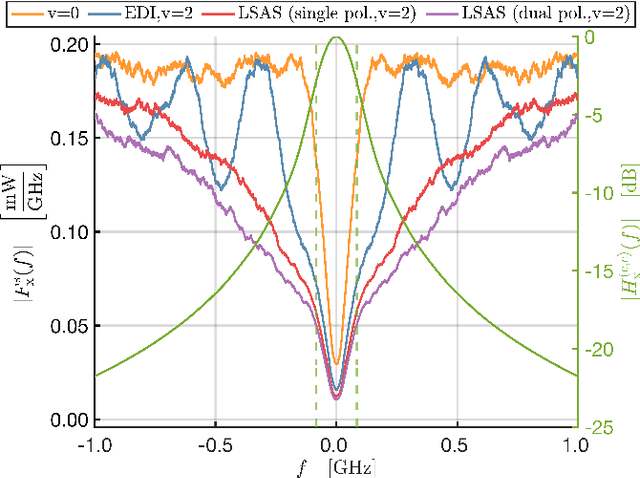

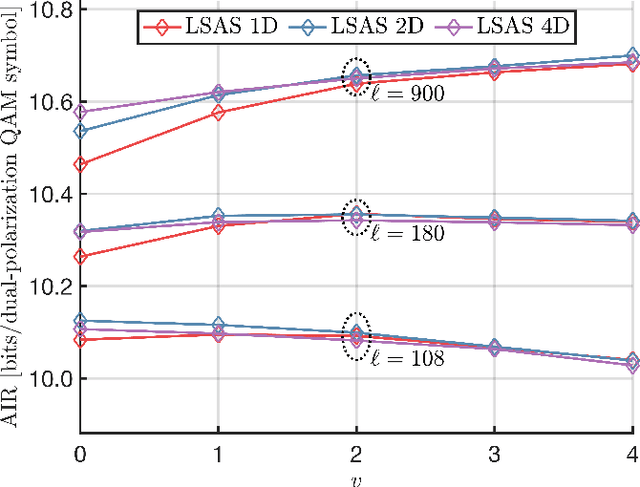

Abstract:Probabilistic amplitude shaping (PAS) is a practical means to achieve a shaping gain in optical fiber communication. However, PAS and shaping in general also affect the signal-dependent generation of nonlinear interference. This provides an opportunity for nonlinearity mitigation through PAS, which is also referred to as a nonlinear shaping gain. In this paper, we introduce a linear lowpass filter model that relates transmitted symbol-energy sequences and nonlinear distortion experienced in an optical fiber channel. Based on this model, we conduct a nonlinearity analysis of PAS with respect to shaping blocklength and mapping strategy. Our model explains results and relationships found in literature and can be used as a design tool for PAS with improved nonlinearity tolerance. We use the model to introduce a new metric for PAS with sequence selection. We perform simulations of selection-based PAS with various amplitude shapers and mapping strategies to demonstrate the effectiveness of the new metric in different optical fiber system scenarios.
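The idea of selecting sequences based on lowpass-filtered symbol energies can be sketched as follows. This is only an illustration under stated assumptions: the moving-average filter, the variance-based score, the window length, and the uniformly random candidates standing in for amplitude-shaper outputs are all hypothetical. The filter and metric actually used in the paper are not given in the abstract.

```python
import random

def windowed_energy(symbols, window):
    """Moving average of |x|^2, used here as an assumed lowpass filter."""
    energies = [abs(s) ** 2 for s in symbols]
    return [sum(energies[i:i + window]) / window
            for i in range(len(energies) - window + 1)]

def energy_variation(symbols, window=8):
    """Variance of the filtered energy sequence (hypothetical selection score)."""
    filtered = windowed_energy(symbols, window)
    mean = sum(filtered) / len(filtered)
    return sum((e - mean) ** 2 for e in filtered) / len(filtered)

def select_sequence(candidates, window=8):
    """Keep the candidate whose filtered energy fluctuates the least."""
    return min(candidates, key=lambda seq: energy_variation(seq, window))

# Random 64-QAM sequences as stand-ins for candidate shaped sequences.
rng = random.Random(0)
levels = [-7, -5, -3, -1, 1, 3, 5, 7]
candidates = [
    [complex(rng.choice(levels), rng.choice(levels)) for _ in range(64)]
    for _ in range(16)
]

best = select_sequence(candidates)
scores = [energy_variation(c) for c in candidates]
print(f"selected score: {energy_variation(best):.2f}, "
      f"mean candidate score: {sum(scores) / len(scores):.2f}")
```

In this sketch, a smaller score means smoother energy over the window, which serves as a proxy for lower expected nonlinear distortion. Selecting one of 16 candidates costs 4 bits of rate per sequence, so in practice the selection gain has to be weighed against that rate loss.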
