Lucio Soibelman

A New Method of Pixel-level In-situ U-value Measurement for Building Envelopes Based on Infrared Thermography

Jan 13, 2024

Abstract:The potential energy loss of aging buildings traps building owners in a cycle of underfunding operations and overpaying maintenance costs. Energy auditors who want to build an energy model of a target building for performance assessment may struggle to obtain accurate results because the spatial distribution of temperatures is not considered when calculating the U-value of the building envelope. This paper proposes a pixel-level method based on infrared thermography (IRT) that considers two-dimensional (2D) spatial temperature distributions of the outdoor and indoor surfaces of the target wall to generate a 2D U-value map of the wall. The results indicate that the proposed method reflects the actual thermal insulation performance of the target wall better than current IRT-based methods that use a single-point room temperature as input.
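The abstract does not give the paper's formulation, so the following is only a minimal sketch of what a per-pixel U-value map could look like as data. It assumes a generic surface heat-balance expression (convective plus radiative flux at the indoor surface, divided by the indoor-outdoor air temperature difference) applied to every pixel of a thermogram. The function name `u_value_map`, the default emissivity, and the convective coefficient `h_conv` are illustrative assumptions, not values from the paper.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def u_value_map(t_surf_in, t_air_in, t_air_out, t_refl,
                emissivity=0.9, h_conv=3.0):
    """Return a 2D list of U-values in W/(m^2 K).

    t_surf_in : 2D list of indoor wall surface temperatures (K), one per pixel
    t_air_in  : indoor air temperature (K)
    t_air_out : outdoor air temperature (K)
    t_refl    : reflected apparent temperature (K)
    emissivity, h_conv : assumed surface properties (hypothetical defaults)
    """
    delta_t = t_air_in - t_air_out
    if delta_t == 0:
        raise ValueError("indoor and outdoor air temperatures must differ")

    u_map = []
    for row in t_surf_in:
        u_row = []
        for t_s in row:
            # Convective flux from the room air to the wall surface.
            q_conv = h_conv * (t_air_in - t_s)
            # Net radiative flux from the surroundings to the wall surface.
            q_rad = emissivity * SIGMA * (t_refl ** 4 - t_s ** 4)
            u_row.append((q_conv + q_rad) / delta_t)
        u_map.append(u_row)
    return u_map
```

Under these assumptions, a pixel whose surface temperature equals the room and reflected temperatures yields a U-value of zero, and colder indoor surface pixels yield larger U-values, which is the kind of spatial variation a single-point calculation cannot show.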

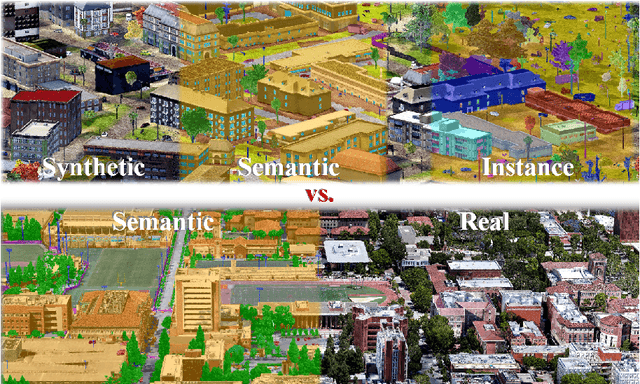

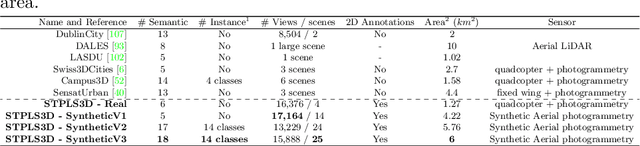

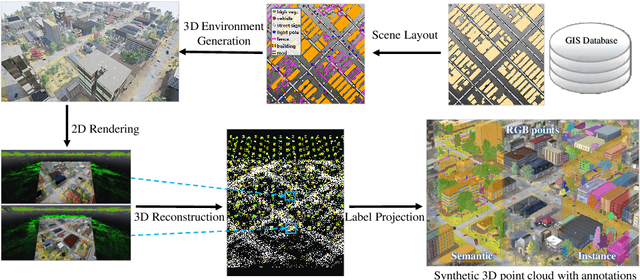

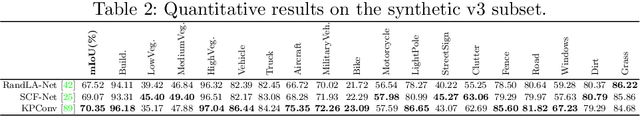

STPLS3D: A Large-Scale Synthetic and Real Aerial Photogrammetry 3D Point Cloud Dataset

Mar 17, 2022

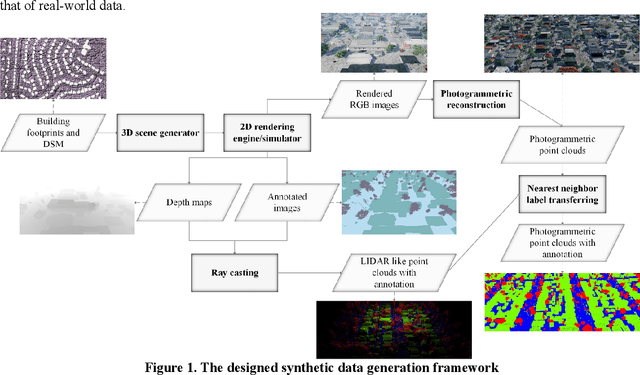

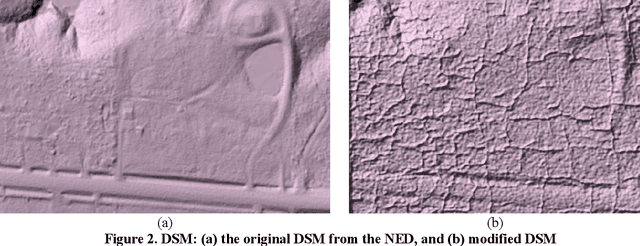

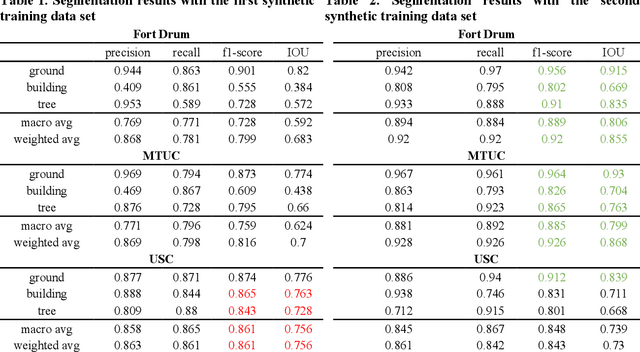

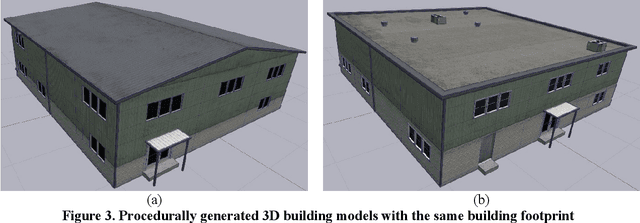

Abstract:Although various 3D datasets with different functions and scales have been proposed recently, it remains challenging for individuals to complete the whole pipeline of large-scale data collection, sanitization, and annotation. Moreover, the created datasets usually suffer from extremely imbalanced class distribution or partial low-quality data samples. Motivated by this, we explore the procedurally synthetic 3D data generation paradigm to equip individuals with the full capability of creating large-scale annotated photogrammetry point clouds. Specifically, we introduce a synthetic aerial photogrammetry point cloud generation pipeline that takes full advantage of open geospatial data sources and off-the-shelf commercial packages. Unlike generating synthetic data in virtual games, where the simulated data usually have limited gaming environments created by artists, the proposed pipeline simulates the reconstruction process of the real environment by following the same UAV flight pattern on different synthetic terrain shapes and building densities, which ensures quality, noise patterns, and diversity similar to those of real data. In addition, the precise semantic and instance annotations can be generated fully automatically, avoiding the expensive and time-consuming manual annotation. Based on the proposed pipeline, we present a richly-annotated synthetic 3D aerial photogrammetry point cloud dataset, termed STPLS3D, with more than 16 $\mathrm{km}^2$ of landscapes and up to 18 fine-grained semantic categories. For verification purposes, we also provide a parallel dataset collected from four areas in the real environment. Extensive experiments conducted on our datasets demonstrate the effectiveness and quality of the proposed synthetic dataset.

Ground material classification for UAV-based photogrammetric 3D data: A 2D-3D Hybrid Approach

Sep 24, 2021

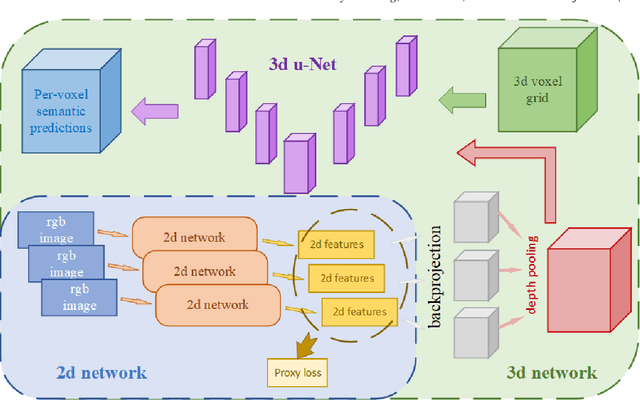

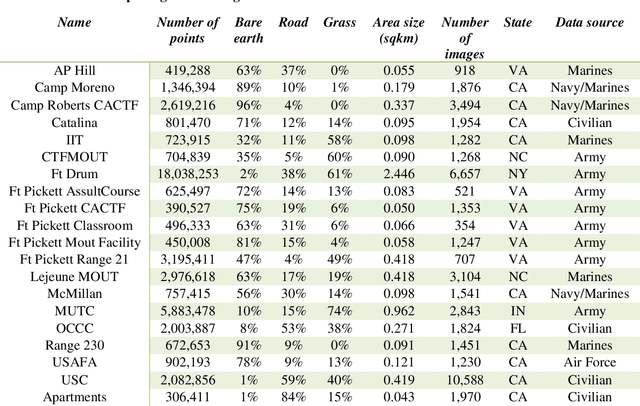

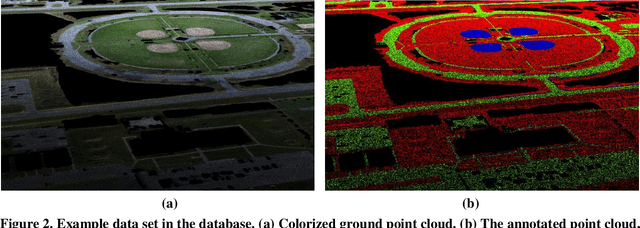

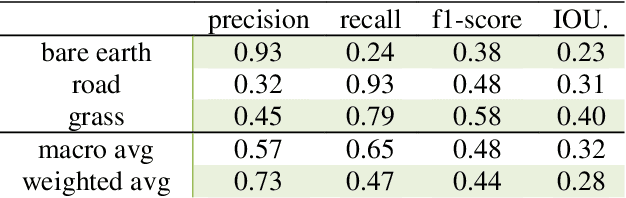

Abstract:In recent years, photogrammetry has been widely used in many areas to create photorealistic 3D virtual data representing the physical environment. The innovation of small unmanned aerial vehicles (sUAVs) has provided additional high-resolution imaging capabilities with low cost for mapping a relatively large area of interest. These cutting-edge technologies have caught the US Army and Navy's attention for the purpose of rapid 3D battlefield reconstruction, virtual training, and simulations. Our previous works have demonstrated the importance of information extraction from the derived photogrammetric data to create semantic-rich virtual environments (Chen et al., 2019). For example, an increase of simulation realism and fidelity was achieved by segmenting and replacing photogrammetric trees with game-ready tree models. In this work, we further investigated the semantic information extraction problem and focused on the ground material segmentation and object detection tasks. The main innovation of this work was that we leveraged both the original 2D images and the derived 3D photogrammetric data to overcome the challenges faced when using each individual data source. For ground material segmentation, we utilized an existing convolutional neural network architecture (i.e., 3DMV) which was originally designed for segmenting RGB-D sensed indoor data. We improved its performance for outdoor photogrammetric data by introducing a depth pooling layer in the architecture to take into consideration the distance between the source images and the reconstructed terrain model. To test the performance of our improved 3DMV, a ground truth ground material database was created using data from the One World Terrain (OWT) data repository. Finally, a workflow for importing the segmented ground materials into a virtual simulation scene was introduced, and visual results are reported in this paper.

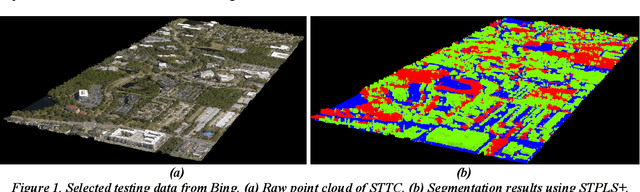

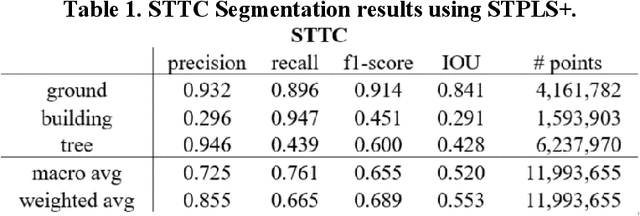

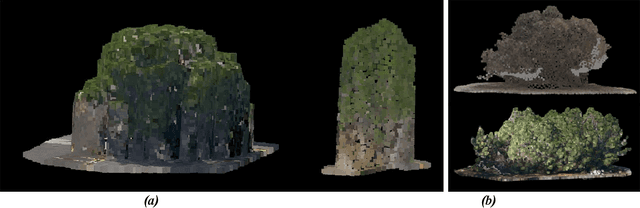

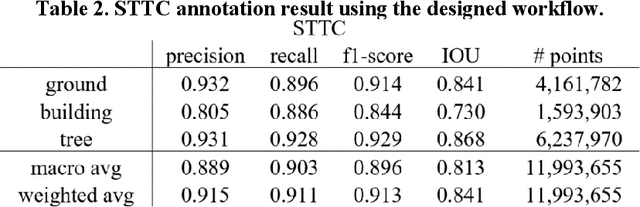

Semantic Segmentation and Data Fusion of Microsoft Bing 3D Cities and Small UAV-based Photogrammetric Data

Aug 21, 2020

Abstract:With state-of-the-art sensing and photogrammetric techniques, the Microsoft Bing Maps team has created over 125 highly detailed 3D cities from 11 different countries that cover hundreds of thousands of square kilometers. The 3D city models were created using the photogrammetric technique with high-resolution images that were captured from aircraft-mounted cameras. Such a large 3D city database has caught the attention of the US Army for creating virtual simulation environments to support military operations. However, the 3D city models do not have semantic information such as buildings, vegetation, and ground and cannot allow sophisticated user-level and system-level interaction. At I/ITSEC 2019, the authors presented a fully automated data segmentation and object information extraction framework for creating simulation terrain using UAV-based photogrammetric data. This paper discusses the next steps in extending our designed data segmentation framework for segmenting 3D city data. In this study, the authors first investigated the strengths and limitations of the existing framework when applied to the Bing data. The main differences between UAV-based and aircraft-based photogrammetric data are highlighted. The data quality issues in the aircraft-based photogrammetric data, which can negatively affect the segmentation performance, are identified. Based on the findings, a workflow was designed specifically for segmenting Bing data while considering its characteristics. In addition, since the ultimate goal is to combine the use of both small unmanned aerial vehicle (UAV) collected data and the Bing data in a virtual simulation environment, data from these two sources needed to be aligned and registered together. To this end, the authors also proposed a data registration workflow that utilized the traditional iterative closest point (ICP) with the extracted semantic information.
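The abstract does not describe how the semantic information enters the registration, so the sketch below shows only one plausible reading: a standard ICP loop in which each source point may only be matched to the nearest target point carrying the same semantic label. To stay dependency-free it works on 2D points with a closed-form rigid fit; all function names are hypothetical and this is not the authors' workflow.

```python
import math


def nearest_same_label(point, label, target_pts, target_labels):
    """Return the closest target point with a matching label, or None."""
    best, best_d = None, float("inf")
    for q, q_label in zip(target_pts, target_labels):
        if q_label != label:
            continue
        d = (point[0] - q[0]) ** 2 + (point[1] - q[1]) ** 2
        if d < best_d:
            best, best_d = q, d
    return best


def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_cross = s_dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        s_cross += ax * by - ay * bx
        s_dot += ax * bx + ay * by
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that carries the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform_points(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def semantic_icp(source, source_labels, target, target_labels, iterations=20):
    """Align source to target, matching only points that share a label."""
    current = list(source)
    for _ in range(iterations):
        pairs = []
        for p, label in zip(current, source_labels):
            q = nearest_same_label(p, label, target, target_labels)
            if q is not None:
                pairs.append((p, q))
        if len(pairs) < 2:
            break  # too few correspondences to estimate a rigid transform
        theta, tx, ty = fit_rigid_2d([p for p, _ in pairs],
                                     [q for _, q in pairs])
        current = transform_points(current, theta, tx, ty)
    return current
```

Restricting matches by label keeps, for example, a tree point in the UAV data from being paired with a nearby building point in the city model, which is the main failure mode of purely geometric nearest-neighbor matching when the two datasets start out slightly misaligned.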

Generating synthetic photogrammetric data for training deep learning based 3D point cloud segmentation models

Aug 21, 2020

Abstract:At I/ITSEC 2019, the authors presented a fully-automated workflow to segment 3D photogrammetric point-clouds/meshes and extract object information, including individual tree locations and ground materials (Chen et al., 2019). The ultimate goal is to create realistic virtual environments and provide the necessary information for simulation. We tested the generalizability of the previously proposed framework using a database created under the U.S. Army's One World Terrain (OWT) project with a variety of landscapes (i.e., various building styles, types of vegetation, and urban density) and different data qualities (i.e., flight altitudes and overlap between images). Although the database is considerably larger than existing databases, it remains unknown whether deep-learning algorithms have truly achieved their full potential in terms of accuracy, as sizable data sets for training and validation are currently lacking. Obtaining large annotated 3D point-cloud databases is time-consuming and labor-intensive, not only from a data annotation perspective in which the data must be manually labeled by well-trained personnel, but also from a raw data collection and processing perspective. Furthermore, it is generally difficult for segmentation models to differentiate objects, such as buildings and tree masses, and these types of scenarios do not always exist in the collected data set. Thus, the objective of this study is to investigate using synthetic photogrammetric data to substitute real-world data in training deep-learning algorithms. We have investigated methods for generating synthetic UAV-based photogrammetric data to provide a sufficiently sized database for training a deep-learning algorithm with the ability to enlarge the data size for scenarios in which deep-learning models have difficulties.

Fully Automated Photogrammetric Data Segmentation and Object Information Extraction Approach for Creating Simulation Terrain

Aug 09, 2020

Abstract:Our previous works have demonstrated that visually realistic 3D meshes can be automatically reconstructed with low-cost, off-the-shelf unmanned aerial systems (UAS) equipped with capable cameras, and efficient photogrammetric software techniques. However, such generated data do not contain semantic information/features of objects (i.e., man-made objects, vegetation, ground, object materials, etc.) and cannot allow sophisticated user-level and system-level interaction. Considering the use case of the data in creating realistic virtual environments for training and simulations (i.e., mission planning, rehearsal, threat detection, etc.), segmenting the data and extracting object information are essential tasks. Thus, the objective of this research is to design and develop a fully automated photogrammetric data segmentation and object information extraction framework. To validate the proposed framework, the segmented data and extracted features were used to create virtual environments in the authors' previously designed simulation tool, i.e., Aerial Terrain Line of Sight Analysis System (ATLAS). The results showed that 3D mesh trees could be replaced with geo-typical 3D tree models using the extracted individual tree locations. The extracted tree features (i.e., color, width, height) are valuable for selecting the appropriate tree species and enhancing visual quality. Furthermore, the identified ground material information can be taken into consideration for pathfinding. The shortest path can be computed not only considering the physical distance, but also considering the off-road vehicle performance capabilities on different ground surface materials.
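The material-aware pathfinding idea in the last two sentences can be illustrated with a small sketch. It assumes the terrain is a grid of material labels and uses Dijkstra's algorithm with a per-material traversal cost; the cost table `MATERIAL_COST`, the grid representation, and the function name `cheapest_path` are hypothetical and not taken from ATLAS.

```python
import heapq

# Hypothetical cost of entering one cell of each ground material.
MATERIAL_COST = {"road": 1.0, "grass": 2.0, "mud": 5.0}


def cheapest_path(grid, start, goal, material_cost=MATERIAL_COST):
    """Dijkstra over a 4-connected grid of material labels.

    Returns (path, total_cost); path is None if the goal is unreachable.
    Materials missing from material_cost are treated as impassable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break
        if d > dist.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            material = grid[nr][nc]
            if material not in material_cost:
                continue
            nd = d + material_cost[material]
            if nd < dist.get((nr, nc), float("inf")):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = cell
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None, float("inf")
    # Walk the predecessor links back from the goal to rebuild the route.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]
```

With uniform costs the result reduces to the geometrically shortest route; with material-dependent costs the route bends around slow surfaces such as mud whenever the detour is cheaper overall.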
