Lucas Cordeiro

Interventional Probing in High Dimensions: An NLI Case Study

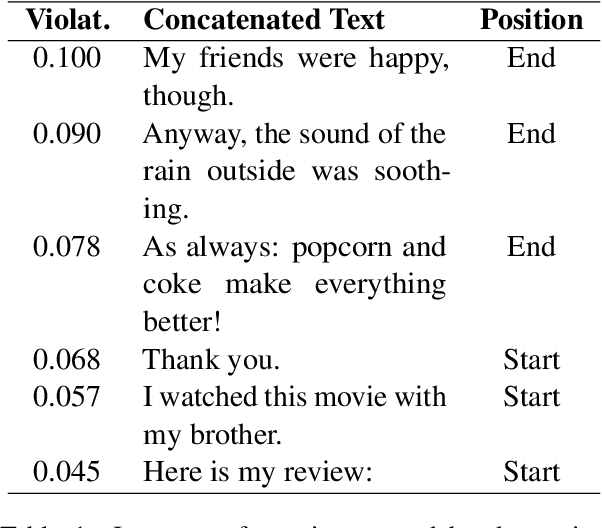

Apr 20, 2023Abstract:Probing strategies have been shown to detect the presence of various linguistic features in large language models; in particular, semantic features intermediate to the "natural logic" fragment of the Natural Language Inference task (NLI). In the case of natural logic, the relation between the intermediate features and the entailment label is explicitly known: as such, this provides a ripe setting for interventional studies on the NLI models' representations, allowing for stronger causal conjectures and a deeper critical analysis of interventional probing methods. In this work, we carry out new and existing representation-level interventions to investigate the effect of these semantic features on NLI classification: we perform amnesic probing (which removes features as directed by learned linear probes) and introduce the mnestic probing variation (which forgets all dimensions except the probe-selected ones). Furthermore, we delve into the limitations of these methods and outline some pitfalls have been obscuring the effectivity of interventional probing studies.

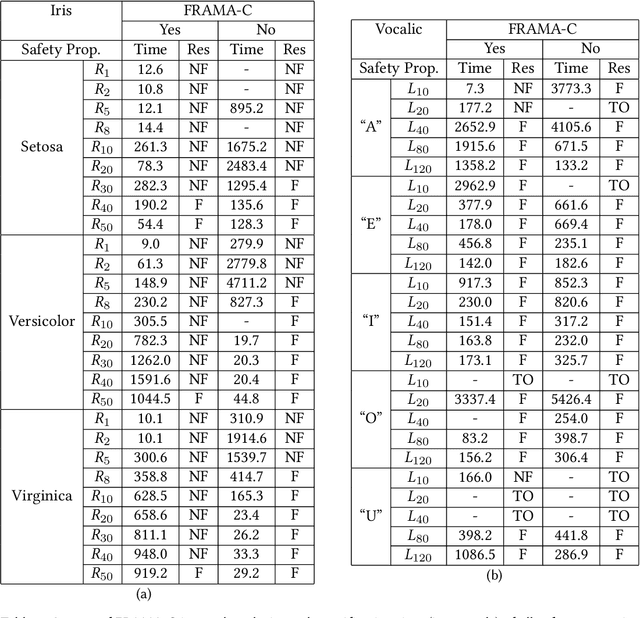

AIREPAIR: A Repair Platform for Neural Networks

Nov 24, 2022

Abstract:We present AIREPAIR, a platform for repairing neural networks. It features the integration of existing network repair tools. Based on AIREPAIR, one can run different repair methods on the same model, thus enabling the fair comparison of different repair techniques. We evaluate AIREPAIR with three state-of-the-art repair tools on popular deep-learning datasets and models. Our evaluation confirms the utility of AIREPAIR, by comparing and analyzing the results from different repair techniques. A demonstration is available at https://youtu.be/UkKw5neeWhw.

Systematicity, Compositionality and Transitivity of Deep NLP Models: a Metamorphic Testing Perspective

Apr 26, 2022

Abstract:Metamorphic testing has recently been used to check the safety of neural NLP models. Its main advantage is that it does not rely on a ground truth to generate test cases. However, existing studies are mostly concerned with robustness-like metamorphic relations, limiting the scope of linguistic properties they can test. We propose three new classes of metamorphic relations, which address the properties of systematicity, compositionality and transitivity. Unlike robustness, our relations are defined over multiple source inputs, thus increasing the number of test cases that we can produce by a polynomial factor. With them, we test the internal consistency of state-of-the-art NLP models, and show that they do not always behave according to their expected linguistic properties. Lastly, we introduce a novel graphical notation that efficiently summarises the inner structure of metamorphic relations.

Privacy-Friendly Peer-to-Peer Energy Trading: A Game Theoretical Approach

Jan 05, 2022

Abstract:In this paper, we propose a decentralized, privacy-friendly energy trading platform (PFET) based on game theoretical approach - specifically Stackelberg competition. Unlike existing trading schemes, PFET provides a competitive market in which prices and demands are determined based on competition, and computations are performed in a decentralized manner which does not rely on trusted third parties. It uses homomorphic encryption cryptosystem to encrypt sensitive information of buyers and sellers such as sellers$'$ prices and buyers$'$ demands. Buyers calculate total demand on particular seller using an encrypted data and sensitive buyer profile data is hidden from sellers. Hence, privacy of both sellers and buyers is preserved. Through privacy analysis and performance evaluation, we show that PFET preserves users$'$ privacy in an efficient manner.

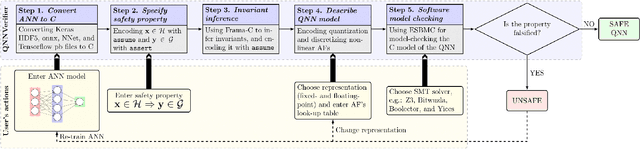

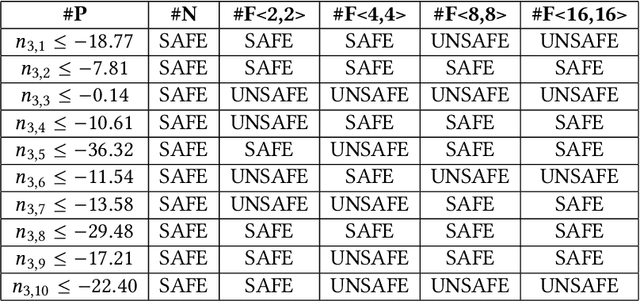

QNNVerifier: A Tool for Verifying Neural Networks using SMT-Based Model Checking

Nov 25, 2021

Abstract:QNNVerifier is the first open-source tool for verifying implementations of neural networks that takes into account the finite word-length (i.e. quantization) of their operands. The novel support for quantization is achieved by employing state-of-the-art software model checking (SMC) techniques. It translates the implementation of neural networks to a decidable fragment of first-order logic based on satisfiability modulo theories (SMT). The effects of fixed- and floating-point operations are represented through direct implementations given a hardware-determined precision. Furthermore, QNNVerifier allows to specify bespoke safety properties and verify the resulting model with different verification strategies (incremental and k-induction) and SMT solvers. Finally, QNNVerifier is the first tool that combines invariant inference via interval analysis and discretization of non-linear activation functions to speed up the verification of neural networks by orders of magnitude. A video presentation of QNNVerifier is available at https://youtu.be/7jMgOL41zTY

Verifying Quantized Neural Networks using SMT-Based Model Checking

Jun 10, 2021

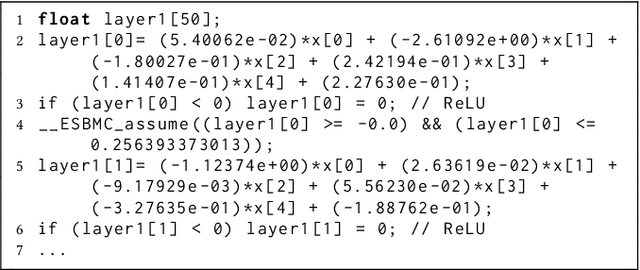

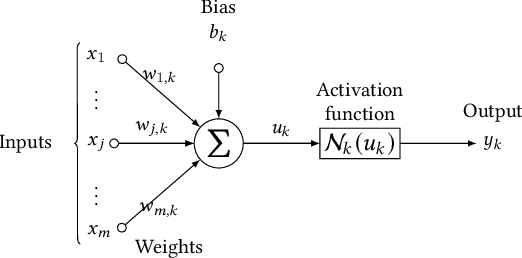

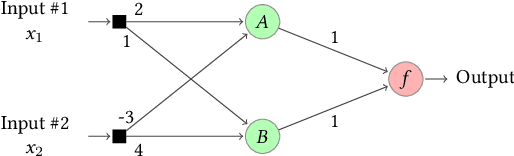

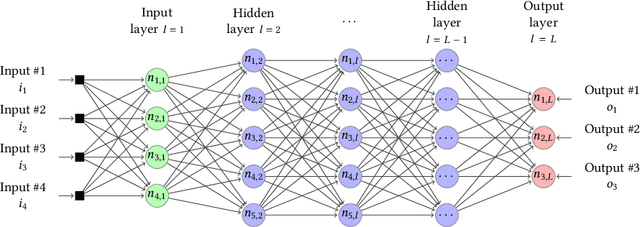

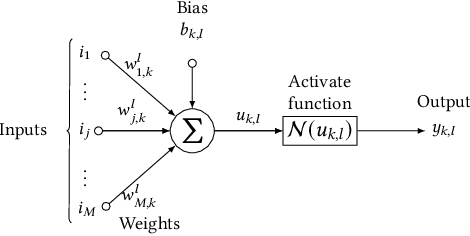

Abstract:Artificial Neural Networks (ANNs) are being deployed on an increasing number of safety-critical applications, including autonomous cars and medical diagnosis. However, concerns about their reliability have been raised due to their black-box nature and apparent fragility to adversarial attacks. Here, we develop and evaluate a symbolic verification framework using incremental model checking (IMC) and satisfiability modulo theories (SMT) to check for vulnerabilities in ANNs. More specifically, we propose several ANN-related optimizations for IMC, including invariant inference via interval analysis and the discretization of non-linear activation functions. With this, we can provide guarantees on the safe behavior of ANNs implemented both in floating-point and fixed-point (quantized) arithmetic. In this regard, our verification approach was able to verify and produce adversarial examples for 52 test cases spanning image classification and general machine learning applications. For small- to medium-sized ANN, our approach completes most of its verification runs in minutes. Moreover, in contrast to most state-of-the-art methods, our approach is not restricted to specific choices of activation functions or non-quantized representations.

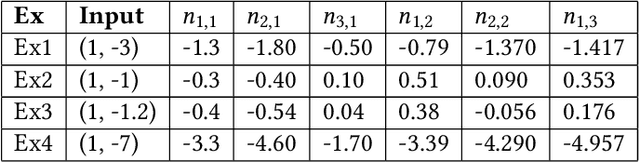

Incremental Verification of Fixed-Point Implementations of Neural Networks

Dec 21, 2020

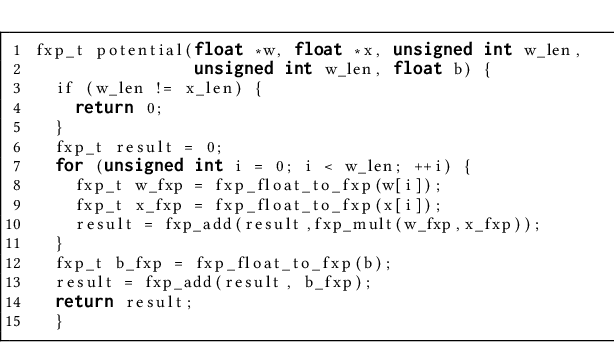

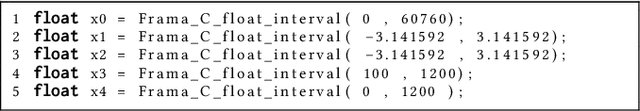

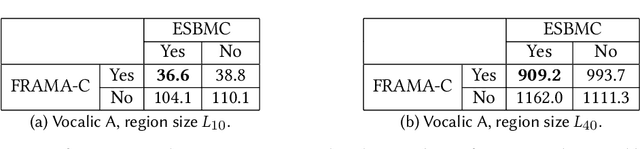

Abstract:Implementations of artificial neural networks (ANNs) might lead to failures, which are hardly predicted in the design phase since ANNs are highly parallel and their parameters are barely interpretable. Here, we develop and evaluate a novel symbolic verification framework using incremental bounded model checking (BMC), satisfiability modulo theories (SMT), and invariant inference, to obtain adversarial cases and validate coverage methods in a multi-layer perceptron (MLP). We exploit incremental BMC based on interval analysis to compute boundaries from a neuron's input. Then, the latter are propagated to effectively find a neuron's output since it is the input of the next one. This paper describes the first bit-precise symbolic verification framework to reason over actual implementations of ANNs in CUDA, based on invariant inference, therefore providing further guarantees about finite-precision arithmetic and its rounding errors, which are routinely ignored in the existing literature. We have implemented the proposed approach on top of the efficient SMT-based bounded model checker (ESBMC), and its experimental results show that it can successfully verify safety properties, in actual implementations of ANNs, and generate real adversarial cases in MLPs. Our approach was able to verify and produce adversarial examples for 85.8% of 21 test cases considering different input images, and 100% of the properties related to covering methods. Although our verification time is higher than existing approaches, our methodology can consider fixed-point implementation aspects that are disregarded by the state-of-the-art verification methodologies.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge