Luca Magri

Efficient Real-Time Adaptation of ROMs for Unsteady Flows Using Data Assimilation

Feb 26, 2026

Abstract: We propose an efficient retraining strategy for a parameterized Reduced Order Model (ROM) that attains accuracy comparable to full retraining while requiring only a fraction of the computational time and relying solely on sparse observations of the full system. The architecture employs an encode-process-decode structure: a Variational Autoencoder (VAE) to perform dimensionality reduction, and a transformer network to evolve the latent states and model the dynamics. The ROM is parameterized by an external control variable, the Reynolds number in the Navier-Stokes setting, with the transformer exploiting attention mechanisms to capture both temporal dependencies and parameter effects. The probabilistic VAE enables stochastic sampling of trajectory ensembles, providing predictive means and uncertainty quantification through the first two moments. After initial training on a limited set of dynamical regimes, the model is adapted to out-of-sample parameter regions using only sparse data. Its probabilistic formulation naturally supports ensemble generation, which we employ within an ensemble Kalman filtering framework to assimilate data and reconstruct full-state trajectories from minimal observations. We further show that, for the dynamical system considered, the dominant source of error in out-of-sample forecasts stems from distortions of the latent manifold rather than changes in the latent dynamics. Consequently, retraining can be limited to the autoencoder, allowing for a lightweight, computationally efficient, real-time adaptation procedure with very sparse fine-tuning data.
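The ensemble Kalman filtering step mentioned in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a linear observation operator `H`, uncorrelated observation noise, and the stochastic (perturbed-observation) variant of the filter. The function name `enkf_analysis` and the toy dimensions are hypothetical.

```python
import numpy as np

def enkf_analysis(ensemble, H, y, obs_noise_std, rng):
    """One stochastic EnKF analysis step (illustrative sketch).

    ensemble: (n_state, n_members) forecast ensemble
    H:        (n_obs, n_state) linear observation operator (assumed)
    y:        (n_obs,) observation vector
    """
    n_obs, n_members = H.shape[0], ensemble.shape[1]
    # Ensemble anomalies around the forecast mean
    A = ensemble - ensemble.mean(axis=1, keepdims=True)
    HA = H @ A
    # Sample covariances; R assumes uncorrelated observation noise
    R = (obs_noise_std ** 2) * np.eye(n_obs)
    P_HT = A @ HA.T / (n_members - 1)
    S = HA @ HA.T / (n_members - 1) + R
    # Kalman gain K = P H^T S^{-1}; S is symmetric, so solve instead of invert
    K = np.linalg.solve(S, P_HT.T).T
    # Each member assimilates its own perturbed copy of the observation
    Y = y[:, None] + obs_noise_std * rng.standard_normal((n_obs, n_members))
    return ensemble + K @ (Y - H @ ensemble)

# Toy usage: 4 state variables, 50 members, only the first variable is observed
rng = np.random.default_rng(0)
prior = rng.standard_normal((4, 50))
H = np.zeros((1, 4))
H[0, 0] = 1.0
y = np.array([2.0])
posterior = enkf_analysis(prior, H, y, obs_noise_std=0.1, rng=rng)
prior_mean = prior.mean(axis=1)
posterior_mean = posterior.mean(axis=1)
```

In the setting described above, the state would be the latent vector sampled from the VAE and the observation operator would involve the decoder, so this linear toy only conveys the structure of the update.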

LCF3D: A Robust and Real-Time Late-Cascade Fusion Framework for 3D Object Detection in Autonomous Driving

Jan 14, 2026

Abstract: Accurately localizing 3D objects like pedestrians, cyclists, and other vehicles is essential in Autonomous Driving. To ensure high detection performance, Autonomous Vehicles complement RGB cameras with LiDAR sensors, but effectively combining these data sources for 3D object detection remains challenging. We propose LCF3D, a novel sensor fusion framework that combines a 2D object detector on RGB images with a 3D object detector on LiDAR point clouds. By leveraging multimodal fusion principles, we compensate for inaccuracies in the LiDAR object detection network. Our solution combines two key principles: (i) late fusion, to reduce LiDAR False Positives by matching LiDAR 3D detections with RGB 2D detections and filtering out unmatched LiDAR detections; and (ii) cascade fusion, to recover missed objects from LiDAR by generating new 3D frustum proposals corresponding to unmatched RGB detections. Experiments show that LCF3D is beneficial for domain generalization, as it successfully handles different sensor configurations between training and testing domains. LCF3D achieves significant improvements over LiDAR-based methods, particularly for challenging categories like pedestrians and cyclists in the KITTI dataset, as well as motorcycles and bicycles in nuScenes. Code can be downloaded from: https://github.com/CarloSgaravatti/LCF3D.
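The late-fusion matching described in this abstract can be sketched in plain Python. This is an illustration under stated assumptions, not the LCF3D code: the LiDAR boxes are assumed to be already projected onto the image as axis-aligned `(x1, y1, x2, y2)` rectangles, matching is greedy on 2D IoU, and the names `iou_2d`, `late_fusion`, and the 0.5 threshold are hypothetical.

```python
def iou_2d(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def late_fusion(lidar_boxes_2d, rgb_boxes, iou_threshold=0.5):
    """Greedy matching of projected LiDAR boxes to RGB boxes.

    Returns the indices of LiDAR detections that found an RGB match (kept)
    and the indices of RGB detections left unmatched, which a cascade stage
    could turn into frustum proposals.
    """
    kept_lidar, matched_rgb = [], set()
    for i, lidar_box in enumerate(lidar_boxes_2d):
        best_j, best_iou = None, iou_threshold
        for j, rgb_box in enumerate(rgb_boxes):
            if j in matched_rgb:
                continue
            value = iou_2d(lidar_box, rgb_box)
            if value >= best_iou:
                best_j, best_iou = j, value
        if best_j is not None:
            kept_lidar.append(i)
            matched_rgb.add(best_j)
    unmatched_rgb = [j for j in range(len(rgb_boxes)) if j not in matched_rgb]
    return kept_lidar, unmatched_rgb

# Toy usage: one LiDAR box overlaps an RGB box, the other does not
lidar = [(0, 0, 10, 10), (50, 50, 60, 60)]
rgb = [(1, 1, 10, 10), (100, 100, 120, 120)]
kept, unmatched = late_fusion(lidar, rgb)
```

Here the second LiDAR detection is discarded as a likely false positive, and the second RGB detection is reported as unmatched.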

One target to align them all: LiDAR, RGB and event cameras extrinsic calibration for Autonomous Driving

Nov 15, 2025

Abstract: We present a novel multi-modal extrinsic calibration framework designed to simultaneously estimate the relative poses between event cameras, LiDARs, and RGB cameras, with particular focus on the challenging event camera calibration. The core of our approach is a novel 3D calibration target, specifically designed and constructed to be concurrently perceived by all three sensing modalities. The target encodes features in planes, ChArUco, and active LED patterns, each tailored to the unique characteristics of LiDARs, RGB cameras, and event cameras respectively. This unique design enables a one-shot, joint extrinsic calibration process, in contrast to existing approaches that typically rely on separate, pairwise calibrations. Our calibration pipeline is designed to accurately calibrate complex vision systems in the context of autonomous driving, where precise multi-sensor alignment is critical. We validate our approach through an extensive experimental evaluation on a custom-built dataset, recorded with an advanced autonomous driving sensor setup, confirming the accuracy and robustness of our method.

MultiLink: Multi-class Structure Recovery via Agglomerative Clustering and Model Selection

May 16, 2025

Abstract: We address the problem of recovering multiple structures of different classes in a dataset contaminated by noise and outliers. In particular, we consider geometric structures defined by a mixture of underlying parametric models (e.g. planes and cylinders, homographies and fundamental matrices), and we tackle the robust fitting problem by preference analysis and clustering. We present a new algorithm, termed MultiLink, that simultaneously deals with multiple classes of models. MultiLink combines on-the-fly model fitting and model selection in a novel linkage scheme that determines whether two clusters are to be merged. The resulting method features many practical advantages with respect to methods based on preference analysis, being faster, less sensitive to the inlier threshold, and able to compensate for limitations deriving from hypothesis sampling. Experiments on several public datasets demonstrate that MultiLink compares favourably with state-of-the-art alternatives, both in multi-class and single-class problems. Code is publicly available for download.

Preference Isolation Forest for Structure-based Anomaly Detection

May 16, 2025

Abstract: We address the problem of detecting anomalies as samples that do not conform to structured patterns represented by low-dimensional manifolds. To this end, we conceive a general anomaly detection framework called Preference Isolation Forest (PIF) that combines the benefits of adaptive isolation-based methods with the flexibility of preference embedding. The key intuition is to embed the data into a high-dimensional preference space by fitting low-dimensional manifolds, and to identify anomalies as isolated points. We propose three isolation approaches to identify anomalies: $i$) Voronoi-iForest, the most general solution, $ii$) RuzHash-iForest, that avoids explicit computation of distances via Locality Sensitive Hashing, and $iii$) Sliding-PIF, that leverages a locality prior to improve efficiency and effectiveness.

Hashing for Structure-based Anomaly Detection

May 16, 2025

Abstract: We focus on the problem of identifying samples in a set that do not conform to structured patterns represented by low-dimensional manifolds. An effective way to solve this problem is to embed data in a high dimensional space, called Preference Space, where anomalies can be identified as the most isolated points. In this work, we employ Locality Sensitive Hashing to avoid explicit computation of distances in high dimensions and thus improve Anomaly Detection efficiency. Specifically, we present an isolation-based anomaly detection technique designed to work in the Preference Space which achieves state-of-the-art performance at a lower computational cost. Code is publicly available at https://github.com/ineveLoppiliF/Hashing-for-Structure-based-Anomaly-Detection.

PIF: Anomaly detection via preference embedding

May 15, 2025

Abstract: We address the problem of detecting anomalies with respect to structured patterns. To this end, we conceive a novel anomaly detection method called PIF that combines the advantages of adaptive isolation methods with the flexibility of preference embedding. Specifically, we propose to embed the data in a high dimensional space where an efficient tree-based method, PI-Forest, is employed to compute an anomaly score. Experiments on synthetic and real datasets demonstrate that PIF compares favorably with state-of-the-art anomaly detection techniques, and confirm that PI-Forest is better at measuring arbitrary distances and isolating points in the preference space.
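The idea of a preference embedding, shared by the three abstracts above, can be illustrated with a small NumPy sketch. Several simplifications are assumptions of this example and not part of the papers: the models are 2D lines fitted through every pair of points (exhaustive enumeration rather than random sampling), preferences are binary with a hypothetical inlier threshold `eps`, and isolation is approximated by the mean Jaccard distance to all other points, a crude stand-in for PI-Forest or the hashing-based forests.

```python
import itertools
import numpy as np

def preference_matrix(points, eps):
    """Binary preferences of each 2D point for each line through a point pair.

    Returns a boolean array of shape (n_points, n_models).
    """
    columns = []
    for i, j in itertools.combinations(range(len(points)), 2):
        p, d = points[i], points[j] - points[i]
        normal = np.array([-d[1], d[0]]) / np.linalg.norm(d)
        residuals = np.abs((points - p) @ normal)  # point-to-line distances
        columns.append(residuals < eps)
    return np.array(columns).T

def mean_jaccard_distance(pref):
    """Mean Jaccard distance from each point to all others (isolation proxy)."""
    P = pref.astype(float)
    inter = P @ P.T
    counts = P.sum(axis=1)
    union = counts[:, None] + counts[None, :] - inter
    dist = 1.0 - inter / union
    n = len(P)
    return (dist.sum(axis=1) - np.diag(dist)) / (n - 1)

# Toy data: 20 points on the line y = x plus one off-structure point (index 20)
t = np.linspace(0.0, 1.0, 20)
points = np.vstack([np.stack([t, t], axis=1), [[0.2, 0.9]]])
pref = preference_matrix(points, eps=0.05)
scores = mean_jaccard_distance(pref)
most_anomalous = int(np.argmax(scores))
```

Points lying on the line share almost all of their preferred models, so they sit close together in the preference space, while the off-structure point shares very few and ends up isolated.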

A Multimodal Hybrid Late-Cascade Fusion Network for Enhanced 3D Object Detection

Apr 25, 2025

Abstract: We present a new way to detect 3D objects from multimodal inputs, leveraging both LiDAR and RGB cameras in a hybrid late-cascade scheme that combines an RGB detection network and a 3D LiDAR detector. We exploit late fusion principles to reduce LiDAR False Positives, matching LiDAR detections with RGB ones by projecting the LiDAR bounding boxes on the image. We rely on cascade fusion principles to recover LiDAR False Negatives, leveraging epipolar constraints and frustums generated by RGB detections of separate views. Our solution can be plugged on top of any underlying single-modal detectors, enabling a flexible training process that can take advantage of pre-trained LiDAR and RGB detectors, or train the two branches separately. We evaluate our results on the KITTI object detection benchmark, showing significant performance improvements, especially for the detection of Pedestrians and Cyclists.

Data-Assimilated Model-Based Reinforcement Learning for Partially Observed Chaotic Flows

Apr 23, 2025

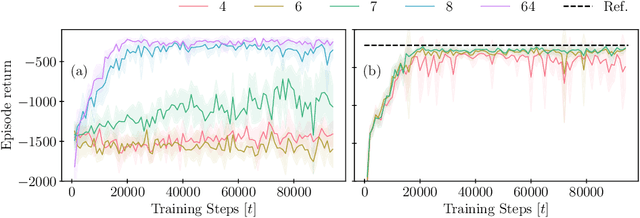

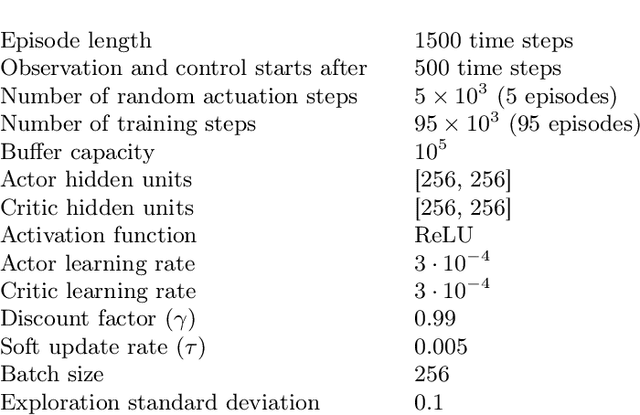

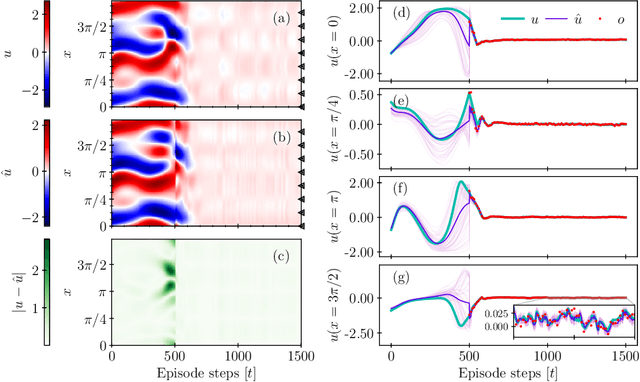

Abstract: The goal of many applications in energy and transport sectors is to control turbulent flows. However, because of chaotic dynamics and high dimensionality, the control of turbulent flows is exceedingly difficult. Model-free reinforcement learning (RL) methods can discover optimal control policies by interacting with the environment, but they require full state information, which is often unavailable in experimental settings. We propose a data-assimilated model-based RL (DA-MBRL) framework for systems with partial observability and noisy measurements. Our framework employs a control-aware Echo State Network for data-driven prediction of the dynamics, and integrates data assimilation with an Ensemble Kalman Filter for real-time state estimation. An off-policy actor-critic algorithm is employed to learn optimal control strategies from state estimates. The framework is tested on the Kuramoto-Sivashinsky equation, demonstrating its effectiveness in stabilizing a spatiotemporally chaotic flow from noisy and partial measurements.

Online model learning with data-assimilated reservoir computers

Apr 23, 2025

Abstract: We propose an online learning framework for forecasting nonlinear spatio-temporal signals (fields). The method integrates (i) dimensionality reduction, here, a simple proper orthogonal decomposition (POD) projection; (ii) a generalized autoregressive model to forecast reduced dynamics, here, a reservoir computer; (iii) online adaptation to update the reservoir computer (the model), here, ensemble sequential data assimilation. We demonstrate the framework on a wake past a cylinder governed by the Navier-Stokes equations, exploring the assimilation of full flow fields (projected onto POD modes) and sparse sensors. Three scenarios are examined: a naïve physical state estimation; a two-fold estimation of physical and reservoir states; and a three-fold estimation that also adjusts the model parameters. The two-fold strategy significantly improves ensemble convergence and reduces reconstruction error compared to the naïve approach. The three-fold approach enables robust online training of partially-trained reservoir computers, overcoming limitations of a priori training. By unifying data-driven reduced order modelling with Bayesian data assimilation, this work opens new opportunities for scalable online model learning for nonlinear time series forecasting.
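Step (i) of this framework, the POD projection, can be sketched with a singular value decomposition in NumPy. This is a generic illustration, not the authors' code: the snapshot matrix is a synthetic rank-2 field rather than a cylinder wake, and the function name `pod_basis` and the array sizes are hypothetical.

```python
import numpy as np

def pod_basis(snapshots, n_modes):
    """POD of a snapshot matrix of shape (n_space, n_time) via thin SVD.

    Returns the temporal mean, the leading spatial modes, and all singular values.
    """
    mean = snapshots.mean(axis=1, keepdims=True)
    U, s, _ = np.linalg.svd(snapshots - mean, full_matrices=False)
    return mean, U[:, :n_modes], s

# Synthetic field built from two spatial structures with time-varying amplitudes
x = np.linspace(0.0, 2.0 * np.pi, 64)
t = np.linspace(0.0, 1.0, 40)
snapshots = (np.outer(np.sin(x), np.cos(2.0 * np.pi * t))
             + 0.5 * np.outer(np.cos(2.0 * x), np.sin(2.0 * np.pi * t)))

mean, modes, singular_values = pod_basis(snapshots, n_modes=2)
coeffs = modes.T @ (snapshots - mean)   # reduced coordinates, shape (2, n_time)
reconstruction = mean + modes @ coeffs  # lift back to the full field
rel_error = np.linalg.norm(reconstruction - snapshots) / np.linalg.norm(snapshots)
```

The rows of `coeffs` are the reduced time series that a forecasting model such as a reservoir computer would be trained on. Because the toy field has only two underlying structures, two modes reconstruct it up to round-off.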
