Louis J. Vaickus

Longevity Associated Geometry Identified in Satellite Images: Sidewalks, Driveways and Hiking Trails

Mar 05, 2020

Abstract:Importance: Following a century of increase, life expectancy in the United States has stagnated and begun to decline in recent decades. Using satellite images and street view images prior work has demonstrated associations of the built environment with income, education, access to care and health factors such as obesity. However, assessment of learned image feature relationships with variation in crude mortality rate across the United States has been lacking. Objective: Investigate prediction of county-level mortality rates in the U.S. using satellite images. Design: Satellite images were extracted with the Google Static Maps application programming interface for 430 counties representing approximately 68.9% of the US population. A convolutional neural network was trained using crude mortality rates for each county in 2015 to predict mortality. Learned image features were interpreted using Shapley Additive Feature Explanations, clustered, and compared to mortality and its associated covariate predictors. Main Outcomes and Measures: County mortality was predicted using satellite images. Results: Predicted mortality from satellite images in a held-out test set of counties was strongly correlated to the true crude mortality rate (Pearson r=0.72). Learned image features were clustered, and we identified 10 clusters that were associated with education, income, geographical region, race and age. Conclusion and Relevance: The application of deep learning techniques to remotely-sensed features of the built environment can serve as a useful predictor of mortality in the United States. Tools that are able to identify image features associated with health-related outcomes can inform targeted public health interventions.

Deep neural networks for automated classification of colorectal polyps on histopathology slides: A multi-institutional evaluation

Sep 27, 2019

Abstract:Histological classification of colorectal polyps plays a critical role in both screening for colorectal cancer and care of affected patients. In this study, we developed a deep neural network for classification of four major colorectal polyp types on digitized histopathology slides and compared its performance to local pathologists' diagnoses at the point-of-care retrieved from corresponding pathology labs. We evaluated the deep neural network on an internal dataset of 157 histopathology slides from the Dartmouth-Hitchcock Medical Center (DHMC) in New Hampshire, as well as an external dataset of 513 histopathology slides from 24 different institutions spanning 13 states in the United States. For the internal evaluation, the deep neural network had a mean accuracy of 93.5% (95% CI 89.6%-97.4%), compared with local pathologists' accuracy of 91.4% (95% CI 87.0%-95.8%). On the external test set, the deep neural network achieved an accuracy of 85.7% (95% CI 82.7%-88.7%), significantly outperforming the accuracy of local pathologists at 80.9% (95% CI 77.5%-84.3%, p<0.05) at the point-of-care. If confirmed in clinical settings, our model could assist pathologists by improving the diagnostic efficiency, reproducibility, and accuracy of colorectal cancer screenings.

Pathologist-level classification of histologic patterns on resected lung adenocarcinoma slides with deep neural networks

Jan 31, 2019

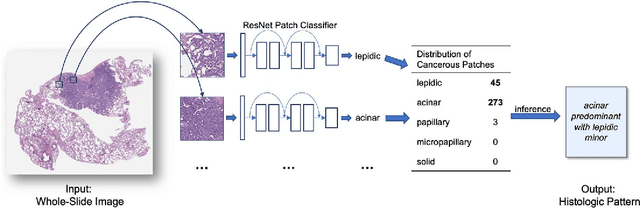

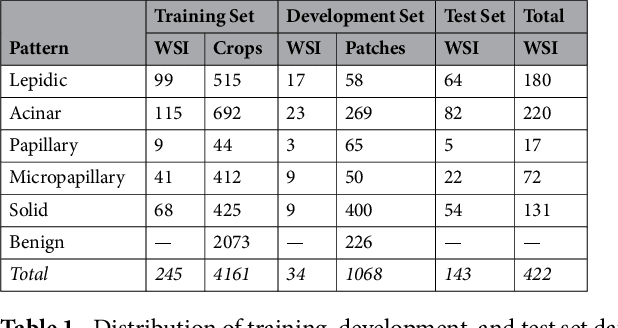

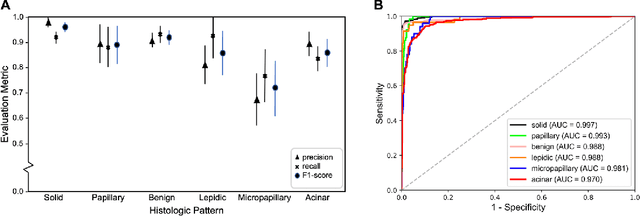

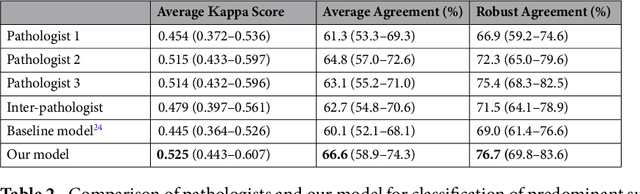

Abstract:Classification of histologic patterns in lung adenocarcinoma is critical for determining tumor grade and treatment for patients. However, this task is often challenging due to the heterogeneous nature of lung adenocarcinoma and the subjective criteria for evaluation. In this study, we propose a deep learning model that automatically classifies the histologic patterns of lung adenocarcinoma on surgical resection slides. Our model uses a convolutional neural network to identify regions of neoplastic cells, then aggregates those classifications to infer predominant and minor histologic patterns for any given whole-slide image. We evaluated our model on an independent set of 143 whole-slide images. It achieved a kappa score of 0.525 and an agreement of 66.6% with three pathologists for classifying the predominant patterns, slightly higher than the inter-pathologist kappa score of 0.485 and agreement of 62.7% on this test set. All evaluation metrics for our model and the three pathologists were within 95% confidence intervals of agreement. If confirmed in clinical practice, our model can assist pathologists in improving classification of lung adenocarcinoma patterns by automatically pre-screening and highlighting cancerous regions prior to review. Our approach can be generalized to any whole-slide image classification task, and code is made publicly available at https://github.com/BMIRDS/deepslide.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge