Loong Fah Cheong

Degeneracy in Self-Calibration Revisited and a Deep Learning Solution for Uncalibrated SLAM

Jul 30, 2019

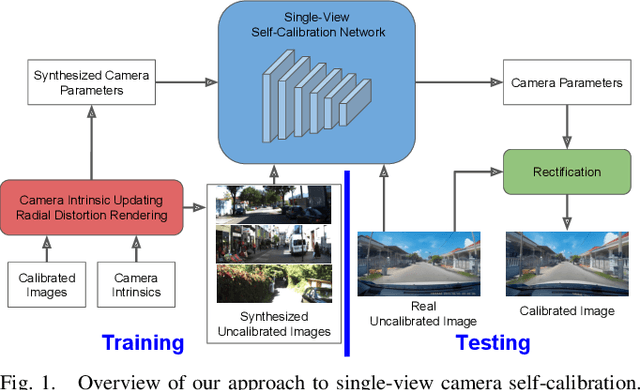

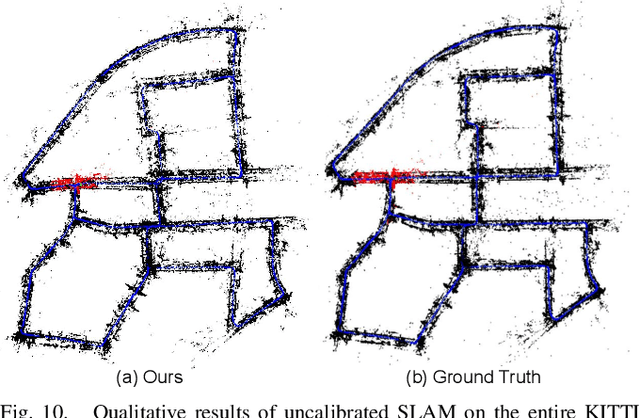

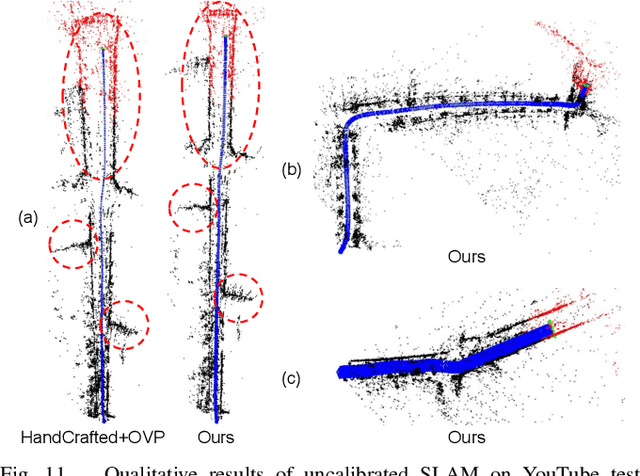

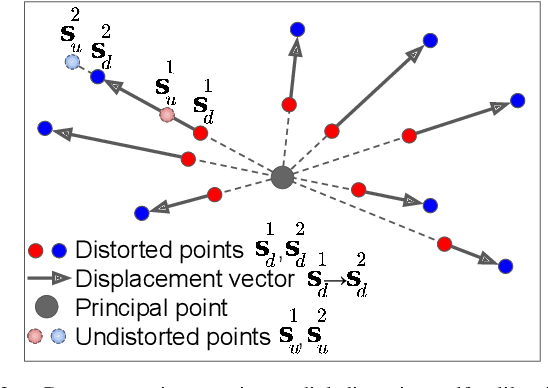

Abstract: Self-calibration of camera intrinsics and radial distortion has a long history of research in the computer vision community. However, it remains rare to see real applications of such techniques to modern Simultaneous Localization And Mapping (SLAM) systems, especially in driving scenarios. In this paper, we revisit the geometric approach to this problem, and provide a theoretical proof that explicitly shows the ambiguity between radial distortion and scene depth when two-view geometry is used to self-calibrate the radial distortion. In view of such geometric degeneracy, we propose a learning approach that trains a convolutional neural network (CNN) on a large amount of synthetic data. We demonstrate the utility of our proposed method by applying it as a checkerboard-free calibration tool for SLAM, achieving comparable or superior performance to previous learning and hand-crafted methods.

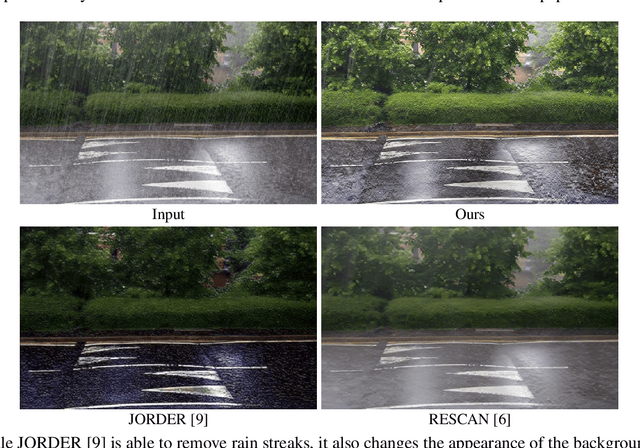

Heavy Rain Image Restoration: Integrating Physics Model and Conditional Adversarial Learning

Apr 10, 2019

Abstract: Most deraining works focus on rain streak removal, but they cannot deal adequately with heavy rain images. In heavy rain, streaks are strongly visible, dense rain accumulation (the rain veiling effect) significantly washes out the image, farther scenes are relatively more blurry, etc. In this paper, we propose a novel method to address these problems. We put forth a 2-stage network: a physics-based backbone followed by a depth-guided GAN refinement. The first stage estimates the rain streaks, the transmission, and the atmospheric light governed by the underlying physics. To tease out these components more reliably, a guided filtering framework is used to decompose the image into its low- and high-frequency components. This filtering is guided by a rain-free residue image: its content is used to set the passbands for the two channels in a spatially-variant manner so that the background details do not get mixed up with the rain streaks. For the second stage, the refinement stage, we put forth a depth-guided GAN to recover the background details that the first stage failed to retrieve, as well as to correct artefacts introduced by that stage. We have evaluated our method against state-of-the-art methods. Extensive experiments show that our method outperforms them on real rain image data, recovering visually clean images with good details.

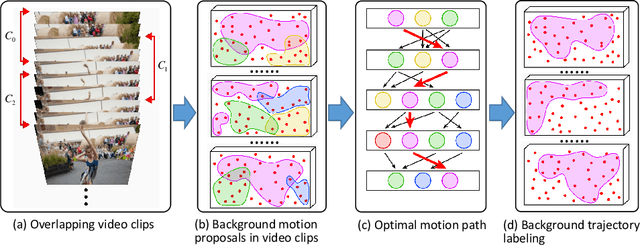

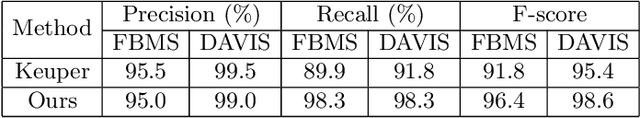

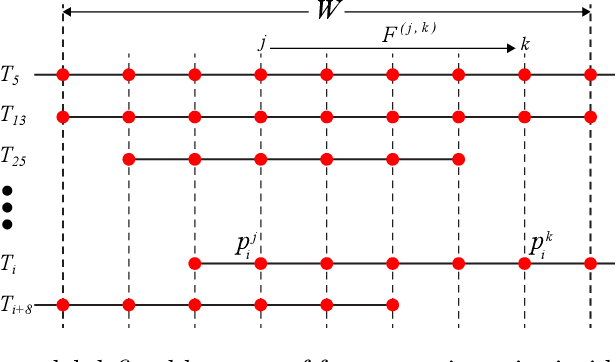

Robust Video Background Identification by Dominant Rigid Motion Estimation

Mar 06, 2019

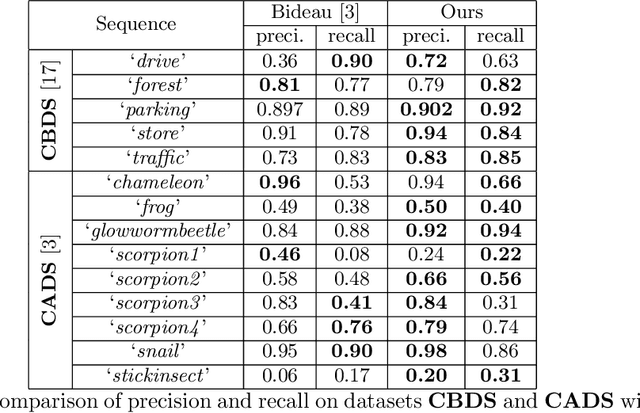

Abstract: The ability to identify the static background in videos captured by a moving camera is an important prerequisite for many video applications (e.g. video stabilization, stitching, and segmentation). Existing methods usually face difficulties when the foreground objects occupy a larger area than the background in the image. Many methods also cannot scale up to handle densely sampled feature trajectories. In this paper, we propose an efficient local-to-global method to identify the background, based on the assumption that as long as there is sufficient camera motion, the cumulative background features will have the largest number of trajectories. Our motion model at the two-frame level is based on epipolar geometry, so there is no over-segmentation problem, another issue that plagues the 2D motion segmentation approach. Foreground objects erroneously labelled due to intermittent motions are also taken care of by checking their global consistency with the final estimated background motion. Lastly, by virtue of its efficiency, our method can deal with densely sampled trajectories. It outperforms several state-of-the-art motion segmentation methods on public datasets, both quantitatively and qualitatively.
