Liwei Shen

Enhancing Robot Program Synthesis Through Environmental Context

Dec 13, 2023

Abstract:Program synthesis aims to automatically generate an executable program that conforms to the given specification. Recent advancements have demonstrated that deep neural methodologies and large-scale pretrained language models are highly proficient in capturing program semantics. For robot programming, prior works have facilitated program synthesis by incorporating global environments. However, the assumption of acquiring a comprehensive understanding of the entire environment is often excessively challenging to achieve. In this work, we present a framework that learns to synthesize a program by rectifying potentially erroneous code segments, with the aid of partially observed environments. To tackle the issue of inadequate attention to partial observations, we propose to first learn an environment embedding space that can implicitly evaluate the impacts of each program token based on the precondition. Furthermore, by employing a graph structure, the model can aggregate both environmental and syntactic information flow and furnish smooth program rectification guidance. Extensive experimental evaluations and ablation studies on the partially observed VizDoom domain authenticate that our method offers superior generalization capability across various tasks and greater robustness when encountering noises.

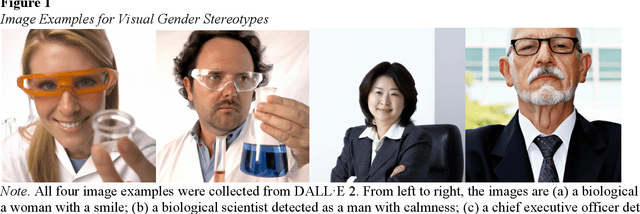

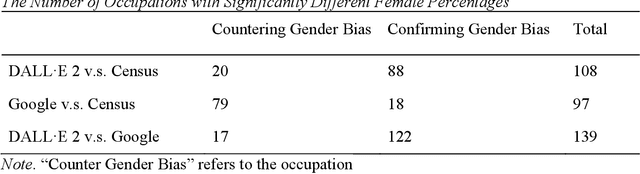

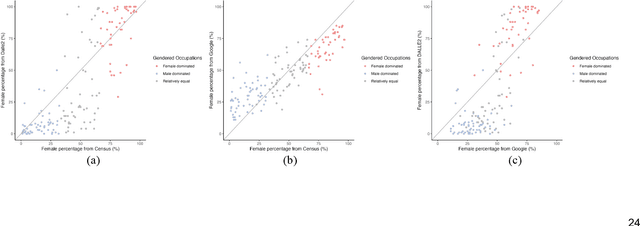

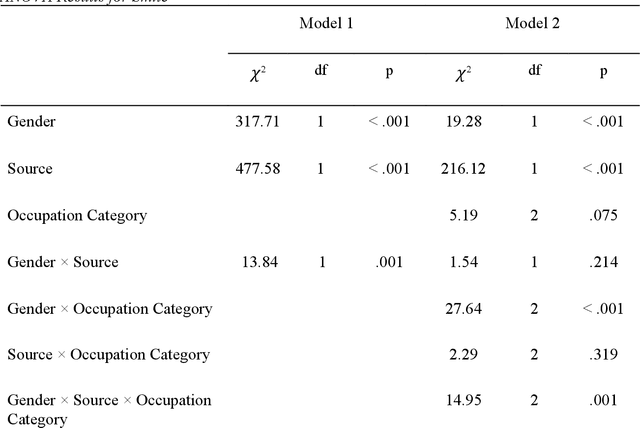

Smiling Women Pitching Down: Auditing Representational and Presentational Gender Biases in Image Generative AI

May 17, 2023

Abstract:Generative AI models like DALL-E 2 can interpret textual prompts and generate high-quality images exhibiting human creativity. Though public enthusiasm is booming, systematic auditing of potential gender biases in AI-generated images remains scarce. We addressed this gap by examining the prevalence of two occupational gender biases (representational and presentational biases) in 15,300 DALL-E 2 images spanning 153 occupations, and assessed potential bias amplification by benchmarking against 2021 census labor statistics and Google Images. Our findings reveal that DALL-E 2 underrepresents women in male-dominated fields while overrepresenting them in female-dominated occupations. Additionally, DALL-E 2 images tend to depict more women than men with smiling faces and downward-pitching heads, particularly in female-dominated (vs. male-dominated) occupations. Our computational algorithm auditing study demonstrates more pronounced representational and presentational biases in DALL-E 2 compared to Google Images and calls for feminist interventions to prevent such bias-laden AI-generated images to feedback into the media ecology.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge