Lisa Zahray

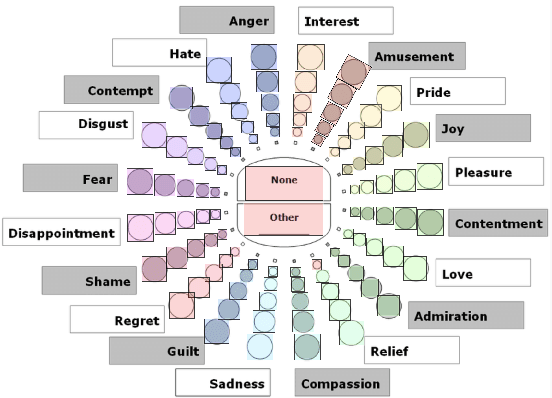

Emotional Musical Prosody: Validated Vocal Dataset for Human Robot Interaction

Oct 09, 2020

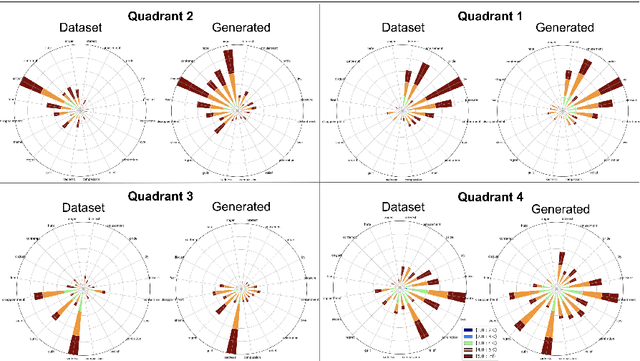

Abstract:Human collaboration with robotics is dependant on the development of a relationship between human and robot, without which performance and utilization can decrease. Emotion and personality conveyance has been shown to enhance robotic collaborations, with improved human-robot relationships and increased trust. One under-explored way for an artificial agent to convey emotions is through non-linguistic musical prosody. In this work we present a new 4.2 hour dataset of improvised emotional vocal phrases based on the Geneva Emotion Wheel. This dataset has been validated through extensive listening tests and shows promising preliminary results for use in generative systems.

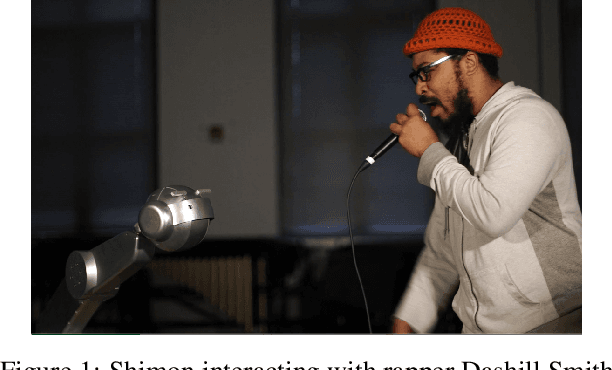

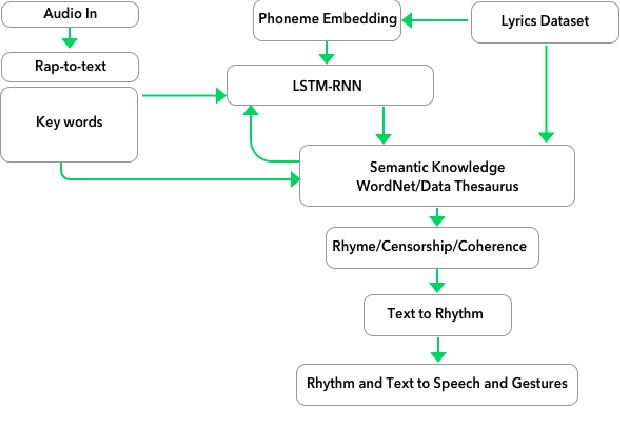

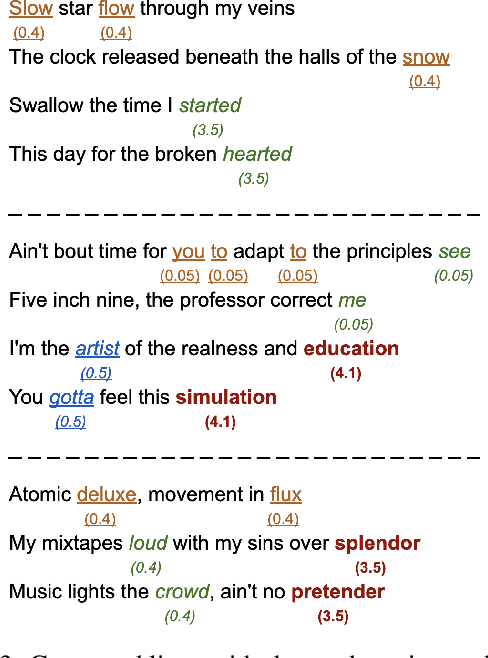

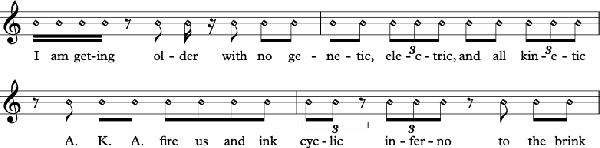

Shimon the Rapper: A Real-Time System for Human-Robot Interactive Rap Battles

Sep 19, 2020

Abstract:We present a system for real-time lyrical improvisation between a human and a robot in the style of hip hop. Our system takes vocal input from a human rapper, analyzes the semantic meaning, and generates a response that is rapped back by a robot over a musical groove. Previous work with real-time interactive music systems has largely focused on instrumental output, and vocal interactions with robots have been explored, but not in a musical context. Our generative system includes custom methods for censorship, voice, rhythm, rhyming and a novel deep learning pipeline based on phoneme embeddings. The rap performances are accompanied by synchronized robotic gestures and mouth movements. Key technical challenges that were overcome in the system are developing rhymes, performing with low-latency and dataset censorship. We evaluated several aspects of the system through a survey of videos and sample text output. Analysis of comments showed that the overall perception of the system was positive. The model trained on our hip hop dataset was rated significantly higher than our metal dataset in coherence, rhyme quality, and enjoyment. Participants preferred outputs generated by a given input phrase over outputs generated from unknown keywords, indicating that the system successfully relates its output to its input.

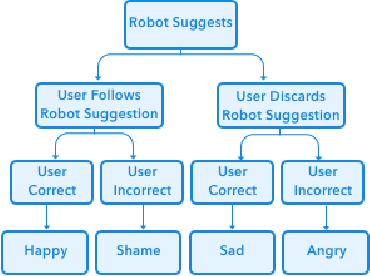

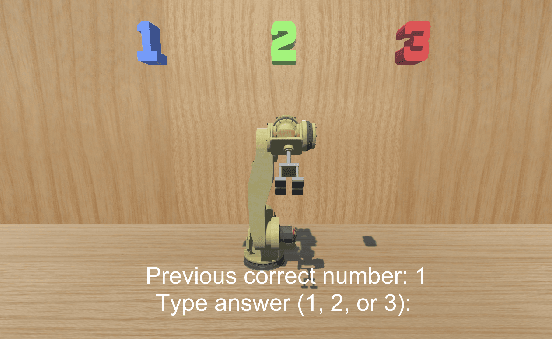

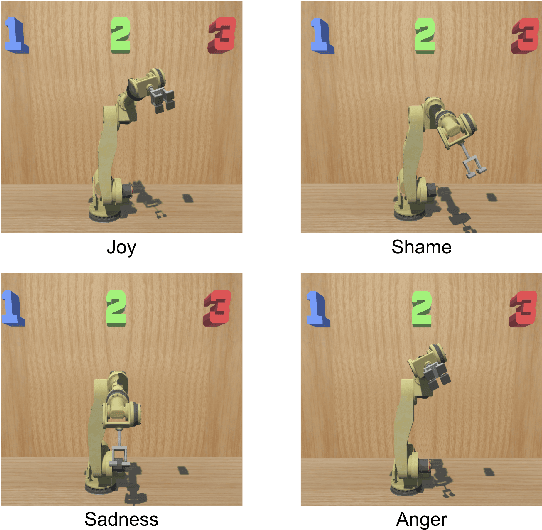

Emotional Musical Prosody for the Enhancement of Trust in Robotic Arm Communication

Sep 18, 2020

Abstract:As robotic arms become prevalent in industry it is crucial to improve levels of trust from human collaborators. Low levels of trust in human-robot interaction can reduce overall performance and prevent full robot utilization. We investigated the potential benefits of using emotional musical prosody to allow the robot to respond emotionally to the user's actions. We tested participants' responses to interacting with a virtual robot arm that acted as a decision agent, helping participants select the next number in a sequence. We compared results from three versions of the application in a between-group experiment, where the robot had different emotional reactions to the user's input depending on whether the user agreed with the robot and whether the user's choice was correct. In all versions, the robot reacted with emotional gestures. One version used prosody-based emotional audio phrases selected from our dataset of singer improvisations, the second version used audio consisting of a single pitch randomly assigned to each emotion, and the final version used no audio, only gestures. Our results showed no significant difference for the percentage of times users from each group agreed with the robot, and no difference between user's agreement with the robot after it made a mistake. However, participants also took a trust survey following the interaction, and we found that the reported trust ratings of the musical prosody group were significantly higher than both the single-pitch and no audio groups.

Mechatronics-Driven Musical Expressivity for Robotic Percussionists

Jul 29, 2020

Abstract:Musical expressivity is an important aspect of musical performance for humans as well as robotic musicians. We present a novel mechatronics-driven implementation of Brushless Direct Current (BLDC) motors in a robotic marimba player, named Shimon, designed to improve speed, dynamic range (loudness), and ultimately perceived musical expressivity in comparison to state-of-the-art robotic percussionist actuators. In an objective test of dynamic range, we find that our implementation provides wider and more consistent dynamic range response in comparison with solenoid-based robotic percussionists. Our implementation also outperforms both solenoid and human marimba players in striking speed. In a subjective listening test measuring musical expressivity, our system performs significantly better than a solenoid-based system and is statistically indistinguishable from human performers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge