Emotional Musical Prosody: Validated Vocal Dataset for Human Robot Interaction

Oct 09, 2020

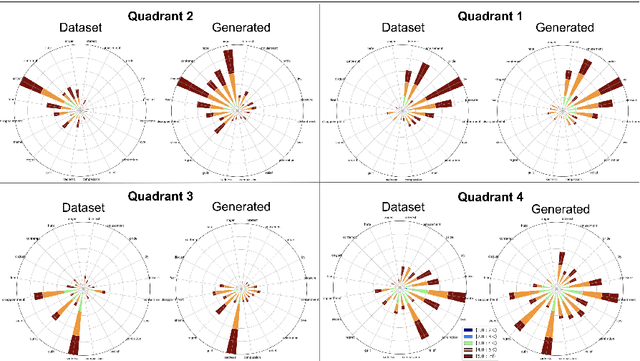

Human collaboration with robots depends on the development of a relationship between human and robot, without which performance and utilization can decrease. Conveying emotion and personality has been shown to enhance robotic collaboration, improving human-robot relationships and increasing trust. One under-explored way for an artificial agent to convey emotion is through non-linguistic musical prosody. In this work, we present a new 4.2-hour dataset of improvised emotional vocal phrases based on the Geneva Emotion Wheel. The dataset has been validated through extensive listening tests and shows promising preliminary results for use in generative systems.

* 2020 Joint Conference on AI Music Creativity (CSMC + MuMe 2020)
