Lior Rokach

Dark LLMs: The Growing Threat of Unaligned AI Models

May 15, 2025

Abstract:Large Language Models (LLMs) rapidly reshape modern life, advancing fields from healthcare to education and beyond. However, alongside their remarkable capabilities lies a significant threat: the susceptibility of these models to jailbreaking. The fundamental vulnerability of LLMs to jailbreak attacks stems from the very data they learn from. As long as this training data includes unfiltered, problematic, or 'dark' content, the models can inherently learn undesirable patterns or weaknesses that allow users to circumvent their intended safety controls. Our research identifies the growing threat posed by dark LLMs: models deliberately designed without ethical guardrails or modified through jailbreak techniques. In our research, we uncovered a universal jailbreak attack that effectively compromises multiple state-of-the-art models, enabling them to answer almost any question and produce harmful outputs upon request. The main idea of our attack was published online over seven months ago. However, many of the tested LLMs were still vulnerable to this attack. Despite our responsible disclosure efforts, responses from major LLM providers were often inadequate, highlighting a concerning gap in industry practices regarding AI safety. As model training becomes more accessible and cheaper, and as open-source LLMs proliferate, the risk of widespread misuse escalates. Without decisive intervention, LLMs may continue democratizing access to dangerous knowledge, posing greater risks than anticipated.

X-Cross: Dynamic Integration of Language Models for Cross-Domain Sequential Recommendation

Apr 29, 2025

Abstract:As new products are emerging daily, recommendation systems are required to quickly adapt to possible new domains without needing extensive retraining. This work presents "X-Cross" -- a novel cross-domain sequential-recommendation model that recommends products in new domains by integrating several domain-specific language models; each model is fine-tuned with low-rank adapters (LoRA). Given a recommendation prompt, operating layer by layer, X-Cross dynamically refines the representation of each source language model by integrating knowledge from all other models. These refined representations are propagated from one layer to the next, leveraging the activations from each domain adapter to ensure domain-specific nuances are preserved while enabling adaptability across domains. Using Amazon datasets for sequential recommendation, X-Cross achieves performance comparable to a model that is fine-tuned with LoRA, while using only 25% of the additional parameters. In cross-domain tasks, such as adapting from the Toys domain to Tools, Electronics, or Sports, X-Cross demonstrates robust performance, while requiring about 50%-75% less fine-tuning data than LoRA to make fine-tuning effective. Furthermore, X-Cross achieves significant improvement in accuracy over alternative cross-domain baselines. Overall, X-Cross enables scalable and adaptive cross-domain recommendations, reducing computational overhead and providing an efficient solution for data-constrained environments.

Forget What You Know about LLMs Evaluations - LLMs are Like a Chameleon

Feb 11, 2025

Abstract:Large language models (LLMs) often appear to excel on public benchmarks, but these high scores may mask an overreliance on dataset-specific surface cues rather than true language understanding. We introduce the Chameleon Benchmark Overfit Detector (C-BOD), a meta-evaluation framework that systematically distorts benchmark prompts via a parametric transformation and detects overfitting of LLMs. By rephrasing inputs while preserving their semantic content and labels, C-BOD exposes whether a model's performance is driven by memorized patterns. Evaluated on the MMLU benchmark using 26 leading LLMs, our method reveals an average performance degradation of 2.15% under modest perturbations, with 20 out of 26 models exhibiting statistically significant differences. Notably, models with higher baseline accuracy exhibit larger performance differences under perturbation, and larger LLMs tend to be more sensitive to rephrasings, indicating that both groups may overrely on fixed prompt patterns. In contrast, the Llama family and models with lower baseline accuracy show insignificant degradation, suggesting reduced dependency on superficial cues. Moreover, C-BOD's dataset- and model-agnostic design allows easy integration into training pipelines to promote more robust language understanding. Our findings challenge the community to look beyond leaderboard scores and prioritize resilience and generalization in LLM evaluation.
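To make the "statistically significant differences" idea concrete, here is a minimal sketch of a paired significance check between a model's answers on original and rephrased prompts. The abstract does not name the test it uses; this sketch assumes an exact McNemar test, a common choice for paired binary outcomes. The function name `mcnemar_exact` and the toy correctness flags are hypothetical.

```python
from math import comb


def mcnemar_exact(orig_correct, pert_correct):
    """Two-sided exact McNemar test on paired per-question correctness flags.

    Returns (b, c, p_value), where b counts questions answered correctly only
    on the original prompt and c counts those correct only on the rephrasing.
    """
    if len(orig_correct) != len(pert_correct):
        raise ValueError("paired inputs must have equal length")
    b = sum(1 for o, p in zip(orig_correct, pert_correct) if o and not p)
    c = sum(1 for o, p in zip(orig_correct, pert_correct) if p and not o)
    n = b + c
    if n == 0:
        return b, c, 1.0  # no discordant pairs, so no evidence of a change
    k = min(b, c)
    # Under the null hypothesis each discordant pair is a fair coin flip,
    # so the smaller count follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


# Made-up toy data (assumption): 1 = correct, 0 = incorrect, one flag per question.
original = [1] * 10 + [0] * 2
rephrased = [1] * 2 + [0] * 8 + [1, 0]

b, c, p_value = mcnemar_exact(original, rephrased)
print(f"lost after rephrasing: {b}, gained: {c}, p = {p_value:.4f}")
```

Only the discordant pairs matter to this test: questions that are right or wrong under both phrasings carry no information about sensitivity to rewording.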

DFPE: A Diverse Fingerprint Ensemble for Enhancing LLM Performance

Jan 29, 2025

Abstract:Large Language Models (LLMs) have shown remarkable capabilities across various natural language processing tasks but often struggle to excel uniformly in diverse or complex domains. We propose a novel ensemble method - Diverse Fingerprint Ensemble (DFPE), which leverages the complementary strengths of multiple LLMs to achieve more robust performance. Our approach involves: (1) clustering models based on response "fingerprint" patterns, (2) applying a quantile-based filtering mechanism to remove underperforming models at a per-subject level, and (3) assigning adaptive weights to remaining models based on their subject-wise validation accuracy. In experiments on the Massive Multitask Language Understanding (MMLU) benchmark, DFPE outperforms the best single model by 3% in overall accuracy and 5% in discipline-level accuracy. This method increases the robustness and generalization of LLMs and underscores how model selection, diversity preservation, and performance-driven weighting can effectively address challenging, multi-faceted language understanding tasks.
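Steps (2) and (3) of the approach can be sketched as follows for a single subject. This is an illustrative sketch, not the DFPE implementation: the clustering step (1) is omitted, and the quantile level, the accuracy-proportional weighting rule, the model names, and the validation accuracies are all assumptions.

```python
from collections import defaultdict


def quantile(values, q):
    """Linear-interpolation quantile of a non-empty list, with q in [0, 1]."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def select_and_weight(val_acc, q=0.5):
    """Drop models below the q-quantile of validation accuracy for one subject,
    then weight the survivors in proportion to their accuracy."""
    cutoff = quantile(list(val_acc.values()), q)
    kept = {m: a for m, a in val_acc.items() if a >= cutoff}
    total = sum(kept.values())
    if total == 0:  # degenerate case: every surviving model scored zero
        return {m: 1.0 / len(kept) for m in kept}
    return {m: a / total for m, a in kept.items()}


def weighted_vote(answers, weights):
    """Return the answer option with the largest total weight."""
    scores = defaultdict(float)
    for model, w in weights.items():
        scores[answers[model]] += w
    return max(scores, key=scores.get)


# Hypothetical per-subject validation accuracies and answers to one question.
val_acc = {"model_a": 0.80, "model_b": 0.60, "model_c": 0.40, "model_d": 0.70}
answers = {"model_a": "B", "model_b": "C", "model_c": "C", "model_d": "C"}

weights = select_and_weight(val_acc, q=0.5)
prediction = weighted_vote(answers, weights)
print(weights, prediction)
```

In this toy case an unweighted majority vote over all four models would pick "C", while filtering and weighting leave only `model_a` and `model_d` and the stronger of the two decides the answer.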

FairTTTS: A Tree Test Time Simulation Method for Fairness-Aware Classification

Jan 14, 2025

Abstract:Algorithmic decision-making has become deeply ingrained in many domains, yet biases in machine learning models can still produce discriminatory outcomes, often harming unprivileged groups. Achieving fair classification is inherently challenging, requiring a careful balance between predictive performance and ethical considerations. We present FairTTTS, a novel post-processing bias mitigation method inspired by the Tree Test Time Simulation (TTTS) method. Originally developed to enhance accuracy and robustness against adversarial inputs through probabilistic decision-path adjustments, TTTS serves as the foundation for FairTTTS. By building on this accuracy-enhancing technique, FairTTTS mitigates bias and improves predictive performance. FairTTTS uses a distance-based heuristic to adjust decisions at protected attribute nodes, ensuring fairness for unprivileged samples. This fairness-oriented adjustment occurs as a post-processing step, allowing FairTTTS to be applied to pre-trained models, diverse datasets, and various fairness metrics without retraining. Extensive evaluation on seven benchmark datasets shows that FairTTTS outperforms traditional methods in fairness improvement, achieving a 20.96% average increase over the baseline compared to 18.78% for related work, and further enhances accuracy by 0.55%. In contrast, competing methods typically reduce accuracy by 0.42%. These results confirm that FairTTTS effectively promotes more equitable decision-making while simultaneously improving predictive performance.

BiasGuard: Guardrailing Fairness in Machine Learning Production Systems

Jan 07, 2025

Abstract:As machine learning (ML) systems increasingly impact critical sectors such as hiring, financial risk assessments, and criminal justice, the imperative to ensure fairness has intensified due to potential negative implications. While much ML fairness research has focused on enhancing training data and processes, addressing the outputs of already deployed systems has received less attention. This paper introduces 'BiasGuard', a novel approach designed to act as a fairness guardrail in production ML systems. BiasGuard leverages Test-Time Augmentation (TTA) powered by Conditional Generative Adversarial Network (CTGAN), a cutting-edge generative AI model, to synthesize data samples conditioned on inverted protected attribute values, thereby promoting equitable outcomes across diverse groups. This method aims to provide equal opportunities for both privileged and unprivileged groups while significantly enhancing the fairness metrics of deployed systems without the need for retraining. Our comprehensive experimental analysis across diverse datasets reveals that BiasGuard enhances fairness by 31% while only reducing accuracy by 0.09% compared to non-mitigated benchmarks. Additionally, BiasGuard outperforms existing post-processing methods in improving fairness, positioning it as an effective tool to safeguard against biases when retraining the model is impractical.
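The test-time augmentation idea can be illustrated with a deliberately simplified sketch. Note the substitution: instead of a CTGAN that synthesizes samples conditioned on the inverted protected attribute, this sketch only flips a binary protected attribute on a copy of the input and averages the two scores. The function `tta_fair_predict`, the toy scorer `biased_model`, and the feature names are hypothetical.

```python
def tta_fair_predict(predict_proba, sample, protected_key, threshold=0.5):
    """Average a model's score over a sample and its protected-attribute-flipped copy.

    Assumes the protected attribute is binary and encoded as 0 or 1.
    """
    flipped = dict(sample)
    flipped[protected_key] = 1 - sample[protected_key]
    score = 0.5 * (predict_proba(sample) + predict_proba(flipped))
    return score, int(score >= threshold)


def biased_model(s):
    """Hypothetical deployed scorer that rewards membership in group 1."""
    return min(1.0, 0.1 * s["years_experience"] + 0.3 * s["group"])


# Two applicants who differ only in the protected attribute.
applicant_a = {"years_experience": 3, "group": 0}
applicant_b = {"years_experience": 3, "group": 1}

raw_a, raw_b = biased_model(applicant_a), biased_model(applicant_b)
score_a, decision_a = tta_fair_predict(biased_model, applicant_a, "group")
score_b, decision_b = tta_fair_predict(biased_model, applicant_b, "group")
print(raw_a, raw_b, score_a, score_b)
```

The deployed model is never retrained here; only its outputs are post-processed, which is the property the abstract emphasizes. A generative model would additionally adjust features correlated with the protected attribute, which a plain flip cannot do.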

A Novel Method for News Article Event-Based Embedding

May 20, 2024

Abstract:Embedding news articles is a crucial tool for multiple fields, such as media bias detection, identifying fake news, and news recommendations. However, existing news embedding methods are not optimized for capturing the latent context of news events. In many cases, news embedding methods rely on full-textual information and neglect the importance of time-relevant embedding generation. Here, we aim to address these shortcomings by presenting a novel lightweight method that optimizes news embedding generation by focusing on the entities and themes mentioned in the articles and their historical connections to specific events. We suggest a method composed of three stages. First, we process and extract the events, entities, and themes for the given news articles. Second, we generate periodic time embeddings for themes and entities by training timely separated GloVe models on current and historical data. Lastly, we concatenate the news embeddings generated by two distinct approaches: Smooth Inverse Frequency (SIF) for article-level vectors and Siamese Neural Networks for embeddings with nuanced event-related information. To test and evaluate our method, we leveraged over 850,000 news articles and 1,000,000 events from the GDELT project. For validation purposes, we conducted a comparative analysis of different news embedding generation methods, applying them twice to a shared event detection task - first on articles published within the same day and subsequently on those published within the same month. Our experiments show that our method significantly improves the Precision-Recall (PR) AUC across all tasks and datasets. Specifically, we observed an average PR AUC improvement of 2.15% and 2.57% compared to SIF, as well as 2.57% and 2.43% compared to the semi-supervised approach for daily and monthly shared event detection tasks, respectively.
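As a rough illustration of the SIF component mentioned above, the sketch below computes the frequency-based weights a / (a + p(w)) and the weighted average of word vectors for one article. It is only the weighting step of standard SIF: the removal of the common principal component is omitted, and the toy corpus, the 2-dimensional vectors, and the smoothing constant `a` are assumptions rather than values from the paper.

```python
from collections import Counter


def sif_weights(corpus_tokens, a=1e-3):
    """Map each token to a / (a + p(token)), where p is its corpus frequency."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    return {tok: a / (a + n / total) for tok, n in counts.items()}


def sif_embed(tokens, vectors, weights):
    """SIF-weighted average of the word vectors of the known tokens."""
    dim = len(next(iter(vectors.values())))
    known = [t for t in tokens if t in vectors and t in weights]
    out = [0.0] * dim
    if not known:
        return out  # nothing to average, return the zero vector
    for t in known:
        for i, x in enumerate(vectors[t]):
            out[i] += weights[t] * x
    return [x / len(known) for x in out]


# Toy corpus (assumption): a frequent function word and two rare entity-like words.
corpus = ["the"] * 8 + ["summit", "ceasefire"]
vectors = {"the": [1.0, 0.0], "summit": [0.0, 1.0], "ceasefire": [0.5, 0.5]}

weights = sif_weights(corpus)
article_vector = sif_embed(["the", "summit"], vectors, weights)
print(weights, article_vector)
```

Frequent tokens receive small weights, so the article vector is dominated by rarer, more informative words such as entities and themes.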

The Branch Not Taken: Predicting Branching in Online Conversations

Apr 21, 2024

Abstract:Multi-participant discussions tend to unfold in a tree structure rather than a chain structure. Branching may occur for multiple reasons -- from the asynchronous nature of online platforms to a conscious decision by an interlocutor to disengage with part of the conversation. Predicting branching and understanding the reasons for creating new branches is important for many downstream tasks such as summarization and thread disentanglement and may help develop online spaces that encourage users to engage in online discussions in more meaningful ways. In this work, we define the novel task of branch prediction and propose GLOBS (Global Branching Score) -- a deep neural network model for predicting branching. GLOBS is evaluated on three large discussion forums from Reddit, achieving significant improvements over an array of competitive baselines and demonstrating better transferability. We affirm that structural, temporal, and linguistic features contribute to GLOBS's success and find that branching is associated with a greater number of conversation participants and tends to occur in earlier levels of the conversation tree. We publicly release GLOBS and our implementation of all baseline models to allow reproducibility and promote further research on this important task.

BagStacking: An Integrated Ensemble Learning Approach for Freezing of Gait Detection in Parkinson's Disease

Feb 24, 2024

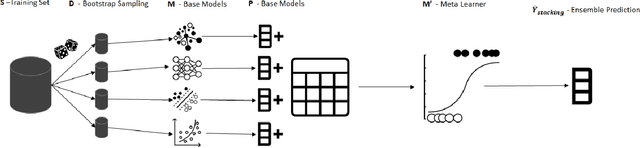

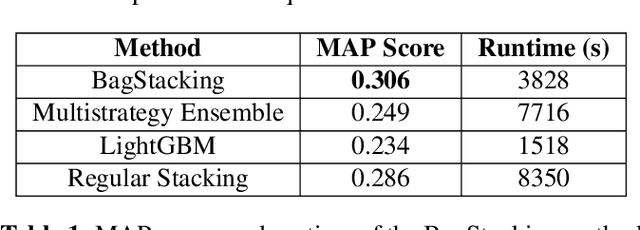

Abstract:This paper introduces BagStacking, a novel ensemble learning method designed to enhance the detection of Freezing of Gait (FOG) in Parkinson's Disease (PD) by using a lower-back sensor to track acceleration. Building on the principles of bagging and stacking, BagStacking aims to achieve the variance reduction benefit of bagging's bootstrap sampling while also learning sophisticated blending through stacking. The method involves training a set of base models on bootstrap samples from the training data, followed by a meta-learner trained on the base model outputs and true labels to find an optimal aggregation scheme. The experimental evaluation demonstrates significant improvements over other state-of-the-art machine learning methods on the validation set. Specifically, BagStacking achieved a MAP score of 0.306, outperforming LightGBM (0.234) and classic Stacking (0.286). Additionally, the run-time of BagStacking was measured at 3828 seconds, illustrating an efficient approach compared to Regular Stacking's 8350 seconds. BagStacking presents a promising direction for handling the inherent variability in FOG detection data, offering a robust and scalable solution to improve patient care in PD.
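The two-stage procedure described above (base models fit on bootstrap samples, then a meta-learner fit on their outputs and the true labels) can be sketched in plain Python. This is an illustrative toy, not the authors' implementation: the base learners are decision stumps, the meta-learner is a small gradient-descent logistic regression, and the 1-D dataset, hyperparameters, and function names are all assumptions.

```python
import math
import random


def fit_stump(X, y):
    """Pick the (feature, threshold, sign) rule with the best training accuracy."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for sign in (1, -1):
                acc = sum((1 if sign * (row[f] - t) > 0 else 0) == label
                          for row, label in zip(X, y))
                if best is None or acc > best[0]:
                    best = (acc, f, t, sign)
    return best[1:]


def stump_predict(stump, row):
    f, t, sign = stump
    return 1 if sign * (row[f] - t) > 0 else 0


def fit_logistic(Z, y, lr=0.5, epochs=500):
    """Batch gradient-descent logistic regression, used here as the meta-learner."""
    w, b, n = [0.0] * len(Z[0]), 0.0, len(Z)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for z, label in zip(Z, y):
            logit = sum(wi * zi for wi, zi in zip(w, z)) + b
            err = 1.0 / (1.0 + math.exp(-logit)) - label
            gw = [g + err * zi for g, zi in zip(gw, z)]
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def fit_bagstacking(X, y, n_models=5, seed=0):
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_models):
        # Bagging stage: each base model sees its own bootstrap sample.
        idx = [rng.randrange(len(X)) for _ in X]
        stumps.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    # Stacking stage: the meta-learner blends base outputs against true labels.
    Z = [[stump_predict(s, row) for s in stumps] for row in X]
    return stumps, fit_logistic(Z, y)


def predict(model, row):
    stumps, (w, b) = model
    z = [stump_predict(s, row) for s in stumps]
    return 1 if sum(wi * zi for wi, zi in zip(w, z)) + b > 0 else 0


# Toy, linearly separable 1-D data (assumption): label 1 when x > 0.5.
X = [[i / 20] for i in range(1, 21)]
y = [0 if i <= 10 else 1 for i in range(1, 21)]

model = fit_bagstacking(X, y)
low, high = predict(model, [0.0]), predict(model, [1.2])
print(low, high)
```

The design point worth noting is that the meta-learner replaces the fixed averaging or majority vote of ordinary bagging with a learned aggregation, while the bootstrap sampling keeps the base models diverse.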

AMFPMC -- An improved method of detecting multiple types of drug-drug interactions using only known drug-drug interactions

Feb 07, 2023

Abstract:Adverse drug interactions are largely preventable causes of medical accidents, which frequently result in physician and emergency room encounters. The detection of drug interactions in a lab, prior to a drug's use in medical practice, is essential; however, it is costly and time-consuming. Machine learning techniques can provide an efficient and accurate means of predicting possible drug-drug interactions and combat the growing problem of adverse drug interactions. Most existing models for predicting interactions rely on the chemical properties of drugs. While such models can be accurate, the required properties are not always available.
