Bracha Shapira

EncodeRec: An Embedding Backbone for Recommendation Systems

Jan 15, 2026

Abstract:Recent recommender systems increasingly leverage embeddings from large pre-trained language models (PLMs). However, such embeddings exhibit two key limitations: (1) PLMs are not explicitly optimized to produce structured and discriminative embedding spaces, and (2) their representations remain overly generic, often failing to capture the domain-specific semantics crucial for recommendation tasks. We present EncodeRec, an approach designed to align textual representations with recommendation objectives while learning compact, informative embeddings directly from item descriptions. EncodeRec keeps the language model parameters frozen during recommender system training, making it computationally efficient without sacrificing semantic fidelity. Experiments across core recommendation benchmarks demonstrate its effectiveness both as a backbone for sequential recommendation models and for semantic ID tokenization, showing substantial gains over PLM-based and embedding model baselines. These results underscore the pivotal role of embedding adaptation in bridging the gap between general-purpose language models and practical recommender systems.

Calibration and Discrimination Optimization Using Clusters of Learned Representation

Oct 22, 2025

Abstract:Machine learning models are essential for decision-making and risk assessment, requiring highly reliable predictions in terms of both discrimination and calibration. While calibration often receives less attention, it is crucial for critical decisions, such as those in clinical predictions. We introduce a novel calibration pipeline that leverages an ensemble of calibration functions trained on clusters of learned representations of the input samples to enhance overall calibration. This approach not only improves the calibration score of various methods from 82.28% up to 100% but also introduces a unique matching metric that ensures model selection optimizes both discrimination and calibration. Our generic scheme adapts to any underlying representation, clustering, calibration methods and metric, offering flexibility and superior performance across commonly used calibration methods.
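
The idea of training separate calibration functions per cluster can be illustrated with a minimal sketch. It assumes cluster assignments are already available (for example from any clustering of the learned representations) and uses simple histogram binning as the calibration function; both choices, and the function names `fit_cluster_calibrators` and `calibrate`, are assumptions for illustration and not the authors' pipeline.

```python
from collections import defaultdict


def fit_cluster_calibrators(clusters, probs, labels, n_bins=5):
    """Per cluster, map each probability bin to the observed positive rate."""
    counts = defaultdict(lambda: [0, 0])  # (cluster, bin) -> [positives, total]
    for c, p, y in zip(clusters, probs, labels):
        b = min(int(p * n_bins), n_bins - 1)
        counts[(c, b)][0] += y
        counts[(c, b)][1] += 1
    table = {key: pos / tot for key, (pos, tot) in counts.items()}
    return table, n_bins


def calibrate(calibrators, cluster, prob):
    """Look up the calibrated probability for a sample in a given cluster."""
    table, n_bins = calibrators
    b = min(int(prob * n_bins), n_bins - 1)
    # Assumption: fall back to the raw score when the (cluster, bin) was unseen.
    return table.get((cluster, b), prob)


if __name__ == "__main__":
    cal = fit_cluster_calibrators(
        clusters=[0, 0, 0, 0, 1, 1],
        probs=[0.9, 0.95, 0.85, 0.92, 0.9, 0.88],
        labels=[1, 1, 0, 1, 0, 0],
    )
    print(calibrate(cal, 0, 0.9), calibrate(cal, 1, 0.9))
```

In this toy setup the same raw score of 0.9 is mapped to different calibrated values depending on the cluster, which is the effect a per-cluster ensemble is meant to capture.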

X-Cross: Dynamic Integration of Language Models for Cross-Domain Sequential Recommendation

Apr 29, 2025

Abstract:As new products are emerging daily, recommendation systems are required to quickly adapt to possible new domains without needing extensive retraining. This work presents "X-Cross" -- a novel cross-domain sequential-recommendation model that recommends products in new domains by integrating several domain-specific language models; each model is fine-tuned with low-rank adapters (LoRA). Given a recommendation prompt, operating layer by layer, X-Cross dynamically refines the representation of each source language model by integrating knowledge from all other models. These refined representations are propagated from one layer to the next, leveraging the activations from each domain adapter to ensure domain-specific nuances are preserved while enabling adaptability across domains. Using Amazon datasets for sequential recommendation, X-Cross achieves performance comparable to a model that is fine-tuned with LoRA, while using only 25% of the additional parameters. In cross-domain tasks, such as adapting from Toys domain to Tools, Electronics or Sports, X-Cross demonstrates robust performance, while requiring about 50%-75% less fine-tuning data than LoRA to make fine-tuning effective. Furthermore, X-Cross achieves significant improvement in accuracy over alternative cross-domain baselines. Overall, X-Cross enables scalable and adaptive cross-domain recommendations, reducing computational overhead and providing an efficient solution for data-constrained environments.

Forget What You Know about LLMs Evaluations - LLMs are Like a Chameleon

Feb 11, 2025

Abstract:Large language models (LLMs) often appear to excel on public benchmarks, but these high scores may mask an overreliance on dataset-specific surface cues rather than true language understanding. We introduce the Chameleon Benchmark Overfit Detector (C-BOD), a meta-evaluation framework that systematically distorts benchmark prompts via a parametric transformation and detects overfitting of LLMs. By rephrasing inputs while preserving their semantic content and labels, C-BOD exposes whether a model's performance is driven by memorized patterns. Evaluated on the MMLU benchmark using 26 leading LLMs, our method reveals an average performance degradation of 2.15% under modest perturbations, with 20 out of 26 models exhibiting statistically significant differences. Notably, models with higher baseline accuracy exhibit larger performance differences under perturbation, and larger LLMs tend to be more sensitive to rephrasings, indicating that both cases may overrely on fixed prompt patterns. In contrast, the Llama family and models with lower baseline accuracy show insignificant degradation, suggesting reduced dependency on superficial cues. Moreover, C-BOD's dataset- and model-agnostic design allows easy integration into training pipelines to promote more robust language understanding. Our findings challenge the community to look beyond leaderboard scores and prioritize resilience and generalization in LLM evaluation.

DFPE: A Diverse Fingerprint Ensemble for Enhancing LLM Performance

Jan 29, 2025

Abstract:Large Language Models (LLMs) have shown remarkable capabilities across various natural language processing tasks but often struggle to excel uniformly in diverse or complex domains. We propose a novel ensemble method, Diverse Fingerprint Ensemble (DFPE), which leverages the complementary strengths of multiple LLMs to achieve more robust performance. Our approach involves: (1) clustering models based on response "fingerprint" patterns, (2) applying a quantile-based filtering mechanism to remove underperforming models at a per-subject level, and (3) assigning adaptive weights to remaining models based on their subject-wise validation accuracy. In experiments on the Massive Multitask Language Understanding (MMLU) benchmark, DFPE outperforms the best single model by 3% overall accuracy and 5% in discipline-level accuracy. This method increases the robustness and generalization of LLMs and underscores how model selection, diversity preservation, and performance-driven weighting can effectively address challenging, multi-faceted language understanding tasks.
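
The filtering and weighting steps (2) and (3) described in the DFPE abstract can be sketched for a single subject as follows. The function names, the 0.5 quantile cutoff, and the use of normalized validation accuracy as the weight are assumptions made for illustration, not the authors' implementation; the clustering step (1) is omitted, and accuracies are assumed to be positive.

```python
from collections import defaultdict


def quantile(values, q):
    """Linear-interpolation quantile of a non-empty list, with q in [0, 1]."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def select_and_weight(val_acc, q=0.5):
    """Keep models at or above the q-quantile of accuracy; weights sum to 1."""
    cutoff = quantile(list(val_acc.values()), q)
    kept = {m: a for m, a in val_acc.items() if a >= cutoff}
    total = sum(kept.values())
    return {m: a / total for m, a in kept.items()}


def weighted_vote(answers, weights):
    """Return the answer with the largest total weight among kept models."""
    scores = defaultdict(float)
    for model, answer in answers.items():
        if model in weights:
            scores[answer] += weights[model]
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Hypothetical per-subject validation accuracies for three models.
    weights = select_and_weight({"a": 0.9, "b": 0.6, "c": 0.3}, q=0.5)
    print(weights)
    print(weighted_vote({"a": "A", "b": "B", "c": "B"}, weights))
```

Calling `select_and_weight` once per subject gives the per-subject behavior the abstract describes: a model that is filtered out for one subject can still contribute to another.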

FairTTTS: A Tree Test Time Simulation Method for Fairness-Aware Classification

Jan 14, 2025

Abstract:Algorithmic decision-making has become deeply ingrained in many domains, yet biases in machine learning models can still produce discriminatory outcomes, often harming unprivileged groups. Achieving fair classification is inherently challenging, requiring a careful balance between predictive performance and ethical considerations. We present FairTTTS, a novel post-processing bias mitigation method inspired by the Tree Test Time Simulation (TTTS) method. Originally developed to enhance accuracy and robustness against adversarial inputs through probabilistic decision-path adjustments, TTTS serves as the foundation for FairTTTS. By building on this accuracy-enhancing technique, FairTTTS mitigates bias and improves predictive performance. FairTTTS uses a distance-based heuristic to adjust decisions at protected attribute nodes, ensuring fairness for unprivileged samples. This fairness-oriented adjustment occurs as a post-processing step, allowing FairTTTS to be applied to pre-trained models, diverse datasets, and various fairness metrics without retraining. Extensive evaluation on seven benchmark datasets shows that FairTTTS outperforms traditional methods in fairness improvement, achieving a 20.96% average increase over the baseline compared to 18.78% for related work, and further enhances accuracy by 0.55%. In contrast, competing methods typically reduce accuracy by 0.42%. These results confirm that FairTTTS effectively promotes more equitable decision-making while simultaneously improving predictive performance.

BiasGuard: Guardrailing Fairness in Machine Learning Production Systems

Jan 07, 2025

Abstract:As machine learning (ML) systems increasingly impact critical sectors such as hiring, financial risk assessments, and criminal justice, the imperative to ensure fairness has intensified due to potential negative implications. While much ML fairness research has focused on enhancing training data and processes, addressing the outputs of already deployed systems has received less attention. This paper introduces 'BiasGuard', a novel approach designed to act as a fairness guardrail in production ML systems. BiasGuard leverages Test-Time Augmentation (TTA) powered by Conditional Generative Adversarial Network (CTGAN), a cutting-edge generative AI model, to synthesize data samples conditioned on inverted protected attribute values, thereby promoting equitable outcomes across diverse groups. This method aims to provide equal opportunities for both privileged and unprivileged groups while significantly enhancing the fairness metrics of deployed systems without the need for retraining. Our comprehensive experimental analysis across diverse datasets reveals that BiasGuard enhances fairness by 31% while only reducing accuracy by 0.09% compared to non-mitigated benchmarks. Additionally, BiasGuard outperforms existing post-processing methods in improving fairness, positioning it as an effective tool to safeguard against biases when retraining the model is impractical.

AMFPMC -- An improved method of detecting multiple types of drug-drug interactions using only known drug-drug interactions

Feb 07, 2023

Abstract:Adverse drug interactions are largely preventable causes of medical accidents, which frequently result in physician and emergency room encounters. The detection of drug interactions in a lab, prior to a drug's use in medical practice, is essential; however, it is costly and time-consuming. Machine learning techniques can provide an efficient and accurate means of predicting possible drug-drug interactions and combat the growing problem of adverse drug interactions. Most existing models for predicting interactions rely on the chemical properties of drugs. While such models can be accurate, the required properties are not always available.

Transfer learning for time series classification using synthetic data generation

Jul 16, 2022

Abstract:In this paper, we propose an innovative transfer learning method for time series classification. Instead of using an existing dataset from the UCR archive as the source dataset, we generated a dataset of 15,000,000 synthetic univariate time series using our unique synthetic time series generator algorithm, which can generate data with diverse patterns and angles and different sequence lengths. Furthermore, instead of using classification tasks provided by the UCR archive as the source task as previous studies did, we used our own 55 regression tasks as the source tasks, which produced better results than selecting classification tasks from the UCR archive.

Boosting Anomaly Detection Using Unsupervised Diverse Test-Time Augmentation

Oct 29, 2021

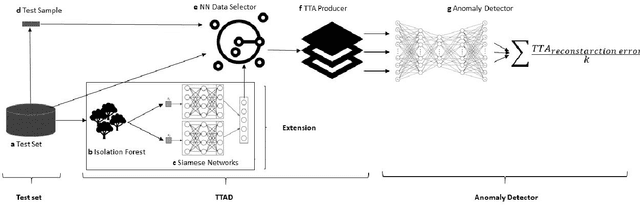

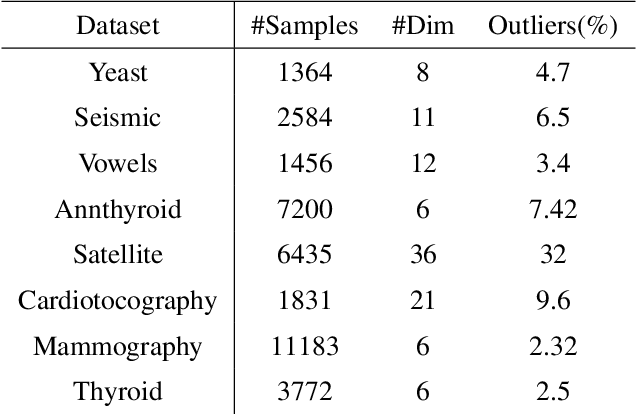

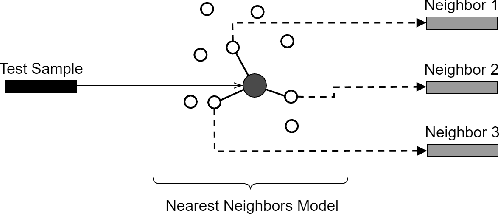

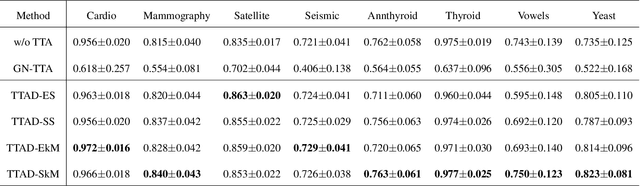

Abstract:Anomaly detection is a well-known task that involves the identification of abnormal events that occur relatively infrequently. Methods for improving anomaly detection performance have been widely studied. However, no studies utilizing test-time augmentation (TTA) for anomaly detection in tabular data have been performed. TTA involves aggregating the predictions of several synthetic versions of a given test sample; TTA produces different points of view for a specific test instance and might decrease its prediction bias. We propose the Test-Time Augmentation for anomaly Detection (TTAD) technique, a TTA-based method aimed at improving anomaly detection performance. TTAD augments a test instance based on its nearest neighbors; various methods, including the k-Means centroid and SMOTE methods, are used to produce the augmentations. Our technique utilizes a Siamese network to learn an advanced distance metric when retrieving a test instance's neighbors. Our experiments show that the anomaly detector that uses our TTA technique achieved significantly higher AUC results on all datasets evaluated.
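
The aggregation idea behind test-time augmentation for anomaly detection can be sketched as below. This is a simplified illustration under stated assumptions: plain Euclidean distance stands in for the learned Siamese distance metric, a fixed-step SMOTE-style interpolation toward each neighbor stands in for the augmentation methods, and `score_fn` is a hypothetical anomaly scorer supplied by the caller. It is not the authors' TTAD implementation.

```python
import math


def euclidean(a, b):
    """Euclidean distance (assumption: replaces the learned Siamese metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbors(x, pool, k):
    """Return the k points in pool closest to x."""
    return sorted(pool, key=lambda p: euclidean(x, p))[:k]


def augment(x, neighbors, step=0.5):
    """SMOTE-style interpolation: move x part of the way toward each neighbor."""
    return [[xi + step * (ni - xi) for xi, ni in zip(x, n)] for n in neighbors]


def tta_score(x, pool, score_fn, k=3):
    """Average the anomaly score of x and of its k augmented versions."""
    samples = [list(x)] + augment(x, nearest_neighbors(x, pool, k))
    return sum(score_fn(s) for s in samples) / len(samples)


if __name__ == "__main__":
    pool = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]

    # Hypothetical scorer: distance from the origin as the anomaly score.
    def score_fn(s):
        return euclidean(s, [0.0, 0.0])

    print(tta_score([4.0, 0.0], pool, score_fn, k=2))
```

Averaging is only one possible aggregation; a median or a weighted mean over the augmented scores would slot into `tta_score` the same way.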
