Linus Gisslén

Towards Better Sample Efficiency in Multi-Agent Reinforcement Learning via Exploration

Mar 17, 2025

Abstract:Multi-agent reinforcement learning has shown promise in learning cooperative behaviors in team-based environments. However, such methods often demand extensive training time. For instance, the state-of-the-art method TiZero takes 40 days to train high-quality policies for a football environment. In this paper, we hypothesize that better exploration mechanisms can improve the sample efficiency of multi-agent methods. We propose two different approaches for better exploration in TiZero: a self-supervised intrinsic reward and a random network distillation bonus. Additionally, we introduce architectural modifications to the original algorithm to enhance TiZero's computational efficiency. We evaluate the sample efficiency of these approaches through extensive experiments. Our results show that random network distillation improves training sample efficiency by 18.8% compared to the original TiZero. Furthermore, we evaluate the qualitative behavior of the models produced by both variants against a heuristic AI, with the self-supervised reward encouraging possession and random network distillation leading to a more offensive performance. Our results highlight the applicability of our random network distillation variant in practical settings. Lastly, due to the nature of the proposed method, we acknowledge its use beyond football simulation, especially in environments with strong multi-agent and strategic aspects.
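The random network distillation bonus mentioned in the abstract can be illustrated with a toy sketch. This is not the paper's implementation: it assumes tiny linear networks in plain Python, and the names `RNDBonus`, `make_linear`, and `apply_linear` are hypothetical. The idea shown is only the general one: a fixed random target network, a predictor trained to match it, and an intrinsic reward equal to the prediction error, which shrinks for observations seen often.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Return a random weight matrix as a list of rows (hypothetical helper)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weights, x):
    """Multiply a weight matrix by an observation vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class RNDBonus:
    """Toy random network distillation with linear networks (illustrative only)."""

    def __init__(self, obs_dim, feat_dim=4, lr=0.05, seed=0):
        rng = random.Random(seed)
        self.target = make_linear(obs_dim, feat_dim, rng)     # fixed, never trained
        self.predictor = make_linear(obs_dim, feat_dim, rng)  # trained to match target
        self.lr = lr

    def bonus(self, obs):
        """Intrinsic reward: mean squared prediction error on this observation."""
        t = apply_linear(self.target, obs)
        p = apply_linear(self.predictor, obs)
        return sum((pi - ti) ** 2 for pi, ti in zip(p, t)) / len(t)

    def update(self, obs):
        """One gradient step on the mean squared error for this observation."""
        t = apply_linear(self.target, obs)
        p = apply_linear(self.predictor, obs)
        for j, row in enumerate(self.predictor):
            err = p[j] - t[j]
            for i in range(len(row)):
                row[i] -= self.lr * 2.0 * err * obs[i] / len(t)


rnd = RNDBonus(obs_dim=3)
seen = [1.0, 0.0, 0.5]
bonus_before = rnd.bonus(seen)
for _ in range(200):
    rnd.update(seen)
bonus_after = rnd.bonus(seen)            # familiar observation: small bonus
bonus_novel = rnd.bonus([0.0, 1.0, 0.0])  # unseen observation: bonus stays large
```

In a full agent the bonus would typically be scaled and added to the environment reward; how TiZero combines the two is not stated in the abstract.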

Improving Conditional Level Generation using Automated Validation in Match-3 Games

Sep 10, 2024

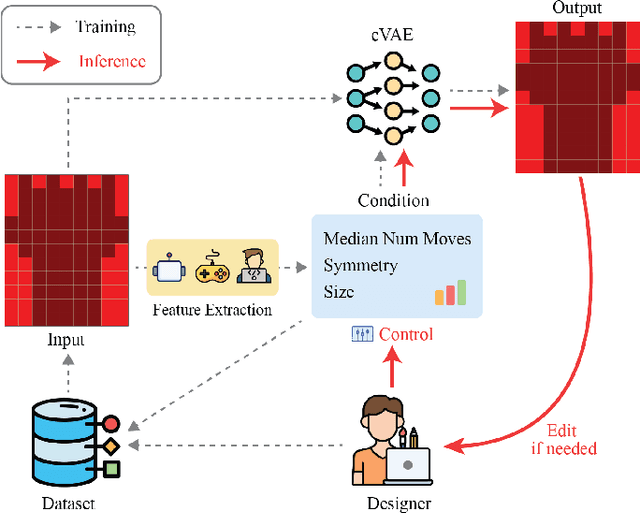

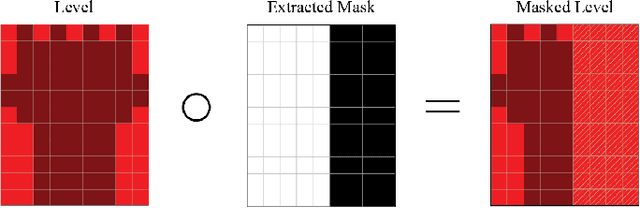

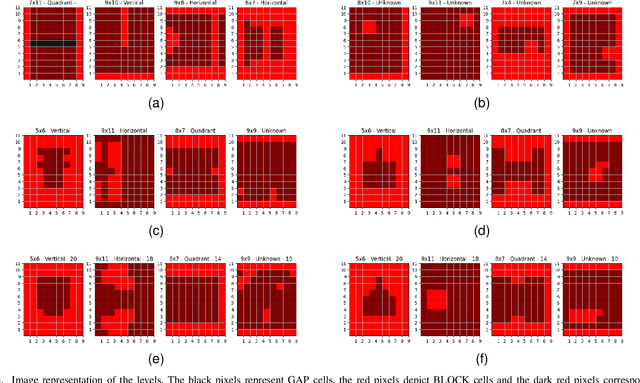

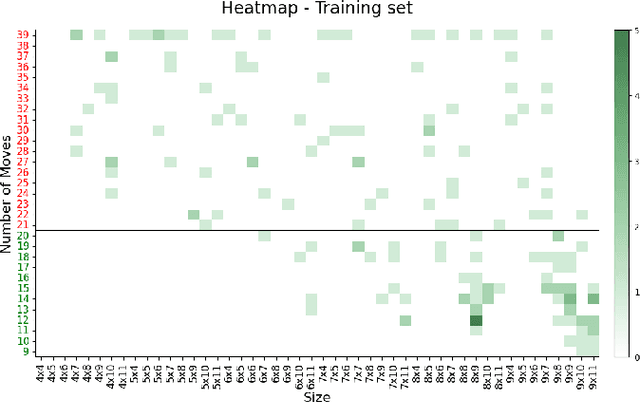

Abstract:Generative models for level generation have shown great potential in game production. However, they often provide limited control over the generation, and the validity of the generated levels is unreliable. Despite this fact, only a few approaches that learn from existing data provide the users with ways of controlling the generation, simultaneously addressing the generation of unsolvable levels. This paper proposes Avalon, a novel method to improve models that learn from existing level designs using difficulty statistics extracted from gameplay. In particular, we use a conditional variational autoencoder to generate layouts for match-3 levels, conditioning the model on pre-collected statistics such as game mechanics like difficulty and relevant visual features like size and symmetry. Our method is general enough that multiple approaches could potentially be used to generate these statistics. We quantitatively evaluate our approach by comparing it to an ablated model without difficulty conditioning. Additionally, we analyze both quantitatively and qualitatively whether the style of the dataset is preserved in the generated levels. Our approach generates more valid levels than the same method without difficulty conditioning.

* 10 pages, 5 figures, 2 tables

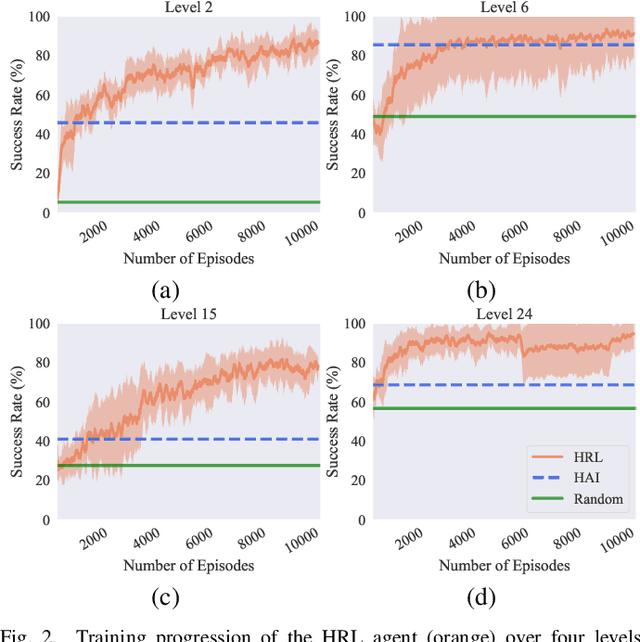

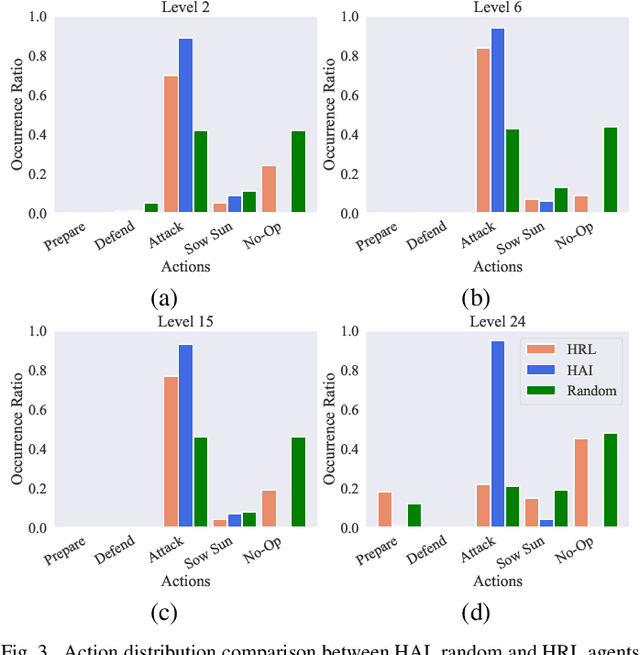

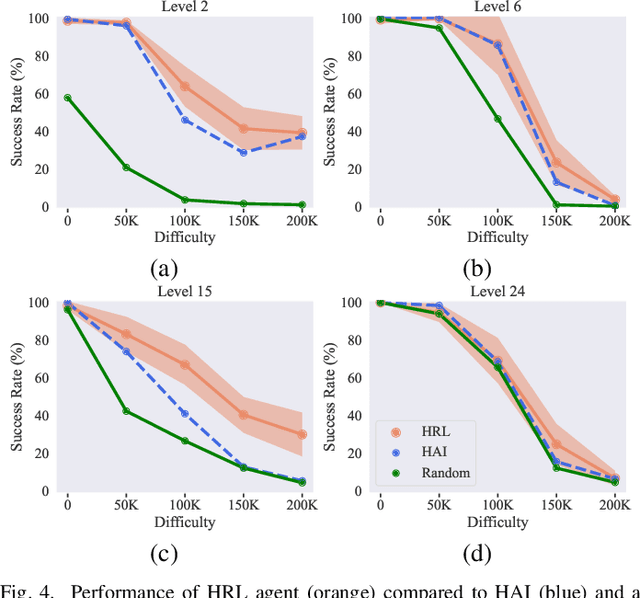

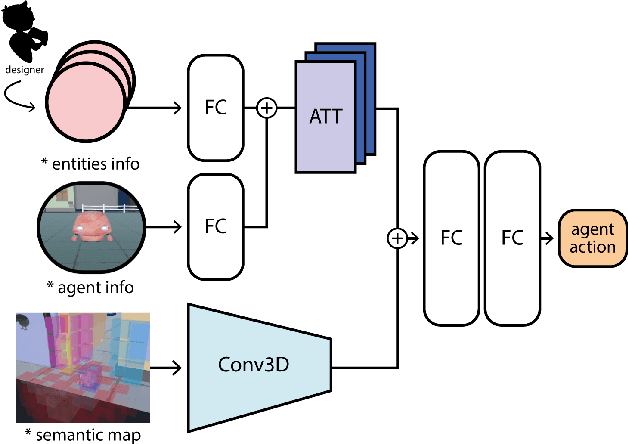

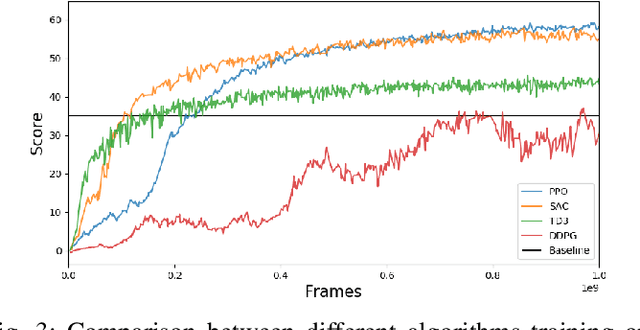

Reinforcement Learning for High-Level Strategic Control in Tower Defense Games

Jun 12, 2024

Abstract:In strategy games, one of the most important aspects of game design is maintaining a sense of challenge for players. Many mobile titles feature quick gameplay loops that allow players to progress steadily, requiring an abundance of levels and puzzles to prevent them from reaching the end too quickly. As with any content creation, testing and validation are essential to ensure engaging gameplay mechanics, enjoyable game assets, and playable levels. In this paper, we propose an automated approach that can be leveraged for gameplay testing and validation that combines traditional scripted methods with reinforcement learning, reaping the benefits of both approaches while adapting to new situations similarly to how a human player would. We test our solution on a popular tower defense game, Plants vs. Zombies. The results show that combining a learned approach, such as reinforcement learning, with a scripted AI produces a higher-performing and more robust agent than using only heuristic AI, achieving a 57.12% success rate compared to 47.95% in a set of 40 levels. Moreover, the results demonstrate the difficulty of training a general agent for this type of puzzle-like game.

Improving Generalization in Game Agents with Data Augmentation in Imitation Learning

Sep 22, 2023

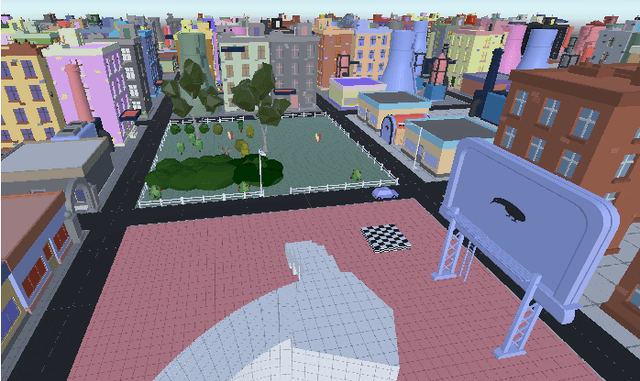

Abstract:Imitation learning is an effective approach for training game-playing agents and, consequently, for efficient game production. However, generalization - the ability to perform well in related but unseen scenarios - is an essential requirement that remains an unsolved challenge for game AI. Generalization is difficult for imitation learning agents because it requires the algorithm to take meaningful actions outside of the training distribution. In this paper we propose a solution to this challenge. Inspired by the success of data augmentation in supervised learning, we augment the training data so the distribution of states and actions in the dataset better represents the real state-action distribution. This study evaluates methods for combining and applying data augmentations to observations, to improve generalization of imitation learning agents. It also provides a performance benchmark of these augmentations across several 3D environments. These results demonstrate that data augmentation is a promising framework for improving generalization in imitation learning agents.
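As a rough illustration of augmenting observations in an imitation learning dataset, the sketch below adds Gaussian noise to each observation while keeping the expert action unchanged. The abstract does not say which augmentations the study evaluates, so the noise augmentation, the function name `augment_demonstrations`, and its parameters are assumptions made for this example only.

```python
import random


def augment_demonstrations(pairs, copies=2, noise_std=0.01, seed=0):
    """Return the original (observation, action) pairs plus noisy copies.

    Each copy perturbs the observation with Gaussian noise and keeps the
    expert's action as the label (an assumed augmentation, for illustration).
    """
    rng = random.Random(seed)
    augmented = list(pairs)  # originals come first, untouched
    for obs, action in pairs:
        for _ in range(copies):
            noisy_obs = [x + rng.gauss(0.0, noise_std) for x in obs]
            augmented.append((noisy_obs, action))
    return augmented


demos = [([0.0, 1.0, 0.5], "forward"), ([0.2, 0.8, 0.1], "jump")]
bigger = augment_demonstrations(demos, copies=3)
```

The augmented list can then be fed to any behavioral cloning loop in place of the raw demonstrations.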

Generating Personas for Games with Multimodal Adversarial Imitation Learning

Aug 15, 2023

Abstract:Reinforcement learning has been widely successful in producing agents capable of playing games at a human level. However, this requires complex reward engineering, and the agent's resulting policy is often unpredictable. Going beyond reinforcement learning is necessary to model a wide range of human playstyles, which can be difficult to represent with a reward function. This paper presents a novel imitation learning approach to generate multiple persona policies for playtesting. Multimodal Generative Adversarial Imitation Learning (MultiGAIL) uses an auxiliary input parameter to learn distinct personas using a single-agent model. MultiGAIL is based on generative adversarial imitation learning and uses multiple discriminators as reward models, inferring the environment reward by comparing the agent and distinct expert policies. The reward from each discriminator is weighted according to the auxiliary input. Our experimental analysis demonstrates the effectiveness of our technique in two environments with continuous and discrete action spaces.
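The weighting of discriminator rewards by the auxiliary input can be sketched as a weighted sum. The abstract does not give the exact reward form, so this example assumes one common GAIL-style reward, -log(1 - D), and treats the auxiliary input as a list of per-persona weights; `gail_reward` and `blended_reward` are hypothetical names.

```python
import math


def gail_reward(d_prob, eps=1e-8):
    """Assumed GAIL-style reward, -log(1 - D), clamped to avoid log(0)."""
    return -math.log(max(1.0 - d_prob, eps))


def blended_reward(disc_probs, aux_weights):
    """Weight each discriminator's reward by the matching auxiliary weight."""
    if len(disc_probs) != len(aux_weights):
        raise ValueError("need one auxiliary weight per discriminator")
    return sum(w * gail_reward(p) for w, p in zip(aux_weights, disc_probs))


# Two persona discriminators scoring the same state-action pair.
probs = [0.5, 0.9]
only_first = blended_reward(probs, [1.0, 0.0])   # pure persona A
only_second = blended_reward(probs, [0.0, 1.0])  # pure persona B
half_half = blended_reward(probs, [0.5, 0.5])    # blend of both
```

Changing the auxiliary weights at run time would then shift which expert the single policy is rewarded for resembling.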

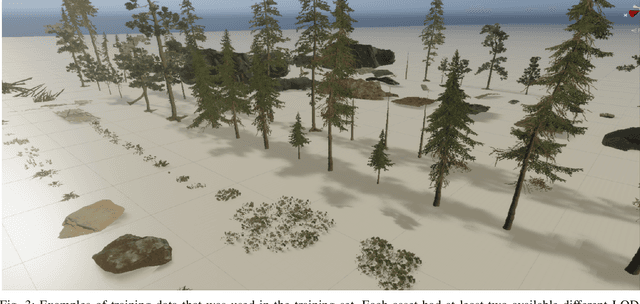

Automatic Testing and Validation of Level of Detail Reductions Through Supervised Learning

Aug 25, 2022

Abstract:Modern video games are rapidly growing in size and scale, and to create rich and interesting environments, a large amount of content is needed. As a consequence, often several thousands of detailed 3D assets are used to create a single scene. As each asset's polygon mesh can contain millions of polygons, the number of polygons that need to be drawn every frame may exceed several billion. Therefore, the computational resources often limit how many detailed objects can be displayed in a scene. To push this limit and to optimize performance, one can reduce the polygon count of the assets when possible. The idea is that an object farther from the capturing camera occupies a smaller area on screen, so its polygon count may be reduced without affecting the perceived quality. Level of Detail (LOD) refers to the complexity level of a 3D model representation. The process of removing complexity is often called LOD reduction and can be done automatically with an algorithm or by hand by artists. However, this process may lead to deterioration of the visual quality if the different LODs differ significantly, or if the LOD reduction transition is not seamless. Today the validation of these results is mainly done manually, requiring an expert to visually inspect the results. However, this process is slow, mundane, and therefore prone to error. Herein we propose a method to automate this process based on the use of deep convolutional networks. We report promising results and envision that this method can be used to automate the process of LOD reduction testing and validation.

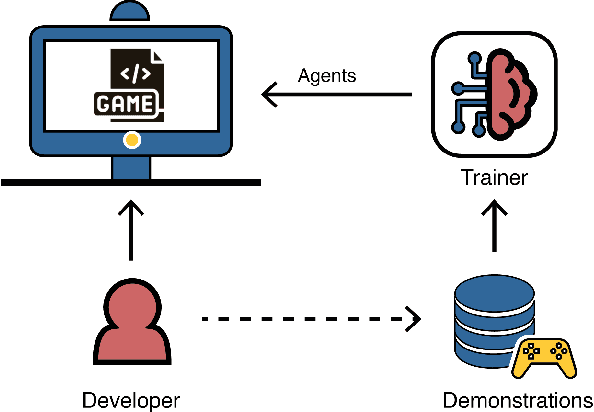

Towards Informed Design and Validation Assistance in Computer Games Using Imitation Learning

Aug 19, 2022

Abstract:In games, as in many other domains, design validation and testing is a huge challenge as systems are growing in size and manual testing is becoming infeasible. This paper proposes a new approach to automated game validation and testing. Our method leverages a data-driven imitation learning technique, which requires little effort and time and no knowledge of machine learning or programming, that designers can use to efficiently train game testing agents. We investigate the validity of our approach through a user study with industry experts. The survey results show that our method is indeed a valid approach to game validation and that data-driven programming would be a useful aid to reducing effort and increasing quality of modern playtesting. The survey also highlights several open challenges. With the help of the most recent literature, we analyze the identified challenges and propose future research directions suitable for supporting and maximizing the utility of our approach.

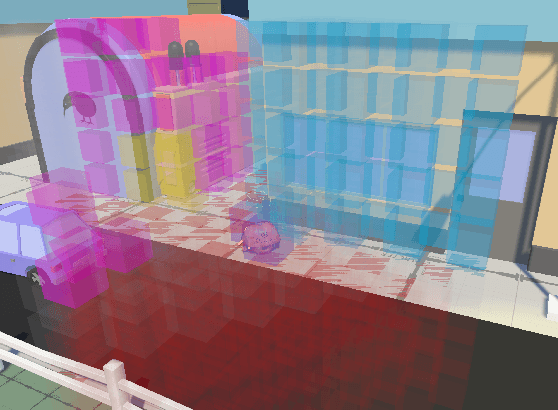

CCPT: Automatic Gameplay Testing and Validation with Curiosity-Conditioned Proximal Trajectories

Feb 21, 2022

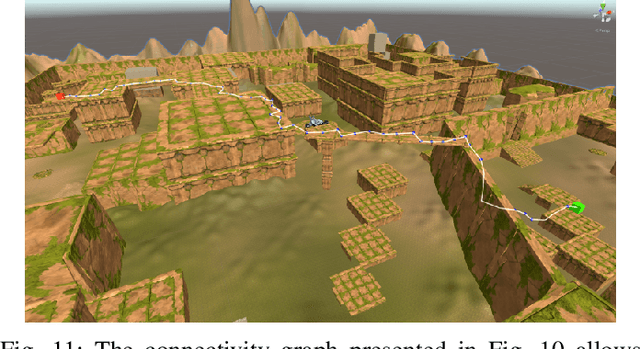

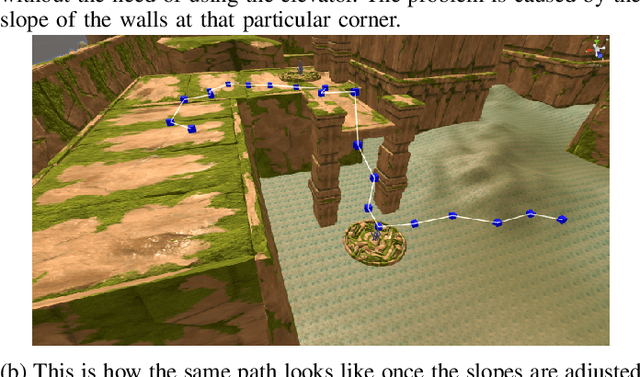

Abstract:This paper proposes a novel deep reinforcement learning algorithm to perform automatic analysis and detection of gameplay issues in complex 3D navigation environments. The Curiosity-Conditioned Proximal Trajectories (CCPT) method combines curiosity and imitation learning to train agents to methodically explore in the proximity of known trajectories derived from expert demonstrations. We show how CCPT can explore complex environments, discover gameplay issues and design oversights in the process, and recognize and highlight them directly to game designers. We further demonstrate the effectiveness of the algorithm in a novel 3D navigation environment which reflects the complexity of modern AAA video games. Our results show a higher level of coverage and bug discovery than baseline methods, and CCPT can hence provide a valuable tool for game designers to identify issues in game design automatically.

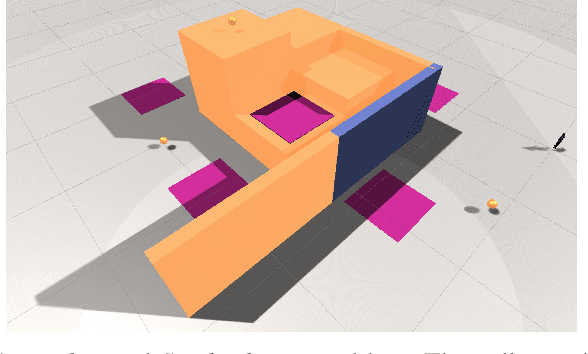

Augmenting Automated Game Testing with Deep Reinforcement Learning

Mar 29, 2021

Abstract:General game testing relies on the use of human play testers, play test scripting, and prior knowledge of areas of interest to produce relevant test data. Using deep reinforcement learning (DRL), we introduce a self-learning mechanism to the game testing framework. With DRL, the framework is capable of exploring and/or exploiting the game mechanics based on a user-defined, reinforcing reward signal. As a result, test coverage is increased and unintended game play mechanics, exploits and bugs are discovered in a multitude of game types. In this paper, we show that DRL can be used to increase test coverage, find exploits, test map difficulty, and to detect common problems that arise in the testing of first-person shooter (FPS) games.

* 4 pages, 6 figures, 2020 IEEE Conference on Games (CoG), 600-603

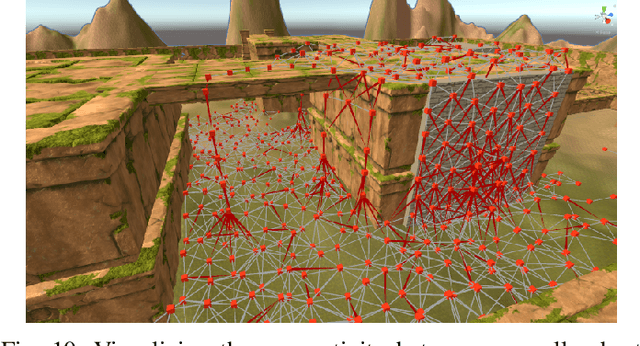

Improving Playtesting Coverage via Curiosity Driven Reinforcement Learning Agents

Mar 25, 2021

Abstract:As modern games continue growing both in size and complexity, it has become more challenging to ensure that all the relevant content is tested and that any potential issue is properly identified and fixed. Attempting to maximize testing coverage using only human participants, however, results in a tedious and hard-to-orchestrate process which normally slows down the development cycle. Complementing playtesting via autonomous agents has shown great promise in accelerating and simplifying this process. This paper addresses the problem of automatically exploring and testing a given scenario using reinforcement learning agents trained to maximize game state coverage. Each of these agents is rewarded based on the novelty of its actions, thus encouraging a curious and exploratory behaviour in a complex 3D scenario where previously proposed exploration techniques perform poorly. The curious agents are able to learn the complex navigation mechanics required to reach the different areas around the map, thus providing the necessary data to identify potential issues. Moreover, the paper also explores different visualization strategies and evaluates how to make better use of the collected data to drive design decisions and to recognize possible problems and oversights.
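One simple way to reward novelty and track coverage is to count visits to discretized map cells and pay a reward that decays with the visit count. The abstract does not specify how novelty is measured, so the count-based rule below, the `VisitCounter` class, and the `cell_size` parameter are assumptions used as a stand-in rather than the paper's method.

```python
import math
from collections import Counter


class VisitCounter:
    """Count-based novelty reward over a grid of map cells (illustrative)."""

    def __init__(self, cell_size=1.0):
        self.cell_size = cell_size
        self.counts = Counter()

    def _cell(self, position):
        """Map a continuous position to the integer grid cell containing it."""
        return tuple(math.floor(c / self.cell_size) for c in position)

    def reward(self, position):
        """Record a visit and return 1 / sqrt(visit count) for that cell."""
        cell = self._cell(position)
        self.counts[cell] += 1
        return 1.0 / math.sqrt(self.counts[cell])

    def coverage(self):
        """Number of distinct cells visited so far."""
        return len(self.counts)


counter = VisitCounter(cell_size=2.0)
first = counter.reward((0.5, 0.5, 0.0))    # new cell
repeat = counter.reward((1.5, 0.1, 0.0))   # same cell again, lower reward
elsewhere = counter.reward((5.0, 0.0, 0.0))  # different cell
```

The same counts can double as a visitation heatmap for the kind of coverage visualization the abstract mentions.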
