Ling Lo

TriDF: Evaluating Perception, Detection, and Hallucination for Interpretable DeepFake Detection

Dec 23, 2025

Abstract:Advances in generative modeling have made it increasingly easy to fabricate realistic portrayals of individuals, creating serious risks for security, communication, and public trust. Detecting such person-driven manipulations requires systems that not only distinguish altered content from authentic media but also provide clear and reliable reasoning. In this paper, we introduce TriDF, a comprehensive benchmark for interpretable DeepFake detection. TriDF contains high-quality forgeries from advanced synthesis models, covering 16 DeepFake types across image, video, and audio modalities. The benchmark evaluates three key aspects: Perception, which measures the ability of a model to identify fine-grained manipulation artifacts using human-annotated evidence; Detection, which assesses classification performance across diverse forgery families and generators; and Hallucination, which quantifies the reliability of model-generated explanations. Experiments on state-of-the-art multimodal large language models show that accurate perception is essential for reliable detection, but hallucination can severely disrupt decision-making, revealing the interdependence of these three aspects. TriDF provides a unified framework for understanding the interaction between detection accuracy, evidence identification, and explanation reliability, offering a foundation for building trustworthy systems that address real-world synthetic media threats.

RecipeGen: A Benchmark for Real-World Recipe Image Generation

Mar 07, 2025

Abstract:Recipe image generation is an important challenge in food computing, with applications ranging from culinary education to interactive recipe platforms. However, there is currently no real-world dataset that comprehensively connects recipe goals, sequential steps, and corresponding images. To address this, we introduce RecipeGen, the first real-world goal-step-image benchmark for recipe generation, featuring diverse ingredients, varied recipe steps, multiple cooking styles, and a broad collection of food categories. The data is available at https://github.com/zhangdaxia22/RecipeGen.

Future Sight and Tough Fights: Revolutionizing Sequential Recommendation with FENRec

Dec 16, 2024

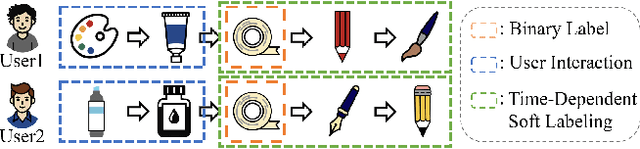

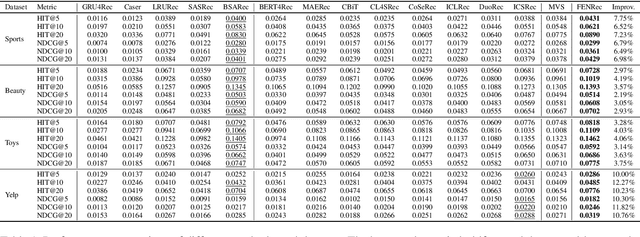

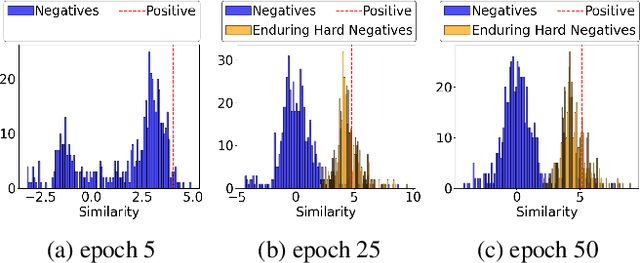

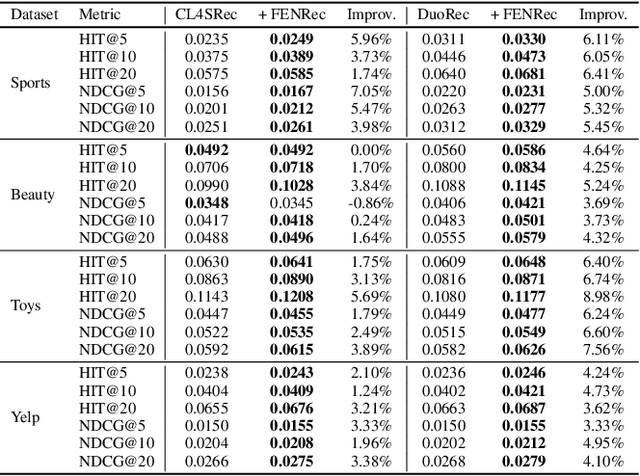

Abstract:Sequential recommendation (SR) systems predict user preferences by analyzing time-ordered interaction sequences. A common challenge for SR is data sparsity, as users typically interact with only a limited number of items. While previous approaches have employed contrastive learning to address this challenge, they often adopt binary labels, missing finer patterns and overlooking detailed information in users' subsequent behaviors. Additionally, they rely on random sampling to select negatives in contrastive learning, which may not yield sufficiently hard negatives during later training stages. In this paper, we propose Future data utilization with Enduring Negatives for contrastive learning in sequential Recommendation (FENRec). Our approach leverages future data with time-dependent soft labels and generates enduring hard negatives from existing data, thereby tackling data sparsity more effectively. Experimental results demonstrate state-of-the-art performance across four benchmark datasets, with an average improvement of 6.16% across all metrics.

ReCorD: Reasoning and Correcting Diffusion for HOI Generation

Jul 25, 2024

Abstract:Diffusion models have revolutionized image generation by leveraging natural language to guide the creation of multimedia content. Despite significant advancements in such generative models, challenges persist in depicting detailed human-object interactions (HOIs), especially regarding pose and object placement accuracy. We introduce a training-free method named Reasoning and Correcting Diffusion (ReCorD) to address these challenges. Our model couples Latent Diffusion Models with Visual Language Models to refine the generation process, ensuring precise depictions of HOIs. We propose an interaction-aware reasoning module to improve the interpretation of the interaction, along with an interaction-correcting module that delicately refines the output image for more precise HOI generation. Through a meticulous process of pose selection and object positioning, ReCorD achieves superior fidelity in generated images while efficiently reducing computational requirements. We conduct comprehensive experiments on three benchmarks to demonstrate the significant progress in solving text-to-image generation tasks, showcasing ReCorD's ability to render complex interactions accurately by outperforming existing methods in HOI classification score, as well as FID and Verb CLIP-Score. The project website is available at https://alberthkyhky.github.io/ReCorD/.

Technical Report for Valence-Arousal Estimation in ABAW2 Challenge

Jul 08, 2021

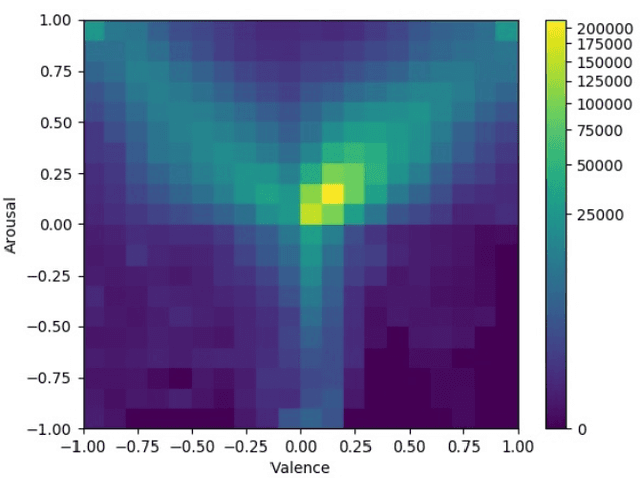

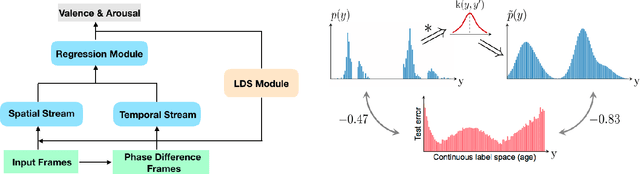

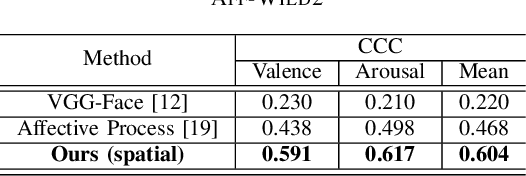

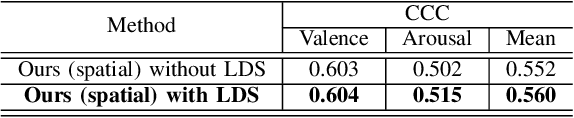

Abstract:In this work, we describe our method for tackling the valence-arousal estimation challenge from the ABAW2 ICCV-2021 Competition. The competition organizers provide the in-the-wild Aff-Wild2 dataset for participants to analyze affective behavior in real-life settings. We use a two-stream model to learn emotion features from appearance and action, respectively. To address the data imbalance problem, we apply label distribution smoothing (LDS) to re-weight labels. Our proposed method achieves a Concordance Correlation Coefficient (CCC) of 0.591 and 0.617 for valence and arousal, respectively, on the validation set of the Aff-Wild2 dataset.
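For readers unfamiliar with the metric reported above, the following is a minimal sketch of the Concordance Correlation Coefficient in its standard form, 2·cov(x, y) / (var(x) + var(y) + (mean(x) − mean(y))²). The function name and the toy inputs are illustrative assumptions; this is not the challenge's official evaluation code.

```python
def concordance_correlation_coefficient(predictions, targets):
    """Return the CCC between two equal-length sequences of numbers.

    Hypothetical helper for illustration; uses population (biased)
    variance and covariance, as in the usual definition of the metric.
    """
    n = len(predictions)
    if n == 0 or n != len(targets):
        raise ValueError("inputs must be non-empty and of equal length")

    mean_p = sum(predictions) / n
    mean_t = sum(targets) / n

    var_p = sum((p - mean_p) ** 2 for p in predictions) / n
    var_t = sum((t - mean_t) ** 2 for t in targets) / n
    cov = sum((p - mean_p) * (t - mean_t)
              for p, t in zip(predictions, targets)) / n

    denom = var_p + var_t + (mean_p - mean_t) ** 2
    if denom == 0:
        # Both sequences are constant and identical: treat as perfect agreement.
        return 1.0
    return 2 * cov / denom


if __name__ == "__main__":
    # Toy values, not data from Aff-Wild2.
    print(concordance_correlation_coefficient([0.1, 0.4, 0.8], [0.2, 0.5, 0.7]))
```

Unlike Pearson correlation, the CCC also penalizes a constant offset between predictions and labels, because the squared difference of the means appears in the denominator.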

An Overview of Facial Micro-Expression Analysis: Data, Methodology and Challenge

Dec 21, 2020

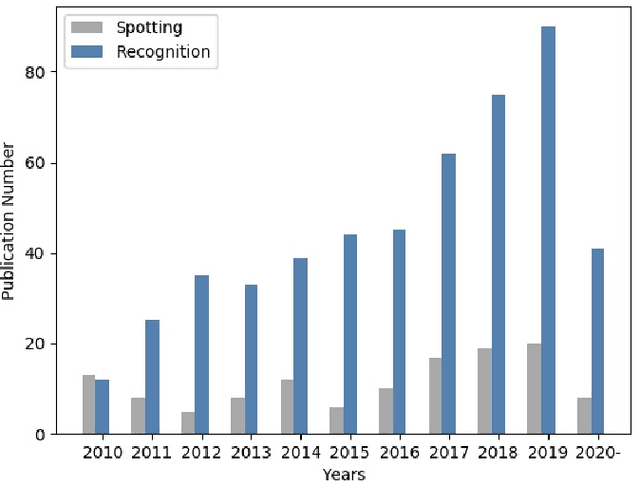

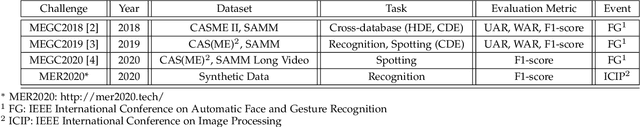

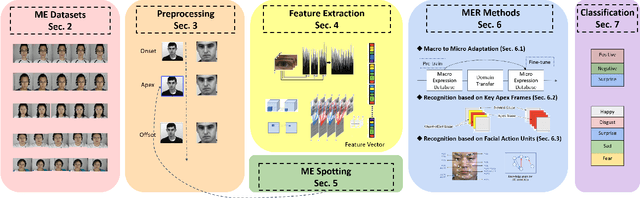

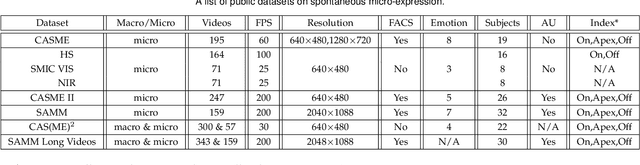

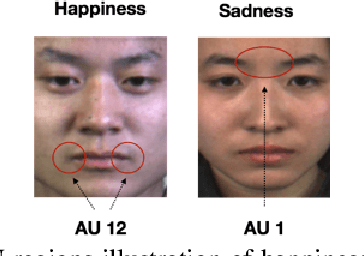

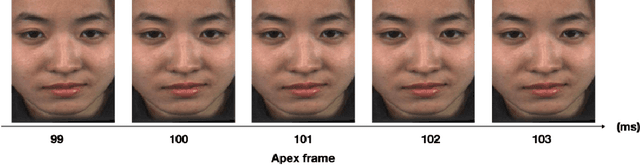

Abstract:Facial micro-expressions are brief and subtle facial movements that appear during emotional communication. Compared with macro-expressions, micro-expressions are more challenging to analyze because of their short duration and fine-grained changes. In recent years, micro-expression recognition (MER) has drawn much attention because it can benefit a wide range of applications, e.g., police interrogation, clinical diagnosis, depression analysis, and business negotiation. In this survey, we offer a fresh overview of current research directions and challenges in MER. For example, we review MER approaches from three novel aspects: macro-to-micro adaptation, recognition based on key apex frames, and recognition based on facial action units. Moreover, to mitigate the problem of limited and biased ME data, we survey synthetic data generation as a way to enrich the diversity of micro-expression data. Since micro-expression spotting can boost micro-expression analysis, we also introduce state-of-the-art spotting methods. Finally, we discuss the challenges in MER research and provide potential solutions as well as possible directions for further investigation.

MER-GCN: Micro Expression Recognition Based on Relation Modeling with Graph Convolutional Network

Apr 19, 2020

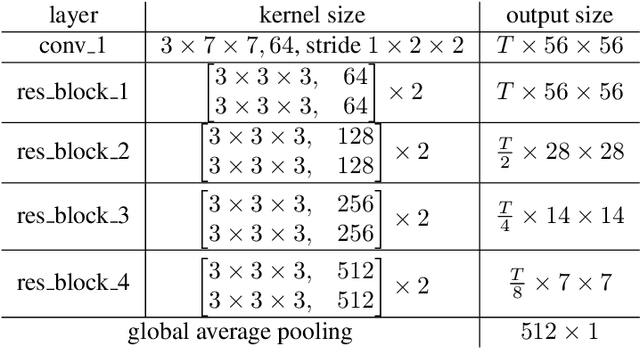

Abstract:A micro-expression (ME) is a spontaneous, involuntary facial movement that can reveal a person's true feelings. Recently, a growing body of research has addressed this field by combining it with deep learning techniques. Action units (AUs) are the fundamental actions reflecting facial muscle movements, and AU detection has been adopted in many studies to classify facial expressions. However, the time-consuming annotation process makes it difficult to correlate combinations of AUs with specific emotion classes. Inspired by the way Graph Convolutional Networks (GCNs) build relationships between nodes, we propose an end-to-end AU-oriented graph classification network, namely MER-GCN, which uses 3D ConvNets to extract AU features and applies GCN layers to discover the dependencies between AU nodes for ME categorization. To the best of our knowledge, this work is the first end-to-end architecture for Micro-Expression Recognition (MER) using an AU-based GCN. The experimental results show that our approach outperforms CNN-based MER networks.
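To make the idea of a GCN layer over AU nodes concrete, here is a minimal sketch of one graph-convolution step using the widely used propagation rule ReLU(D^(-1/2) (A + I) D^(-1/2) H W). It is an assumption that MER-GCN's layers follow this exact form; the adjacency matrix, node features, and weights below are toy values standing in for AU relations and 3D ConvNet features, and all function names are hypothetical.

```python
import math


def normalize_adjacency(adj):
    """Return D^(-1/2) (A + I) D^(-1/2) for a square, non-negative adjacency matrix."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def gcn_layer(adj, features, weights):
    """One propagation step: ReLU(A_norm @ H @ W)."""
    a_norm = normalize_adjacency(adj)
    hidden = matmul(matmul(a_norm, features), weights)
    return [[max(0.0, value) for value in row] for row in hidden]


if __name__ == "__main__":
    # Three hypothetical AU nodes; nodes 0 and 1 are related, node 2 is isolated.
    adjacency = [[0, 1, 0],
                 [1, 0, 0],
                 [0, 0, 0]]
    node_features = [[1.0, 0.0],
                     [0.0, 1.0],
                     [1.0, 1.0]]
    layer_weights = [[0.5, -0.5],
                     [0.5, 0.5]]
    for row in gcn_layer(adjacency, node_features, layer_weights):
        print(row)
```

Each output row mixes a node's own features with those of its neighbors, which is how a stack of such layers could capture dependencies between co-occurring AUs.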
