Linchuan Zhang

RoGER-SLAM: A Robust Gaussian Splatting SLAM System for Noisy and Low-light Environment Resilience

Oct 26, 2025

Abstract: The reliability of Simultaneous Localization and Mapping (SLAM) is severely constrained in environments where visual inputs suffer from noise and low illumination. Although recent 3D Gaussian Splatting (3DGS) based SLAM frameworks achieve high-fidelity mapping under clean conditions, they remain vulnerable to compounded degradations that impair mapping and tracking performance. A key observation underlying our work is that the original 3DGS rendering pipeline inherently behaves as an implicit low-pass filter, attenuating high-frequency noise but also risking over-smoothing. Building on this insight, we propose RoGER-SLAM, a robust 3DGS SLAM system tailored for noise and low-light resilience. The framework integrates three innovations: a Structure-Preserving Robust Fusion (SP-RoFusion) mechanism that couples rendered appearance, depth, and edge cues; an adaptive tracking objective with residual balancing regularization; and a Contrastive Language-Image Pretraining (CLIP)-based enhancement module, selectively activated under compounded degradations to restore semantic and structural fidelity. Comprehensive experiments on Replica, TUM, and real-world sequences show that RoGER-SLAM consistently improves trajectory accuracy and reconstruction quality compared with other 3DGS-SLAM systems, especially under adverse imaging conditions.

Measuring Calibration in Deep Learning

Apr 02, 2019

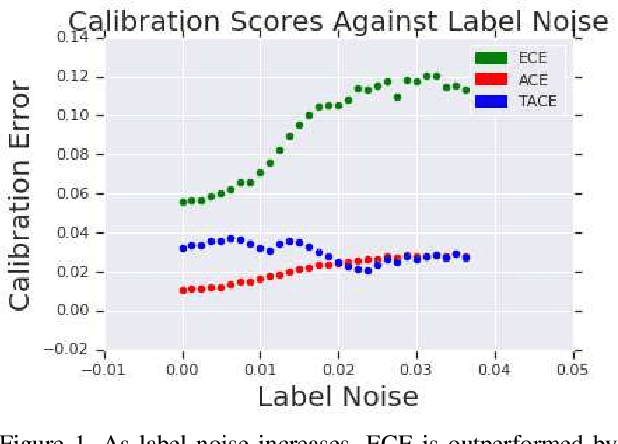

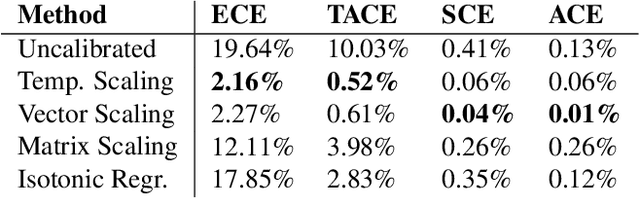

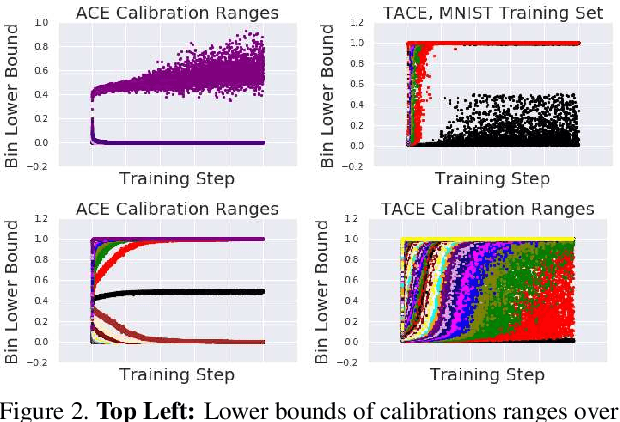

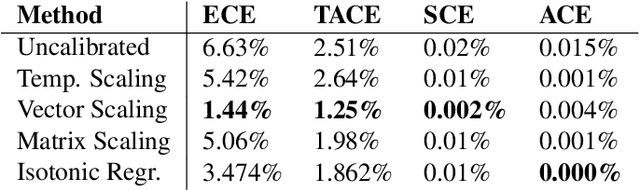

Abstract: The reliability of a machine learning model's confidence in its predictions is critical for high-risk applications. Calibration, the idea that a model's predicted probabilities of outcomes reflect the true probabilities of those outcomes, formalizes this notion. While analyzing the calibration of deep neural networks, we've identified core problems with the way calibration is currently measured. We design the Thresholded Adaptive Calibration Error (TACE) metric to resolve these pathologies and show that it outperforms other metrics, especially in settings where predictions beyond the maximum prediction that is chosen as the output class matter. There are many cases where what a practitioner cares about is the calibration of a specific prediction, and so we introduce a dynamic-programming-based Prediction Specific Calibration Error (PSCE) that smoothly considers the calibration of nearby predictions to give an estimate of the calibration error of a specific prediction.
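The abstract names TACE without defining it, so the following is only a rough sketch of what a thresholded, adaptively binned calibration error could look like, not the authors' implementation. It assumes three things the abstract does not state: that bins are equal-mass (the same number of predictions per bin), that the threshold drops every class probability at or below a small cutoff, and that the per-bin gaps are averaged without weighting. The function name and the defaults `n_bins=10` and `threshold=1e-2` are hypothetical.

```python
def thresholded_adaptive_calibration_error(probs, labels, n_bins=10, threshold=1e-2):
    """Illustrative calibration error over all classes (assumed definition).

    probs:  list of per-example probability lists, shape (N, K)
    labels: list of N integer class labels
    """
    n_classes = len(probs[0])
    class_errors = []
    for k in range(n_classes):
        # Keep only predictions for class k above the threshold (assumption),
        # paired with whether class k was the true label, sorted by confidence.
        pairs = sorted(
            (p[k], 1.0 if y == k else 0.0)
            for p, y in zip(probs, labels)
            if p[k] > threshold
        )
        if not pairs:
            continue
        n = len(pairs)
        bins = min(n_bins, n)  # never create an empty bin
        gaps = []
        for b in range(bins):
            # Equal-mass bin: a contiguous slice of the sorted predictions.
            chunk = pairs[b * n // bins:(b + 1) * n // bins]
            mean_conf = sum(c for c, _ in chunk) / len(chunk)
            accuracy = sum(h for _, h in chunk) / len(chunk)
            gaps.append(abs(mean_conf - accuracy))
        class_errors.append(sum(gaps) / len(gaps))
    # Average the per-class errors; 0.0 if every prediction was thresholded out.
    return sum(class_errors) / len(class_errors) if class_errors else 0.0
```

Because every class probability is scored, not only the top one, a sketch like this also reflects predictions beyond the maximum, which is the setting the abstract highlights.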
