Ligang Lu

Leveraging Deep Operator Networks (DeepONet) for Acoustic Full Waveform Inversion (FWI)

Apr 14, 2025

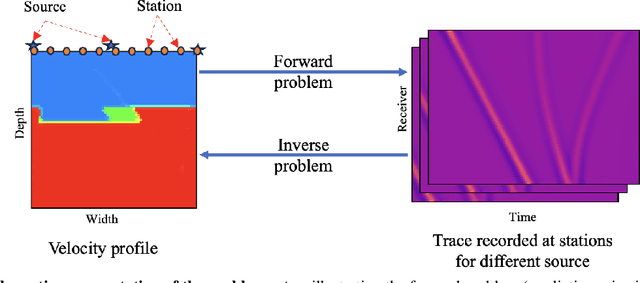

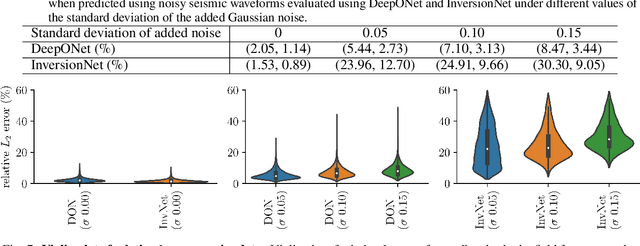

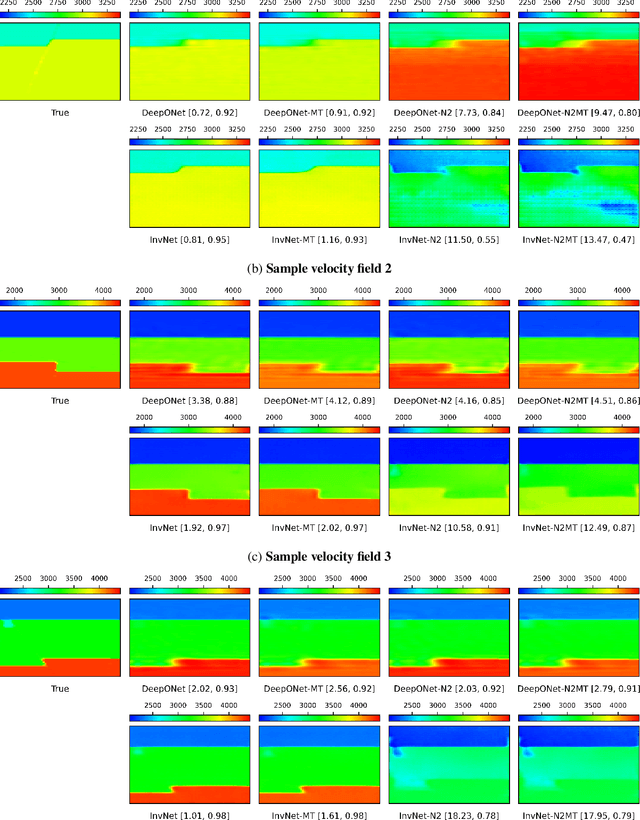

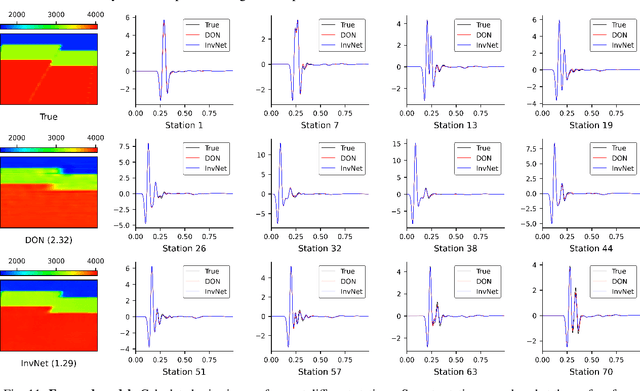

Abstract: Full Waveform Inversion (FWI) is an important geophysical technique for subsurface property prediction. It solves the inverse problem of predicting high-resolution Earth interior models from seismic data. Traditional FWI methods are computationally demanding, and inverse problems in geophysics often suffer from non-uniqueness because data are limited, typically collected only at the surface. In this study, we introduce a novel methodology that leverages Deep Operator Networks (DeepONet) to attempt to improve both the efficiency and accuracy of FWI. The proposed DeepONet methodology inverts seismic waveforms for the subsurface velocity field and is able to capture some of its key features. We show that the architecture can be applied to noisy seismic data with better accuracy than some other machine learning methods. We also test the proposed method on out-of-distribution prediction for different velocity models, where its accuracy is comparable to, and on some velocity models better than, that of some other machine learning methods. To improve the FWI workflow, we propose using the DeepONet output as a starting model for conventional FWI, which may improve FWI performance. While we have only shown that DeepONet facilitates faster convergence than starting with a homogeneous velocity field, it may have some benefits compared to other approaches to constructing starting models. This integration of DeepONet into FWI may accelerate the inversion process and may also enhance its robustness and reliability.

Communication-Efficient Design of Learning System for Energy Demand Forecasting of Electrical Vehicles

Sep 04, 2023

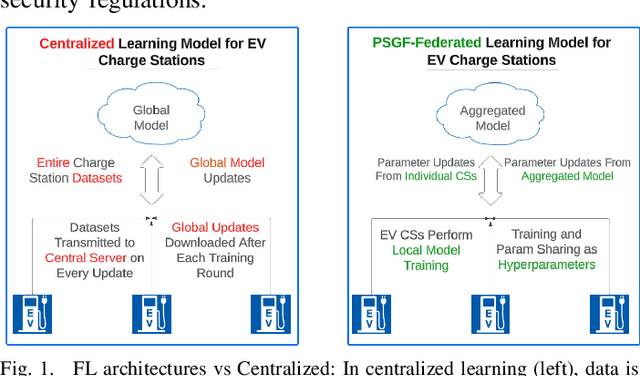

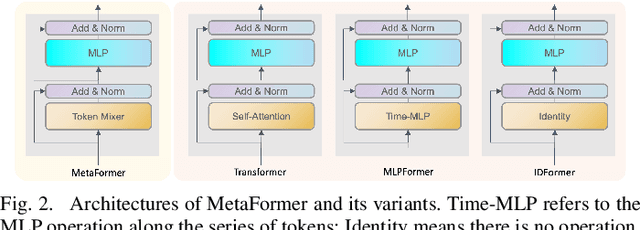

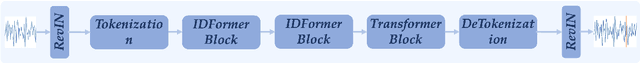

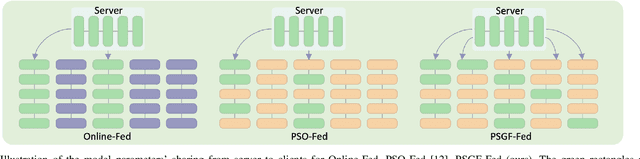

Abstract: Applying machine learning (ML) to time series forecasting of energy utilization is challenging for several reasons. Chief among these are the non-homogeneity of energy utilization datasets and the geographical dispersion of energy consumers. Furthermore, these ML models require vast amounts of training data and incur substantial communication overhead before they become effective. In this paper, we propose a communication-efficient time series forecasting model that combines recent advances in transformer architectures, deployed across a geographically dispersed set of EV charging stations, with an efficient variant of federated learning (FL) to enable distributed training. The time series prediction performance and communication overhead of our FL approach are compared against those of counterpart models; it is shown to reach parity in performance while consuming significantly lower data rates during training. Additionally, the comparison is made on EV charging data as well as other time series datasets to demonstrate the flexibility of the proposed model for general time series prediction beyond energy demand. The source code for this work is available at https://github.com/XuJiacong/LoGTST_PSGF
