Lianyun Li

Self-supervised Graph Representation Learning for Black Market Account Detection

Dec 06, 2022

Abstract: Nowadays, Multi-purpose Messaging Mobile App (MMMA) has become increasingly prevalent. MMMAs attract fraudsters and some cybercriminals provide support for frauds via black market accounts (BMAs). Compared to fraudsters, BMAs are not directly involved in frauds and are more difficult to detect. This paper illustrates our BMA detection system SGRL (Self-supervised Graph Representation Learning) used in WeChat, a representative MMMA with over a billion users. We tailor Graph Neural Network and Graph Self-supervised Learning in SGRL for BMA detection. The workflow of SGRL contains a pretraining phase that utilizes structural information, node attribute information and available human knowledge, and a lightweight detection phase. In offline experiments, SGRL outperforms state-of-the-art methods by 16.06%-58.17% on offline evaluation measures. We deploy SGRL in the online environment to detect BMAs on the billion-scale WeChat graph, and it exceeds the alternative by 7.27% on the online evaluation measure. In conclusion, SGRL can alleviate label reliance, generalize well to unseen data, and effectively detect BMAs in WeChat.

Attacking Recommender Systems with Augmented User Profiles

May 17, 2020

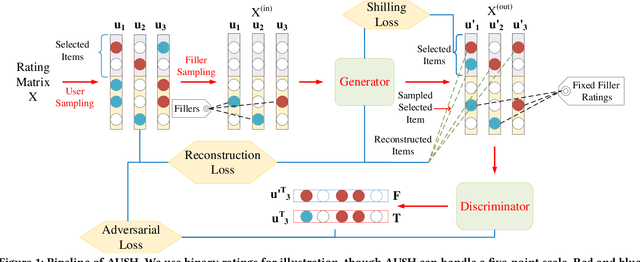

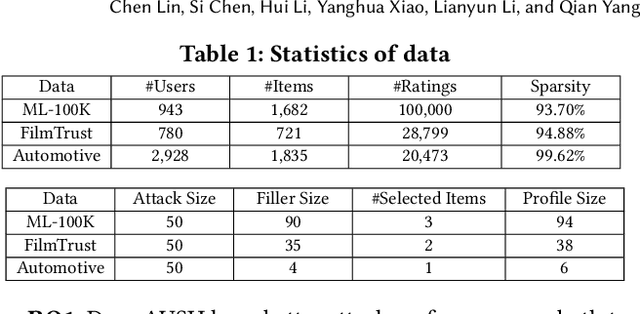

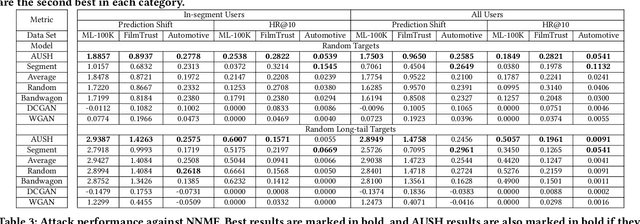

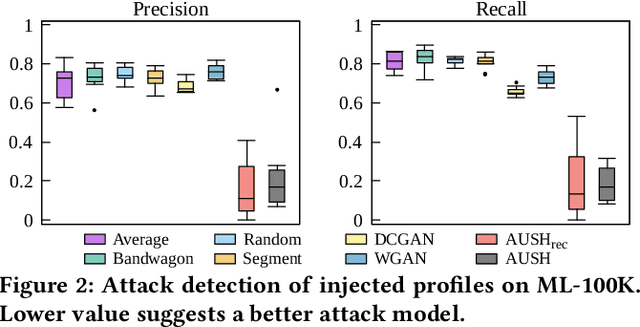

Abstract: Recommendation Systems (RS) have become an essential part of many online services. Due to its pivotal role of guiding customers towards purchasing, there is a natural motivation for unscrupulous parties to spoof RS for profits. In this paper we study the shilling attack: a subsistent and profitable attack where an adversarial party injects a number of user profiles to promote or demote a target item. Conventional shilling attack models are based on simple heuristics that can be easily detected, or directly adopt adversarial attack methods without a special design for RS. Moreover, the study on the attack impact on deep learning based RS is missing in the literature, making the effects of shilling attack against real RS doubtful. We present a novel Augmented Shilling Attack framework (AUSH) and implement it with the idea of Generative Adversarial Network. AUSH is capable of tailoring attacks against RS according to budget and complex attack goals such as targeting on a specific user group. We experimentally show that the attack impact of AUSH is noticeable on a wide range of RS including both classic and modern deep learning based RS, while it is virtually undetectable by the state-of-the-art attack detection model.
