Lexing Zhang

Vehicle Acceleration Prediction Considering Environmental Influence and Individual Driving Behavior

Apr 05, 2025

Abstract: Accurate vehicle acceleration prediction is critical for intelligent driving control and energy efficiency management, particularly in environments with complex driving behavior dynamics. This paper proposes a general short-term vehicle acceleration prediction framework that jointly models environmental influence and individual driving behavior. The framework adopts a dual-input design. Environmental sequences, constructed from historical traffic variables such as percentile-based speed and acceleration statistics of multiple vehicles at specific spatial locations, capture group-level driving behavior influenced by the traffic environment. In parallel, individual driving behavior sequences represent the motion characteristics of the target vehicle prior to the prediction point, reflecting personalized driving styles. These two inputs are processed using an LSTM Seq2Seq model enhanced with an attention mechanism, enabling accurate multi-step acceleration prediction. To demonstrate the effectiveness of the proposed method, an empirical study was conducted using high-resolution radar-video fused trajectory data collected from the exit section of the Guangzhou Baishi Tunnel. Drivers were clustered into three categories (conservative, moderate, and aggressive) based on key behavioral indicators, and a dedicated prediction model was trained for each group to account for driver heterogeneity. Experimental results show that the proposed method consistently outperforms four baseline models, yielding a 10.9% improvement in accuracy with the inclusion of historical traffic variables and a 33% improvement with driver classification. Although prediction errors increase with forecast distance, incorporating environment- and behavior-aware features significantly enhances model robustness.
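As a rough illustration of the percentile-based environmental sequences mentioned in the abstract, the sketch below builds one feature row per spatial location from historical speed observations. The abstract does not specify which percentiles are used, how locations are spaced, or how the data are stored, so the 15th/50th/85th percentiles, the `speeds_by_location` mapping, and both function names are assumptions made for illustration only, not the authors' implementation.

```python
from typing import Dict, List, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """Linearly interpolated percentile of `values`, with q in [0, 100]."""
    if not values:
        raise ValueError("values must be non-empty")
    ordered = sorted(values)
    # Fractional rank into the sorted list, then interpolate between neighbours.
    rank = (len(ordered) - 1) * q / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    frac = rank - lower
    return ordered[lower] + (ordered[upper] - ordered[lower]) * frac


def environmental_sequence(
    speeds_by_location: Dict[float, List[float]],
    percentiles: Sequence[float] = (15.0, 50.0, 85.0),  # assumed choice
) -> List[List[float]]:
    """Return one row of percentile statistics per location.

    Keys of `speeds_by_location` are hypothetical positions along the road
    (e.g. metres from a reference point); rows are ordered by position.
    """
    return [
        [percentile(speeds_by_location[loc], q) for q in percentiles]
        for loc in sorted(speeds_by_location)
    ]


if __name__ == "__main__":
    # Toy historical speeds (m/s) observed at two positions.
    history = {0.0: [10.0, 20.0, 30.0, 40.0, 50.0], 50.0: [12.0, 14.0]}
    for row in environmental_sequence(history):
        print(row)
```

The same function could be applied to acceleration observations, and the resulting rows would form the environmental input sequence alongside the target vehicle's own motion history.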

Part-level Scene Reconstruction Affords Robot Interaction

Aug 01, 2023

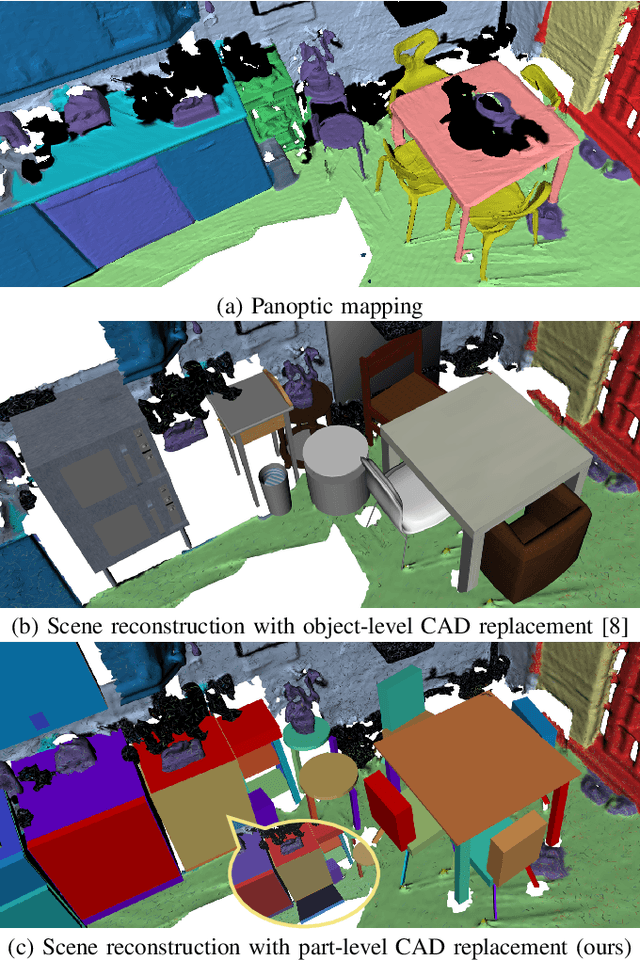

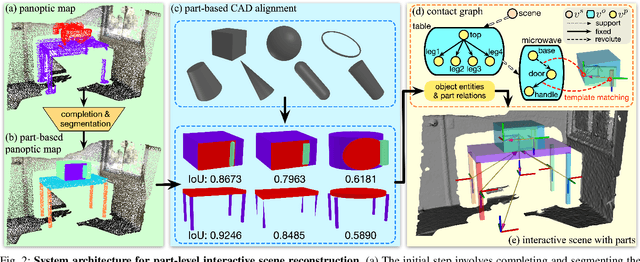

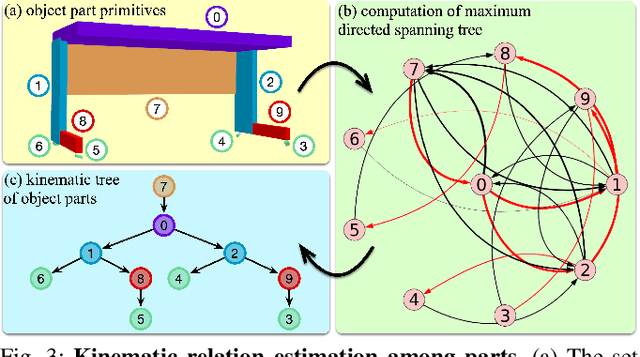

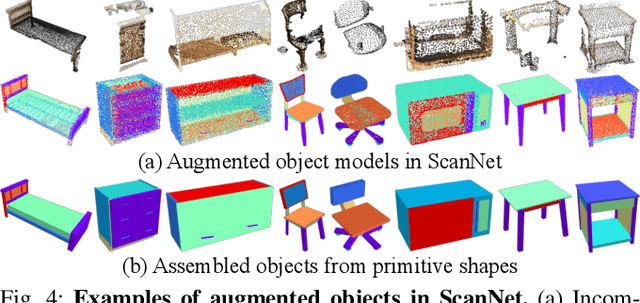

Abstract: Existing methods for reconstructing interactive scenes primarily focus on replacing reconstructed objects with CAD models retrieved from a limited database, resulting in significant discrepancies between the reconstructed and observed scenes. To address this issue, our work introduces a part-level reconstruction approach that reassembles objects using primitive shapes. This enables us to precisely replicate the observed physical scenes and simulate robot interactions with both rigid and articulated objects. By segmenting reconstructed objects into semantic parts and aligning primitive shapes to these parts, we assemble them as CAD models while estimating kinematic relations, including parent-child contact relations, joint types, and parameters. Specifically, we derive the optimal primitive alignment by solving a series of optimization problems, and estimate kinematic relations based on part semantics and geometry. Our experiments demonstrate that part-level scene reconstruction outperforms object-level reconstruction by accurately capturing finer details and improving precision. These reconstructed part-level interactive scenes provide valuable kinematic information for various robotic applications; we showcase the feasibility of certifying mobile manipulation planning in these interactive scenes before executing tasks in the physical world.
