Lena Filatova

AdverX-Ray: Ensuring X-Ray Integrity Through Frequency-Sensitive Adversarial VAEs

Feb 23, 2025

Abstract: Ensuring the quality and integrity of medical images is crucial for maintaining diagnostic accuracy in deep learning-based Computer-Aided Diagnosis and Computer-Aided Detection (CAD) systems. Covariate shifts are subtle variations in the data distribution caused by different imaging devices or settings and can severely degrade model performance, similar to the effects of adversarial attacks. Therefore, it is vital to have a lightweight and fast method to assess the quality of these images prior to using CAD models. AdverX-Ray addresses this need by serving as an image-quality assessment layer, designed to detect covariate shifts effectively. This Adversarial Variational Autoencoder prioritizes the discriminator's role, using the suboptimal outputs of the generator as negative samples to fine-tune the discriminator's ability to identify high-frequency artifacts. Images generated by adversarial networks often exhibit severe high-frequency artifacts, guiding the discriminator to focus excessively on these components. This makes the discriminator ideal for this approach. Trained on patches from X-ray images of specific machine models, AdverX-Ray can evaluate whether a scan matches the training distribution, or if a scan from the same machine is captured under different settings. Extensive comparisons with various OOD detection methods show that AdverX-Ray significantly outperforms existing techniques, achieving a 96.2% average AUROC using only 64 random patches from an X-ray. Its lightweight and fast architecture makes it suitable for real-time applications, enhancing the reliability of medical imaging systems. The code and pretrained models are publicly available.
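As a rough illustration of the patch-based scoring described in the abstract above, the sketch below samples 64 random patches from a scan and averages a per-patch score. The `toy_discriminator` function is a hypothetical stand-in (mean absolute horizontal pixel difference as a crude high-frequency measure), not the AdverX-Ray discriminator. The patch size, the seed, and the choice of a plain average are likewise assumptions made for illustration.

```python
import random


def sample_patches(image, patch_size, num_patches, rng):
    """Crop `num_patches` random square patches from a 2D list-of-lists image."""
    height, width = len(image), len(image[0])
    patches = []
    for _ in range(num_patches):
        top = rng.randrange(height - patch_size + 1)
        left = rng.randrange(width - patch_size + 1)
        patches.append(
            [row[left:left + patch_size] for row in image[top:top + patch_size]]
        )
    return patches


def toy_discriminator(patch):
    """Hypothetical stand-in score: mean absolute horizontal pixel difference."""
    total, count = 0.0, 0
    for row in patch:
        for a, b in zip(row, row[1:]):
            total += abs(a - b)
            count += 1
    return total / count


def scan_score(image, score_patch, patch_size=8, num_patches=64, seed=0):
    """Average a per-patch score over randomly sampled patches of one scan."""
    rng = random.Random(seed)
    patches = sample_patches(image, patch_size, num_patches, rng)
    return sum(score_patch(p) for p in patches) / len(patches)
```

In a real pipeline the per-patch score would come from a trained model, and a threshold on the aggregated score would be chosen on held-out in-distribution scans.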

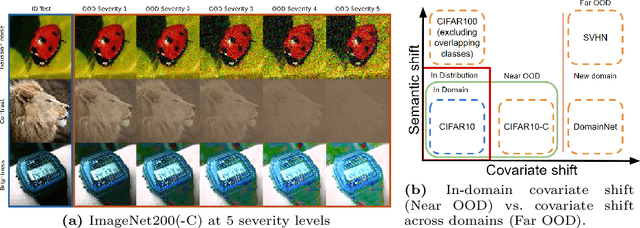

Can Your Generative Model Detect Out-of-Distribution Covariate Shift?

Sep 04, 2024

Abstract: Detecting Out-of-Distribution (OOD) sensory data and covariate distribution shift aims to identify new test examples with different high-level image statistics to the captured, normal and In-Distribution (ID) set. Existing OOD detection literature largely focuses on semantic shift with little-to-no consensus over covariate shift. Generative models capture the ID data in an unsupervised manner, enabling them to effectively identify samples that deviate significantly from this learned distribution, irrespective of the downstream task. In this work, we elucidate the ability of generative models to detect and quantify domain-specific covariate shift through extensive analyses that involve a variety of models. To this end, we conjecture that it is sufficient to detect most occurring sensory faults (anomalies and deviations in global signal statistics) by solely modeling high-frequency signal-dependent and independent details. We propose a novel method, CovariateFlow, for OOD detection, specifically tailored to covariate heteroscedastic high-frequency image components using conditional Normalizing Flows (cNFs). Our results on CIFAR10 vs. CIFAR10-C and ImageNet200 vs. ImageNet200-C demonstrate the effectiveness of the method by accurately detecting OOD covariate shift. This work contributes to enhancing the fidelity of imaging systems and aiding machine learning models in OOD detection in the presence of covariate shift.
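To make the idea of isolating high-frequency image components concrete, here is a minimal sketch that splits an image into a low-frequency part (a box blur) and a high-frequency residual. The box filter, its radius, and the function names are assumptions chosen for illustration. The abstract does not specify how CovariateFlow separates frequencies, and this sketch does not implement a normalizing flow.

```python
def box_blur(image, radius=1):
    """Low-pass filter: mean over a (2*radius+1)^2 window, truncated at borders."""
    height, width = len(image), len(image[0])
    blurred = []
    for r in range(height):
        row = []
        for c in range(width):
            r0, r1 = max(0, r - radius), min(height, r + radius + 1)
            c0, c1 = max(0, c - radius), min(width, c + radius + 1)
            window = [image[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def split_frequencies(image, radius=1):
    """Return (low, high) where high is the residual image minus its blur."""
    low = box_blur(image, radius)
    high = [
        [pixel - smooth for pixel, smooth in zip(image_row, low_row)]
        for image_row, low_row in zip(image, low)
    ]
    return low, high
```

A density model could then be fit to the `high` residual, optionally conditioned on `low`, so that corruptions such as noise or blur show up as unlikely high-frequency content.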

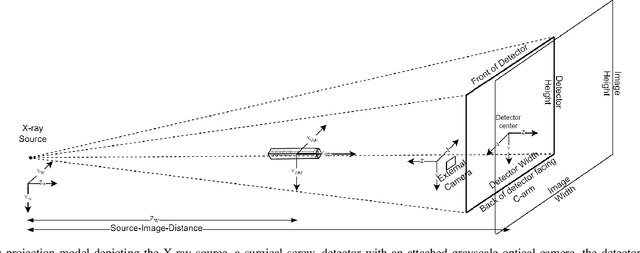

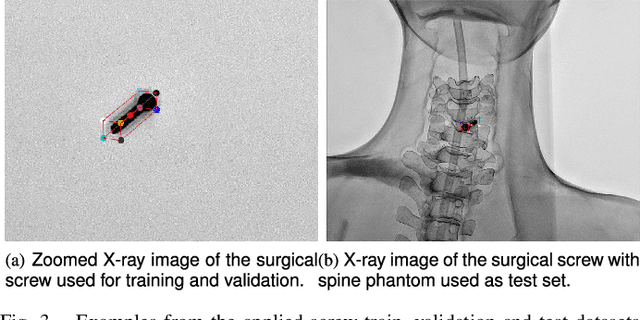

Advancing 6-DoF Instrument Pose Estimation in Variable X-Ray Imaging Geometries

May 19, 2024

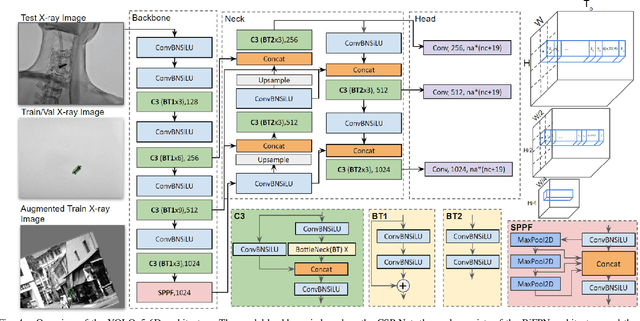

Abstract: Accurate 6-DoF pose estimation of surgical instruments during minimally invasive surgeries can substantially improve treatment strategies and eventual surgical outcome. Existing deep learning methods have achieved accurate results, but they require custom approaches for each object and laborious setup and training environments often stretching to extensive simulations, whilst lacking real-time computation. We propose a general-purpose approach of data acquisition for 6-DoF pose estimation tasks in X-ray systems, a novel and general-purpose YOLOv5-6D pose architecture for accurate and fast object pose estimation and a complete method for surgical screw pose estimation under acquisition geometry consideration from a monocular cone-beam X-ray image. The proposed YOLOv5-6D pose model achieves competitive results on public benchmarks whilst being considerably faster at 42 FPS on GPU. In addition, the method generalizes across varying X-ray acquisition geometry and semantic image complexity to enable accurate pose estimation over different domains. Finally, the proposed approach is tested for bone-screw pose estimation for computer-aided guidance during spine surgeries. The model achieves 92.41% on the 0.1 ADD-S metric, demonstrating a promising approach for enhancing surgical precision and patient outcomes. The code for YOLOv5-6D is publicly available at https://github.com/cviviers/YOLOv5-6D-Pose

* Early author version of paper. Refer to the full paper at https://ieeexplore.ieee.org/document/10478293
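For readers unfamiliar with the ADD-S metric quoted in the abstract above, the following sketch computes it from a set of model points and two poses. It assumes the common convention that a pose counts as correct when ADD-S is below 0.1 times the model diameter; the abstract does not spell out the threshold definition, and all function names here are illustrative.

```python
import math


def transform(points, rotation, translation):
    """Apply a 3x3 rotation (nested lists) and a translation to 3D points."""
    return [
        tuple(
            sum(rotation[i][k] * p[k] for k in range(3)) + translation[i]
            for i in range(3)
        )
        for p in points
    ]


def add_s(points, rot_gt, t_gt, rot_pred, t_pred):
    """Mean distance from each ground-truth point to its closest predicted point."""
    gt = transform(points, rot_gt, t_gt)
    pred = transform(points, rot_pred, t_pred)
    return sum(min(math.dist(g, p) for p in pred) for g in gt) / len(gt)


def model_diameter(points):
    """Largest pairwise distance between model points."""
    return max(math.dist(a, b) for a in points for b in points)


def is_correct(points, rot_gt, t_gt, rot_pred, t_pred, threshold=0.1):
    """Assumed convention: correct if ADD-S < threshold * model diameter."""
    error = add_s(points, rot_gt, t_gt, rot_pred, t_pred)
    return error < threshold * model_diameter(points)
```

Because each point is matched to its nearest neighbour rather than to itself, the metric does not penalize rotations that map a symmetric object onto itself, which is relevant for objects such as screws.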

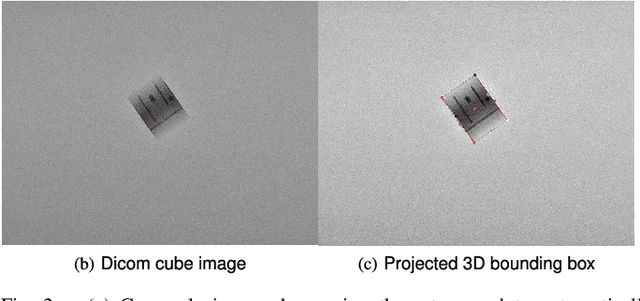

Towards real-time 6D pose estimation of objects in single-view cone-beam X-ray

Nov 06, 2022

Abstract: Deep learning-based pose estimation algorithms can successfully estimate the pose of objects in an image, especially in the field of color images. 6D object pose estimation based on deep learning models for X-ray images often uses custom architectures that employ extensive CAD models and simulated data for training purposes. Recent RGB-based methods opt to solve pose estimation problems using small datasets, making them more attractive for the X-ray domain where medical data is scarcely available. We refine an existing RGB-based model (SingleShotPose) to estimate the 6D pose of a marked cube from grayscale X-ray images by creating a generic solution trained on only real X-ray data and adjusted for X-ray acquisition geometry. The model regresses 2D control points and calculates the pose through 2D/3D correspondences using Perspective-n-Point (PnP), allowing a single trained model to be used across all supporting cone-beam-based X-ray geometries. Since modern X-ray systems continuously adjust acquisition parameters during a procedure, it is essential for such a pose estimation network to consider these parameters in order to be deployed successfully and find a real use case. With a 5-cm/5-degree accuracy of 93% and an average 3D rotation error of 2.2 degrees, the results of the proposed approach are comparable with state-of-the-art alternatives, while requiring significantly fewer real training examples and being applicable in real-time applications.
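The two evaluation figures in the abstract above (5-cm/5-degree accuracy and 3D rotation error) can be sketched as follows. This is a minimal illustration that assumes translations are expressed in centimetres and that the rotation error is the geodesic angle between the two rotation matrices. It covers only the evaluation step, not the control-point regression or the PnP solve, and the function names are hypothetical.

```python
import math


def rotation_error_deg(rot_gt, rot_pred):
    """Geodesic angle in degrees between two 3x3 rotation matrices."""
    # trace(R_gt^T R_pred) equals the sum of elementwise products.
    trace = sum(rot_gt[i][j] * rot_pred[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def translation_error_cm(t_gt, t_pred):
    """Euclidean distance between translations (assumed to be in centimetres)."""
    return math.dist(t_gt, t_pred)


def within_5cm_5deg(rot_gt, t_gt, rot_pred, t_pred):
    """True when both the translation and the rotation error are under 5."""
    return (
        translation_error_cm(t_gt, t_pred) < 5.0
        and rotation_error_deg(rot_gt, rot_pred) < 5.0
    )


def rot_z(angle_deg):
    """Helper for the example: rotation about the z-axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
```

The reported accuracy would then be the fraction of test images for which `within_5cm_5deg` holds.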
