Can Your Generative Model Detect Out-of-Distribution Covariate Shift?

Paper and Code

Sep 04, 2024

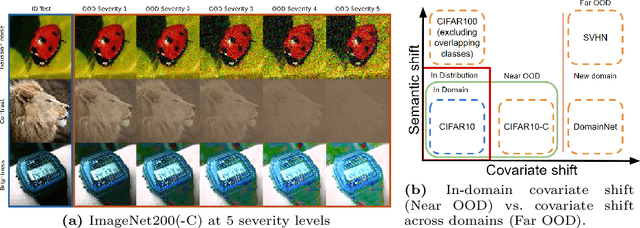

Detecting Out-of-Distribution~(OOD) sensory data and covariate distribution shift aims to identify new test examples with different high-level image statistics to the captured, normal and In-Distribution (ID) set. Existing OOD detection literature largely focuses on semantic shift with little-to-no consensus over covariate shift. Generative models capture the ID data in an unsupervised manner, enabling them to effectively identify samples that deviate significantly from this learned distribution, irrespective of the downstream task. In this work, we elucidate the ability of generative models to detect and quantify domain-specific covariate shift through extensive analyses that involves a variety of models. To this end, we conjecture that it is sufficient to detect most occurring sensory faults (anomalies and deviations in global signals statistics) by solely modeling high-frequency signal-dependent and independent details. We propose a novel method, CovariateFlow, for OOD detection, specifically tailored to covariate heteroscedastic high-frequency image-components using conditional Normalizing Flows (cNFs). Our results on CIFAR10 vs. CIFAR10-C and ImageNet200 vs. ImageNet200-C demonstrate the effectiveness of the method by accurately detecting OOD covariate shift. This work contributes to enhancing the fidelity of imaging systems and aiding machine learning models in OOD detection in the presence of covariate shift.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge