Le Mao

SRLM: Human-in-Loop Interactive Social Robot Navigation with Large Language Model and Deep Reinforcement Learning

Mar 22, 2024

Abstract: An interactive social robotic assistant must provide services in complex and crowded spaces while adapting its behavior based on real-time human language commands or feedback. In this paper, we propose a novel hybrid approach called Social Robot Planner (SRLM), which integrates Large Language Models (LLM) and Deep Reinforcement Learning (DRL) to navigate through human-filled public spaces and provide multiple social services. SRLM infers a global plan from human-in-the-loop commands in real time, and encodes social information into an LLM-based large navigation model (LNM) for low-level motion execution. Moreover, a DRL-based planner is designed to maintain benchmark performance, and it is blended with the LNM by a large feedback model (LFM) to address the instability of the current text- and LLM-driven LNM. Finally, SRLM demonstrates outstanding performance in extensive experiments. More details about this work are available at: https://sites.google.com/view/navi-srlm

Hyper-STTN: Social Group-aware Spatial-Temporal Transformer Network for Human Trajectory Prediction with Hypergraph Reasoning

Jan 12, 2024

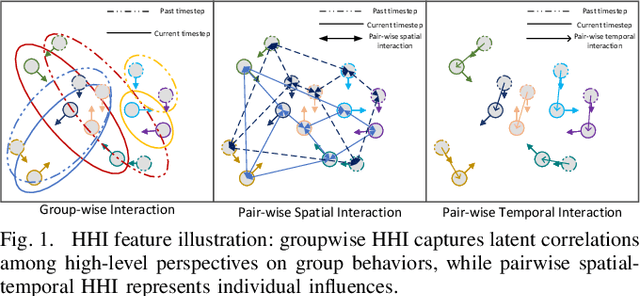

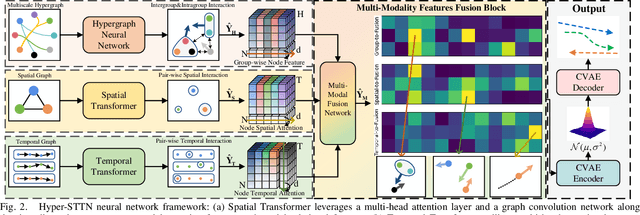

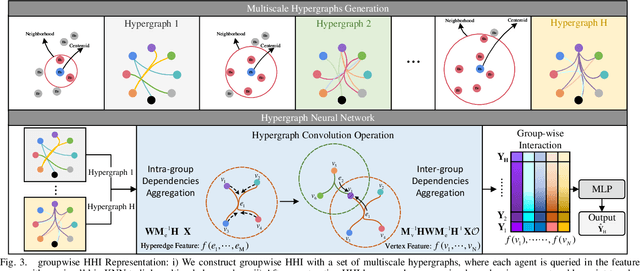

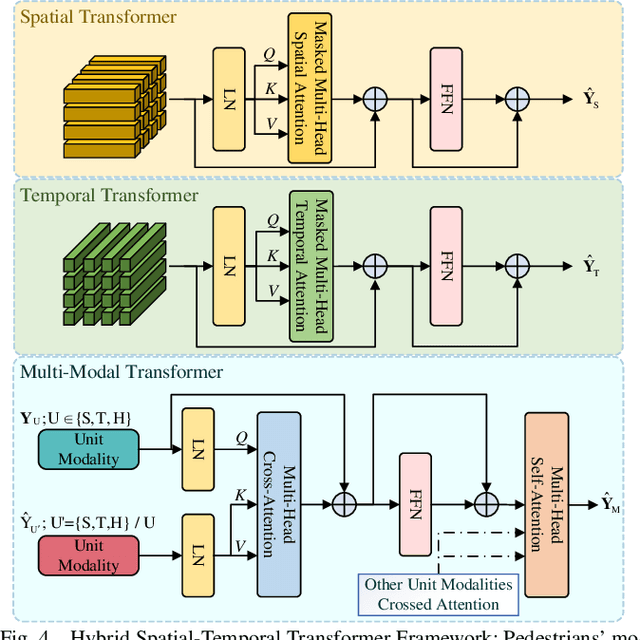

Abstract: Predicting crowd intents and trajectories is crucial in various real-world applications, including service robots and autonomous vehicles. Understanding environmental dynamics is challenging, not only due to the complexities of modeling pair-wise spatial and temporal interactions but also the diverse influence of group-wise interactions. To decode the comprehensive pair-wise and group-wise interactions in crowded scenarios, we introduce Hyper-STTN, a Hypergraph-based Spatial-Temporal Transformer Network for crowd trajectory prediction. In Hyper-STTN, crowded group-wise correlations are constructed using a set of multi-scale hypergraphs with varying group sizes, captured through random-walk probability-based hypergraph spectral convolution. Additionally, a spatial-temporal transformer is adapted to capture pedestrians' pair-wise latent interactions in spatial-temporal dimensions. These heterogeneous group-wise and pair-wise features are then fused and aligned through a multimodal transformer network. Hyper-STTN outperforms other state-of-the-art baselines and ablation models on five real-world pedestrian motion datasets.
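As a rough illustration of the random-walk probabilities that a hypergraph spectral convolution can build on, the sketch below computes the standard hypergraph random-walk transition matrix P = D_v^{-1} H W D_e^{-1} H^T for a toy crowd. It is not the Hyper-STTN implementation: the function name `random_walk_transition`, the four-pedestrian example, and the unit group weights are all assumptions made for illustration.

```python
def random_walk_transition(incidence, edge_weights):
    """Hypergraph random-walk transition matrix P = Dv^-1 H W De^-1 H^T.

    incidence[v][e] is 1 if pedestrian v belongs to group (hyperedge) e, else 0.
    Assumes every pedestrian belongs to at least one group and every group
    is non-empty, so no degree is zero.
    """
    n = len(incidence)
    m = len(edge_weights)
    # Hyperedge degree: number of pedestrians in each group.
    edge_degree = [sum(incidence[v][e] for v in range(n)) for e in range(m)]
    # Vertex degree: total weight of the groups each pedestrian belongs to.
    vertex_degree = [
        sum(edge_weights[e] * incidence[v][e] for e in range(m)) for v in range(n)
    ]
    P = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for e in range(m):
                if incidence[u][e] and incidence[v][e]:
                    # Pick group e with prob. w(e)/d(u), then a member uniformly.
                    total += edge_weights[e] / edge_degree[e]
            P[u][v] = total / vertex_degree[u]
    return P


# Hypothetical toy scene: pedestrians 0, 1, 2 form one group; 2 and 3 form another.
H = [
    [1, 0],
    [1, 0],
    [1, 1],
    [0, 1],
]
P = random_walk_transition(H, [1.0, 1.0])
```

Each row of `P` sums to one, and pedestrians that share no group (here 0 and 3) get zero one-step transition probability, which is how group membership shapes the resulting convolution operator.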

Multi-Robot Cooperative Socially-Aware Navigation Using Multi-Agent Reinforcement Learning

Sep 26, 2023

Abstract: In public spaces shared with humans, ensuring multi-robot systems navigate without collisions while respecting social norms is challenging, particularly with limited communication. Although current robot social navigation techniques leverage advances in reinforcement learning and deep learning, they frequently overlook robot dynamics in simulations, leading to a simulation-to-reality gap. In this paper, we bridge this gap by presenting a new multi-robot social navigation environment crafted using Dec-POSMDP and multi-agent reinforcement learning. Furthermore, we introduce SAMARL: a novel benchmark for cooperative multi-robot social navigation. SAMARL employs a unique spatial-temporal transformer combined with multi-agent reinforcement learning. This approach effectively captures the complex interactions between robots and humans, thus promoting cooperative tendencies in multi-robot systems. Our extensive experiments reveal that SAMARL outperforms existing baseline and ablation models in our designed environment. Demo videos for this work can be found at: https://sites.google.com/view/samarl

NaviSTAR: Socially Aware Robot Navigation with Hybrid Spatio-Temporal Graph Transformer and Preference Learning

Apr 12, 2023

Abstract: Developing robotic technologies for use in human society requires ensuring the safety of robots' navigation behaviors while adhering to pedestrians' expectations and social norms. However, maintaining real-time communication between robots and pedestrians to avoid collisions can be challenging. To address these challenges, we propose a novel socially-aware navigation benchmark called NaviSTAR, which utilizes a hybrid Spatio-Temporal grAph tRansformer (STAR) to understand interactions in human-rich environments by fusing potential multi-modal crowd information. We leverage an off-policy reinforcement learning algorithm with preference learning to train a policy and a reward function network with supervisor guidance. Additionally, we design a social score function to evaluate the overall performance of social navigation. For comparison, we train and test our algorithm and other state-of-the-art methods independently in both simulator and real-world scenarios. Our results show that NaviSTAR outperforms previous methods. The source code and experiment videos of this work are available at: https://sites.google.com/view/san-navistar
