Laurent Facq

Enhancing Cell Instance Segmentation in Scanning Electron Microscopy Images via a Deep Contour Closing Operator

Jul 22, 2024

Abstract: Accurately segmenting and individualizing cells in SEM images is a highly promising technique for elucidating tissue architecture in oncology. While current AI-based methods are effective, errors persist, necessitating time-consuming manual corrections, particularly in areas where the quality of cell contours in the image is poor and requires gap filling. This study presents a novel AI-driven approach for refining cell boundary delineation to improve instance-based cell segmentation in SEM images, while also reducing the need for residual manual correction. A CNN, COp-Net, is introduced to address gaps in cell contours, effectively filling in regions with deficient or absent information. The network takes as input cell contour probability maps with potentially inadequate or missing information and outputs corrected cell contour delineations. The lack of training data was addressed by generating low-integrity probability maps using a tailored PDE. We showcase the efficacy of our approach in augmenting cell boundary precision using both private SEM images from PDX hepatoblastoma tissues and publicly accessible image datasets. The proposed cell contour closing operator yields a notable improvement on the tested datasets, achieving increases of close to 50% (private data) and 10% (public data), respectively, in the proportion of accurately delineated cells compared to state-of-the-art methods. Additionally, the need for manual corrections was significantly reduced, thereby facilitating the overall digitalization process. Our results demonstrate a notable enhancement in the accuracy of cell instance segmentation, particularly in highly challenging regions where image quality compromises the integrity of cell boundaries, necessitating gap filling. Therefore, our work should ultimately facilitate the study of tumour tissue bioarchitecture in the field of onconanotomy.

Adaptive local boundary conditions to improve Deformable Image Registration

May 21, 2024

Abstract: Objective: In medical imaging, it is often crucial to accurately assess and correct movement during image-guided therapy. Deformable image registration (DIR) consists of estimating the spatial transformation required to align a moving image with a fixed one. However, it is acknowledged that the boundary conditions applied to the solution are critical in preventing mis-registration. Despite extensive research on registration techniques, relatively few studies have addressed the issue of boundary conditions in the context of medical DIR. Our aim is to take a step towards customizing boundary conditions to suit the diverse registration tasks at hand. Approach: We propose a generic, locally adaptive, Robin-type condition that balances between Dirichlet and Neumann boundary conditions, depending on incoming/outgoing flow fields on the image boundaries. The proposed framework is entirely automated through the determination of a reduced set of hyperparameters optimized via energy minimization. Main results: The proposed approach was tested on a mono-modal CT thorax registration task and an abdominal CT to MRI registration task. For the first task, we observed a relative improvement in terms of target registration error of up to 12% (mean 4%), compared to homogeneous Dirichlet and homogeneous Neumann conditions. For the second task, the automatic framework provides results close to the best achievable. Significance: This study underscores the importance of tailoring the registration problem at the image boundaries. In this research, we introduce a novel method to adapt the boundary conditions on a voxel-by-voxel basis, yielding optimized results in two distinct tasks: mono-modal CT thorax registration and abdominal CT to MRI registration. The proposed framework enables optimized boundary conditions in image registration without any a priori assumptions regarding the images or the motion.
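
For readers unfamiliar with the terminology, a Robin-type condition can be written generically as a weighted combination of the Dirichlet and Neumann conditions. The form below is a textbook-style sketch, not necessarily the formulation used in the paper: the per-voxel weight $\alpha(x)$ and the symbol $u$ for the displacement field are notational assumptions, and how the weight is derived from incoming/outgoing flow is not specified in the abstract.

```latex
% Generic Robin-type boundary condition on the image boundary \partial\Omega.
% u: displacement field, n: outward unit normal,
% \alpha(x) \in [0,1]: hypothetical local weight (assumed notation).
\alpha(x)\, u(x) + \bigl(1 - \alpha(x)\bigr)\, \frac{\partial u}{\partial n}(x) = 0,
\qquad x \in \partial\Omega .
% \alpha(x) = 1 recovers homogeneous Dirichlet: u(x) = 0
% \alpha(x) = 0 recovers homogeneous Neumann:   \partial u / \partial n (x) = 0
```

Letting the weight vary from one boundary voxel to the next is what makes such a condition locally adaptive.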

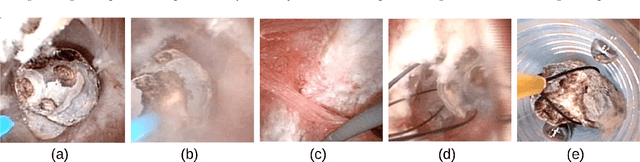

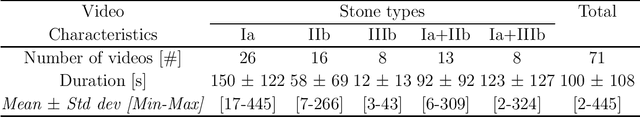

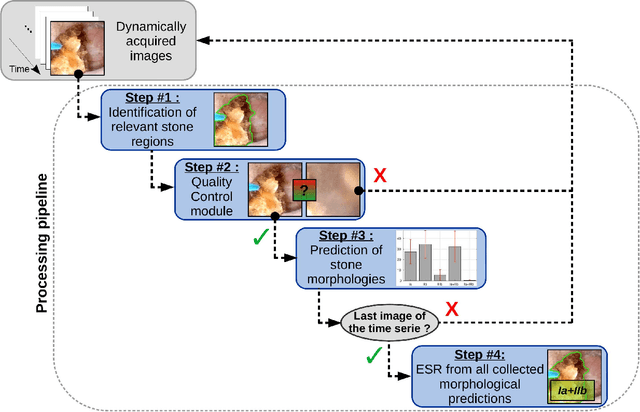

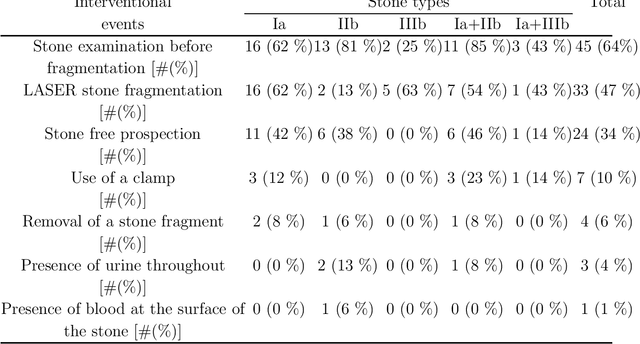

Deep morphological recognition of kidney stones using intra-operative endoscopic digital videos

May 12, 2022

Abstract: The collection and analysis of kidney stone morphological criteria are essential for an aetiological diagnosis of stone disease. However, in-situ laser-based fragmentation of urinary stones, which is now the most established surgical intervention, may destroy the morphology of the targeted stone. In the current study, we assess the performance and added value of processing complete digital endoscopic video sequences for the automatic recognition of stone morphological features during a standard-of-care intra-operative session. To this end, a computer-aided video classifier was developed to predict in-situ the morphology of the stone using an intra-operative digital endoscopic video acquired in a clinical setting. The proposed technique was evaluated on pure (i.e. including a single morphology) and mixed (i.e. including at least two morphologies) stones involving "Ia/Calcium Oxalate Monohydrate (COM)", "IIb/Calcium Oxalate Dihydrate (COD)" and "IIIb/Uric Acid (UA)" morphologies. 71 digital endoscopic videos (50 exhibited only one morphological type and 21 displayed two) were analyzed using the proposed video classifier (56840 frames processed in total). Using the proposed approach, diagnostic performances (averaged over both pure and mixed stone types) were as follows: balanced accuracy=88%, sensitivity=80%, specificity=95%, precision=78% and F1-score=78%. The obtained results demonstrate that AI applied to digital endoscopic video sequences is a promising tool for collecting morphological information over the time-course of the stone fragmentation process without resorting to any human intervention for stone delineation or selection of good-quality steady frames. To this end, irrelevant image information must be removed from the prediction process at both frame and pixel levels, which is now feasible thanks to the use of AI-dedicated networks.
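
As a reminder of how the reported diagnostic metrics relate to one another, the following minimal sketch computes them from the confusion counts of a single class. It uses the standard definitions only; the `BinaryCounts` type, the function name, and the example counts are hypothetical and do not come from the study, whose per-class counts and averaging procedure are not given in the abstract.

```python
from dataclasses import dataclass


@dataclass
class BinaryCounts:
    """Confusion counts for one class treated as 'positive' (hypothetical type)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def diagnostic_metrics(c: BinaryCounts) -> dict:
    """Standard one-vs-rest diagnostic metrics for a single class."""
    sensitivity = c.tp / (c.tp + c.fn)   # recall / true positive rate
    specificity = c.tn / (c.tn + c.fp)   # true negative rate
    precision = c.tp / (c.tp + c.fp)     # positive predictive value
    balanced_accuracy = (sensitivity + specificity) / 2
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "balanced_accuracy": balanced_accuracy,
        "f1": f1,
    }


# Illustrative counts only (not data from the study).
example = diagnostic_metrics(BinaryCounts(tp=8, fp=1, tn=19, fn=2))
```

Because balanced accuracy is the mean of sensitivity and specificity, the reported averages are mutually consistent: (80% + 95%) / 2 = 87.5%, which rounds to the stated 88%.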

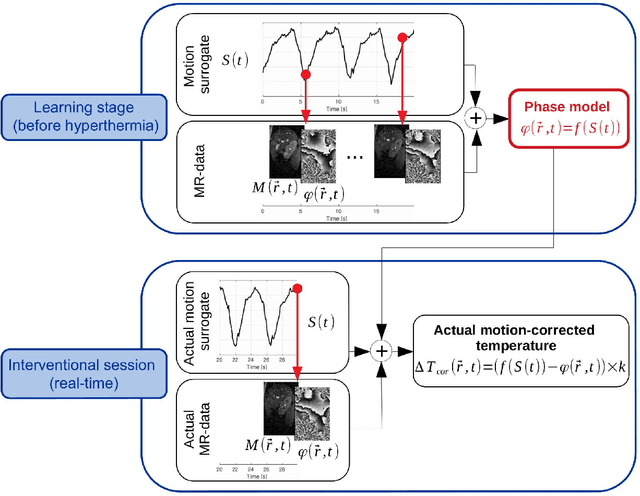

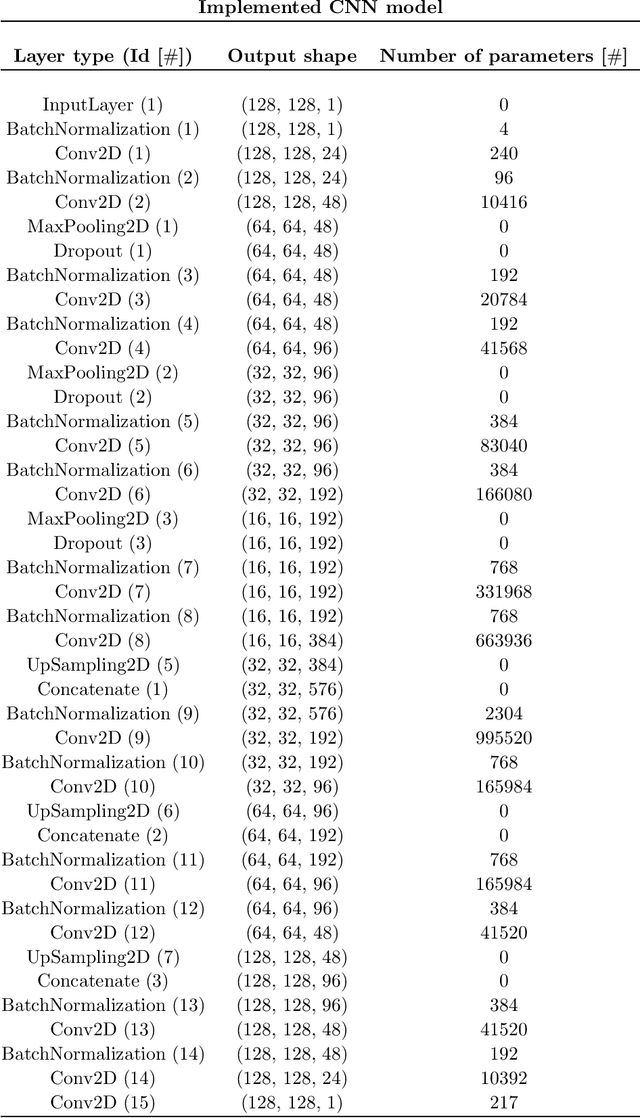

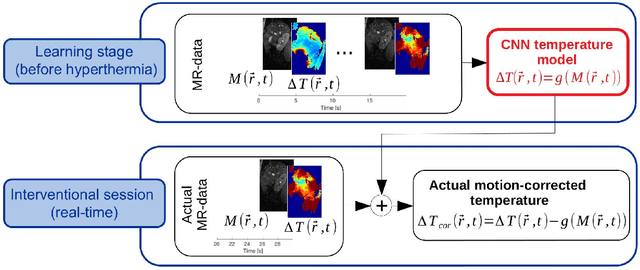

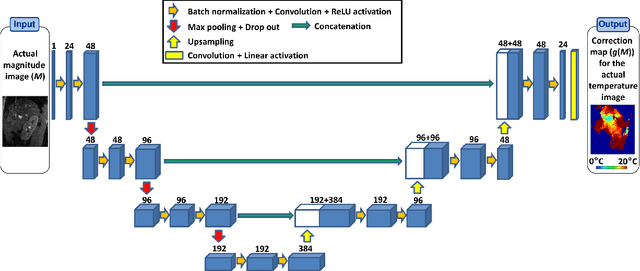

Deep correction of breathing-related artifacts in MR-thermometry

Nov 10, 2020

Abstract: Real-time MR-imaging has been clinically adapted for monitoring thermal therapies since it can provide on-the-fly temperature maps simultaneously with anatomical information. However, proton resonance frequency based thermometry of moving targets remains challenging since temperature artifacts are induced by respiratory as well as physiological motion. If left uncorrected, these artifacts lead to severe errors in temperature estimates and impair therapy guidance. In this study, we evaluated deep learning for on-line correction of motion-related errors in abdominal MR-thermometry. For this, a convolutional neural network (CNN) was designed to learn the apparent temperature perturbation from images acquired during a preparative learning stage prior to hyperthermia. The input of the designed CNN is the most recent magnitude image and no surrogate of motion is needed. During the subsequent hyperthermia procedure, the most recent magnitude image is used as an input for the CNN model in order to generate an on-line correction for the current temperature map. The method's artifact suppression performance was evaluated on 12 free-breathing volunteers and was found robust and artifact-free in all examined cases. Furthermore, thermometric precision and accuracy were assessed for in vivo ablation using high-intensity focused ultrasound. All calculations involved at the different stages of the proposed workflow were designed to be compatible with the clinical time constraints of a therapeutic procedure.
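
To make the correction step concrete, here is a minimal sketch of the standard proton resonance frequency (PRF) shift relation followed by an artifact correction. Several things are assumptions and not details from the study: the function names, the commonly quoted coefficient of about -0.01 ppm/°C, the flat-list representation of a temperature map, and the choice of a simple voxel-wise subtraction of the predicted perturbation. The CNN itself is not modelled; its output is taken as a given input.

```python
import math

GAMMA_HZ_PER_T = 42.576e6   # proton gyromagnetic ratio / (2*pi), in Hz per tesla
ALPHA_PER_C = -0.01e-6      # assumed PRF thermal coefficient (-0.01 ppm per deg C)


def prf_temperature_change(delta_phase_rad, b0_tesla, echo_time_s):
    """Temperature change (deg C) from a phase difference, standard PRF relation."""
    return delta_phase_rad / (
        2 * math.pi * GAMMA_HZ_PER_T * ALPHA_PER_C * b0_tesla * echo_time_s
    )


def correct_temperature_map(apparent_map, predicted_perturbation):
    """Subtract a predicted apparent-temperature perturbation, voxel by voxel.

    Both arguments are flat lists of deg C values. In this sketch the
    perturbation is assumed to come from a trained model fed with the
    most recent magnitude image.
    """
    if len(apparent_map) != len(predicted_perturbation):
        raise ValueError("maps must have the same number of voxels")
    return [t - p for t, p in zip(apparent_map, predicted_perturbation)]


# Illustrative values only: at 3 T and TE = 10 ms, heating produces a negative phase shift.
dT = prf_temperature_change(delta_phase_rad=-0.08, b0_tesla=3.0, echo_time_s=0.01)
corrected = correct_temperature_map([1.5, -2.0, 0.3], [1.5, -2.0, 0.0])
```

With a perfectly predicted perturbation, unheated voxels return to zero apparent temperature change while any genuine heating is preserved.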
