Laurence Lovat

Intrapapillary Capillary Loop Classification in Magnification Endoscopy: Open Dataset and Baseline Methodology

Feb 19, 2021

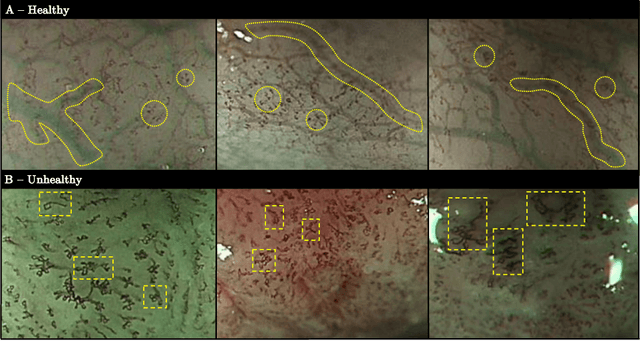

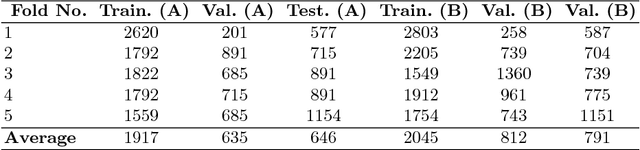

Abstract: Purpose. Early squamous cell neoplasia (ESCN) in the oesophagus is a highly treatable condition. Lesions confined to the mucosal layer can be curatively treated endoscopically. We build a computer-assisted detection (CADe) system that can classify still images or video frames as normal or abnormal with high diagnostic accuracy. Methods. We present a new benchmark dataset containing 68K binary labeled frames extracted from 114 patient videos whose imaged areas have been resected and correlated to histopathology. Our novel convolutional network (CNN) architecture solves the binary classification task and explains what features of the input domain drive the decision-making process of the network. Results. The proposed method achieved an average accuracy of 91.7% compared to the 94.7% achieved by a group of 12 senior clinicians. Our novel network architecture produces deeply supervised activation heatmaps that suggest the network is looking at intrapapillary capillary loop (IPCL) patterns when predicting abnormality. Conclusion. We believe that this dataset and baseline method may serve as a reference for future benchmarks on both video frame classification and explainability in the context of ESCN detection. A future work path of high clinical relevance is the extension of the classification to ESCN types.

Detecting small polyps using a Dynamic SSD-GAN

Oct 29, 2020

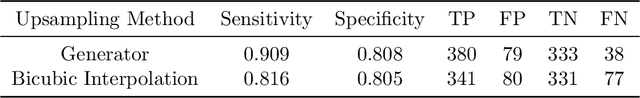

Abstract: Endoscopic examinations are used to inspect the throat, stomach and bowel for polyps which could develop into cancer. Machine learning systems can be trained to process colonoscopy images and detect polyps. However, these systems tend to perform poorly on objects which appear visually small in the images. It is shown here that combining the single-shot detector as a region proposal network with an adversarially-trained generator to upsample small region proposals can significantly improve the detection of visually-small polyps. The Dynamic SSD-GAN pipeline introduced in this paper achieved a 12% increase in sensitivity on visually-small polyps compared to a conventional FCN baseline.

Interpretable Fully Convolutional Classification of Intrapapillary Capillary Loops for Real-Time Detection of Early Squamous Neoplasia

May 02, 2018

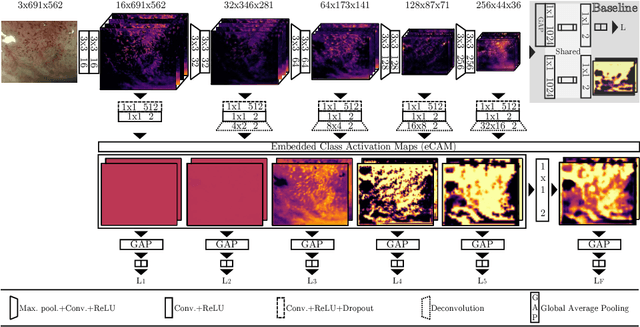

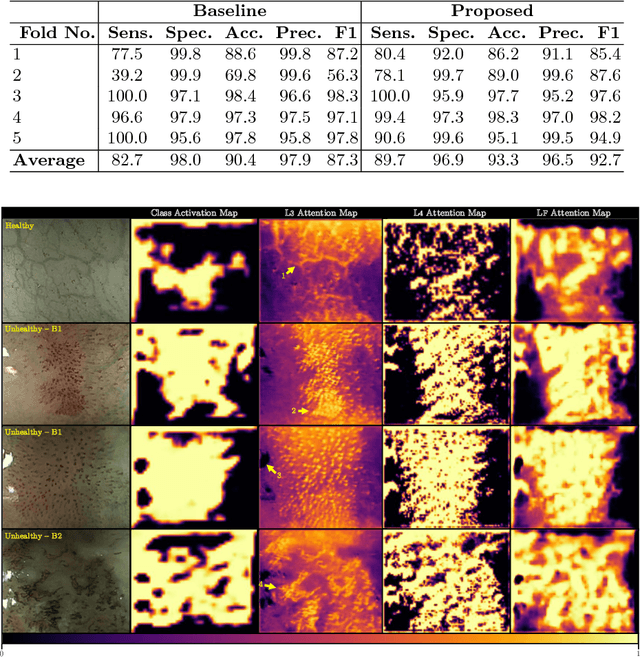

Abstract: In this work, we have concentrated our efforts on the interpretability of classification results coming from a fully convolutional neural network. Motivated by the classification of oesophageal tissue for real-time detection of early squamous neoplasia, the most frequent kind of oesophageal cancer in Asia, we present a new dataset and a novel deep learning method that by means of deep supervision and a newly introduced concept, the embedded Class Activation Map (eCAM), focuses on the interpretability of results as a design constraint of a convolutional network. We present a new approach to visualise attention that aims to give some insights on those areas of the oesophageal tissue that lead a network to conclude that the images belong to a particular class and compare them with those visual features employed by clinicians to produce a clinical diagnosis. In comparison to a baseline method which does not feature deep supervision but provides attention by grafting Class Activation Maps, we improve the F1-score from 87.3% to 92.7% and provide more detailed attention maps.
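
For readers unfamiliar with the Class Activation Maps mentioned as the baseline above, the following is a minimal sketch of the standard CAM computation: a weighted sum of the final convolutional feature maps, using the classifier weights of one class. It is not the eCAM method of the paper, whose details the abstract does not give. The function name `class_activation_map`, the nested-list data layout, and the min-max normalisation are assumptions made purely for illustration.

```python
def class_activation_map(features, class_weights):
    """Standard CAM sketch (illustrative, not the paper's eCAM).

    features:      list of C feature maps, each an H x W nested list
                   (assumed output of the last convolutional layer).
    class_weights: list of C weights connecting the globally pooled
                   channels to one class score (assumed layout).
    Returns an H x W heatmap rescaled to the range [0, 1].
    """
    channels = len(features)
    height = len(features[0])
    width = len(features[0][0])

    # Weighted sum over channels at every spatial position.
    cam = [
        [
            sum(class_weights[c] * features[c][y][x] for c in range(channels))
            for x in range(width)
        ]
        for y in range(height)
    ]

    # Min-max normalisation so the map can be overlaid on the image.
    lowest = min(min(row) for row in cam)
    highest = max(max(row) for row in cam)
    span = highest - lowest
    if span == 0:
        # A flat map carries no localisation information.
        return [[0.0] * width for _ in range(height)]
    return [[(value - lowest) / span for value in row] for row in cam]


# Toy example: two 2x2 feature maps, only the first one matters for the class.
toy_features = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
toy_heatmap = class_activation_map(toy_features, [2.0, 0.0])
```

In practice the heatmap would be upsampled to the input resolution and overlaid on the endoscopic frame; that step is omitted here.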
