Interpretable Fully Convolutional Classification of Intrapapillary Capillary Loops for Real-Time Detection of Early Squamous Neoplasia


May 02, 2018

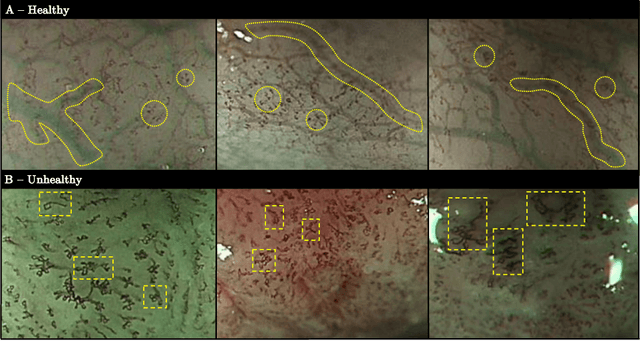

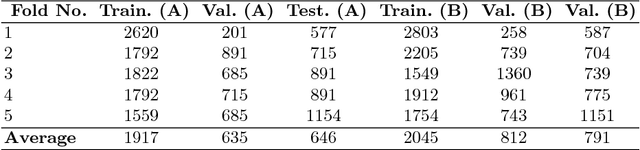

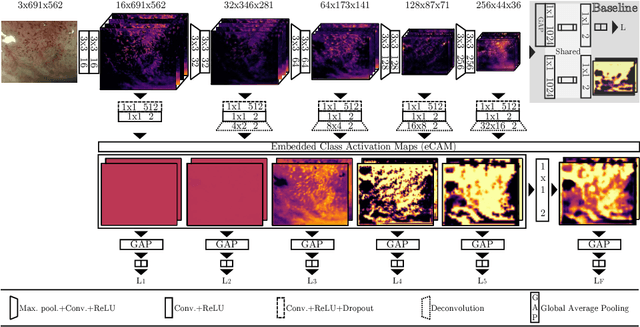

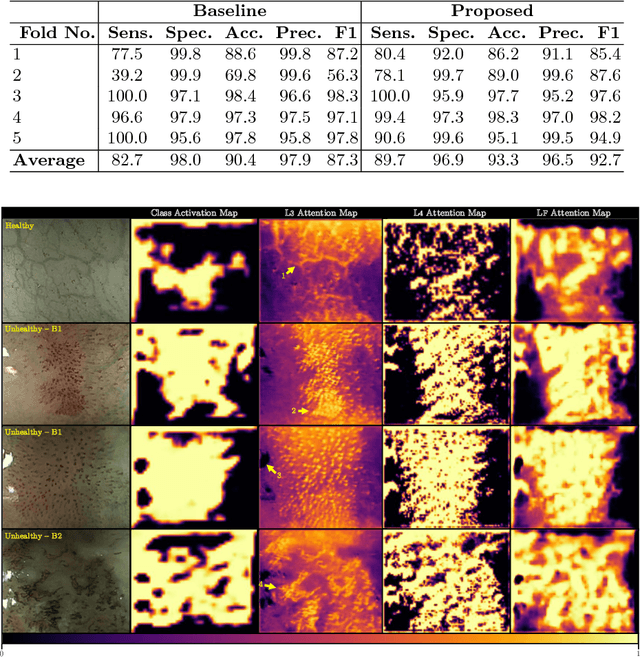

This work focuses on the interpretability of classification results produced by a fully convolutional neural network. Motivated by the classification of oesophageal tissue for real-time detection of early squamous neoplasia, the most frequent kind of oesophageal cancer in Asia, we present a new dataset and a novel deep learning method that treats interpretability as a design constraint of the convolutional network, using deep supervision and a newly introduced concept, the embedded Class Activation Map (eCAM). We also present a new approach to visualising attention that highlights the areas of oesophageal tissue that lead the network to assign an image to a particular class, and we compare these areas with the visual features clinicians use to reach a clinical diagnosis. Compared with a baseline that has no deep supervision and provides attention by grafting Class Activation Maps, our method improves the F1-score from 87.3% to 92.7% and produces more detailed attention maps.
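For readers unfamiliar with the Class Activation Maps that the baseline relies on, the following is a minimal sketch of the standard CAM computation, assuming a network whose last convolutional layer feeds a global average pooling layer followed by a single linear classifier. It is not the eCAM introduced in this work, and the function names, array shapes, and random inputs are hypothetical placeholders chosen for illustration.

```python
import numpy as np


def class_activation_map(features, fc_weights, class_idx):
    """Standard CAM: weight each feature map by the classifier weight for one class.

    features:   (K, H, W) activations of the last convolutional layer (assumed layout)
    fc_weights: (C, K) weights of the linear layer after global average pooling
    class_idx:  index of the class to explain
    """
    # Sum over the K channels, leaving an (H, W) map for the chosen class.
    return np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))


def normalise(cam):
    """Rescale a map to [0, 1] so it can be overlaid on the image as a heatmap."""
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Hypothetical inputs: 8 feature maps of size 7x7 and a 2-class classifier.
rng = np.random.default_rng(0)
features = rng.random((8, 7, 7))
fc_weights = rng.standard_normal((2, 8))

cam = class_activation_map(features, fc_weights, class_idx=1)
heatmap = normalise(cam)
```

Under these assumptions, the spatial mean of the map equals the class score before the bias term, which is why the map can be read as a per-location contribution to the classification.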
