Kyeongryeol Go

Transferable Candidate Proposal with Bounded Uncertainty

Dec 07, 2023

Abstract: From an empirical perspective, the subset chosen through active learning cannot guarantee an advantage over random sampling when transferred to another model. While this underscores the significance of verifying transferability, the experimental designs of previous works often neglected that the informativeness of a data subset can change across model configurations. To tackle this issue, we introduce a new experimental design, coined Candidate Proposal, to find transferable data candidates from which active learning algorithms choose the informative subset. Correspondingly, a data selection algorithm is proposed, namely Transferable candidate proposal with Bounded Uncertainty (TBU), which constrains the pool of transferable data candidates by filtering out the presumably redundant data points based on uncertainty estimation. We verified the validity of TBU on image classification benchmarks, including CIFAR-10/100 and SVHN. When transferred to different model configurations, TBU consistently improves the performance of existing active learning algorithms. Our code is available at https://github.com/gokyeongryeol/TBU.
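To make the idea of constraining a candidate pool by uncertainty more concrete, here is a minimal sketch. It is not the TBU algorithm from the paper or its repository: the abstract does not specify the filtering criterion, so the use of predictive entropy, the function names `predictive_entropy` and `propose_candidates`, and the `lower`/`upper` thresholds are all assumptions chosen purely for illustration.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def propose_candidates(pool_probs, lower, upper):
    """Return indices of pool points whose entropy lies in [lower, upper].

    Hypothetical filtering rule (an assumption, not the paper's criterion):
    points below `lower` are treated as already well understood, and points
    above `upper` as too ambiguous to be kept as candidates.
    """
    return [
        i
        for i, probs in enumerate(pool_probs)
        if lower <= predictive_entropy(probs) <= upper
    ]


# Toy predicted class probabilities for three unlabeled points.
pool_probs = [
    [0.98, 0.01, 0.01],  # confident prediction, low entropy
    [0.60, 0.30, 0.10],  # moderately uncertain
    [0.34, 0.33, 0.33],  # near-uniform, high entropy
]

candidates = propose_candidates(pool_probs, lower=0.3, upper=1.0)
print(candidates)
```

In this toy setup only the moderately uncertain point survives the filter. An active learning algorithm would then pick its query subset from the returned candidates rather than from the full pool.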

Neural Processes with Stochastic Attention: Paying more attention to the context dataset

Apr 11, 2022

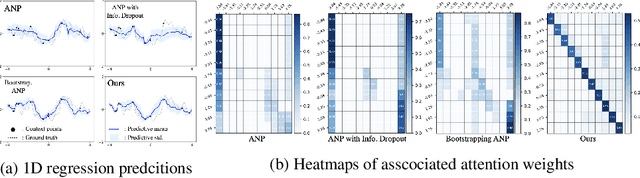

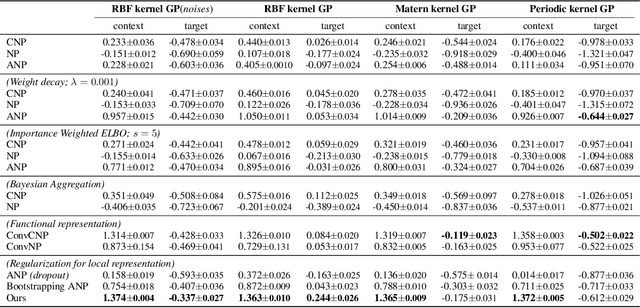

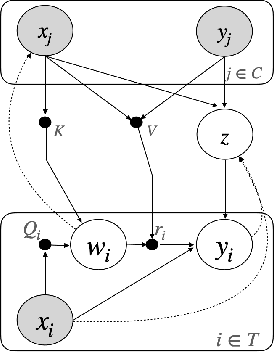

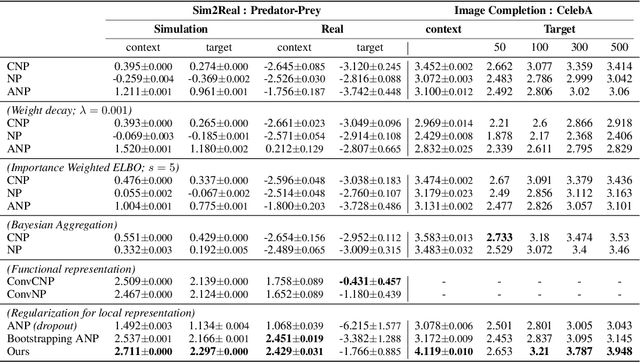

Abstract: Neural processes (NPs) aim to stochastically complete unseen data points based on a given context dataset. NPs essentially leverage a given dataset as a context representation to derive a suitable identifier for a novel task. To improve the prediction accuracy, many variants of NPs have investigated context embedding approaches that generally design novel network architectures and aggregation functions satisfying permutation invariance. In this work, we propose a stochastic attention mechanism for NPs to capture appropriate context information. From the perspective of information theory, we demonstrate that the proposed method encourages the context embedding to be differentiated from the target dataset, allowing NPs to consider features in the target dataset and the context embedding independently. We observe that the proposed method can appropriately capture the context embedding even under noisy datasets and restricted task distributions, where typical NPs suffer from a lack of context embeddings. We empirically show that our approach substantially outperforms conventional NPs in various domains through 1D regression, a predator-prey model, and image completion. Moreover, the proposed method is also validated on the MovieLens-10k dataset, a real-world problem.
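The permutation invariance mentioned above can be illustrated with the simplest aggregation function, a mean over context embeddings. This sketch shows only that conventional baseline, not the stochastic attention mechanism proposed in the paper; the function name `mean_aggregate` and the toy embeddings are assumptions made for illustration.

```python
def mean_aggregate(embeddings):
    """Average a list of equal-length embedding vectors, dimension by dimension.

    The result does not depend on the order of `embeddings`, which is the
    permutation-invariance property required of a context aggregator.
    """
    n = len(embeddings)
    dim = len(embeddings[0])
    return [sum(e[d] for e in embeddings) / n for d in range(dim)]


# Toy per-point context embeddings (hypothetical values).
context = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
shuffled = [context[2], context[0], context[1]]

summary = mean_aggregate(context)
summary_shuffled = mean_aggregate(shuffled)
print(summary, summary_shuffled)
```

Both calls yield the same summary vector, so a model that conditions on this summary treats the context as a set rather than a sequence.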

Meta-learning Amidst Heterogeneity and Ambiguity

Jul 05, 2021

Abstract: Meta-learning aims to learn a model that can handle multiple tasks generated from an unknown but shared distribution. However, typical meta-learning algorithms have assumed the tasks to be similar enough that a single meta-learner is sufficient to aggregate the variations in all aspects. In addition, uncertainty has received less consideration when limited information is given as context. In this paper, we devise a novel meta-learning framework, called Meta-learning Amidst Heterogeneity and Ambiguity (MAHA), that outperforms previous works in terms of prediction based on its ability in task identification. By conducting extensive experiments in regression and classification, we demonstrate the validity of our model, which turns out to be robust to both task heterogeneity and ambiguity.
