Kyeong-Joong Jeong

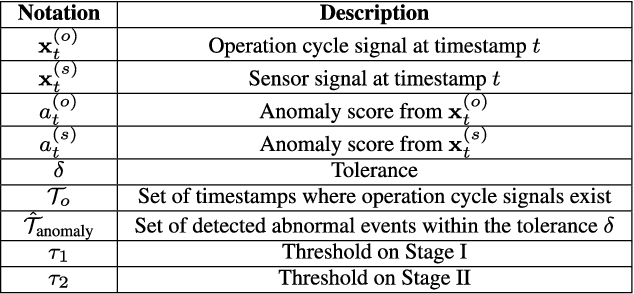

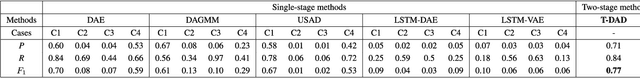

Two-Stage Deep Anomaly Detection with Heterogeneous Time Series Data

Feb 10, 2022

Abstract: We introduce a data-driven anomaly detection framework using a manufacturing dataset collected from a factory assembly line. Given heterogeneous time series data consisting of operation cycle signals and sensor signals, we aim to discover abnormal events. Motivated by our empirical findings that conventional single-stage benchmark approaches may not perform satisfactorily under our challenging circumstances, we propose a two-stage deep anomaly detection (TDAD) framework in which two different unsupervised learning models are adopted depending on the type of signal. In Stage I, we select anomaly candidates using a model trained on operation cycle signals; in Stage II, we detect abnormal events among the candidates using another model, trained on sensor signals, that is suited to exploiting temporal continuity. A distinguishing feature of our framework is that operation cycle signals are exploited first to find likely anomalous points, whereas sensor signals are leveraged afterward to filter out unlikely anomalous points. Our experiments comprehensively demonstrate the framework's superiority over single-stage benchmark approaches, its model-agnostic property, and its robustness to difficult situations.
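The two-stage flow described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not name the models, so a plain z-score scorer stands in for both unsupervised models, and the function names `zscore_anomaly_score` and `two_stage_detect` and both thresholds are hypothetical. Only the select-then-filter structure is taken from the text.

```python
import numpy as np

def zscore_anomaly_score(x):
    """Stand-in scorer (assumption): largest absolute z-score across features."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    mu = x.mean(axis=0)
    sigma = x.std(axis=0) + 1e-12  # guard against constant features
    return np.abs((x - mu) / sigma).max(axis=1)

def two_stage_detect(cycle_signals, sensor_signals, stage1_threshold, stage2_threshold):
    """Stage I picks candidates from cycle signals; Stage II filters them with sensor signals."""
    # Stage I: points whose cycle-signal score is high become anomaly candidates.
    stage1_scores = zscore_anomaly_score(cycle_signals)
    candidates = np.flatnonzero(stage1_scores > stage1_threshold)
    # Stage II: keep only candidates that the sensor-signal score also flags.
    stage2_scores = zscore_anomaly_score(sensor_signals)
    confirmed = candidates[stage2_scores[candidates] > stage2_threshold]
    return candidates, confirmed

# Toy data (hypothetical): index 50 is unusual in both signal types.
cycle = np.zeros(100)
cycle[[10, 50]] = 10.0
sensor = np.zeros(100)
sensor[[50, 70]] = 10.0
candidates, confirmed = two_stage_detect(cycle, sensor, 3.0, 3.0)
print(candidates, confirmed)
```

In this toy run, index 70 is never examined in Stage II because it was not a Stage I candidate, and index 10 is dropped because the sensor signals do not support it.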

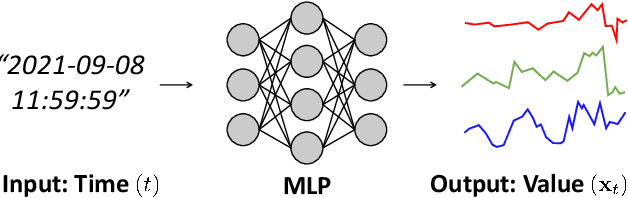

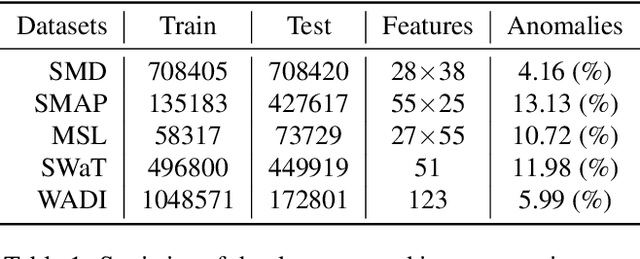

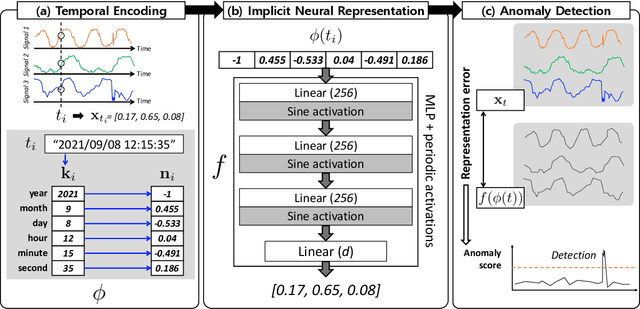

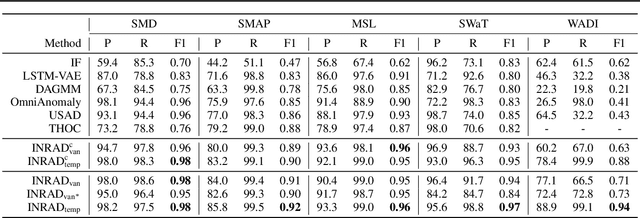

Time-Series Anomaly Detection with Implicit Neural Representation

Jan 28, 2022

Abstract: Detecting anomalies in multivariate time-series data is essential in many real-world applications. Recently, various deep learning-based approaches have shown considerable improvements in time-series anomaly detection. However, existing methods still have several limitations, such as long training times due to their complex model designs or costly tuning procedures to find optimal hyperparameters (e.g., sliding window length) for a given dataset. In our paper, we propose a novel method called Implicit Neural Representation-based Anomaly Detection (INRAD). Specifically, we train a simple multi-layer perceptron that takes time as input and outputs the corresponding values at that time. We then use the representation error as an anomaly score for detecting anomalies. Experiments on five real-world datasets demonstrate that our proposed method outperforms other state-of-the-art methods in performance, training speed, and robustness.
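The idea of fitting a network that maps time to values and scoring by representation error can be sketched as follows. This is a rough illustration under assumptions, not the INRAD architecture: the one-hidden-layer tanh network, full-batch gradient descent, the hyperparameters, and the names `fit_time_mlp` and `representation_error` are all chosen here for brevity.

```python
import numpy as np

def fit_time_mlp(t, x, hidden=32, steps=3000, lr=0.01, seed=0):
    """Fit x(t) with a one-hidden-layer tanh MLP using full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    t = t.reshape(-1, 1)
    n, d = x.shape
    W1 = rng.normal(0.0, 1.0, (1, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), (hidden, d))
    b2 = np.zeros(d)
    for _ in range(steps):
        h = np.tanh(t @ W1 + b1)           # hidden activations, shape (n, hidden)
        y = h @ W2 + b2                    # reconstructed values, shape (n, d)
        dy = 2.0 * (y - x) / n             # gradient of the mean squared error w.r.t. y
        dz = (dy @ W2.T) * (1.0 - h ** 2)  # backpropagate through tanh
        W2 -= lr * (h.T @ dy)
        b2 -= lr * dy.sum(axis=0)
        W1 -= lr * (t.T @ dz)
        b1 -= lr * dz.sum(axis=0)
    return W1, b1, W2, b2

def representation_error(params, t, x):
    """Anomaly score per time step: Euclidean distance between the fit and the data."""
    W1, b1, W2, b2 = params
    y = np.tanh(t.reshape(-1, 1) @ W1 + b1) @ W2 + b2
    return np.linalg.norm(y - x, axis=1)

# Toy two-channel series (hypothetical) with a spike injected at index 120.
t = np.linspace(-1.0, 1.0, 200)
x = np.stack([np.sin(2 * np.pi * t), np.cos(2 * np.pi * t)], axis=1)
x[120, 0] += 8.0
params = fit_time_mlp(t, x)
scores = representation_error(params, t, x)
print(int(np.argmax(scores)))
```

Because a small smooth network cannot reproduce a single-sample spike, the error at that time step stays large. Note that no sliding window is involved: the only input is the timestamp.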

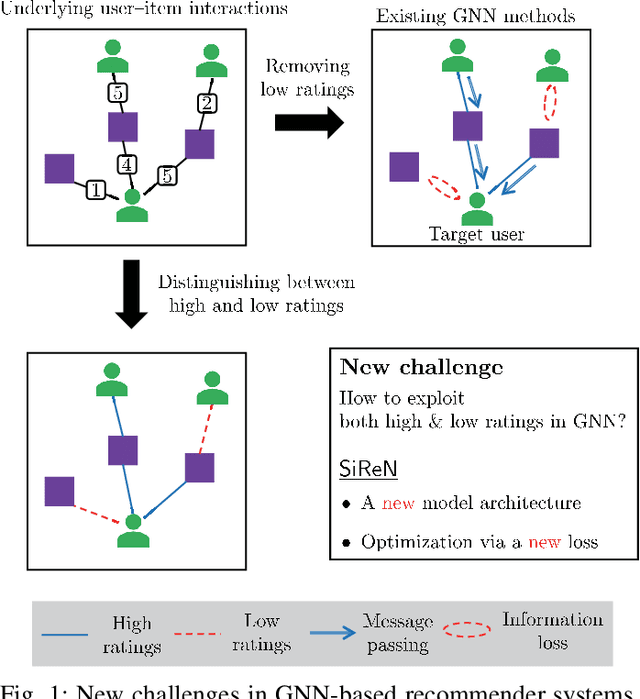

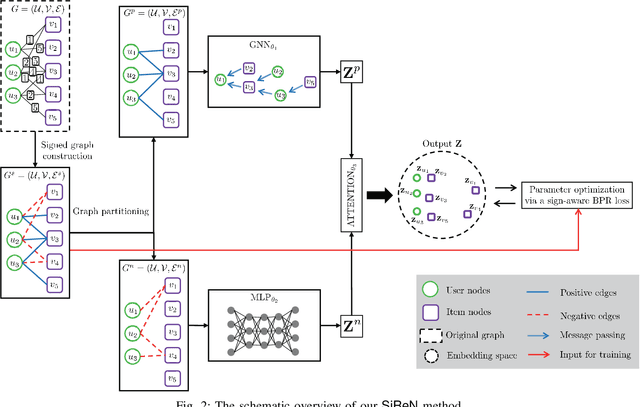

SiReN: Sign-Aware Recommendation Using Graph Neural Networks

Aug 19, 2021

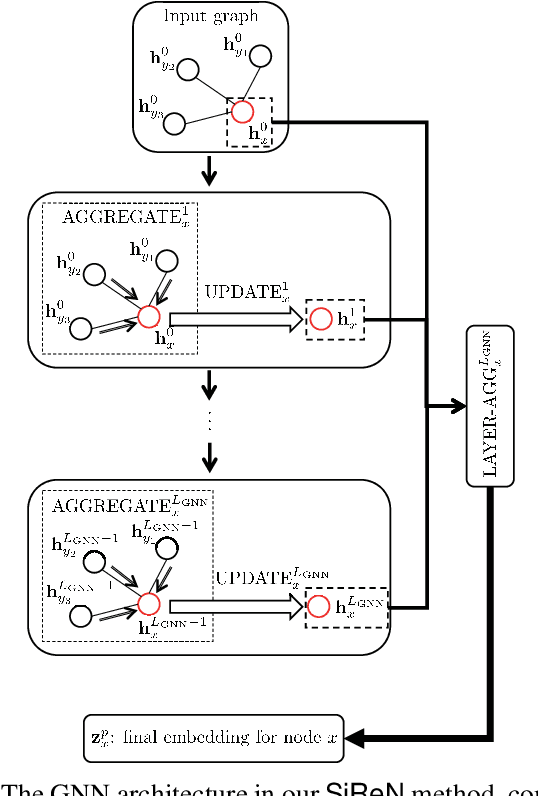

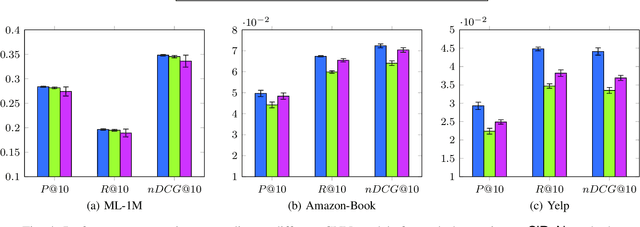

Abstract: In recent years, many recommender systems using network embedding (NE) such as graph neural networks (GNNs) have been extensively studied with the aim of improving recommendation accuracy. However, such attempts have focused mostly on utilizing only the information of positive user-item interactions with high ratings. Thus, it remains a challenge to make use of low rating scores for representing users' preferences, since low ratings can still be informative in designing NE-based recommender systems. In this study, we present SiReN, a new sign-aware recommender system based on GNN models. Specifically, SiReN has three key components: 1) constructing a signed bipartite graph for more precisely representing users' preferences, which is split into two edge-disjoint graphs with positive and negative edges, respectively; 2) generating two embeddings for the partitioned graphs with positive and negative edges via a GNN model and a multi-layer perceptron (MLP), respectively, and then using an attention model to obtain the final embeddings; and 3) establishing a sign-aware Bayesian personalized ranking (BPR) loss function in the process of optimization. Through comprehensive experiments, we empirically demonstrate that SiReN consistently outperforms state-of-the-art NE-aided recommendation methods.
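The first component, splitting a signed bipartite graph into two edge-disjoint graphs, can be sketched in a few lines. The abstract does not say how the sign is assigned, so this sketch assumes that each edge weight is the rating minus a fixed offset; the offset value of 3.5, the tie handling, and the function name `split_signed_bipartite` are hypothetical. The embedding, attention, and loss components are not covered here.

```python
def split_signed_bipartite(ratings, offset=3.5):
    """Split (user, item, rating) triples into positive and negative edge lists.

    Assumption: the edge weight is rating - offset. Edges with weight > 0 go to
    the positive graph; all others (including ties) go to the negative graph.
    """
    positive, negative = [], []
    for user, item, rating in ratings:
        weight = rating - offset
        if weight > 0:
            positive.append((user, item, weight))
        else:
            negative.append((user, item, weight))
    return positive, negative

# Toy ratings on a 1-5 scale (hypothetical data).
ratings = [("u1", "i1", 5), ("u1", "i2", 1), ("u2", "i1", 4), ("u2", "i3", 2)]
positive_edges, negative_edges = split_signed_bipartite(ratings)
print(positive_edges)
print(negative_edges)
```

Each user-item pair lands in exactly one of the two lists, so the resulting graphs share nodes but no edges, which matches the edge-disjoint property described above.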
