Kunzhe Song

ChatTracer: Large Language Model Powered Real-time Bluetooth Device Tracking System

Mar 28, 2024

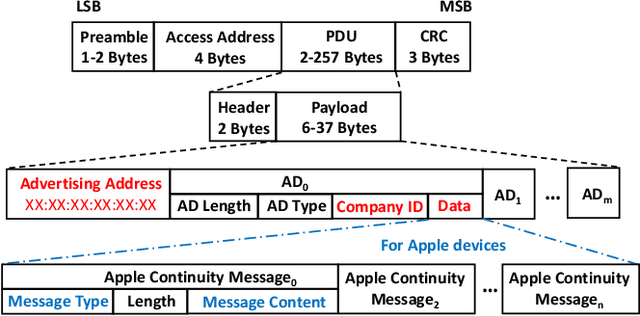

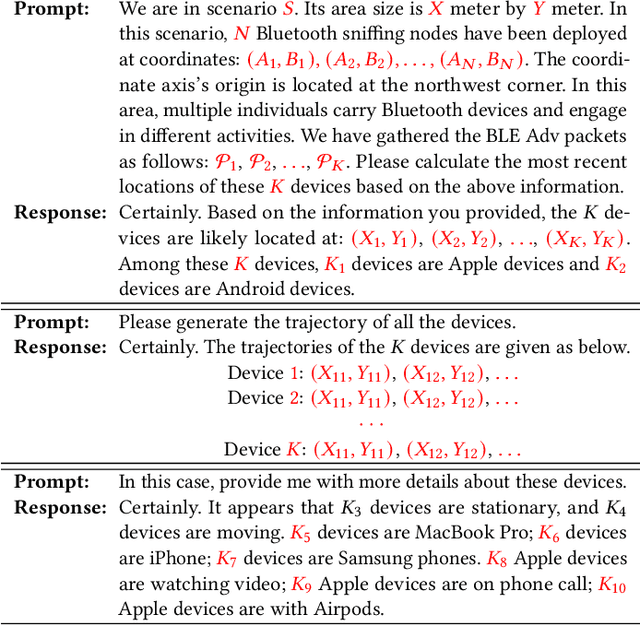

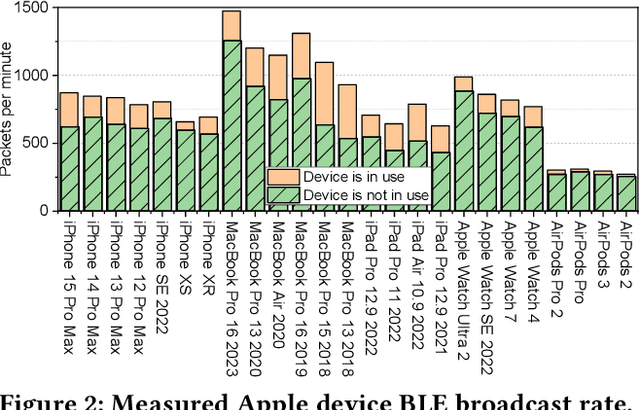

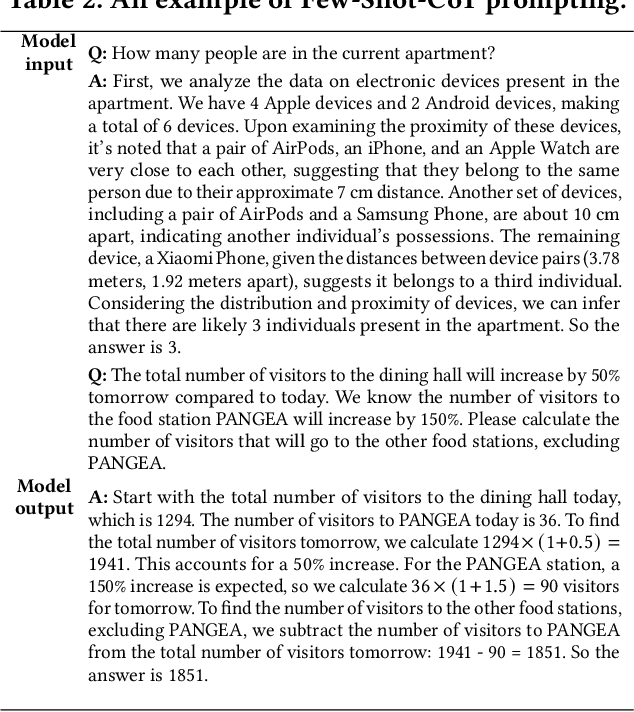

Abstract: Large language models (LLMs), exemplified by OpenAI ChatGPT and Google Bard, have transformed the way we interact with cyber technologies. In this paper, we study the possibility of connecting LLMs with wireless sensor networks (WSNs). A successful design will not only extend an LLM's knowledge landscape to the physical world but also revolutionize human interaction with WSNs. To this end, we present ChatTracer, an LLM-powered real-time Bluetooth device tracking system. ChatTracer comprises three key components: an array of Bluetooth sniffing nodes, a database, and a fine-tuned LLM. ChatTracer was designed based on our experimental observation that commercial Apple/Android devices always broadcast hundreds of BLE packets per minute, even when idle. Its novelties lie in two aspects: i) a reliable and efficient BLE packet grouping algorithm; and ii) an LLM fine-tuning strategy that combines both supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF). We have built a prototype of ChatTracer with four sniffing nodes. Experimental results show that ChatTracer not only outperforms existing localization approaches, but also provides an intelligent interface for user interaction.

Self-Supervised Multi-Modal Sequential Recommendation

Apr 26, 2023

Abstract: With the increasing development of e-commerce and online services, personalized recommendation systems have become crucial for enhancing user satisfaction and driving business revenue. Traditional sequential recommendation methods that rely on explicit item IDs encounter challenges in handling item cold start and domain transfer problems. Recent approaches have attempted to use modal features associated with items as a replacement for item IDs, enabling the transfer of learned knowledge across different datasets. However, these methods typically calculate the correlation between the model's output and item embeddings, which may suffer from inconsistencies between high-level feature vectors and low-level feature embeddings, thereby hindering further model learning. To address this issue, we propose a dual-tower retrieval architecture for sequential recommendation. In this architecture, the predicted embedding from the user encoder is used to retrieve the generated embedding from the item encoder, thereby alleviating the issue of inconsistent feature levels. Moreover, to further improve the retrieval performance of the model, we also propose a self-supervised multi-modal pretraining method inspired by the consistency property of contrastive learning. This pretraining method enables the model to align various feature combinations of items, thereby effectively generalizing to diverse datasets with different item features. We evaluate the proposed method on five publicly available datasets and conduct extensive experiments. The results demonstrate a significant performance improvement for our method.
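The retrieval step of a dual-tower design can be illustrated with a minimal sketch. This is not the paper's implementation: the abstract does not specify the encoders or the similarity measure, so the example assumes that both towers have already produced fixed-size embeddings and that items are ranked by cosine similarity. The function names (`cosine`, `retrieve_top_k`) and the toy vectors are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def retrieve_top_k(user_embedding, item_embeddings, k=2):
    """Rank items by similarity to the user-tower output and return the top-k item IDs."""
    scored = [
        (item_id, cosine(user_embedding, emb))
        for item_id, emb in item_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [item_id for item_id, _ in scored[:k]]


# Toy values standing in for encoder outputs (assumed, not from the paper).
item_embeddings = {
    "item_a": [1.0, 0.0],
    "item_b": [0.0, 1.0],
    "item_c": [0.7, 0.7],
}
user_embedding = [0.9, 0.1]  # hypothetical predicted embedding from the user encoder

top_items = retrieve_top_k(user_embedding, item_embeddings, k=2)
print(top_items)
```

Because both the query and the candidates come from encoders that output vectors in the same space, the comparison is made between representations at the same feature level, which is the property the abstract attributes to the dual-tower design.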
