Kshitij Agrawal

Patch-wise Features for Blur Image Classification

Apr 06, 2023

Abstract: Images captured through smartphone cameras often suffer from degradation, with blur being one of the major forms, which poses a challenge when processing these images for downstream tasks. In this paper we propose low-compute, lightweight patch-wise features for image quality assessment. Using our method, we can discriminate between blurred and sharp images. To this end, we train a decision-tree-based XGBoost model on various intuitive image features such as gray-level variance, first- and second-order gradients, and texture features such as local binary patterns. Experiments conducted on an open dataset show that the proposed low-compute method achieves 90.1% mean accuracy on the validation set, which is comparable to the 94% mean accuracy of a compute-intensive VGG16 network fine-tuned to this task. To demonstrate the generalizability of our proposed features and model, we test the model on the BHBID dataset and an internal dataset, where we attain accuracies of 98% and 91%, respectively. The proposed method is 10x faster than the VGG16-based model on CPU and scales linearly with the input image size, making it suitable for implementation on low-compute edge devices.
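As a rough illustration of what patch-wise sharpness features can look like, the sketch below computes three of the feature types named in the abstract (gray-level variance, a first-order gradient statistic, and a second-order Laplacian statistic) over non-overlapping patches. The function name `patch_features`, the 32-pixel patch size, and the exact statistics are assumptions made for illustration and are not taken from the paper; local binary patterns and the XGBoost classifier are omitted.

```python
import numpy as np

def patch_features(gray, patch=32):
    """Return one feature row per non-overlapping patch of a grayscale image.

    Hypothetical helper: the patch size and the choice of statistics are
    illustrative assumptions, not the paper's exact feature set.
    """
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    rows = []
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            p = gray[y:y + patch, x:x + patch]
            # First-order gradients along rows and columns.
            gy, gx = np.gradient(p)
            grad_mag = np.sqrt(gx ** 2 + gy ** 2)
            # Second-order response: 4-neighbour discrete Laplacian on interior pixels.
            lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
                   - 4.0 * p[1:-1, 1:-1])
            rows.append([p.var(), grad_mag.mean(), lap.var()])
    return np.array(rows)
```

Each row could then be fed to a tree-based classifier. Blurred patches would be expected to show lower values in all three columns than sharp patches of the same scene.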

Meta Guided Metric Learner for Overcoming Class Confusion in Few-Shot Road Object Detection

Oct 28, 2021

Abstract: Localization and recognition of less-occurring road objects have been a challenge in autonomous driving applications due to the scarcity of data samples. Few-Shot Object Detection (FSOD) techniques extend the knowledge from existing base object classes to learn novel road objects given few training examples. Popular techniques in FSOD adopt either meta-learning or metric-learning techniques, which are prone to class confusion and base-class forgetting. In this work, we introduce a novel Meta Guided Metric Learner (MGML) to overcome class confusion in FSOD. We re-weight the features of the novel classes higher than those of the base classes through a novel Squeeze and Excite module, and encourage the learning of truly discriminative class-specific features by applying an Orthogonality Constraint to the meta learner. Our method outperforms State-of-the-Art (SoTA) approaches in FSOD on the India Driving Dataset (IDD) by up to 11 mAP points while suffering from the least class confusion of 20%, given only 10 examples of each novel road object. We further show similar improvements on the few-shot splits of the PASCAL VOC dataset, where we outperform SoTA approaches by up to 5.8 mAP across all splits.
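The abstract does not give the form of the Orthogonality Constraint. One common way to express such a constraint, shown here purely as an assumed sketch, is to penalize the off-diagonal entries of the Gram matrix of unit-normalized class feature vectors, so that features of different classes are pushed toward mutual orthogonality. The name `orthogonality_penalty` and the squared-Frobenius form are illustrative assumptions, not the paper's definition.

```python
import numpy as np

def orthogonality_penalty(class_vectors):
    """Squared Frobenius norm of (V V^T - I) for row-normalized class vectors.

    Hypothetical formulation: zero when all class vectors are mutually
    orthogonal, and growing as vectors of different classes align.
    """
    v = np.asarray(class_vectors, dtype=np.float64)
    # Normalize each class vector to unit length so the Gram diagonal is 1.
    v = v / np.linalg.norm(v, axis=1, keepdims=True)
    gram = v @ v.T
    off_diag = gram - np.eye(len(v))
    return float(np.sum(off_diag ** 2))
```

In a training loop, a term like this would typically be added to the detection loss with a small weight, which is again an assumption about usage rather than something the abstract states.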

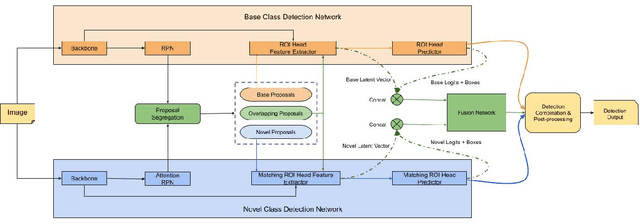

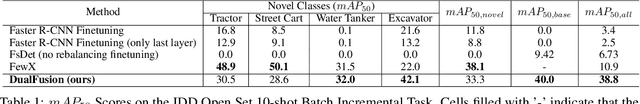

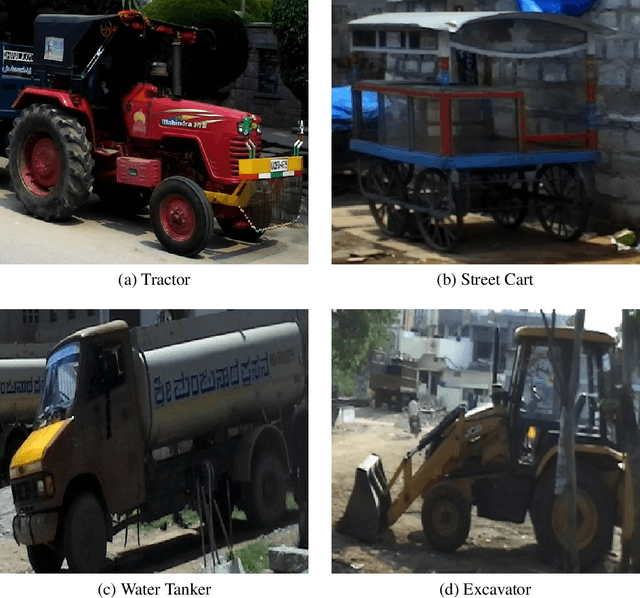

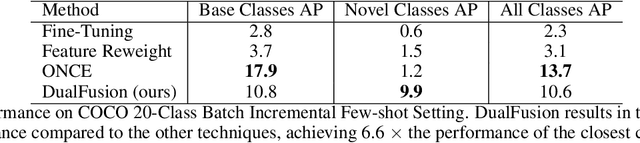

Few-Shot Batch Incremental Road Object Detection via Detector Fusion

Aug 18, 2021

Abstract: Incremental few-shot learning has emerged as a new and challenging area in deep learning, whose objective is to train deep learning models using very few samples of new-class data and none of the old-class data. In this work we tackle the problem of batch incremental few-shot road object detection using data from the India Driving Dataset (IDD). Our approach, DualFusion, combines object detectors in a manner that allows us to learn to detect rare objects with very limited data, all without severely degrading the performance of the detector on the abundant classes. In the IDD OpenSet incremental few-shot detection task, we achieve a mAP50 score of 40.0 on the base classes and an overall mAP50 score of 38.8, both of which are the highest to date. In the COCO batch incremental few-shot detection task, we achieve a novel-class AP score of 9.9, surpassing the state-of-the-art novel-class performance on the same task by over 6.6 times.

Few-Shot Learning for Road Object Detection

Jan 29, 2021

Abstract: Few-shot learning is a problem of high interest in the evolution of deep learning. In this work, we consider the problem of few-shot object detection (FSOD) in a real-world, class-imbalanced scenario. For our experiments, we utilize the India Driving Dataset (IDD), as it includes a class of less-occurring road objects in the image dataset and hence provides a setup suitable for few-shot learning. We evaluate both metric-learning and meta-learning based FSOD methods in two experimental settings: (i) representative (same-domain) splits from IDD, which evaluate the ability of a model to learn in the context of road images, and (ii) object classes with less-occurring object samples, similar to the open-set setting in the real world. From our experiments, we demonstrate that the metric-learning method outperforms meta-learning on the novel classes by (i) 11.2 mAP points on the same domain, and (ii) 1.0 mAP point on the open set. We also show that our extension of object classes in a real-world open dataset offers a rich ground for few-shot learning studies.

Enhancing Object Detection in Adverse Conditions using Thermal Imaging

Sep 30, 2019

Abstract: Autonomous driving relies on deriving an understanding of objects and scenes through images. These images are often captured by sensors in the visible spectrum. For improved detection capabilities, we propose the use of thermal sensors to augment the vision capabilities of an autonomous vehicle. In this paper, we present our investigations on the fusion of visible and thermal spectrum images using a publicly available dataset, and use it to analyze the performance of object recognition on other known driving datasets. We present a comparison of object detection in night-time imagery and qualitatively demonstrate that thermal images significantly improve detection accuracy.
