Konstantin Slutsky

Minimum-Violation Planning for Autonomous Systems: Theoretical and Practical Considerations

Sep 24, 2020

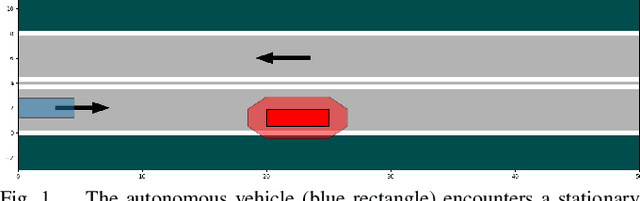

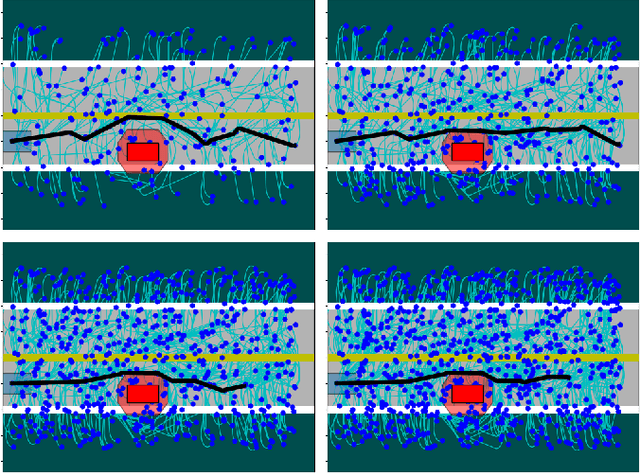

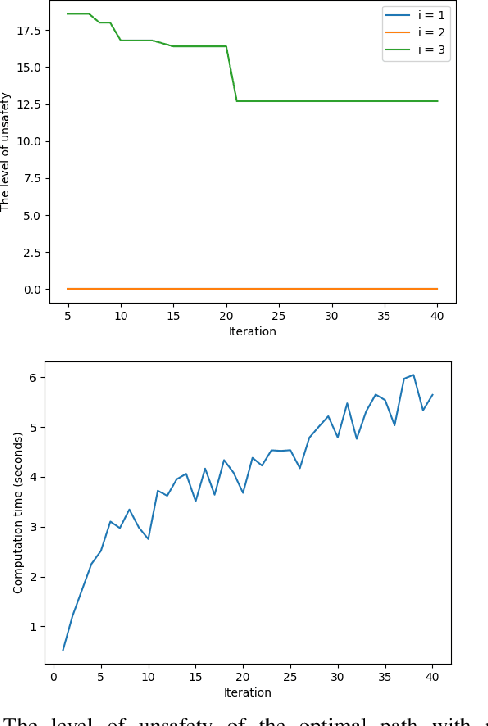

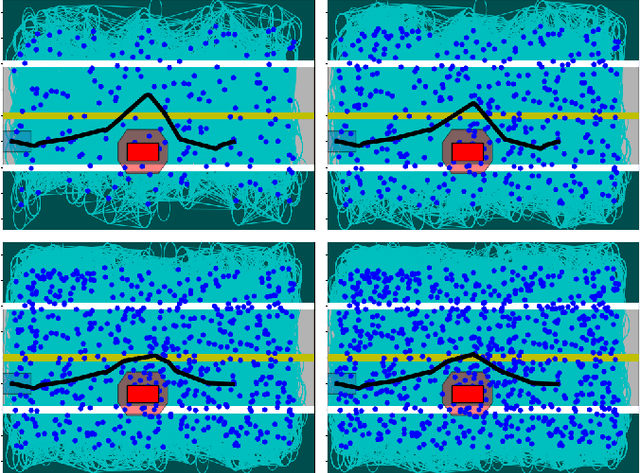

Abstract: This paper considers the problem of computing an optimal trajectory for an autonomous system that is subject to a set of potentially conflicting rules. First, we introduce the concept of prioritized safety specifications, where each rule is expressed as a temporal logic formula with an associated weight and priority. Optimality is defined in terms of the violation of these prioritized safety specifications. We then introduce a class of temporal logic formulas called $\textrm{si-FLTL}_{\mathsf{G_X}}$ and develop an efficient, incremental sampling-based approach that solves this minimum-violation planning problem with guarantees of asymptotic optimality. We illustrate the application of the proposed approach to autonomous vehicles, showing that $\textrm{si-FLTL}_{\mathsf{G_X}}$ formulas are sufficiently expressive to describe many traffic rules. Finally, we discuss practical considerations and present simulation results for a vehicle overtaking scenario.
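One way to picture a violation-based notion of optimality is a lexicographic cost over priority levels. The sketch below is an illustration only, not the paper's algorithm: the `Rule` class, the rule names, the numeric violation scores, and the convention that priority 0 is the most important level are all assumptions made for the example. It omits temporal logic and sampling entirely and only compares already-scored candidate trajectories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int   # assumed convention: 0 is the most important level
    weight: float   # relative weight among rules of the same priority


def violation_cost(rules, violations):
    """Return one weighted violation total per priority level, most important first."""
    levels = sorted({r.priority for r in rules})
    return tuple(
        sum(r.weight * violations.get(r.name, 0.0) for r in rules if r.priority == p)
        for p in levels
    )


# Hypothetical rules and hypothetical violation scores for three candidate trajectories.
rules = [
    Rule("no_collision", 0, 1.0),
    Rule("stay_in_lane", 1, 2.0),
    Rule("keep_speed", 1, 1.0),
]
candidates = {
    "overtake": {"stay_in_lane": 3.0},
    "wait": {"keep_speed": 8.0},
    "crash": {"no_collision": 1.0},
}

# Python compares tuples lexicographically, so a higher-priority violation
# always outweighs any amount of lower-priority violation.
best = min(candidates, key=lambda k: violation_cost(rules, candidates[k]))
print(best, violation_cost(rules, candidates[best]))
```

Under these assumed numbers, weights only break ties within a priority level, while priorities decide across levels.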

Liability, Ethics, and Culture-Aware Behavior Specification using Rulebooks

Mar 01, 2019

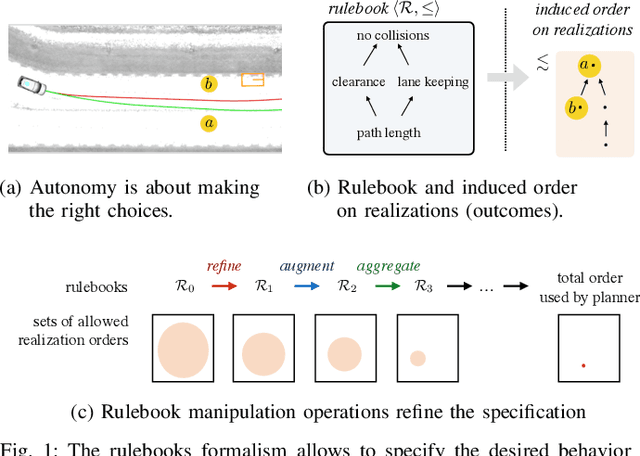

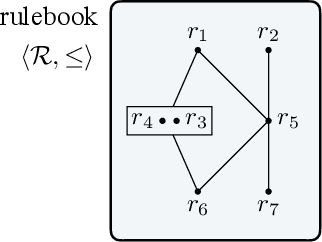

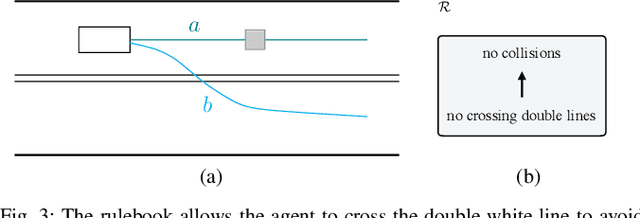

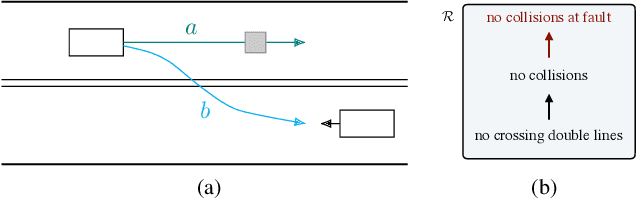

Abstract: The behavior of self-driving cars must be compatible with an enormous set of conflicting and ambiguous objectives, from law, from ethics, from the local culture, and so on. This paper describes a new way to conveniently define the desired behavior for autonomous agents, which we use on the self-driving cars developed at nuTonomy. We define a "rulebook" as a pre-ordered set of "rules", each akin to a violation metric on the possible outcomes ("realizations"). The rules are partially ordered by priority. The semantics of a rulebook imposes a pre-order on the set of realizations. We study the compositional properties of the rulebooks, and we derive which operations we can allow on the rulebooks to preserve previously-introduced constraints. While we demonstrate the application of these techniques in the self-driving domain, the methods are domain-independent.
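As a rough illustration of how prioritized violation metrics can induce a pre-order on realizations, the sketch below uses one plausible reading of the semantics: realization `x` is at least as good as `y` if, for every rule on which `x` scores worse, some strictly higher-priority rule favors `x`. That reading, the function name `at_least_as_good`, the rule names, and the scores are assumptions for this example; the abstract itself does not spell out the definition.

```python
def at_least_as_good(x, y, rules, higher):
    """Compare two realizations under an assumed rulebook semantics.

    x, y:   dict mapping rule name -> violation score (lower is better)
    higher: dict mapping rule name -> set of rules with strictly higher priority
    """
    for r in rules:
        if x[r] > y[r]:  # x is worse than y on rule r
            # This must be justified by a higher-priority rule on which x is better.
            if not any(x[s] < y[s] for s in higher[r]):
                return False
    return True


# Hypothetical rulebook: "safety" outranks both other rules, while
# "comfort" and "progress" are not ordered relative to each other.
rules = ["safety", "comfort", "progress"]
higher = {"safety": set(), "comfort": {"safety"}, "progress": {"safety"}}

a = {"safety": 0, "comfort": 2, "progress": 0}
b = {"safety": 1, "comfort": 0, "progress": 0}
c = {"safety": 0, "comfort": 0, "progress": 2}

print(at_least_as_good(a, b, rules, higher))  # a is safer, so its comfort cost is acceptable
print(at_least_as_good(b, a, rules, higher))
print(at_least_as_good(a, c, rules, higher), at_least_as_good(c, a, rules, higher))
```

Because `comfort` and `progress` are unordered in this hypothetical rulebook, `a` and `c` come out incomparable, which is the kind of situation a pre-order (rather than a total order) on realizations allows.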
