Kim Bjerge

Insect Identification in the Wild: The AMI Dataset

Jun 18, 2024

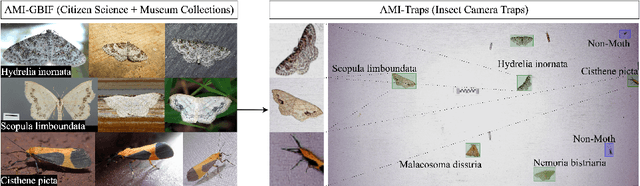

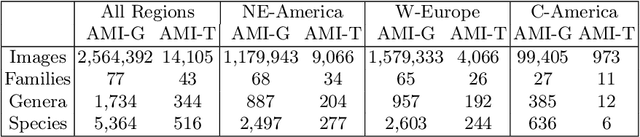

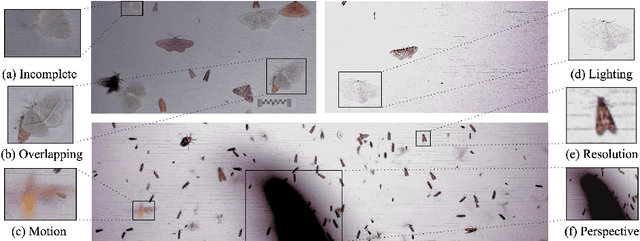

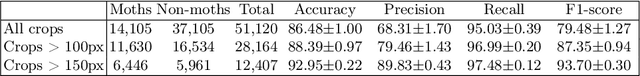

Abstract: Insects represent half of all global biodiversity, yet many of the world's insects are disappearing, with severe implications for ecosystems and agriculture. Despite this crisis, data on insect diversity and abundance remain woefully inadequate, due to the scarcity of human experts and the lack of scalable tools for monitoring. Ecologists have started to adopt camera traps to record and study insects, and have proposed computer vision algorithms as an answer for scalable data processing. However, insect monitoring in the wild poses unique challenges that have not yet been addressed within computer vision, including the combination of long-tailed data, extremely similar classes, and significant distribution shifts. We provide the first large-scale machine learning benchmarks for fine-grained insect recognition, designed to match real-world tasks faced by ecologists. Our contributions include a curated dataset of images from citizen science platforms and museums, and an expert-annotated dataset drawn from automated camera traps across multiple continents, designed to test out-of-distribution generalization under field conditions. We train and evaluate a variety of baseline algorithms and introduce a combination of data augmentation techniques that enhance generalization across geographies and hardware setups. Code and datasets are made publicly available.

Motion Informed Object Detection of Small Insects in Time-lapse Camera Recordings

Dec 01, 2022

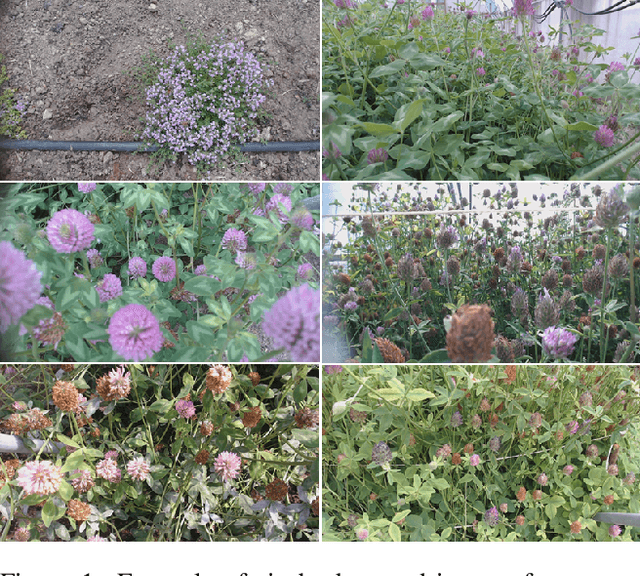

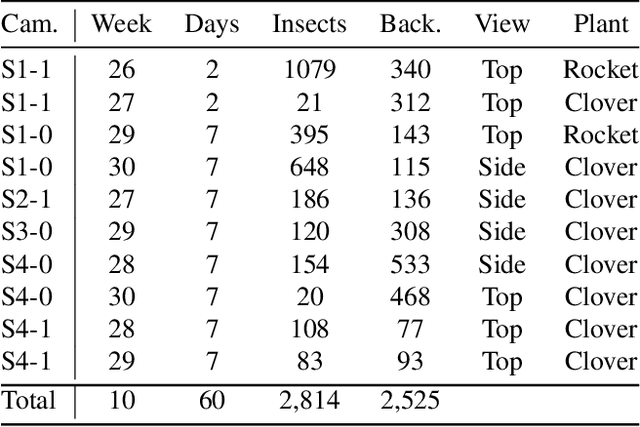

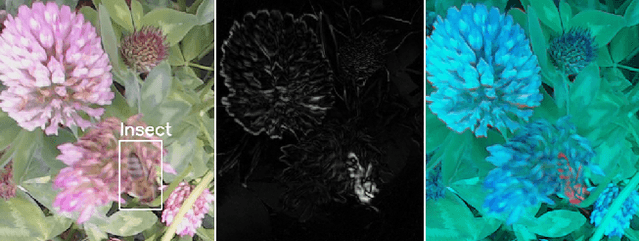

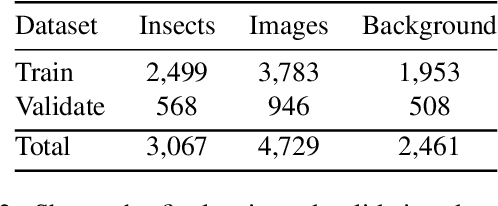

Abstract: Insects as pollinators play a key role in ecosystem management and world food production. However, insect populations are declining, creating a global need for insect monitoring. Existing methods analyze video or time-lapse images of insects in nature, but the analysis is challenging because insects are small objects in complex and dynamic scenes of natural vegetation. This paper provides a dataset of primarily honeybees visiting three different plant species during two months of the summer period. The dataset consists of more than 700,000 time-lapse images from multiple cameras, including more than 100,000 annotated images. The paper presents a new pipeline for detecting insects in time-lapse RGB images. The pipeline consists of a two-step process. First, the time-lapse RGB images are preprocessed to enhance insects in the images. We propose a new preprocessing enhancement method: Motion-Informed-enhancement. The technique uses motion and colors to enhance insects in images. The enhanced images are subsequently fed into a convolutional neural network (CNN) object detector. Motion-Informed-enhancement improves the deep learning object detectors You Only Look Once (YOLO) and Faster Region-based Convolutional Neural Networks (Faster R-CNN). Using Motion-Informed-enhancement, the YOLO detector improves its average micro F1-score from 0.49 to 0.71, and the Faster R-CNN detector improves its average micro F1-score from 0.32 to 0.56 on our dataset. Our datasets are published at: https://vision.eng.au.dk/mie/
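For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a micro-averaged F1-score is commonly computed: true positives, false positives, and false negatives are pooled across groups before precision and recall are combined. The function name `micro_f1` and the example counts are hypothetical, and the abstract does not state how the paper averages its scores, so this illustrates the general metric only.

```python
from typing import Iterable, Tuple


def micro_f1(counts: Iterable[Tuple[int, int, int]]) -> float:
    """Micro-averaged F1 from (tp, fp, fn) tuples, one per group.

    A group could be a class or a camera; that choice is an assumption
    here and is not taken from the paper.
    """
    tp = fp = fn = 0
    for group_tp, group_fp, group_fn in counts:
        tp += group_tp
        fp += group_fp
        fn += group_fn
    # F1 = 2*TP / (2*TP + FP + FN), evaluated on the pooled counts.
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Made-up detection counts for two groups (not data from the paper).
example_counts = [(8, 2, 2), (2, 0, 4)]
score = micro_f1(example_counts)
print(f"micro F1 = {score:.3f}")
```

Because the counts are pooled first, groups with many detections dominate the score, which differs from a macro average that weights every group equally.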

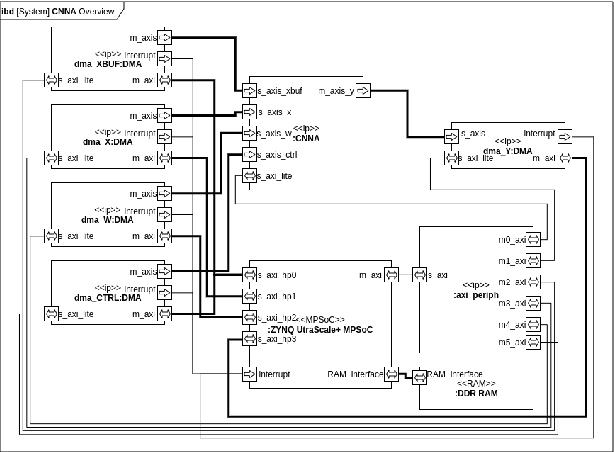

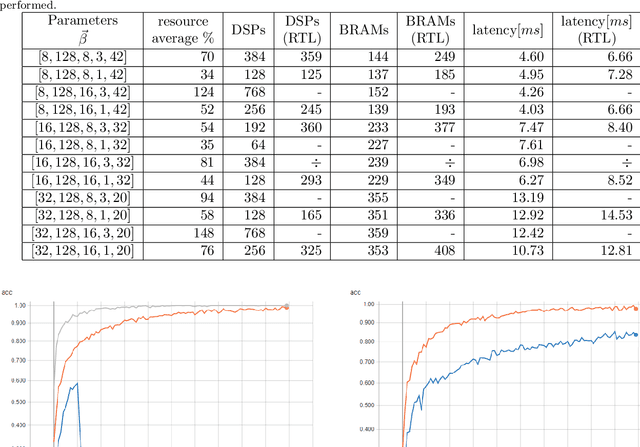

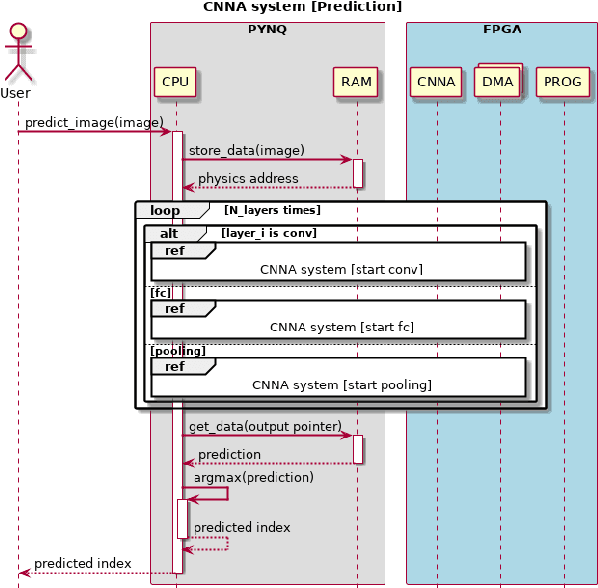

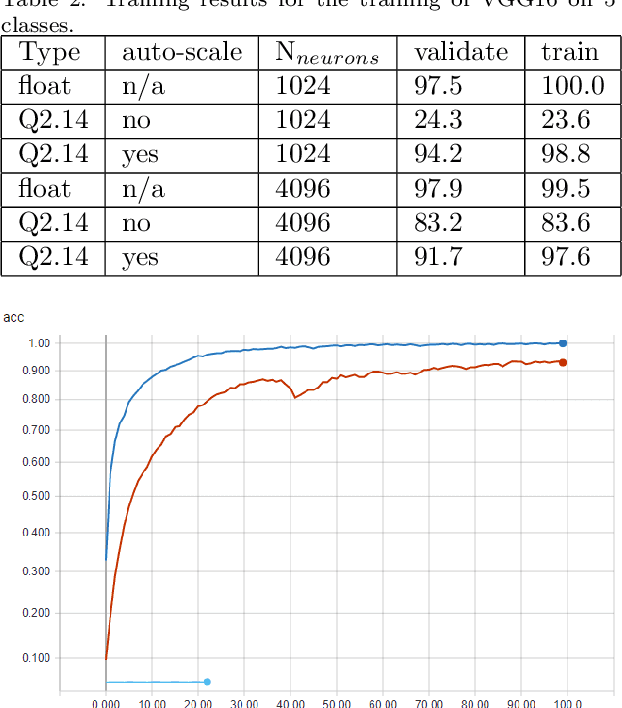

A generic and efficient convolutional neural network accelerator using HLS for a system on chip design

Apr 27, 2020

Abstract: This paper presents a generic convolutional neural network accelerator (CNNA) for a system on chip design (SoC). The goal was to accelerate inference of different deep learning networks on an embedded SoC platform. The presented CNNA has a scalable architecture which uses high level synthesis (HLS) and SystemC for the hardware accelerator. It is able to accelerate any CNN exported from Python and supports a combination of convolutional, max-pooling, and fully connected layers. A training method using fixed-point quantized weights is proposed and presented in the paper. The CNNA is template-based, enabling it to scale for different targets of the Xilinx ZYNQ platform. This approach enables design space exploration, which makes it possible to explore several configurations of the CNNA during C- and RTL-simulation, fitting it to the desired platform and model. The convolutional neural network VGG16 was used to test the solution on a Xilinx Ultra96 board. The result gave a high accuracy in training with an auto-scaled fixed-point Q2.14 format compared to a similar floating-point model. It was able to perform inference in 2.0 seconds, while having an average power consumption of 2.63 W, which corresponds to a power efficiency of 6.0 GOPS/W for the CNN accelerator.
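As an illustration of the fixed-point format named above, here is a minimal sketch of rounding a value to Q2.14. It assumes the common reading of the notation: a 16-bit signed word with 2 integer bits (sign included) and 14 fractional bits, giving a range of roughly -2 to just under 2. Q-notation conventions vary, the function names are hypothetical, and the paper's auto-scaling step is not described in the abstract and is not modeled here.

```python
FRAC_BITS = 14                 # fractional bits in the assumed Q2.14 layout
SCALE = 1 << FRAC_BITS         # 2**14 = 16384 steps per unit
Q_MIN = -(1 << 15)             # smallest 16-bit signed code, represents -2.0
Q_MAX = (1 << 15) - 1          # largest 16-bit signed code, just under 2.0


def quantize_q2_14(x: float) -> int:
    """Round x to the nearest Q2.14 code, saturating at the 16-bit limits."""
    q = round(x * SCALE)
    return max(Q_MIN, min(Q_MAX, q))


def dequantize_q2_14(q: int) -> float:
    """Convert a Q2.14 integer code back to a float."""
    return q / SCALE


# Example weights, chosen only for illustration.
for w in (0.5, -1.0, 0.1, 3.0):
    code = quantize_q2_14(w)
    print(w, code, dequantize_q2_14(code))
```

Values outside the representable range saturate instead of wrapping around, which is a design choice of this sketch; the resolution of the format is 2^-14, about 0.000061 per step.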
