Kevin Sim

Beyond the Hype: Benchmarking LLM-Evolved Heuristics for Bin Packing

Jan 20, 2025

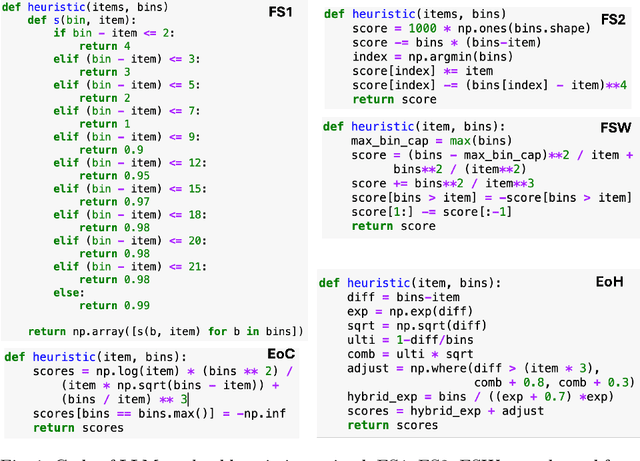

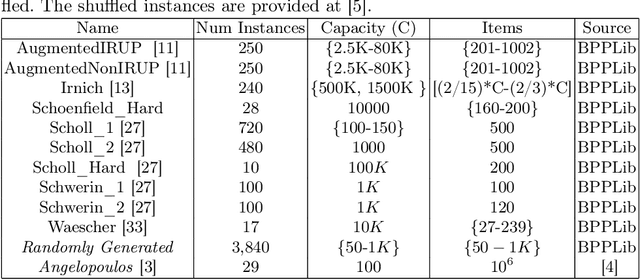

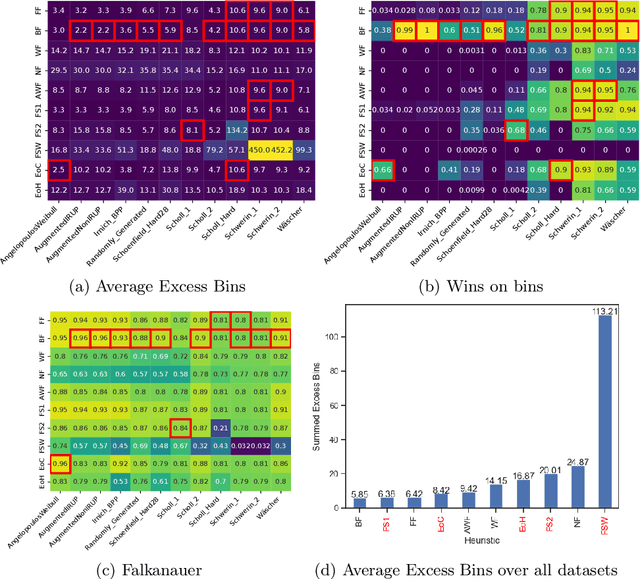

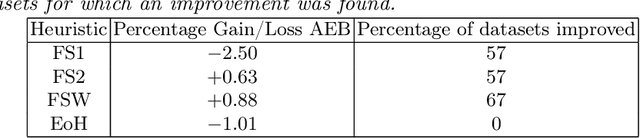

Abstract: Coupling Large Language Models (LLMs) with Evolutionary Algorithms has recently shown significant promise as a technique to design new heuristics that outperform existing methods, particularly in the field of combinatorial optimisation. An escalating arms race is both rapidly producing new heuristics and improving the efficiency of the processes evolving them. However, driven by the desire to quickly demonstrate the superiority of new approaches, evaluation of the new heuristics produced for a specific domain is often cursory: testing on very few datasets in which instances all belong to a specific class from the domain, and on few instances per class. Taking bin-packing as an example, to the best of our knowledge we conduct the first rigorous benchmarking study of new LLM-generated heuristics, comparing them to well-known existing heuristics across a large suite of benchmark instances using three performance metrics. For each heuristic, we then evolve new instances won by the heuristic and perform an instance space analysis to understand where in the feature space each heuristic performs well. We show that most of the LLM heuristics do not generalise well when evaluated across a broad range of benchmarks in contrast to existing simple heuristics, and suggest that any gains from generating very specialist heuristics that only work in small areas of the instance space need to be weighed carefully against the considerable cost of generating these heuristics.
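As a rough illustration of the kind of "existing simple heuristics" such a benchmark compares against, the sketch below implements First Fit and Best Fit for online bin packing and counts the bins each one opens. The abstract does not name the baselines or the three metrics used, so the choice of these two heuristics, the function names, and the use of bin count as the score are all assumptions made for illustration.

```python
from typing import List


def first_fit(items: List[float], capacity: float = 1.0) -> int:
    """Put each item in the first open bin with room; return bins used."""
    free: List[float] = []  # remaining space in each open bin
    for size in items:
        for i, space in enumerate(free):
            if size <= space + 1e-9:  # small tolerance for float sizes
                free[i] = space - size
                break
        else:
            # No open bin can take the item, so open a new one.
            free.append(capacity - size)
    return len(free)


def best_fit(items: List[float], capacity: float = 1.0) -> int:
    """Put each item in the fullest bin that still has room; return bins used."""
    free: List[float] = []
    for size in items:
        candidates = [i for i, space in enumerate(free) if size <= space + 1e-9]
        if candidates:
            tightest = min(candidates, key=lambda j: free[j])
            free[tightest] -= size
        else:
            free.append(capacity - size)
    return len(free)


# Hypothetical instance on which the two heuristics disagree.
instance = [4, 7, 3, 6]
print(first_fit(instance, capacity=10), best_fit(instance, capacity=10))
```

Even on this four-item instance the two heuristics use different numbers of bins, which is the kind of per-instance difference that a broad benchmark suite is meant to expose.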

Evaluating the Robustness of Deep-Learning Algorithm-Selection Models by Evolving Adversarial Instances

Jun 24, 2024

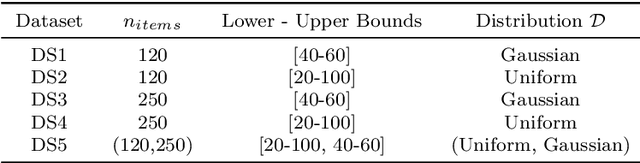

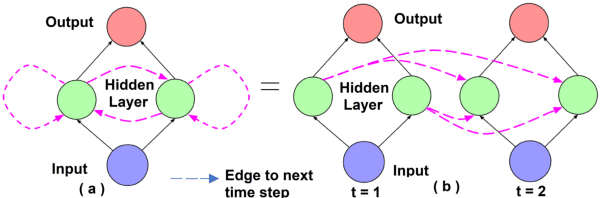

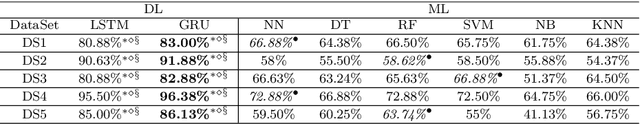

Abstract: Deep neural networks (DNN) are increasingly being used to perform algorithm-selection in combinatorial optimisation domains, particularly as they accommodate input representations which avoid designing and calculating features. Mounting evidence from domains that use images as input shows that deep convolutional networks are vulnerable to adversarial samples, in which a small perturbation of an instance can cause the DNN to misclassify. However, it remains unknown whether deep recurrent networks (DRN), which have recently shown promise as algorithm-selectors in the bin-packing domain, are equally vulnerable. We use an evolutionary algorithm (EA) to find perturbations of instances from two existing benchmarks for online bin packing that cause trained DRNs to misclassify: adversarial samples are successfully generated from up to 56% of the original instances depending on the dataset. Analysis of the new misclassified instances sheds light on the 'fragility' of some training instances, i.e. instances where it is trivial to find a small perturbation that results in a misclassification, and the factors that influence this. Finally, the method generates a large number of new instances misclassified with a wide variation in confidence, providing a rich new source of training data to create more robust models.

To Switch or not to Switch: Predicting the Benefit of Switching between Algorithms based on Trajectory Features

Feb 17, 2023

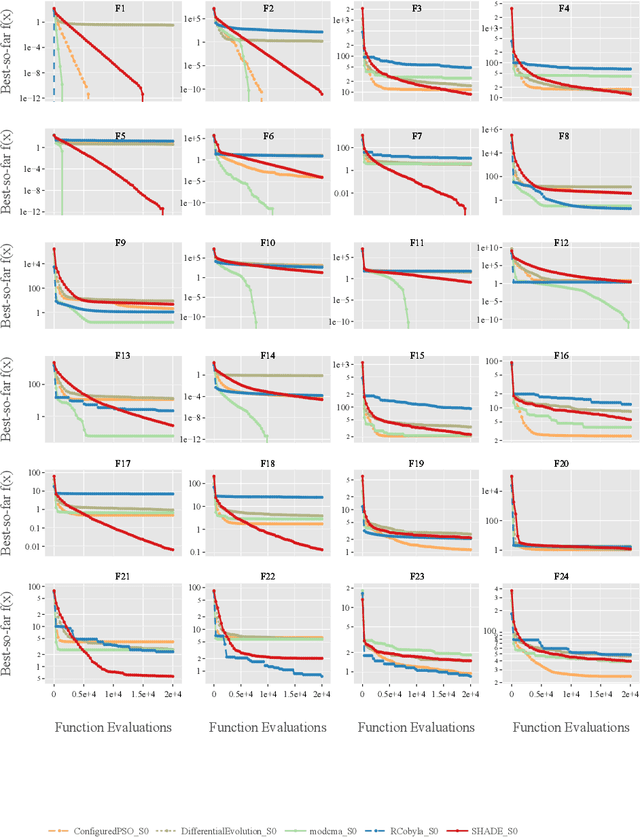

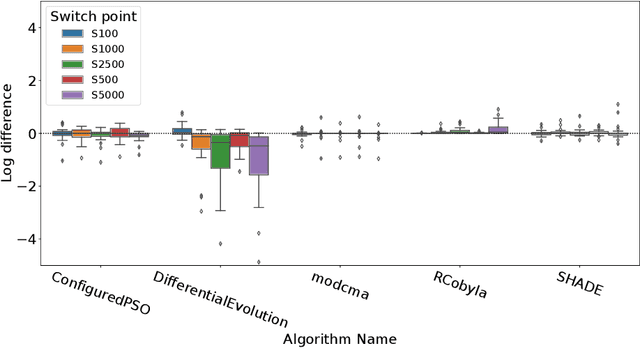

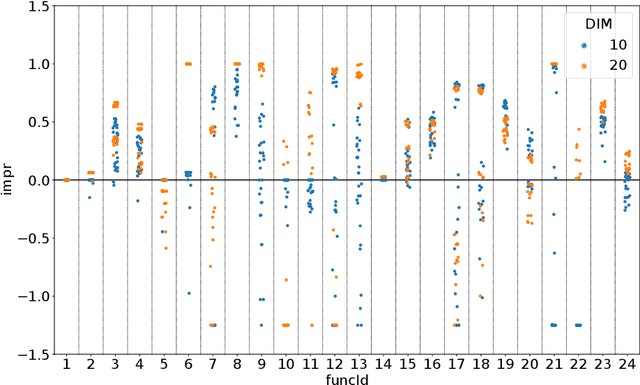

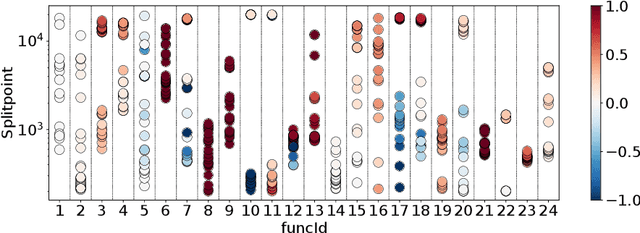

Abstract: Dynamic algorithm selection aims to exploit the complementarity of multiple optimization algorithms by switching between them during the search. While these kinds of dynamic algorithms have been shown to have potential to outperform their component algorithms, it is still unclear how this potential can best be realized. One promising approach is to make use of landscape features to enable a per-run trajectory-based switch. Here, the samples seen by the first algorithm are used to create a set of features which describe the landscape from the perspective of the algorithm. These features are then used to predict which algorithm to switch to. In this work, we extend this per-run trajectory-based approach to consider a wide variety of potential points at which to perform the switch. We show that using a sliding window to capture the local landscape features yields information which can be used to predict whether a switch at that point would be beneficial to future performance. By analyzing the resulting models, we identify which features are most important to these predictions. Finally, by evaluating the importance of features and comparing these values between multiple algorithms, we show clear differences in the way the second algorithm interacts with the local landscape features found before the switch.
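The sliding-window idea can be sketched as follows: slide a fixed-length window along the fitness values seen by the first algorithm and summarise each window, so that a model could later predict whether switching at the window's end point is worthwhile. The abstract does not list the landscape features used, so the three summary statistics below are simple stand-ins, and the function name and parameters are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List


def window_features(fitness: List[float], window: int, step: int = 1) -> List[Dict[str, float]]:
    """Summarise each sliding window of a fitness trajectory (minimisation).

    Each entry describes one candidate switch point, located at the
    evaluation index where the window ends.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    features = []
    for start in range(0, len(fitness) - window + 1, step):
        chunk = fitness[start:start + window]
        features.append({
            "switch_point": start + window,       # evaluations used so far
            "mean": mean(chunk),                  # average fitness in the window
            "std": pstdev(chunk),                 # spread of fitness in the window
            "improvement": chunk[0] - min(chunk), # progress made inside the window
        })
    return features


# Hypothetical trajectory of best-so-far sampled fitness values.
for row in window_features([10, 8, 9, 5, 5], window=3):
    print(row)
```

Each row could then be paired with the observed benefit of switching at that point to form a training set; that pairing step is not shown here.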

Automated Algorithm Selection: from Feature-Based to Feature-Free Approaches

Mar 24, 2022

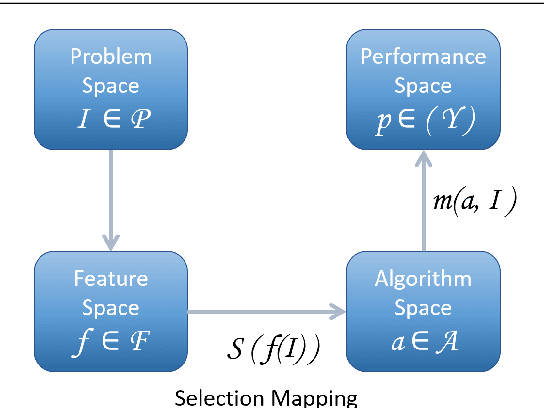

Abstract: We propose a novel technique for algorithm-selection, applicable to optimisation domains in which there is implicit sequential information encapsulated in the data, e.g., in online bin-packing. Specifically, we train two types of recurrent neural networks to predict a packing heuristic in online bin-packing, selecting from four well-known heuristics. As input, the RNN methods only use the sequence of item-sizes. This contrasts with typical approaches to algorithm-selection which require a model to be trained using domain-specific instance features that need to be first derived from the input data. The RNN approaches are shown to be capable of achieving within 5% of the oracle performance on between 80.88% and 97.63% of the instances, depending on the dataset. They are also shown to outperform classical machine learning models trained using derived features. Finally, we hypothesise that the proposed methods perform well when the instances exhibit some implicit structure that results in discriminatory performance with respect to a set of heuristics. We test this hypothesis by generating fourteen new datasets with increasing levels of structure, and show that there is a critical threshold of structure required before algorithm-selection delivers benefit.
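One way to read the "within 5% of the oracle" figure is sketched below: for each instance, the oracle is the best result any heuristic achieves, and a selector scores a hit when its chosen heuristic is no more than 5% worse. The abstract does not state the performance measure, so this sketch assumes a lower-is-better score such as bins used; the heuristic labels and the function name are hypothetical.

```python
from typing import Dict, List


def fraction_within_oracle(
    results: List[Dict[str, float]],
    chosen: List[str],
    tolerance: float = 0.05,
) -> float:
    """Fraction of instances where the chosen heuristic is within
    `tolerance` of the best heuristic (assumes lower scores are better)."""
    if not results or len(results) != len(chosen):
        raise ValueError("results and chosen must be non-empty and equal in length")
    hits = 0
    for per_heuristic, pick in zip(results, chosen):
        oracle = min(per_heuristic.values())  # best achievable on this instance
        if per_heuristic[pick] <= (1 + tolerance) * oracle:
            hits += 1
    return hits / len(results)


# Hypothetical per-instance scores for two heuristics, plus a selector's picks.
scores = [{"FF": 100, "BF": 98}, {"FF": 50, "BF": 60}]
picks = ["FF", "BF"]
print(fraction_within_oracle(scores, picks))
```

In this toy example the first pick is about 2% worse than the oracle and counts as a hit, while the second is 20% worse and does not.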
