Kevan Yuen

Looking at Hands in Autonomous Vehicles: A ConvNet Approach using Part Affinity Fields

Apr 03, 2018

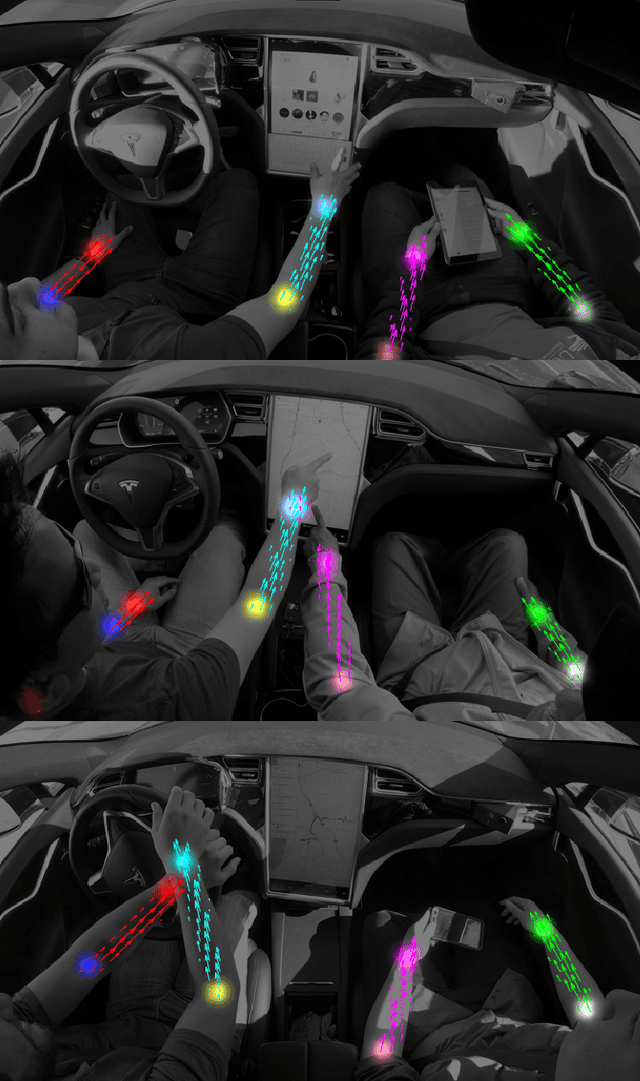

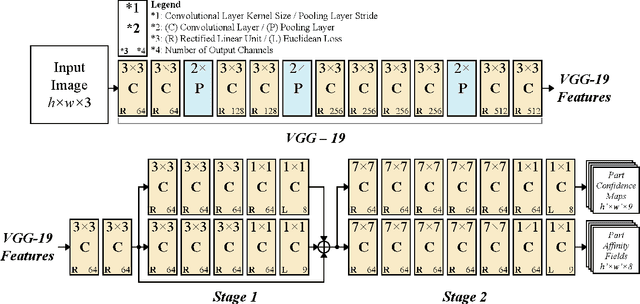

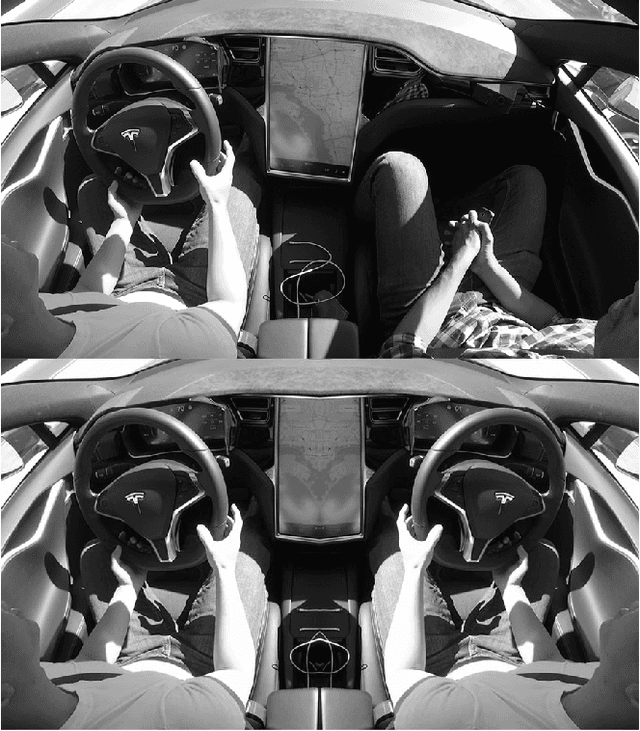

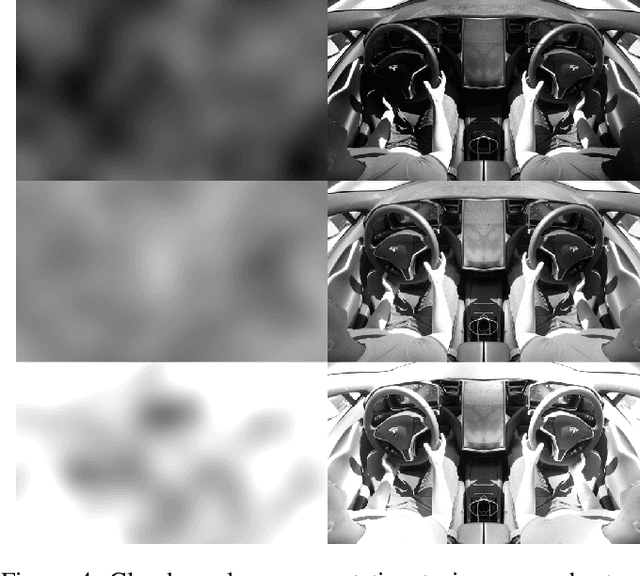

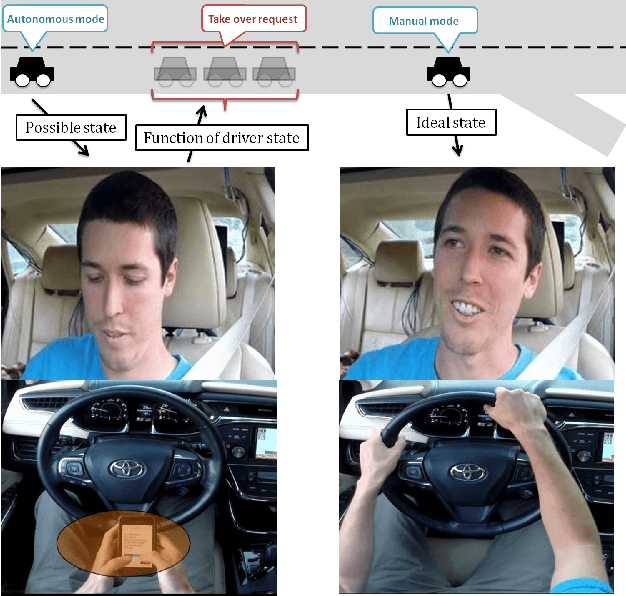

Abstract: In the context of autonomous driving, where the driver may need to take over when the computer issues a takeover request, a key step towards driving safety is monitoring the hands to ensure the driver is ready for such a request. This work focuses on the first step of this process, which is to locate the hands. Such a system must work in real time and under varying, harsh lighting conditions. This paper introduces a fast ConvNet approach based on the original OpenPose work for full-body joint estimation. The network is modified to have fewer parameters and retrained on our own daytime naturalistic autonomous driving dataset to estimate joint and affinity heatmaps for the driver's and passenger's wrists and elbows, for a total of 8 joint classes and part affinity fields between each wrist-elbow pair. The approach runs in real time on real-world data at 40 fps on multiple drivers and passengers. The system is extensively evaluated both quantitatively and qualitatively, showing at least 95% detection performance on joint localization and arm-angle estimation.
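As a rough illustration of the arm-angle quantity mentioned in the abstract, the sketch below computes the orientation of the elbow-to-wrist vector from two detected joint locations. The function name `arm_angle_deg`, the `(x, y)` pixel convention with y pointing down, and the choice of the image x-axis as the reference are all assumptions made here for illustration; the abstract does not state how the paper defines or measures the angle.

```python
import math


def arm_angle_deg(elbow, wrist):
    """Angle of the elbow-to-wrist vector in degrees, in the range (-180, 180].

    Both points are (x, y) pixel coordinates (hypothetical convention).
    The angle is measured from the positive image x-axis; because image y
    grows downward, positive angles point towards the bottom of the image.
    """
    dx = wrist[0] - elbow[0]
    dy = wrist[1] - elbow[1]
    return math.degrees(math.atan2(dy, dx))


# Example: a wrist directly to the left of the elbow.
angle = arm_angle_deg(elbow=(100, 200), wrist=(50, 200))
```

Comparing such an angle between a predicted and an annotated wrist-elbow pair is one plausible way to score arm-angle estimation, though the paper's actual metric may differ.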

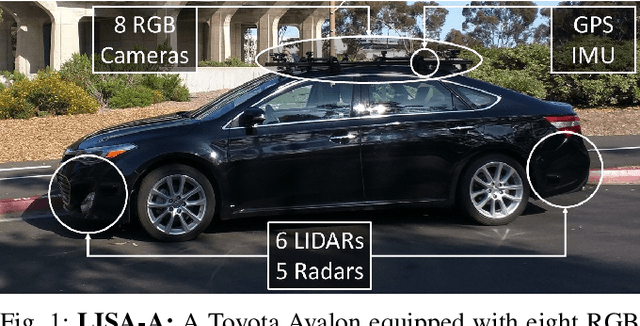

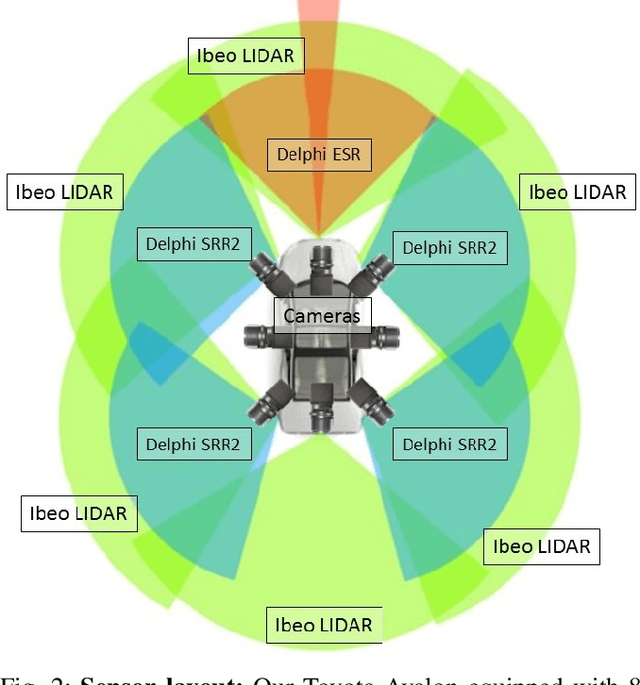

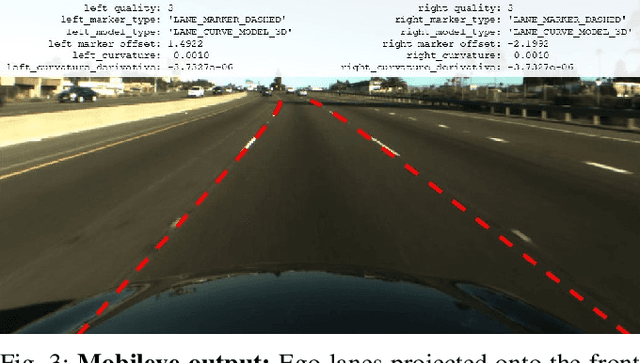

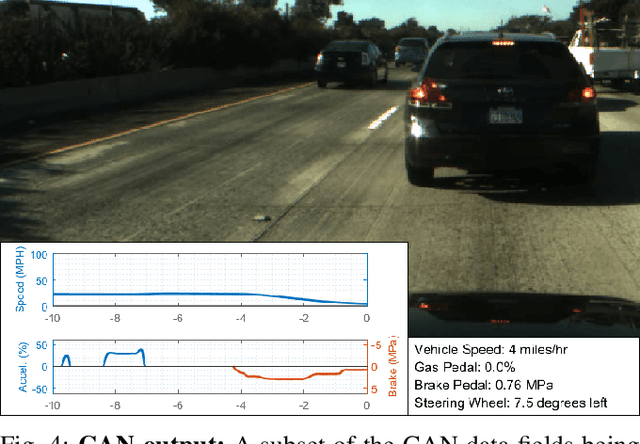

A Multimodal, Full-Surround Vehicular Testbed for Naturalistic Studies and Benchmarking: Design, Calibration and Deployment

Mar 20, 2018

Abstract: Recent progress in autonomous and semi-autonomous driving has been made possible in part through an assortment of sensors that provide the intelligent agent with an enhanced perception of its surroundings. It has been clear for quite some time that for intelligent vehicles to function effectively in all situations and conditions, a fusion of different sensor technologies is essential. Consequently, the availability of synchronized multi-sensory data streams is necessary to promote the development of fusion-based algorithms for low-, mid- and high-level semantic tasks. In this paper, we provide a comprehensive description of LISA-A: our heavily sensorized, full-surround testbed capable of providing high-quality data from a slew of synchronized and calibrated sensors such as cameras, LIDARs, radars, and the IMU/GPS. The vehicle has recorded over 100 hours of real-world data across a very diverse set of weather, traffic and daylight conditions. All captured data is accurately calibrated and synchronized using timestamps, and stored safely on high-performance servers mounted inside the vehicle itself. Details on the testbed instrumentation, sensor layout, sensor outputs, calibration and synchronization are described in this paper.
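To make the idea of timestamp-based synchronization concrete, here is a minimal sketch that pairs a query time (for example, a camera frame) with the closest sample in another sensor's sorted timestamp list. Nearest-timestamp matching is an assumption chosen for illustration, and `nearest_index` is a hypothetical helper; the abstract does not describe the synchronization scheme LISA-A actually uses.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry in the sorted list `timestamps` closest to time `t`.

    Ties are resolved in favour of the earlier sample.
    """
    if not timestamps:
        raise ValueError("timestamps must not be empty")
    i = bisect_left(timestamps, t)  # first index with timestamps[i] >= t
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbour is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


# Example: match a camera frame at 0.12 s to LIDAR sweeps (times in seconds).
lidar_times = [0.00, 0.10, 0.20, 0.30]
match = nearest_index(lidar_times, 0.12)
```

A real pipeline would probably also reject matches whose time gap exceeds some tolerance, which this sketch leaves out.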

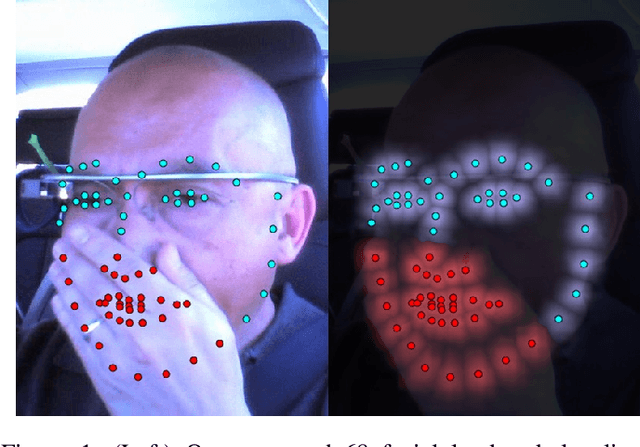

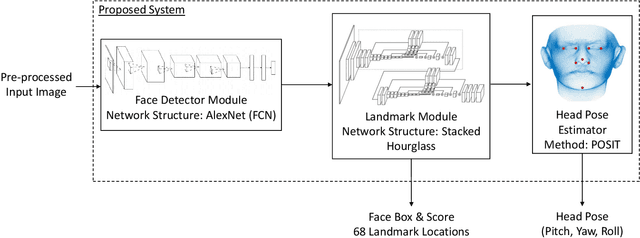

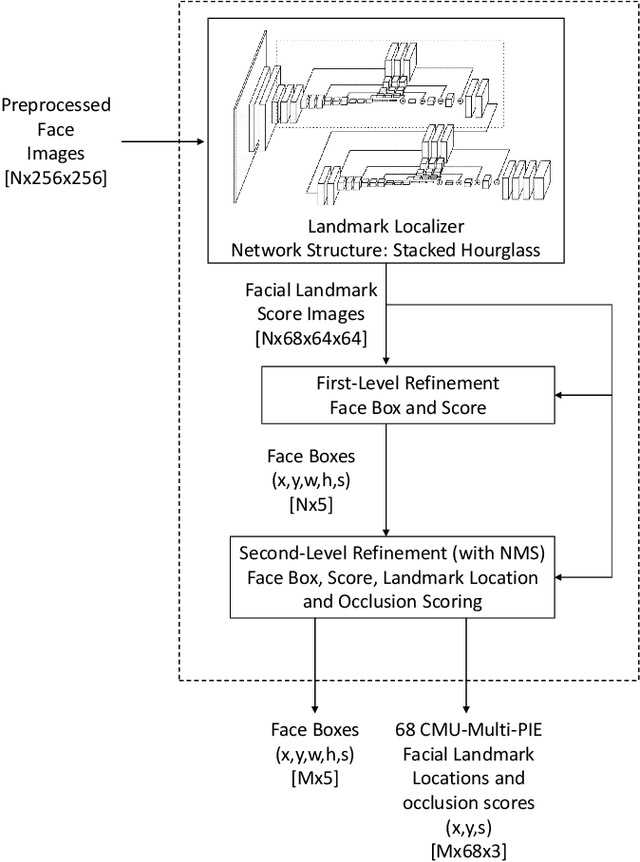

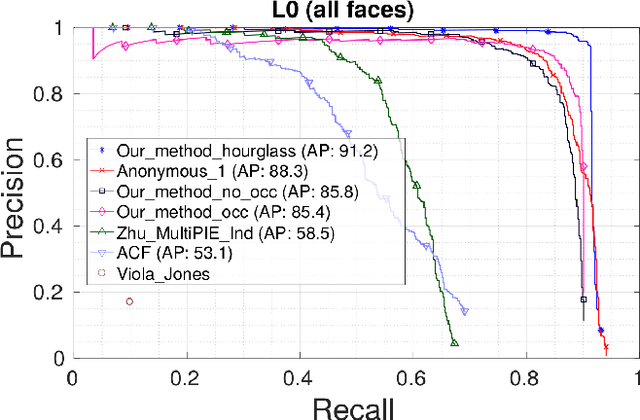

An Occluded Stacked Hourglass Approach to Facial Landmark Localization and Occlusion Estimation

Feb 05, 2018

Abstract: A key step towards driver safety is observing the driver's activities, and the face is central to this process: it provides information such as head pose, blink rate, yawning, and talking to a passenger, which can in turn help derive higher-level information such as distraction, drowsiness, intent, and where the driver is looking. In the context of driving safety, it is important for the system to perform robust estimation under harsh lighting and occlusion, and also to detect when occlusion occurs so that information predicted from occluded parts of the face can be taken into account properly. This paper introduces the Occluded Stacked Hourglass, based on the original Stacked Hourglass network for body pose joint estimation, which is retrained to process a detected face window and output 68 occlusion heat maps, each corresponding to a facial landmark. Landmark locations, occlusion levels, and a refined face detection score used to reject false positives are extracted from these heat maps. Using the facial landmark locations, features such as head pose and eye/mouth openness can be extracted to derive driver attention and activity. The system is evaluated for face detection, head pose, and occlusion estimation on various datasets in the wild, both quantitatively and qualitatively, and shows state-of-the-art results.
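One common way to read a landmark position out of a heat map is to take the location of its strongest response, and the sketch below illustrates that under stated assumptions. The helper `heatmap_peak` is hypothetical, and treating the peak value as a confidence signal is an assumption; the abstract does not specify how the paper derives landmark locations or occlusion levels from its 68 heat maps.

```python
def heatmap_peak(heatmap):
    """Return ((row, col), value) of the strongest response in a 2-D heat map.

    `heatmap` is a list of rows of numbers. If several cells share the
    maximum value, the first one in row-major order is returned.
    """
    best_val = float("-inf")
    best_pos = None
    for r, row in enumerate(heatmap):
        for c, v in enumerate(row):
            if v > best_val:
                best_val, best_pos = v, (r, c)
    return best_pos, best_val


# Example: a toy 3x3 heat map for a single landmark.
toy_map = [
    [0.0, 0.1, 0.0],
    [0.2, 0.9, 0.3],
    [0.0, 0.1, 0.0],
]
position, score = heatmap_peak(toy_map)
```

Applying this to each of the 68 maps would give one candidate location per landmark, with the peak value available as a rough per-landmark score.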

Dynamics of Driver's Gaze: Explorations in Behavior Modeling & Maneuver Prediction

Jan 31, 2018

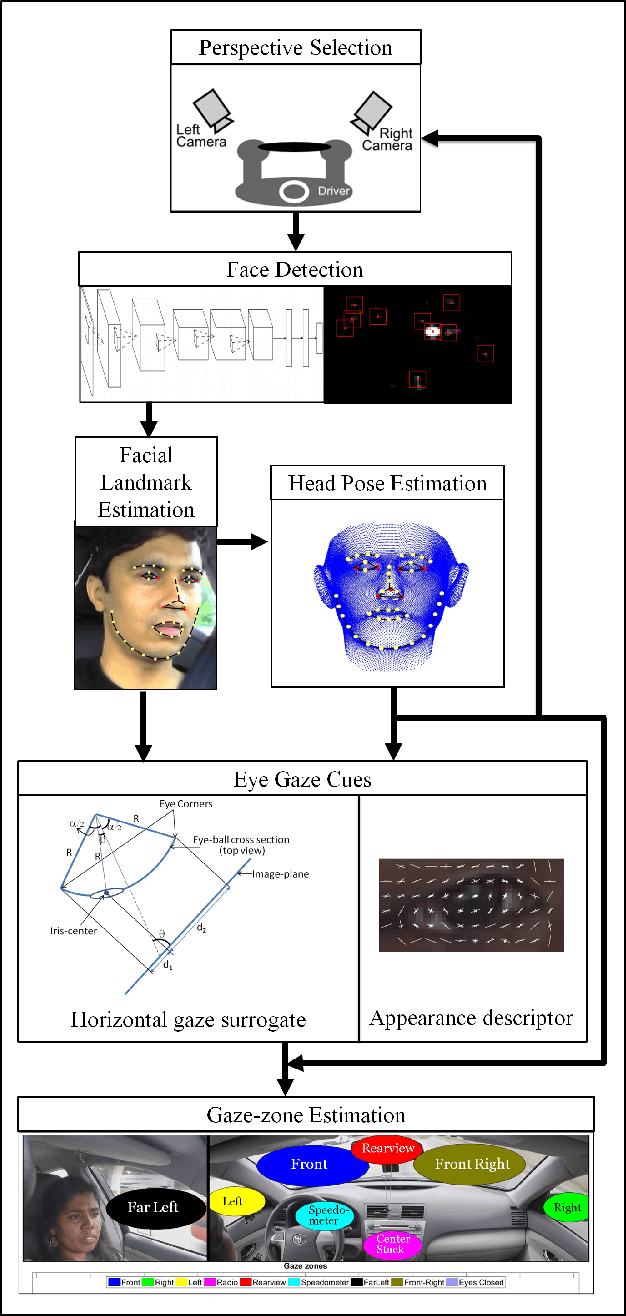

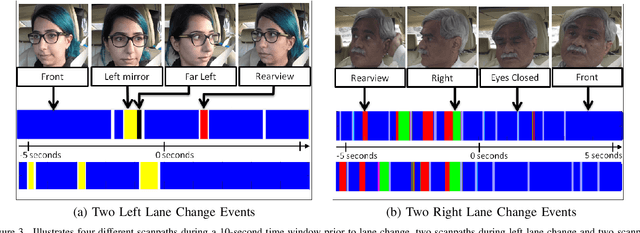

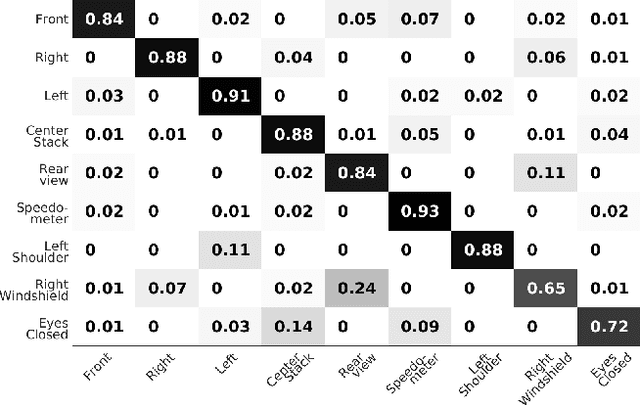

Abstract: The study and modeling of a driver's gaze dynamics is important because whether and how the driver is monitoring the driving environment is vital for driver assistance in manual mode, for take-over requests in highly automated mode, and for semantic perception of the surround in fully autonomous mode. We developed a machine-vision-based framework to classify the driver's gaze into context-rich zones of interest and to model gaze behavior by representing gaze dynamics over a time period using gaze accumulation, glance duration and glance frequencies. As a use case, we explore the driver's gaze dynamics during maneuvers executed in freeway driving, namely left lane changes, right lane changes and lane keeping. It is shown that condensing gaze dynamics into durations and frequencies leads to recurring patterns based on driver activities. Furthermore, modeling these patterns shows predictive power in maneuver detection up to a few hundred milliseconds in advance.
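The three descriptors named in the abstract (gaze accumulation, glance duration and glance frequency) can be sketched from a per-frame sequence of gaze-zone labels. The definitions below are assumptions for illustration: accumulation is taken as the fraction of frames spent in a zone, a glance as an uninterrupted run of frames in the same zone, and frequency as the number of such runs in the window. The function name `gaze_descriptors` and the zone labels are hypothetical, and the paper's exact definitions may differ.

```python
from collections import defaultdict
from itertools import groupby


def gaze_descriptors(zones, fps):
    """Summarise per-frame gaze-zone labels over one time window.

    Returns, for each zone, its accumulation (fraction of frames), glance
    frequency (number of uninterrupted runs) and mean glance duration in
    seconds.
    """
    total = len(zones)
    frames = defaultdict(int)   # frames spent in each zone
    glances = defaultdict(int)  # uninterrupted runs per zone
    for zone, run in groupby(zones):
        frames[zone] += len(list(run))
        glances[zone] += 1
    return {
        zone: {
            "accumulation": frames[zone] / total,
            "glance_frequency": glances[zone],
            "mean_glance_duration_s": frames[zone] / glances[zone] / fps,
        }
        for zone in frames
    }


# Example: a one-second window at 10 fps with a brief mirror check.
window = ["front"] * 6 + ["left_mirror"] * 2 + ["front"] * 2
stats = gaze_descriptors(window, fps=10)
```

In this example the driver makes two glances to the front and one to the left mirror; feature vectors built from such per-zone statistics are the kind of input a maneuver classifier could consume.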
