Ken Nakae

MarmoNet: a pipeline for automated projection mapping of the common marmoset brain from whole-brain serial two-photon tomography

Aug 02, 2019

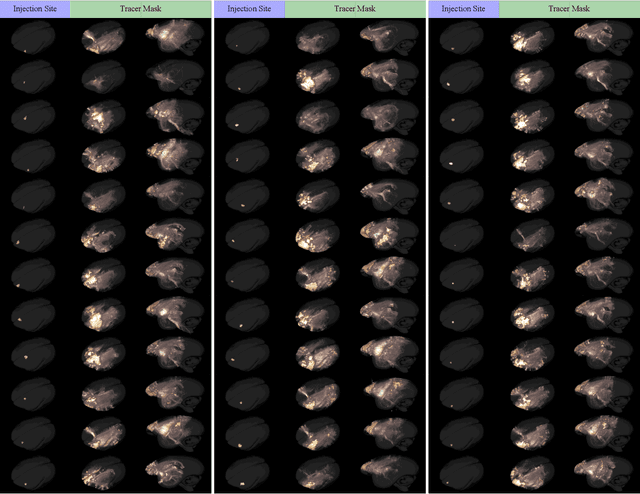

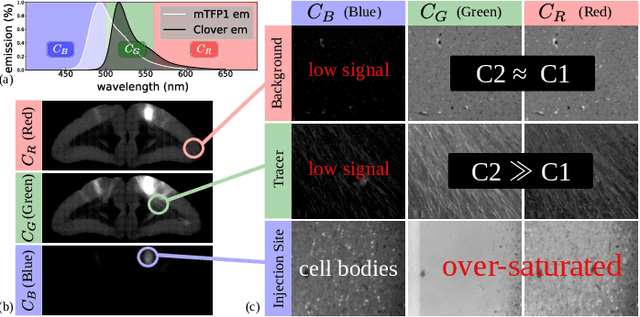

Abstract: Understanding the connectivity of the brain is an important prerequisite for understanding how the brain processes information. In the Brain/MINDS project, a connectivity study on marmoset brains uses two-photon microscopy fluorescence images of axonal projections to collect neuron connectivity from defined brain regions at the mesoscopic scale. Processing the images requires detecting and segmenting the axonal tracer signal. The objective is to detect as much tracer signal as possible without misclassifying other background structures as signal. This is challenging because imaging noise, a cluttered image background, distortions, and varying image contrast all interfere with detection. We are developing MarmoNet, a pipeline that processes and analyzes tracer image data of the common marmoset brain. The pipeline combines state-of-the-art machine learning techniques based on convolutional neural networks (CNNs) with image registration techniques to extract and map all relevant information robustly. The pipeline processes new images in a fully automated way. This report introduces the current state of the tracer signal analysis part of the pipeline.

Distributional Smoothing with Virtual Adversarial Training

Jun 11, 2016

Abstract: We propose local distributional smoothness (LDS), a new notion of smoothness for statistical models that can be used as a regularization term to promote the smoothness of the model distribution. We name the LDS-based regularization virtual adversarial training (VAT). The LDS of a model at an input datapoint is defined as the KL-divergence-based robustness of the model distribution against local perturbation around the datapoint. VAT resembles adversarial training, but distinguishes itself in that it determines the adversarial direction from the model distribution alone, without using label information, which makes it applicable to semi-supervised learning. The computational cost of VAT is relatively low: for neural networks, the approximated gradient of the LDS can be computed with no more than three pairs of forward and back propagations. When we applied our technique to supervised and semi-supervised learning on the MNIST dataset, it outperformed all training methods other than the current state-of-the-art method, which is based on a highly advanced generative model. We also applied our method to SVHN and NORB and confirmed its superior performance over the current state-of-the-art semi-supervised method on these datasets.
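The low cost of finding the virtual adversarial direction comes from a power-iteration approximation, which can be illustrated on a toy model. The following is a minimal sketch, not the authors' implementation. It assumes a linear-softmax model p(y|x) = softmax(Wx), for which the gradient of KL(p(y|x) || p(y|x + r)) with respect to the perturbation r has the closed form W^T(q - p) that a backward pass would otherwise supply. All function names and the default values of eps, xi, and the number of power iterations are hypothetical choices for illustration.

```python
import math
import random

# Hypothetical toy setup: a linear-softmax model p(y | x) = softmax(W x),
# with W given as a list of rows (one row per class).


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict(W, x):
    """Model distribution p(y | x) = softmax(W x)."""
    return softmax([sum(w * xj for w, xj in zip(row, x)) for row in W])


def kl(p, q):
    """KL(p || q) for two categorical distributions."""
    return sum(pk * math.log(pk / qk) for pk, qk in zip(p, q) if pk > 0)


def normalize(v):
    """Scale v to unit Euclidean norm."""
    n = math.sqrt(sum(vj * vj for vj in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [vj / n for vj in v]


def grad_kl_wrt_r(W, x, r, p):
    """Gradient of KL(p || p(y | x + r)) with respect to r.

    For the linear-softmax model this equals W^T (q - p) with
    q = p(y | x + r); a neural network would obtain it by backpropagation.
    """
    q = predict(W, [xj + rj for xj, rj in zip(x, r)])
    diff = [qk - pk for qk, pk in zip(q, p)]
    return [sum(W[k][j] * diff[k] for k in range(len(W))) for j in range(len(x))]


def virtual_adversarial_perturbation(W, x, eps=0.5, xi=1e-2, n_power=1, seed=0):
    """Approximate r_vadv = argmax_{||r|| <= eps} KL(p(y|x) || p(y|x+r)).

    Returns (r_vadv, KL at x + r_vadv). No label is used anywhere.
    """
    rng = random.Random(seed)
    p = predict(W, x)  # current model distribution, treated as a constant
    d = normalize([rng.gauss(0.0, 1.0) for _ in x])  # random start direction
    for _ in range(n_power):
        # Power-iteration step: probe a small step xi * d, follow the gradient.
        g = grad_kl_wrt_r(W, x, [xi * dj for dj in d], p)
        d = normalize(g)
    r_vadv = [eps * dj for dj in d]
    q_vadv = predict(W, [xj + rj for xj, rj in zip(x, r_vadv)])
    return r_vadv, kl(p, q_vadv)


# Usage with a hypothetical two-class model over 3-dimensional inputs.
W = [[1.0, 2.0, -1.0], [0.5, -1.0, 2.0]]
x = [0.3, -0.2, 0.1]
r_vadv, kl_vadv = virtual_adversarial_perturbation(W, x)
print(r_vadv, kl_vadv)
```

For two classes the returned direction is parallel to W[0] - W[1], because only the logit difference changes the prediction. In a neural network, `grad_kl_wrt_r` would be replaced by one backpropagation pass; since p comes from the model itself rather than from a label, the same computation applies to unlabeled inputs, which is what makes VAT usable for semi-supervised learning.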

Principal Sensitivity Analysis

Mar 11, 2015

Abstract: We present a novel algorithm, Principal Sensitivity Analysis (PSA), to analyze the knowledge of classifiers obtained from supervised machine learning techniques. In particular, we define the principal sensitivity map (PSM) as the direction in the input space to which the trained classifier is most sensitive, and use the analogously defined k-th PSM to define a basis for the input space. We train neural networks on artificial and real data and apply the algorithm to the resulting supervised classifiers. We then visualize the PSMs to demonstrate PSA's ability to decompose the knowledge acquired by the trained classifiers.
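To make the PSM definition concrete, here is a minimal sketch in Python. It rests on an assumption stated explicitly here: the sensitivity of a classifier score f along a unit direction v is taken to be the root-mean-square directional derivative over the data, so the first PSM is the leading eigenvector of K = mean_n grad f(x_n) grad f(x_n)^T and the k-th PSM is its k-th eigenvector. The function names, the power-iteration solver, and the toy score function are hypothetical illustrations, not the authors' code.

```python
import math
import random

# Hypothetical sketch of Principal Sensitivity Analysis (PSA).
# Assumption: sensitivity of a score f along a unit direction v is
# sqrt(mean_n (v . grad f(x_n))^2), so the PSMs are the eigenvectors of
# K = mean_n grad f(x_n) grad f(x_n)^T, ordered by eigenvalue.


def sensitivity_matrix(grads):
    """K = (1/N) * sum_n g_n g_n^T for a list of gradient vectors."""
    n, d = len(grads), len(grads[0])
    K = [[0.0] * d for _ in range(d)]
    for g in grads:
        for i in range(d):
            for j in range(d):
                K[i][j] += g[i] * g[j] / n
    return K


def matvec(K, v):
    """Matrix-vector product K v."""
    return [sum(kij * vj for kij, vj in zip(row, v)) for row in K]


def top_eigenpair(K, n_iter=500, seed=0):
    """Leading eigenpair of a symmetric PSD matrix by power iteration."""
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in K]
    norm = math.sqrt(sum(c * c for c in v))
    v = [c / norm for c in v]
    for _ in range(n_iter):
        w = matvec(K, v)
        norm = math.sqrt(sum(c * c for c in w))
        if norm == 0.0:
            break  # K has no remaining sensitive direction
        v = [c / norm for c in w]
    lam = sum(vi * wi for vi, wi in zip(v, matvec(K, v)))  # Rayleigh quotient
    return lam, v


def principal_sensitivity_maps(grad_fn, xs, k):
    """Return the top-k (sensitivity, PSM) pairs.

    grad_fn(x) must return the gradient of the classifier's score at x
    (for a neural network, one backward pass per sample).
    """
    K = sensitivity_matrix([grad_fn(x) for x in xs])
    d = len(K)
    maps = []
    for idx in range(k):
        lam, v = top_eigenpair(K, seed=idx)
        maps.append((math.sqrt(max(lam, 0.0)), v))
        # Deflate so the next PSM is orthogonal to those already found.
        K = [[K[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return maps


# Usage: toy score f(x) = x0**2 + 0.25 * x1**2, so grad f(x) = (2 x0, 0.5 x1).
xs = [(1.0, 1.0), (-1.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]
for s, v in principal_sensitivity_maps(lambda x: [2.0 * x[0], 0.5 * x[1]], xs, 2):
    print(round(s, 6), [round(c, 6) for c in v])
```

For this toy score, K = diag(4, 0.25), so the first PSM lies along x0 with sensitivity 2 and the second along x1 with sensitivity 0.5, each up to sign. Power iteration with deflation is used only to keep the sketch dependency-free; a library eigensolver such as `numpy.linalg.eigh` would serve the same purpose.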

Deep learning of fMRI big data: a novel approach to subject-transfer decoding

Jan 31, 2015

Abstract: As a technology to read brain states from measurable brain activity, brain decoding is widely applied in industry and the medical sciences. Despite high demand in these applications for a universal decoder that can be applied to all individuals simultaneously, large variation in brain activity across individuals has limited the scope of many studies to the development of individual-specific decoders. In this study, we used a deep neural network (DNN), a nonlinear hierarchical model, to construct a subject-transfer decoder. Our decoder is the first successful DNN-based subject-transfer decoder. When applied to a large-scale functional magnetic resonance imaging (fMRI) database, our DNN-based decoder achieved higher decoding accuracy than other baseline methods, including a support vector machine (SVM). To analyze the knowledge acquired by this decoder, we applied principal sensitivity analysis (PSA) to the decoder and visualized the discriminative features that are common to all subjects in the dataset. PSA successfully visualized the subject-independent features that contribute to the subject-transferability of the trained decoder.
