Keita Mason

Open-Set Recognition in the Age of Vision-Language Models

Mar 25, 2024

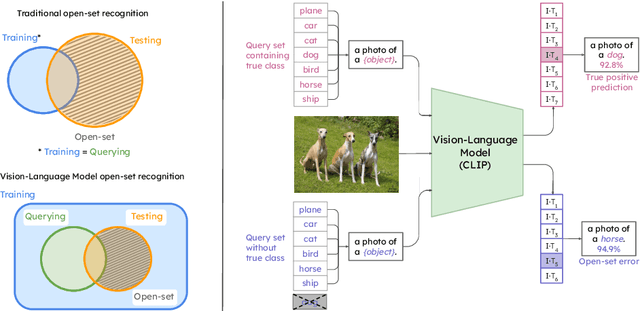

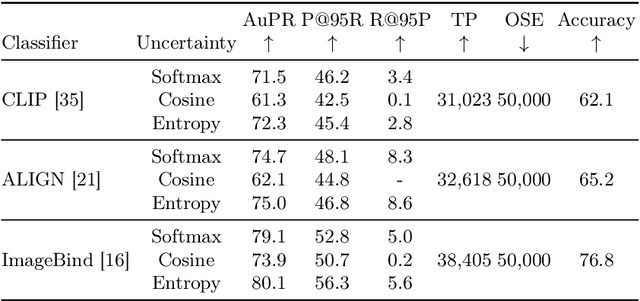

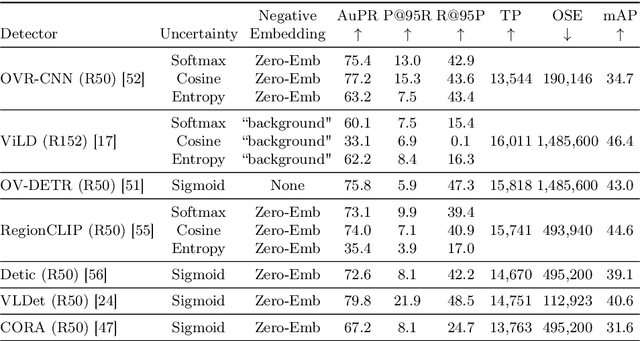

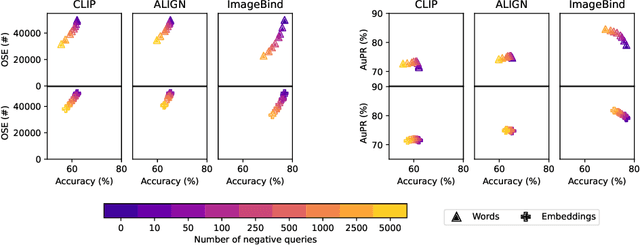

Abstract: Are vision-language models (VLMs) open-set models because they are trained on internet-scale datasets? We answer this question with a clear no: VLMs introduce closed-set assumptions via their finite query set, making them vulnerable to open-set conditions. We systematically evaluate VLMs for open-set recognition and find they frequently misclassify objects not contained in their query set, leading to alarmingly low precision when tuned for high recall and vice versa. We show that naively increasing the size of the query set to contain more and more classes does not mitigate this problem, but instead causes diminishing task performance and open-set performance. We establish a revised definition of the open-set problem for the age of VLMs, define a new benchmark and evaluation protocol to facilitate standardised evaluation and research in this important area, and evaluate promising baseline approaches based on predictive uncertainty and dedicated negative embeddings on a range of VLM classifiers and object detectors.
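To illustrate the closed-set assumption the abstract describes, the following is a minimal sketch of a predictive-uncertainty baseline: an image embedding is compared against a finite query set of text embeddings, and the prediction is rejected as "unknown" when the softmax confidence is low. The function name `classify_with_rejection`, the `temperature` and `threshold` values, and the toy embeddings are all illustrative assumptions, not the paper's implementation or any specific VLM's API.

```python
import math

def _normalize(v):
    # Scale a (non-zero) vector to unit length so dot products are cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def _softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_with_rejection(image_emb, query_embs, labels,
                            temperature=0.01, threshold=0.5):
    """Hypothetical helper: pick the best label from a finite query set,
    or return "unknown" when the softmax confidence is below `threshold`."""
    img = _normalize(image_emb)
    sims = [sum(a * b for a, b in zip(img, _normalize(q))) for q in query_embs]
    probs = _softmax([s / temperature for s in sims])
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "unknown", probs[best]
    return labels[best], probs[best]

# Toy query set: three classes with orthogonal stand-in "text embeddings".
labels = ["cat", "dog", "car"]
queries = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

print(classify_with_rejection([1.0, 0.0, 0.0], queries, labels))  # matches one query
print(classify_with_rejection([1.0, 1.0, 1.0], queries, labels))  # equally far from all
```

Without the threshold, the second input would still be forced onto one of the three labels, which is the closed-set failure mode the abstract points to. Choosing the threshold trades precision against recall.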

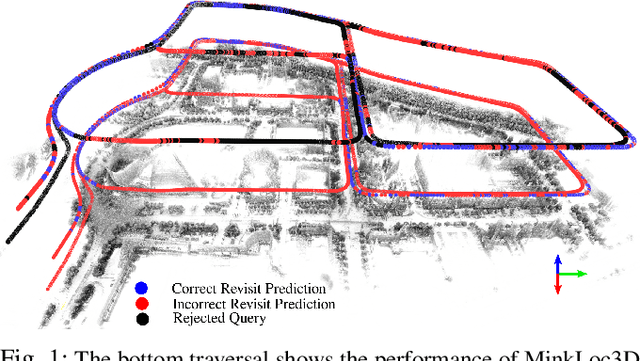

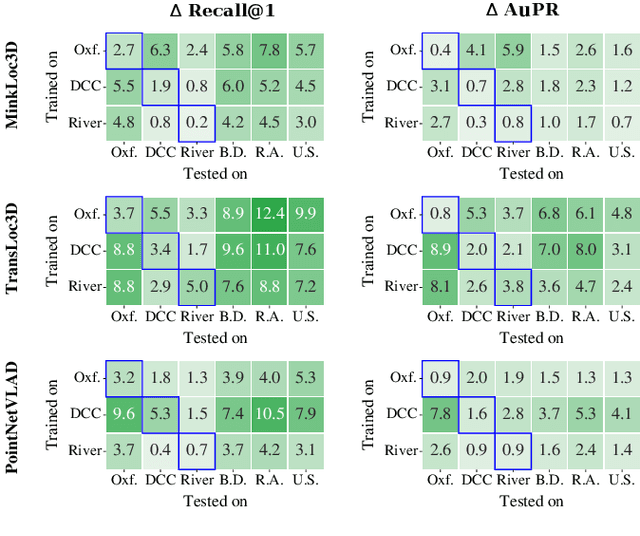

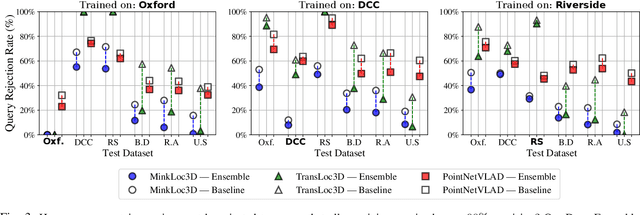

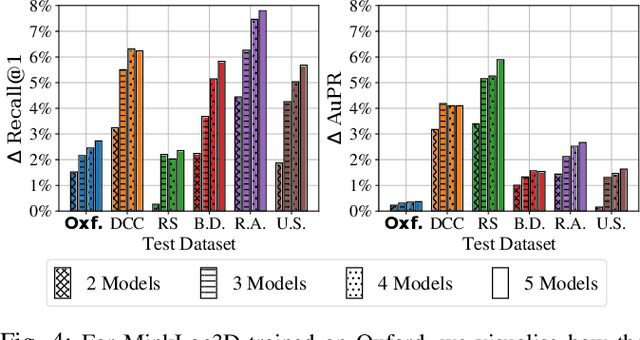

Uncertainty-Aware Lidar Place Recognition in Novel Environments

Oct 04, 2022

Abstract: State-of-the-art approaches to lidar place recognition degrade significantly when tested on novel environments that are not present in their training dataset. To improve their reliability, we propose uncertainty-aware lidar place recognition, where each predicted place match must have an associated uncertainty that can be used to identify and reject potentially incorrect matches. We introduce a novel evaluation protocol designed to benchmark uncertainty-aware lidar place recognition, and present Deep Ensembles as the first uncertainty-aware approach for this task. Testing across three large-scale datasets and three state-of-the-art architectures, we show that Deep Ensembles consistently improves the performance of lidar place recognition in novel environments. Compared to a standard network, our results show that Deep Ensembles improves the Recall@1 by more than 5% and AuPR by more than 3% on average when tested on previously unseen environments. Our code repository will be made publicly available upon paper acceptance at https://github.com/csiro-robotics/Uncertainty-LPR.
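The idea of attaching an uncertainty to each place match and rejecting unreliable ones can be sketched as follows. This is an assumption-laden illustration, not the paper's method: it assumes each ensemble member outputs a global descriptor for the query scan, uses the mean descriptor for retrieval, and uses the variance across members as the uncertainty. The names `match_place` and `max_uncertainty`, and the toy descriptors, are hypothetical.

```python
import math

def mean_descriptor(descs):
    # Average the descriptors produced by the ensemble members, per dimension.
    n = len(descs)
    return [sum(col) / n for col in zip(*descs)]

def descriptor_variance(descs):
    # Mean per-dimension variance across members: an assumed uncertainty measure.
    mean = mean_descriptor(descs)
    n = len(descs)
    per_dim = [sum((d[i] - mean[i]) ** 2 for d in descs) / n
               for i in range(len(mean))]
    return sum(per_dim) / len(per_dim)

def match_place(query_descs, database, max_uncertainty):
    """Hypothetical helper.
    query_descs: one descriptor per ensemble member for the query scan.
    database: dict mapping place id -> reference descriptor.
    Returns (best place id, uncertainty, accepted flag)."""
    query = mean_descriptor(query_descs)
    uncertainty = descriptor_variance(query_descs)
    best_id = min(database, key=lambda pid: math.dist(query, database[pid]))
    return best_id, uncertainty, uncertainty <= max_uncertainty

# Toy database with two places and two-dimensional descriptors.
database = {"A": [0.0, 0.0], "B": [1.0, 1.0]}
members_agree = [[0.9, 1.0], [1.0, 0.9], [1.1, 1.1]]
members_disagree = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]

print(match_place(members_agree, database, max_uncertainty=0.05))
print(match_place(members_disagree, database, max_uncertainty=0.05))
```

Both queries retrieve the same nearest place, but only the first is accepted; the second is flagged because the members disagree, which is the kind of match an uncertainty-aware system would reject.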
