Kazuto Ichimaru

Neural Active Structure-from-Motion in Dark and Textureless Environment

Oct 20, 2024

Abstract: Active 3D measurement, especially structured light (SL), has been widely used in various fields because of its robustness on textureless surfaces and on surfaces that become effectively textureless under low illumination. Reconstruction of large scenes by moving the SL system has also become popular; however, there have been few practical techniques for obtaining the system's precise pose only from images, since most conventional techniques rely on image features, which cannot be extracted in textureless environments. In this paper, we propose a technique, which we call Active SfM, that simultaneously reconstructs shape and estimates pose for SL systems from an image set in which only sparse patterns projected onto the scene are observed (i.e., no scene texture information). To achieve this, we propose a full optimization framework for the volumetric shape that employs neural signed distance fields (Neural-SDF) for SL, with the goal of not only reconstructing the scene shape but also estimating the pose for each motion of the system. Experimental results show that the proposed method achieves accurate shape reconstruction as well as pose estimation from images in which only projected patterns are observed.
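
A minimal sketch of the kind of forward model such a framework relies on, under stated assumptions: an analytic sphere SDF stands in for the learned Neural-SDF, a projector ray is sphere-traced to the surface, and the hit point is projected into a camera with pose (R, t). In the actual method, the SDF network and the per-motion poses are optimized jointly so that predicted pattern positions match the observed ones; that optimization is not shown here, and all function names and numbers are hypothetical.

```python
import numpy as np

def sphere_sdf(p, center=(0.0, 0.0, 2.0), radius=0.5):
    """Analytic SDF of a sphere (hypothetical stand-in for a learned Neural-SDF)."""
    return np.linalg.norm(p - np.asarray(center)) - radius

def sphere_trace(sdf, origin, direction, max_steps=128, eps=1e-6, t_max=10.0):
    """March along a ray by the SDF value until the surface is reached."""
    d = direction / np.linalg.norm(direction)
    t = 0.0
    for _ in range(max_steps):
        p = origin + t * d
        dist = sdf(p)
        if dist < eps:
            return p          # surface hit
        t += dist             # safe step: no surface is closer than |sdf|
        if t > t_max:
            break
    return None               # ray missed the surface

def project(K, R, t, X):
    """Pinhole projection of world point X into a camera with pose (R, t)."""
    x_cam = R @ X + t
    uv = K @ (x_cam / x_cam[2])
    return uv[:2]

# Projector at the origin casts one pattern ray along +z; a camera sits 0.2 to the side.
X = sphere_trace(sphere_sdf, np.zeros(3), np.array([0.0, 0.0, 1.0]))
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
uv = project(K, np.eye(3), np.array([-0.2, 0.0, 0.0]), X)
```

Because every pattern observation depends on both the surface and the camera pose, a residual between predicted and observed pattern positions constrains both, which is why no scene texture is needed.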

ActiveNeuS: Neural Signed Distance Fields for Active Stereo

Oct 20, 2024

Abstract: 3D shape reconstruction in extreme environments, such as low illumination or scattering conditions, has been an open problem and has been intensively researched. Active stereo is one potential solution for such environments because of its robustness and high accuracy. However, active stereo systems usually require specialized system configurations with complicated algorithms, which narrows their application. In this paper, we propose a neural signed distance field for active stereo systems that enables implicit correspondence search and triangulation in generalized structured light. With our technique, textureless surfaces, and surfaces that become effectively textureless under low light, are successfully reconstructed even from a small number of captured images. Experiments confirm that the proposed method achieves state-of-the-art reconstruction quality under such severe conditions. We also demonstrate that the proposed method works in an underwater scenario.
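
The abstract does not spell out the rendering model; the name ActiveNeuS suggests it builds on NeuS, which converts SDF values sampled along a ray into rendering weights. Below is a minimal sketch of the NeuS discrete opacity, assuming that formulation applies: with the logistic CDF Φ_s, α_i = max((Φ_s(f_i) − Φ_s(f_{i+1})) / Φ_s(f_i), 0), and each weight is α_i times the accumulated transmittance. The function name and the sharpness s = 64 are illustrative, not values from the paper.

```python
import numpy as np

def neus_weights(sdf_vals, s=64.0):
    """NeuS-style rendering weights from SDF values sampled along one ray."""
    cdf = 1.0 / (1.0 + np.exp(-s * np.asarray(sdf_vals, dtype=float)))  # Phi_s(f)
    # Discrete opacity per interval [p_i, p_{i+1}], clipped to be non-negative.
    alpha = np.clip((cdf[:-1] - cdf[1:]) / np.maximum(cdf[:-1], 1e-8), 0.0, 1.0)
    # Transmittance T_i = prod_{j < i} (1 - alpha_j).
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alpha)[:-1]])
    return trans * alpha

# A ray hitting a plane at depth 1.0: the signed distance along the ray is 1 - z.
z = np.linspace(0.0, 2.0, 201)
w = neus_weights(1.0 - z)
z_mid = 0.5 * (z[:-1] + z[1:])
```

The weights concentrate at the zero crossing of the SDF, so a depth (and hence the triangulated point for a projected pattern) can be read out as the weighted average of the sample depths, which keeps the correspondence search implicit and differentiable.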

Unified Underwater Structure-from-Motion

Sep 09, 2019

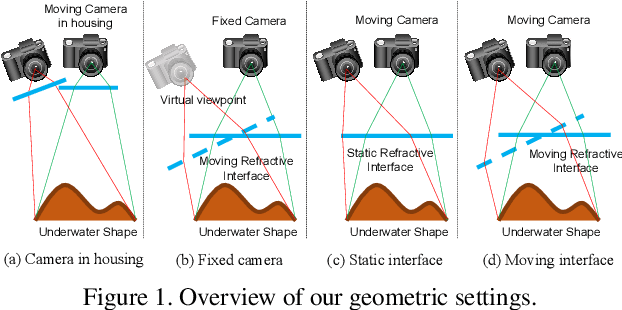

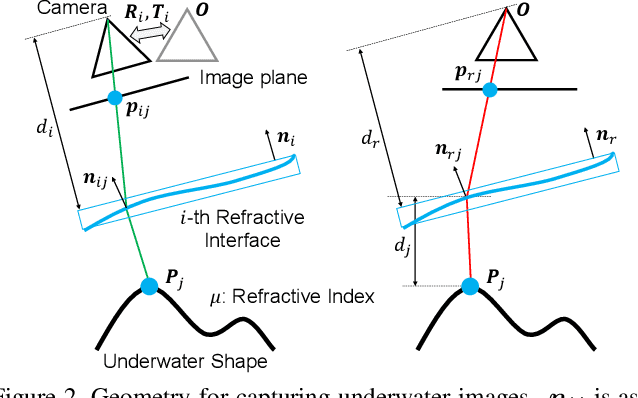

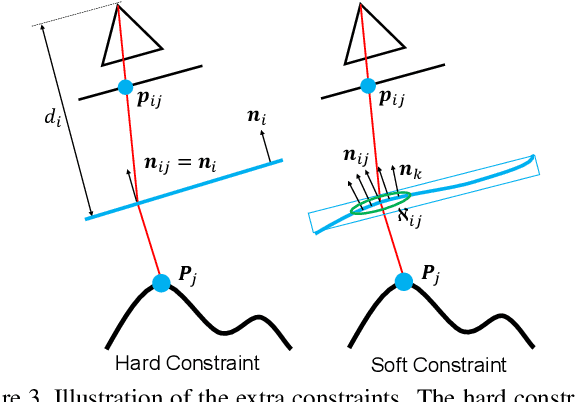

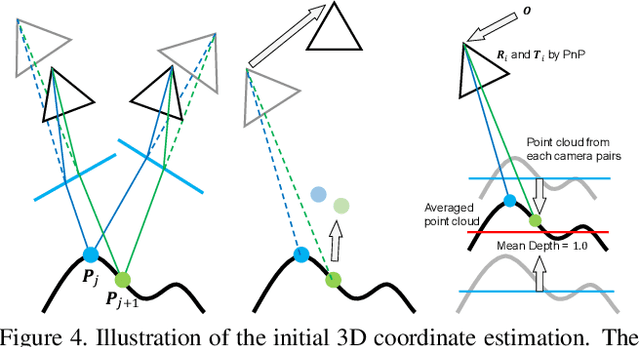

Abstract: This paper shows that accurate underwater 3D shape reconstruction is possible using a single camera observing a target through a refractive interface. We provide unified reconstruction techniques for a variety of scenarios: a single static camera with a moving refractive interface, a single moving camera with a static refractive interface, and a single moving camera with a moving refractive interface. In our basic setup, we assume that the refractive interface is planar and use bundle adjustment to simultaneously estimate the unknown transformations of the planar interface and the camera, together with the unknown target shape. We also extend the method to relax the planarity assumption, which enables us to use waves on the refractive interface for the reconstruction task. Experiments with real data show the superiority of our method over existing methods.
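
Any bundle adjustment through a refractive interface has to bend each camera ray at the interface. A minimal sketch of that step, using the standard vector form of Snell's law, is shown below; the function name, the air-to-water ratio 1.0/1.33, and the convention that the normal faces the incident side are assumptions for illustration, not the paper's parameterization.

```python
import numpy as np

def refract(d, n, eta):
    """Refract direction d at a surface with normal n (vector form of Snell's law).

    n must point toward the incident side (against d); eta = n1 / n2.
    Returns the unit refracted direction, or None on total internal reflection.
    """
    d = d / np.linalg.norm(d)
    n = n / np.linalg.norm(n)
    cos_i = -np.dot(n, d)
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        return None
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n

# Camera ray in air hitting a flat water surface (normal facing the camera) at 30 degrees.
d_air = np.array([np.sin(np.pi / 6), 0.0, np.cos(np.pi / 6)])
d_water = refract(d_air, np.array([0.0, 0.0, -1.0]), 1.0 / 1.33)
```

For a wavy interface, the same function applies per ray with a locally varying normal n, which is one way the planarity assumption can be relaxed.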

Underwater Stereo using Refraction-free Image Synthesized from Light Field Camera

May 23, 2019

Abstract: There is a strong demand for capturing underwater scenes without the distortions caused by refraction. Since a light field camera captures several light rays from various directions at each point of the image plane, a refraction-free image can be synthesized if geometrically correct rays are chosen. In this paper, we propose a novel technique to efficiently select such rays and synthesize a refraction-free image from an underwater image captured by a light field camera. In addition, we propose a stereo technique to reconstruct 3D shapes from a pair of our refraction-free images, which follow central projection. In the experiments, we captured several underwater scenes with two light field cameras, synthesized refraction-free images, and applied the stereo technique to reconstruct 3D shapes. The results are compared with previous approximation-based techniques, showing the strength of our method.

CNN based dense underwater 3D scene reconstruction by transfer learning using bubble database

Nov 21, 2018

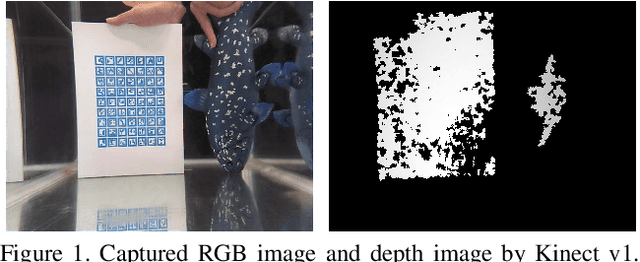

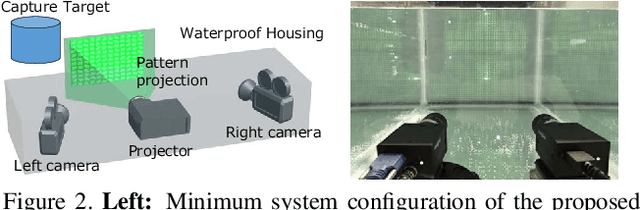

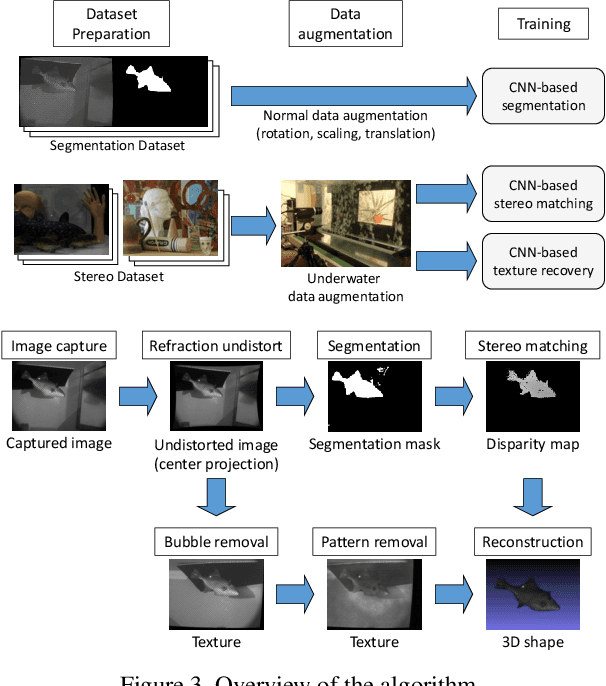

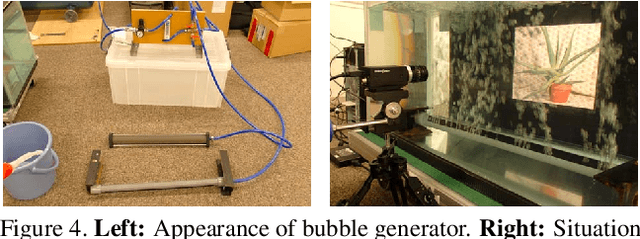

Abstract: Dense 3D shape acquisition of swimming humans or live fish is an important research topic for sports, biological science, and other fields. For this purpose, active stereo sensors are commonly used in air; however, they cannot be applied underwater because of refraction, strong light attenuation, and severe interference from bubbles. Passive stereo is a simple solution for capturing dynamic scenes underwater; however, shapes with textureless surfaces or irregular reflections cannot be recovered. Recently, stereo camera pairs combined with a pattern projector that adds artificial texture to the objects have been proposed. However, to use such a system underwater, several problems must be compensated for, i.e., disturbances caused by water fluctuation and bubbles. A simple solution is to use a convolutional neural network (CNN) for stereo to cancel the effects of bubbles and/or water fluctuation. Since it is not easy to train a CNN on a small database that must cover large variation, we develop a special bubble generation device to efficiently create a real bubble database with multiple sizes and densities. In addition, we propose a transfer learning technique for a multi-scale CNN to effectively remove bubbles and projected patterns from the object. Further, we develop a real system and use it to capture a live swimming human, which had not been done before. Experiments show the effectiveness of our method compared with state-of-the-art techniques.

Multi-scale CNN stereo and pattern removal technique for underwater active stereo system

Aug 25, 2018

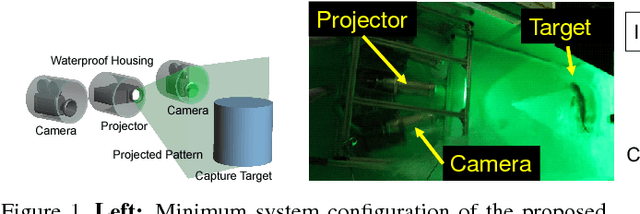

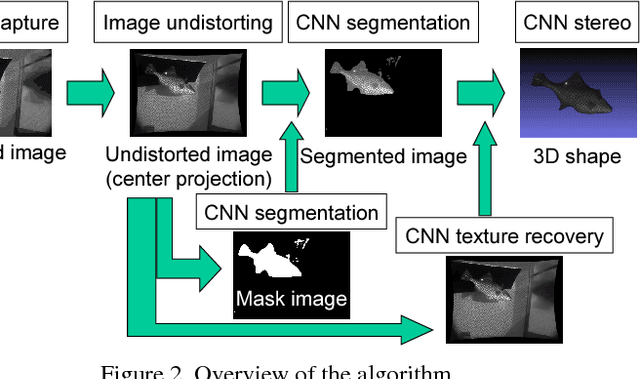

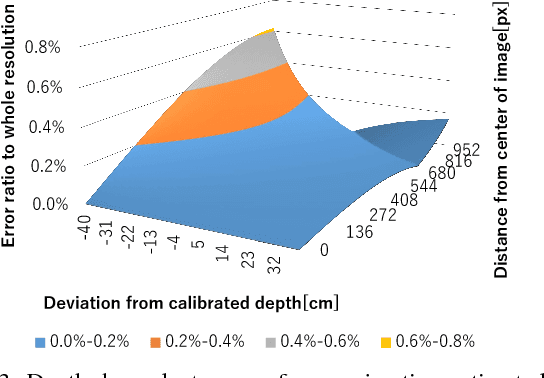

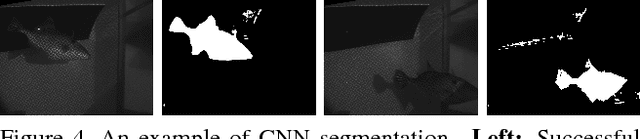

Abstract: Demand for capturing dynamic scenes in underwater environments is growing rapidly. Passive stereo can capture dynamic scenes; however, shapes with textureless surfaces or irregular reflections cannot be recovered by this technique. In our system, we add a pattern projector to the stereo camera pair so that artificial textures are projected onto the objects. To use the system in underwater environments, several problems must be compensated for, i.e., refraction and disturbances caused by water fluctuation and bubbles. Further, since the object surfaces are obscured by bubbles, projected patterns, etc., this noise and these patterns must be removed from the captured images to recover the original texture. To solve these problems, we propose three approaches: a depth-dependent calibration, a convolutional neural network (CNN) stereo method, and a CNN-based texture recovery method. The depth-dependent calibration is based on our analysis of the depth range over which approximation by central projection is acceptable, which determines the target depth to use for calibration. For CNN stereo, unlike common CNN-based stereo methods, which do not consider strong disturbances such as refraction or bubbles, we designed a novel CNN architecture for stereo matching that uses multi-scale information and is intended to be robust against such disturbances. Finally, we propose a multi-scale bubble removal method and a projected-pattern removal method using CNNs to recover the original textures. Experimental results show the effectiveness of our method compared with state-of-the-art techniques. Furthermore, reconstruction of a live swimming fish is demonstrated to confirm the feasibility of our techniques.
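
Why a central-projection (pinhole) approximation of an underwater camera is only valid over a limited depth range can be seen in a simplified model. The sketch below assumes a 2D setup with a pinhole camera in air at the origin, a thin flat interface at distance d, water with index 1.33 beyond it, and a focal length f in pixels; it solves Snell's law for the interface crossing by bisection and reports the focal length a pinhole model would need to explain the observed image coordinate. All names and numbers are hypothetical, and the paper's actual calibration procedure is not given in the abstract.

```python
import math

def interface_crossing(x, Z, d, n_w=1.33, iters=100):
    """Lateral position where the refracted ray to the underwater point (x, Z)
    crosses a flat interface at distance d (2D, thin interface, x > 0, Z > d)."""
    lo, hi = 0.0, x
    for _ in range(iters):
        xi = 0.5 * (lo + hi)
        sin_a = xi / math.hypot(xi, d)                 # angle in air
        sin_w = (x - xi) / math.hypot(x - xi, Z - d)   # angle in water
        # Snell residual sin_a - n_w * sin_w increases monotonically with xi.
        if sin_a - n_w * sin_w > 0:
            hi = xi
        else:
            lo = xi
    return 0.5 * (lo + hi)

def effective_focal(x, Z, d, f=1000.0, n_w=1.33):
    """Focal length a pinhole model would need to explain the true image point."""
    xi = interface_crossing(x, Z, d, n_w)
    u = f * xi / d        # true image coordinate (pixels)
    return u * Z / x      # pinhole model: u = f_eff * x / Z

f_near = effective_focal(0.05, 1.0, 0.1)
f_far = effective_focal(0.05, 3.0, 0.1)
```

The effective focal length grows with depth toward n_w * f, so a pinhole calibration made at one target depth is accurate only near that depth; choosing the calibration depth from the working range is the kind of trade-off a depth-dependent calibration addresses.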
