Multi-scale CNN stereo and pattern removal technique for underwater active stereo system

Paper and Code

Aug 25, 2018

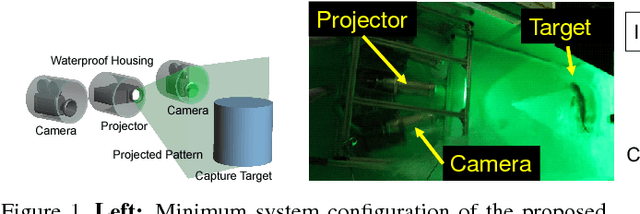

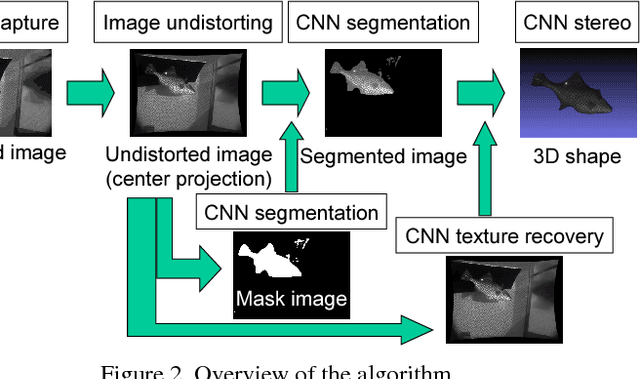

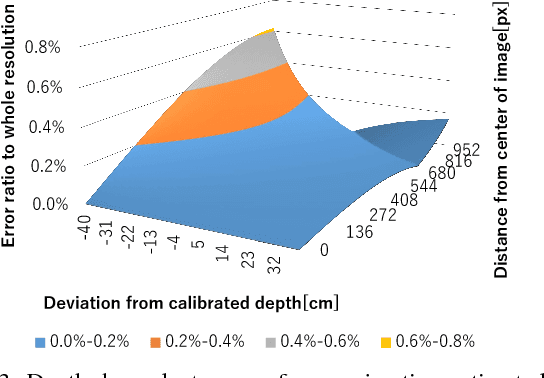

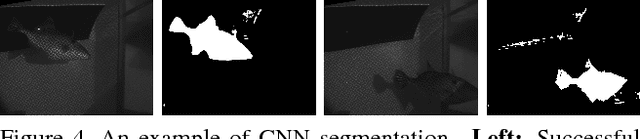

Demands on capturing dynamic scenes of underwater environments are rapidly growing. Passive stereo is applicable to capture dynamic scenes, however the shape with textureless surfaces or irregular reflections cannot be recovered by the technique. In our system, we add a pattern projector to the stereo camera pair so that artificial textures are augmented on the objects. To use the system at underwater environments, several problems should be compensated, i.e., refraction, disturbance by fluctuation and bubbles. Further, since surface of the objects are interfered by the bubbles, projected patterns, etc., those noises and patterns should be removed from captured images to recover original texture. To solve these problems, we propose three approaches; a depth-dependent calibration, Convolutional Neural Network(CNN)-stereo method and CNN-based texture recovery method. A depth-dependent calibration is our analysis to find the acceptable depth range for approximation by center projection to find the certain target depth for calibration. In terms of CNN stereo, unlike common CNNbased stereo methods which do not consider strong disturbances like refraction or bubbles, we designed a novel CNN architecture for stereo matching using multi-scale information, which is intended to be robust against such disturbances. Finally, we propose a multi-scale method for bubble and a projected-pattern removal method using CNNs to recover original textures. Experimental results are shown to prove the effectiveness of our method compared with the state of the art techniques. Furthermore, reconstruction of a live swimming fish is demonstrated to confirm the feasibility of our techniques.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge