Kazufumi Ito

A neural network based policy iteration algorithm with global $H^2$-superlinear convergence for stochastic games on domains

Jul 23, 2019

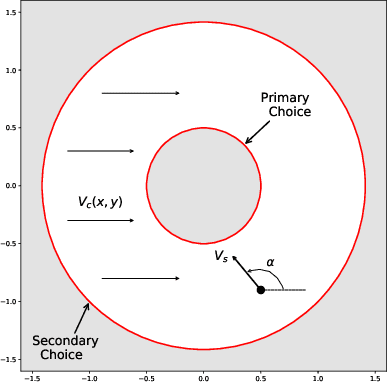

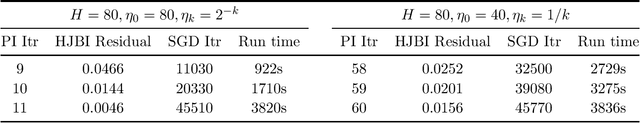

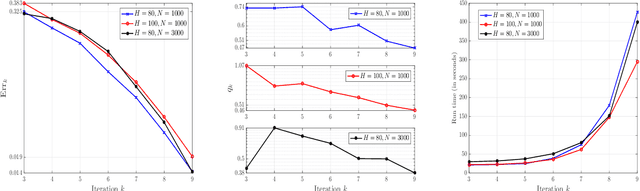

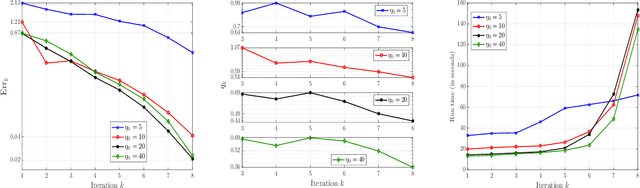

Abstract: In this work, we propose a class of numerical schemes for solving semilinear Hamilton-Jacobi-Bellman-Isaacs (HJBI) boundary value problems which arise naturally from exit time problems of diffusion processes with controlled drift. We exploit policy iteration to reduce the semilinear problem to a sequence of linear Dirichlet problems, which are subsequently approximated by a multilayer feedforward neural network ansatz. We establish that the numerical solutions converge globally in the $H^2$-norm, and further demonstrate that this convergence is superlinear, by interpreting the algorithm as an inexact Newton iteration for the HJBI equation. Moreover, we construct the optimal feedback controls from the numerical value functions and deduce convergence. The numerical schemes and convergence results are then extended to HJBI boundary value problems corresponding to controlled diffusion processes with oblique boundary reflection. Numerical experiments on the stochastic Zermelo navigation problem are presented to illustrate the theoretical results and to demonstrate the effectiveness of the method.

Variational Gaussian Approximation for Poisson Data

Sep 18, 2017

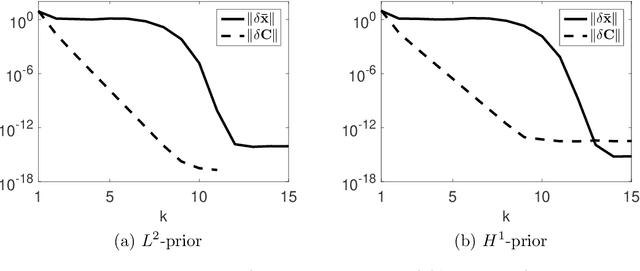

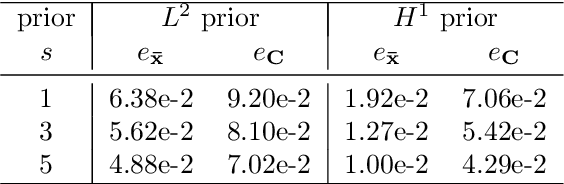

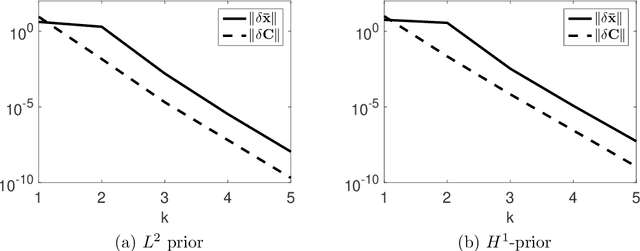

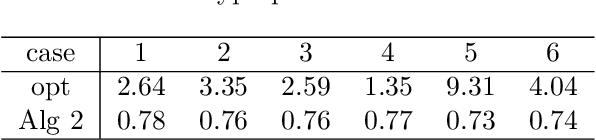

Abstract: The Poisson model is frequently employed to describe count data, but in a Bayesian context it leads to an analytically intractable posterior probability distribution. In this work, we analyze a variational Gaussian approximation to the posterior distribution arising from the Poisson model with a Gaussian prior. This is achieved by seeking an optimal Gaussian distribution minimizing the Kullback-Leibler divergence from the posterior distribution to the approximation, or equivalently maximizing the lower bound for the model evidence. We derive an explicit expression for the lower bound, and show the existence and uniqueness of the optimal Gaussian approximation. The lower bound functional can be viewed as a variant of classical Tikhonov regularization that also penalizes the covariance. Then we develop an efficient alternating direction maximization algorithm for solving the optimization problem, and analyze its convergence. We discuss strategies for reducing the computational complexity via low rank structure of the forward operator and the sparsity of the covariance. Further, as an application of the lower bound, we discuss hierarchical Bayesian modeling for selecting the hyperparameter in the prior distribution, and propose a monotonically convergent algorithm for determining the hyperparameter. We present extensive numerical experiments to illustrate the Gaussian approximation and the algorithms.
