Kaustubh Chaudhari

BlackJAX: Composable Bayesian inference in JAX

Feb 22, 2024Abstract:BlackJAX is a library implementing sampling and variational inference algorithms commonly used in Bayesian computation. It is designed for ease of use, speed, and modularity by taking a functional approach to the algorithms' implementation. BlackJAX is written in Python, using JAX to compile and run NumpPy-like samplers and variational methods on CPUs, GPUs, and TPUs. The library integrates well with probabilistic programming languages by working directly with the (un-normalized) target log density function. BlackJAX is intended as a collection of low-level, composable implementations of basic statistical 'atoms' that can be combined to perform well-defined Bayesian inference, but also provides high-level routines for ease of use. It is designed for users who need cutting-edge methods, researchers who want to create complex sampling methods, and people who want to learn how these work.

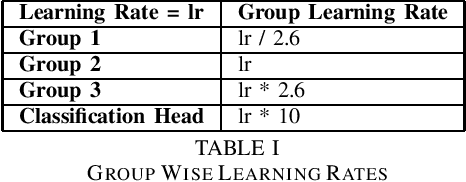

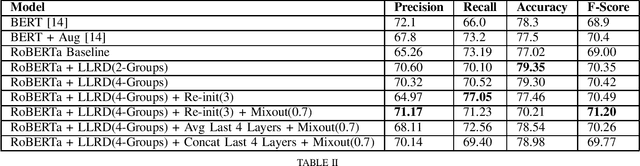

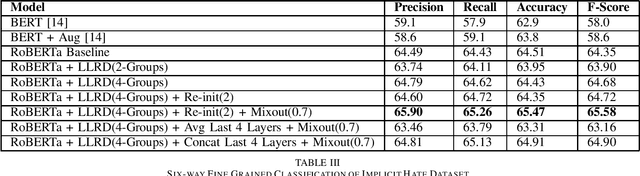

Combating high variance in Data-Scarce Implicit Hate Speech Classification

Aug 29, 2022

Abstract:Hate speech classification has been a long-standing problem in natural language processing. However, even though there are numerous hate speech detection methods, they usually overlook a lot of hateful statements due to them being implicit in nature. Developing datasets to aid in the task of implicit hate speech classification comes with its own challenges; difficulties are nuances in language, varying definitions of what constitutes hate speech, and the labor-intensive process of annotating such data. This had led to a scarcity of data available to train and test such systems, which gives rise to high variance problems when parameter-heavy transformer-based models are used to address the problem. In this paper, we explore various optimization and regularization techniques and develop a novel RoBERTa-based model that achieves state-of-the-art performance.

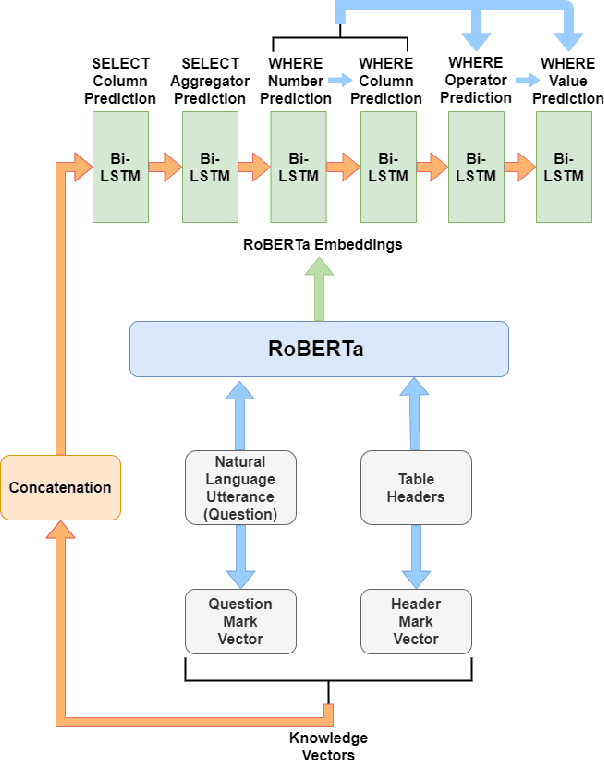

Data Agnostic RoBERTa-based Natural Language to SQL Query Generation

Oct 11, 2020

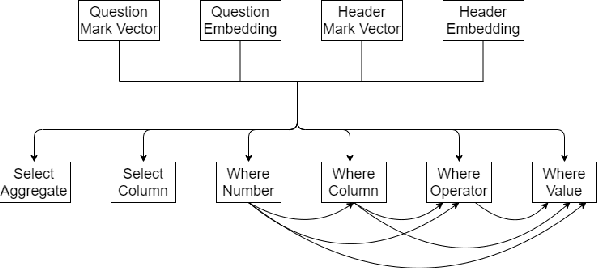

Abstract:Relational databases are among the most widely used architectures to store massive amounts of data in the modern world. However, there is a barrier between these databases and the average user. The user often lacks the knowledge of a query language such as SQL required to interact with the database. The NL2SQL task aims at finding deep learning approaches to solve this problem by converting natural language questions into valid SQL queries. Given the sensitive nature of some databases and the growing need for data privacy, we have presented an approach with data privacy at its core. We have passed RoBERTa embeddings and data-agnostic knowledge vectors into LSTM based submodels to predict the final query. Although we have not achieved state of the art results, we have eliminated the need for the table data, right from the training of the model, and have achieved a test set execution accuracy of 76.7%. By eliminating the table data dependency while training we have created a model capable of zero shot learning based on the natural language question and table schema alone.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge