Kate Lytvynets

Deep Learning with Label Noise: A Hierarchical Approach

May 28, 2022

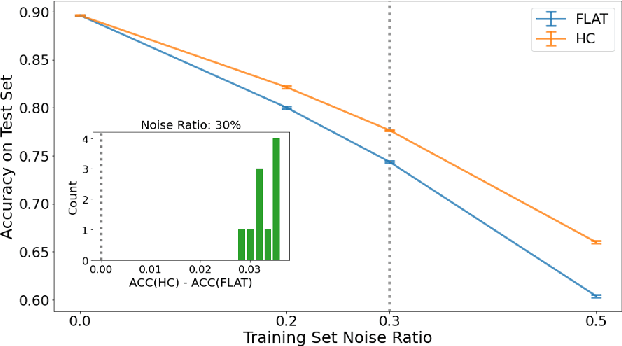

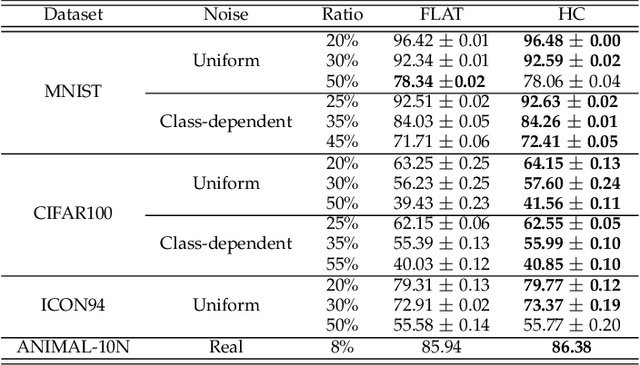

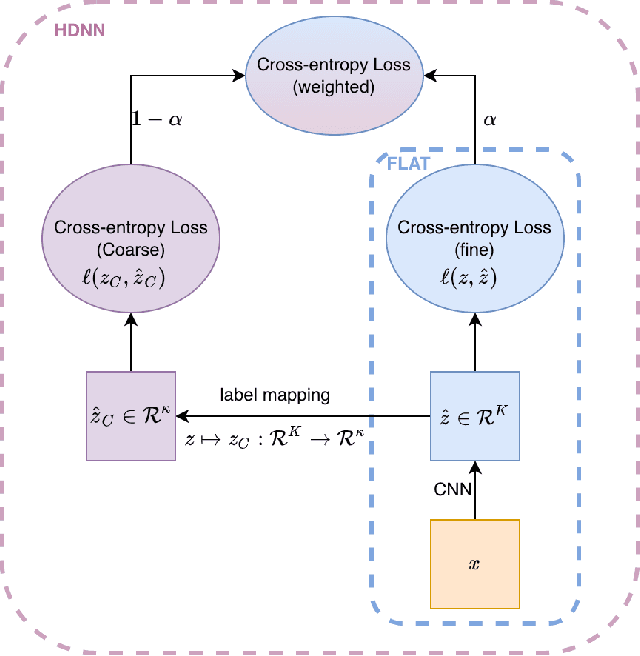

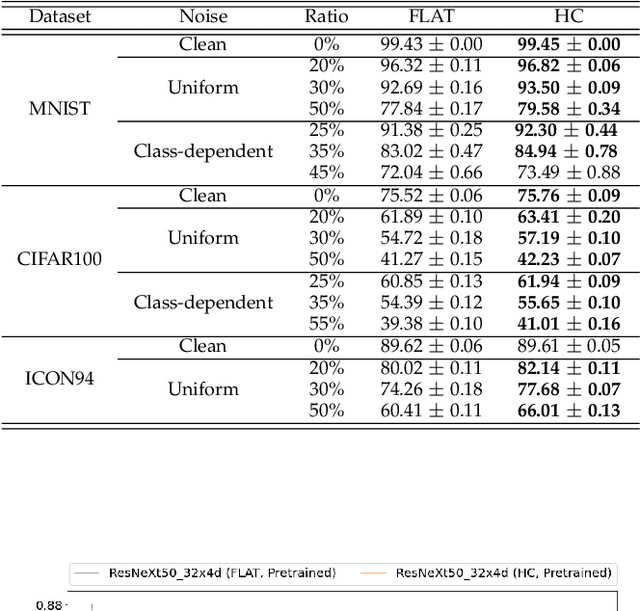

Abstract:Deep neural networks are susceptible to label noise. Existing methods to improve robustness, such as meta-learning and regularization, usually require significant change to the network architecture or careful tuning of the optimization procedure. In this work, we propose a simple hierarchical approach that incorporates a label hierarchy when training the deep learning models. Our approach requires no change of the network architecture or the optimization procedure. We investigate our hierarchical network through a wide range of simulated and real datasets and various label noise types. Our hierarchical approach improves upon regular deep neural networks in learning with label noise. Combining our hierarchical approach with pre-trained models achieves state-of-the-art performance in real-world noisy datasets.

Mental State Classification Using Multi-graph Features

Feb 25, 2022

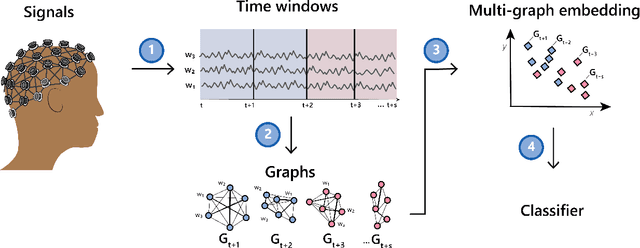

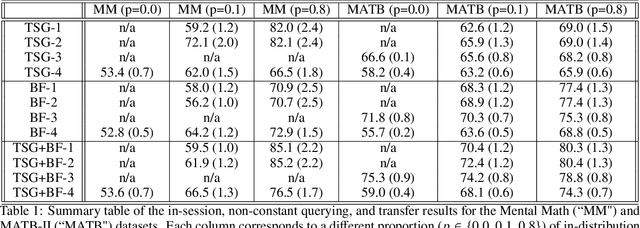

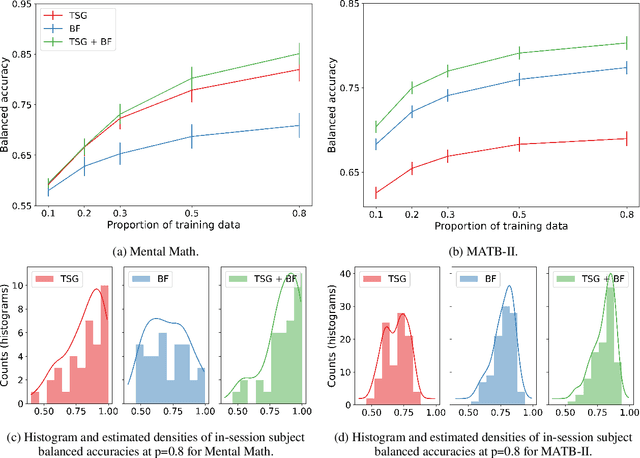

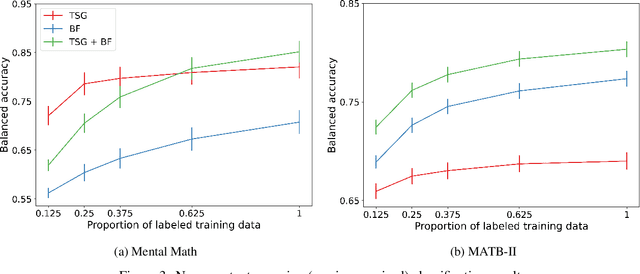

Abstract:We consider the problem of extracting features from passive, multi-channel electroencephalogram (EEG) devices for downstream inference tasks related to high-level mental states such as stress and cognitive load. Our proposed method leverages recently developed multi-graph tools and applies them to the time series of graphs implied by the statistical dependence structure (e.g., correlation) amongst the multiple sensors. We compare the effectiveness of the proposed features to traditional band power-based features in the context of three classification experiments and find that the two feature sets offer complementary predictive information. We conclude by showing that the importance of particular channels and pairs of channels for classification when using the proposed features is neuroscientifically valid.

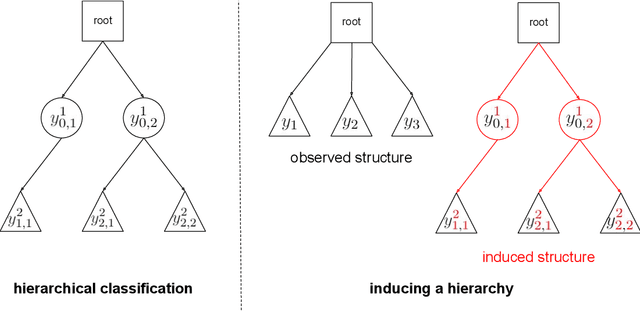

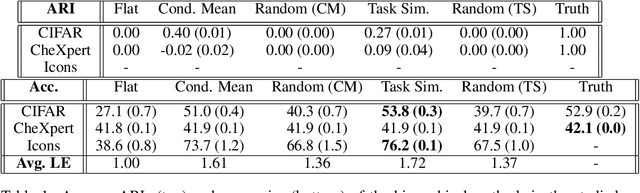

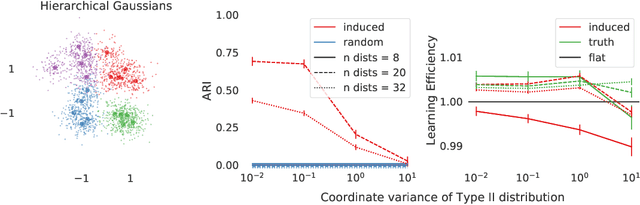

Inducing a hierarchy for multi-class classification problems

Feb 20, 2021

Abstract:In applications where categorical labels follow a natural hierarchy, classification methods that exploit the label structure often outperform those that do not. Un-fortunately, the majority of classification datasets do not come pre-equipped with a hierarchical structure and classical flat classifiers must be employed. In this paper, we investigate a class of methods that induce a hierarchy that can similarly improve classification performance over flat classifiers. The class of methods follows the structure of first clustering the conditional distributions and subsequently using a hierarchical classifier with the induced hierarchy. We demonstrate the effectiveness of the class of methods both for discovering a latent hierarchy and for improving accuracy in principled simulation settings and three real data applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge