Karen Feigh

Model Cards for AI Teammates: Comparing Human-AI Team Familiarization Methods for High-Stakes Environments

May 19, 2025

Abstract: We compare three methods of familiarizing a human with an artificial intelligence (AI) teammate ("agent") prior to operation in a collaborative, fast-paced intelligence, surveillance, and reconnaissance (ISR) environment. In a between-subjects user study (n=60), participants either read documentation about the agent, trained alongside the agent prior to the mission, or were given no familiarization. Results showed that the most valuable information about the agent included details of its decision-making algorithms and its relative strengths and weaknesses compared to the human. This information allowed the familiarization groups to form sophisticated team strategies more quickly than the control group. Documentation-based familiarization led to the fastest adoption of these strategies, but also biased participants towards risk-averse behavior that prevented high scores. Participants familiarized through direct interaction were able to infer much of the same information through observation, and were more willing to take risks and experiment with different control modes, but reported weaker understanding of the agent's internal processes. Significant differences were seen between individual participants' risk tolerance and methods of AI interaction, which should be considered when designing human-AI control interfaces. Based on our findings, we recommend a human-AI team familiarization method that combines AI documentation, structured in-situ training, and exploratory interaction.

Mixed-Initiative Human-Robot Teaming under Suboptimality with Online Bayesian Adaptation

Mar 24, 2024

Abstract: For effective human-agent teaming, robots and other artificial intelligence (AI) agents must infer their human partner's abilities and behavioral response patterns and adapt accordingly. Most prior works make the unrealistic assumption that one or more teammates can act near-optimally. In real-world collaboration, humans and autonomous agents can be suboptimal, especially when each only has partial domain knowledge. In this work, we develop computational modeling and optimization techniques for enhancing the performance of suboptimal human-agent teams, where the human and the agent have asymmetric capabilities and act suboptimally due to incomplete environmental knowledge. We adopt an online Bayesian approach that enables a robot to infer people's willingness to comply with its assistance in a sequential decision-making game. Our user studies show that user preferences and team performance indeed vary with robot intervention styles, and our approach for mixed-initiative collaborations enhances objective team performance ($p<.001$) and subjective measures, such as user's trust ($p<.001$) and perceived likeability of the robot ($p<.001$).

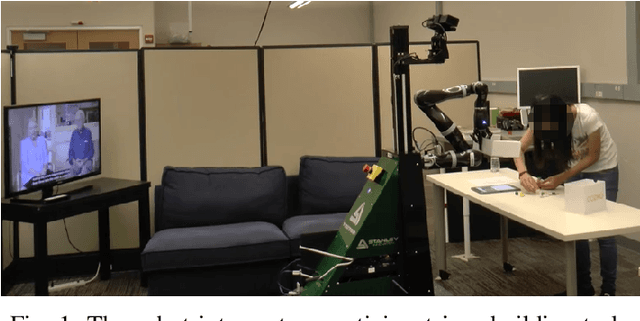

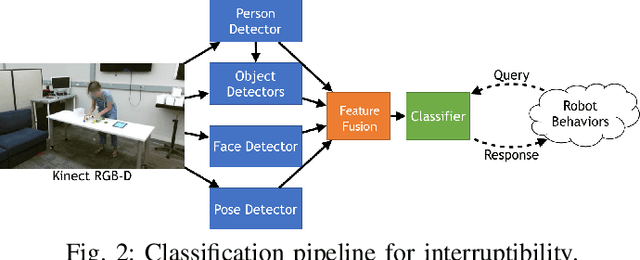

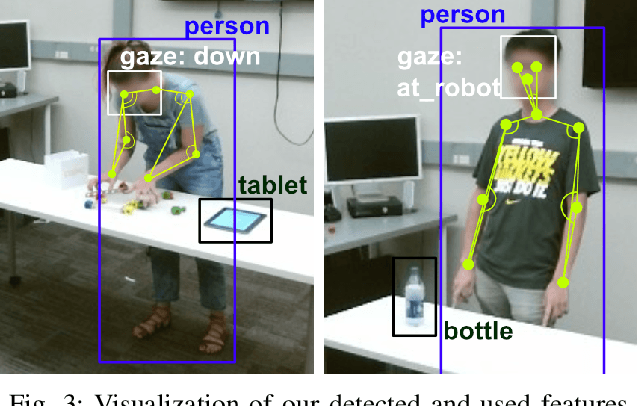

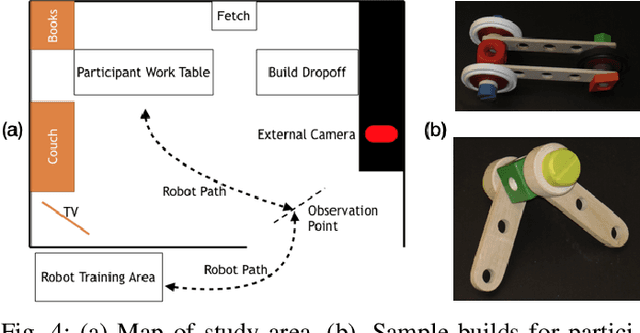

Effects of Interruptibility-Aware Robot Behavior

Apr 17, 2018

Abstract: As robots become increasingly prevalent in human environments, there will inevitably be times when a robot needs to interrupt a human to initiate an interaction. Our work introduces the first interruptibility-aware mobile robot system, and evaluates the effects of interruptibility-awareness on human task performance, robot task performance, and on human interpretation of the robot's social aptitude. Our results show that our robot is effective at predicting interruptibility at high accuracy, allowing it to interrupt at more appropriate times. Results of a large-scale user study show that while participants are able to maintain task performance even in the presence of interruptions, interruptibility-awareness improves the robot's task performance and improves participant social perception of the robot.
