Karen Elliott

Risk-aware black-box portfolio construction using Bayesian optimization with adaptive weighted Lagrangian estimator

Apr 18, 2025

Abstract: Existing portfolio management approaches are often black-box models due to safety and commercial issues in the industry. However, their performance can vary considerably whenever market conditions or internal trading strategies change. Furthermore, evaluating these non-transparent systems is expensive, and limited budgets restrict the number of observations of the systems. Therefore, optimizing performance while controlling the potential risk of these financial systems has become a critical challenge. This work presents a novel Bayesian optimization framework to optimize black-box portfolio management models under limited observations. In conventional Bayesian optimization settings, the objective is to maximize the expectation of performance metrics. However, simply maximizing performance expectations leads to erratic optimization trajectories, which exacerbate risk accumulation in portfolio management. It can also lead to misalignment between the target distribution and the actual distribution of the black-box model. To mitigate this problem, we propose an adaptive weighted Lagrangian estimator that considers a dual objective: maximizing model performance and minimizing the variance of model observations. Extensive experiments demonstrate the superiority of our approach across five backtest settings with three black-box stock portfolio management models. Ablation studies further verify the effectiveness of the proposed estimator.

Technologies for Trustworthy Machine Learning: A Survey in a Socio-Technical Context

Jul 17, 2020

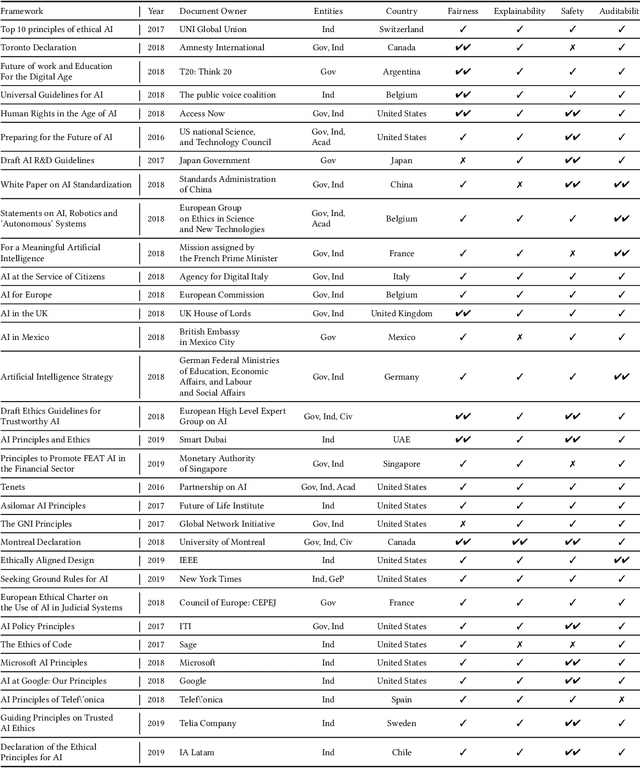

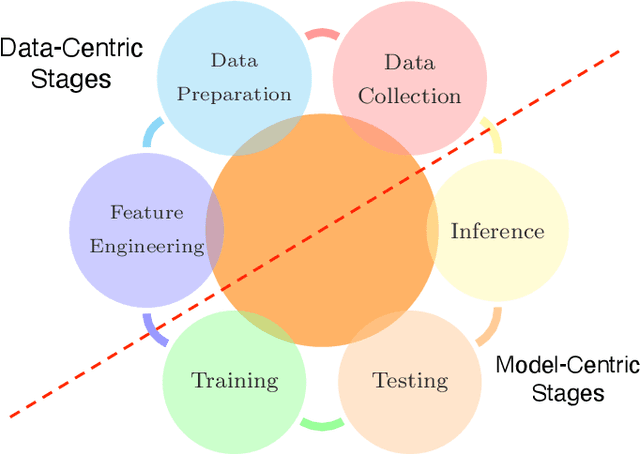

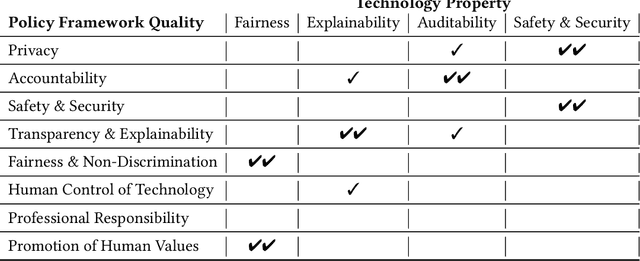

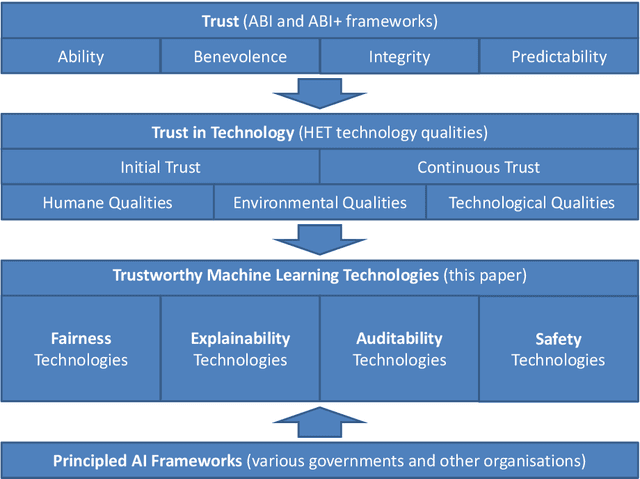

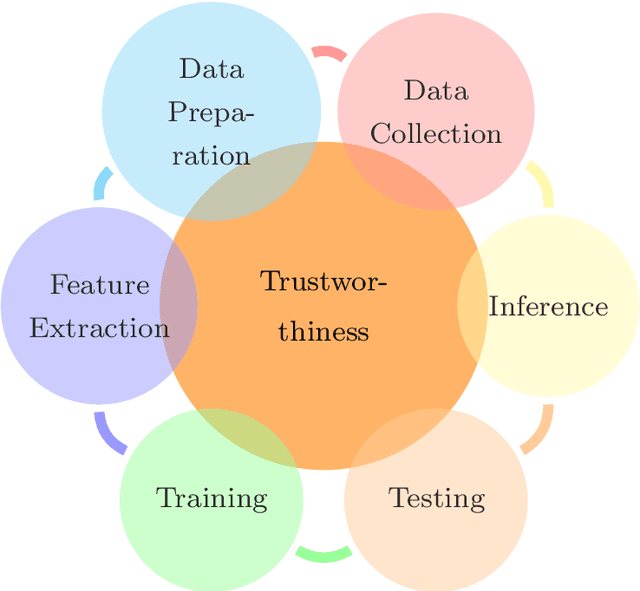

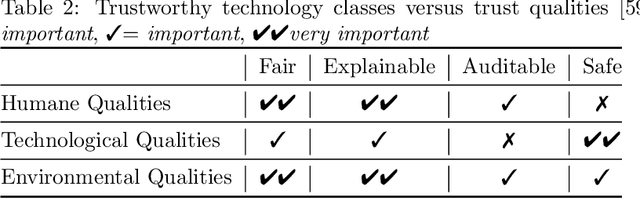

Abstract: Concerns about the societal impact of AI-based services and systems have encouraged governments and other organisations around the world to propose AI policy frameworks to address fairness, accountability, transparency and related topics. To achieve the objectives of these frameworks, the data and software engineers who build machine-learning systems require knowledge about a variety of relevant supporting tools and techniques. In this paper we provide an overview of technologies that support building trustworthy machine learning systems, i.e., systems whose properties justify that people place trust in them. We argue that four categories of system properties are instrumental in achieving the policy objectives, namely fairness, explainability, auditability and safety & security (FEAS). We discuss how these properties need to be considered across all stages of the machine learning life cycle, from data collection through run-time model inference. As a consequence, we survey in this paper the main technologies with respect to all four of the FEAS properties, for data-centric as well as model-centric stages of the machine learning system life cycle. We conclude with an identification of open research problems, with a particular focus on the connection between trustworthy machine learning technologies and their implications for individuals and society.

The relationship between trust in AI and trustworthy machine learning technologies

Dec 03, 2019

Abstract: To build AI-based systems that users and the public can justifiably trust, one needs to understand how machine learning technologies impact the trust placed in these services. To guide technology developments, this paper provides a systematic approach to relate social science concepts of trust with the technologies used in AI-based services and products. We conceive trust as discussed in the ABI (Ability, Benevolence, Integrity) framework and use a recently proposed mapping of ABI on qualities of technologies. We consider four categories of machine learning technologies, namely those for Fairness, Explainability, Auditability and Safety (FEAS), and discuss if and how these possess the required qualities. Trust can be impacted throughout the life cycle of AI-based systems, and we introduce the concept of Chain of Trust to discuss technological needs for trust in different stages of the life cycle. FEAS has obvious relations with known frameworks, and therefore we relate FEAS to a variety of international Principled AI policy and technology frameworks that have emerged in recent years.
