Ehsan Toreini

Measuring Online Hate on 4chan using Pre-trained Deep Learning Models

Mar 30, 2025

Abstract: Online hate speech can harm individuals and groups, especially on non-moderated platforms such as 4chan, where users can post anonymous content. This work focuses on analysing and measuring the prevalence of online hate on 4chan's politically incorrect board (/pol/) using state-of-the-art Natural Language Processing (NLP) models, specifically transformer-based models such as RoBERTa and Detoxify. By leveraging these advanced models, we provide an in-depth analysis of hate speech dynamics and quantify the extent of online hate on non-moderated platforms. The study advances understanding through multi-class classification of hate speech (racism, sexism, religion, etc.), while also incorporating the classification of toxic content (e.g., identity attacks and threats) and a further topic modelling analysis. The results show that 11.20% of this dataset is identified as containing hate in different categories. These evaluations show that online hate is manifested in various forms, confirming the complicated and volatile nature of detection in the wild.

Verifiable Fairness: Privacy-preserving Computation of Fairness for Machine Learning Systems

Sep 12, 2023

Abstract: Fair machine learning is a thriving and vibrant research topic. In this paper, we propose Fairness as a Service (FaaS), a secure, verifiable and privacy-preserving protocol to compute and verify the fairness of any machine learning (ML) model. In the design of FaaS, the data and outcomes are represented through cryptograms to ensure privacy. Also, zero-knowledge proofs guarantee the well-formedness of the cryptograms and underlying data. FaaS is model-agnostic and can support various fairness metrics; hence, it can be used as a service to audit the fairness of any ML model. Our solution requires no trusted third party or private channels for the computation of the fairness metric. The security guarantees and commitments are implemented in a way that every step is securely transparent and verifiable from the start to the end of the process. The cryptograms of all input data are publicly available for everyone, e.g., auditors, social activists and experts, to verify the correctness of the process. We implemented FaaS to investigate performance and demonstrate the successful use of FaaS for a publicly available data set with thousands of entries.

A Practical Deep Learning-Based Acoustic Side Channel Attack on Keyboards

Aug 02, 2023

Abstract: With recent developments in deep learning, the ubiquity of microphones and the rise in online services via personal devices, acoustic side channel attacks present a greater threat to keyboards than ever. This paper presents a practical implementation of a state-of-the-art deep learning model to classify laptop keystrokes using a smartphone's integrated microphone. When trained on keystrokes recorded by a nearby phone, the classifier achieved an accuracy of 95%, the highest accuracy seen without the use of a language model. When trained on keystrokes recorded using the video-conferencing software Zoom, an accuracy of 93% was achieved, a new best for the medium. Our results prove the practicality of these side channel attacks via off-the-shelf equipment and algorithms. We discuss a series of mitigation methods to protect users against such attacks.

Technologies for Trustworthy Machine Learning: A Survey in a Socio-Technical Context

Jul 17, 2020

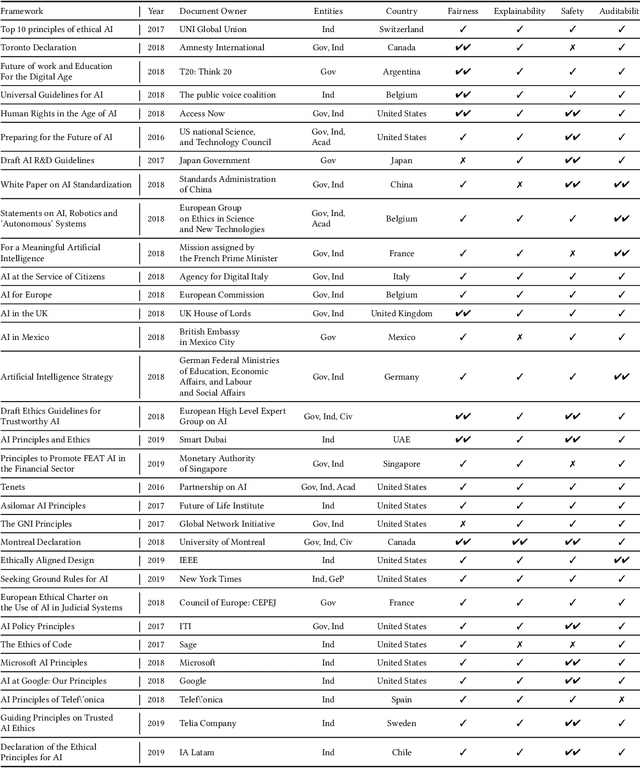

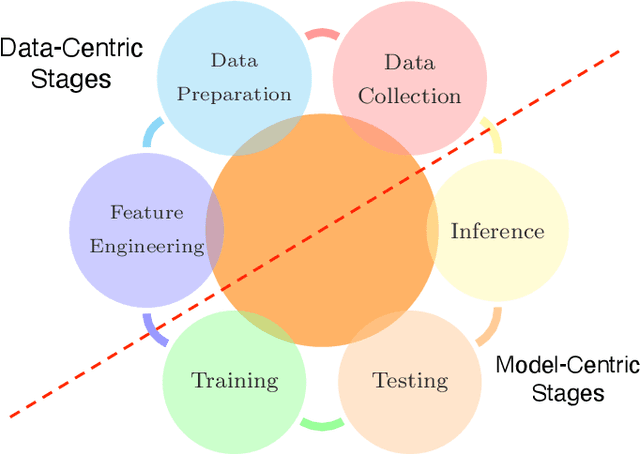

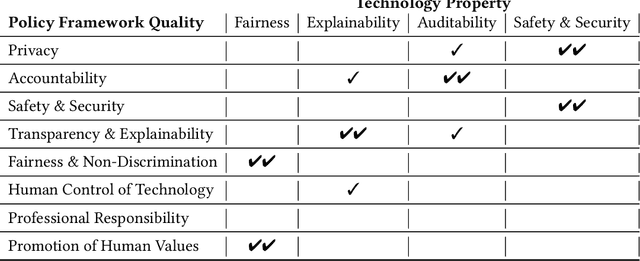

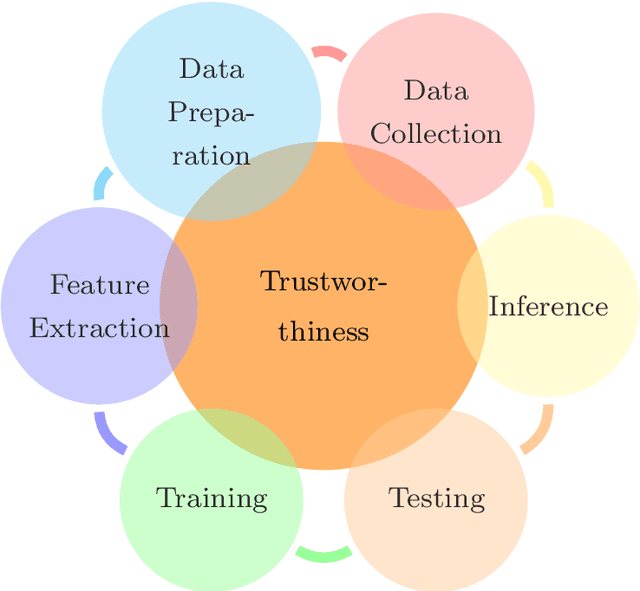

Abstract: Concerns about the societal impact of AI-based services and systems have encouraged governments and other organisations around the world to propose AI policy frameworks to address fairness, accountability, transparency and related topics. To achieve the objectives of these frameworks, the data and software engineers who build machine-learning systems require knowledge about a variety of relevant supporting tools and techniques. In this paper we provide an overview of technologies that support building trustworthy machine learning systems, i.e., systems whose properties justify that people place trust in them. We argue that four categories of system properties are instrumental in achieving the policy objectives, namely fairness, explainability, auditability and safety & security (FEAS). We discuss how these properties need to be considered across all stages of the machine learning life cycle, from data collection through run-time model inference. Accordingly, we survey the main technologies with respect to all four of the FEAS properties, for data-centric as well as model-centric stages of the machine learning system life cycle. We conclude with an identification of open research problems, with a particular focus on the connection between trustworthy machine learning technologies and their implications for individuals and society.

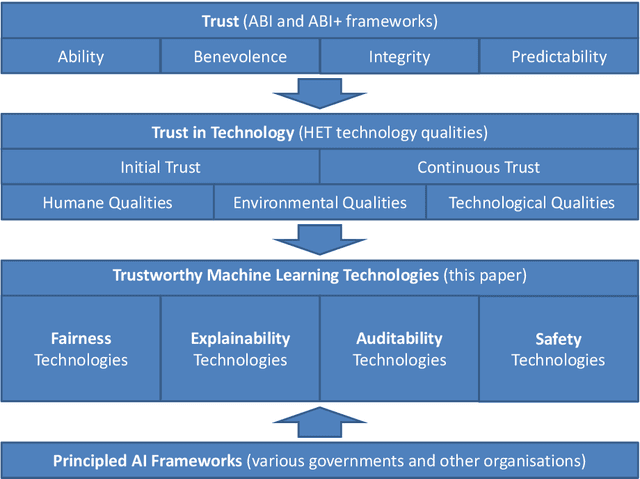

The relationship between trust in AI and trustworthy machine learning technologies

Dec 03, 2019

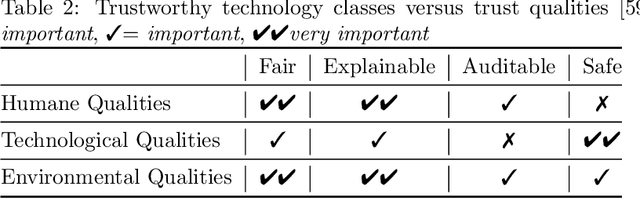

Abstract: To build AI-based systems that users and the public can justifiably trust, one needs to understand how machine learning technologies impact the trust placed in these services. To guide technology developments, this paper provides a systematic approach to relate social science concepts of trust with the technologies used in AI-based services and products. We conceive trust as discussed in the ABI (Ability, Benevolence, Integrity) framework and use a recently proposed mapping of ABI on qualities of technologies. We consider four categories of machine learning technologies, namely those for Fairness, Explainability, Auditability and Safety (FEAS), and discuss if and how these possess the required qualities. Trust can be impacted throughout the life cycle of AI-based systems, and we introduce the concept of Chain of Trust to discuss technological needs for trust in different stages of the life cycle. FEAS has obvious relations with known frameworks, and therefore we relate FEAS to a variety of international Principled AI policy and technology frameworks that have emerged in recent years.
