Karen Egiazarian

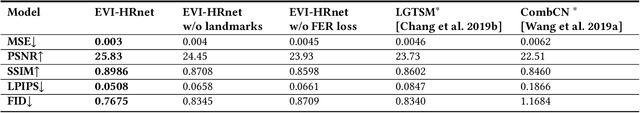

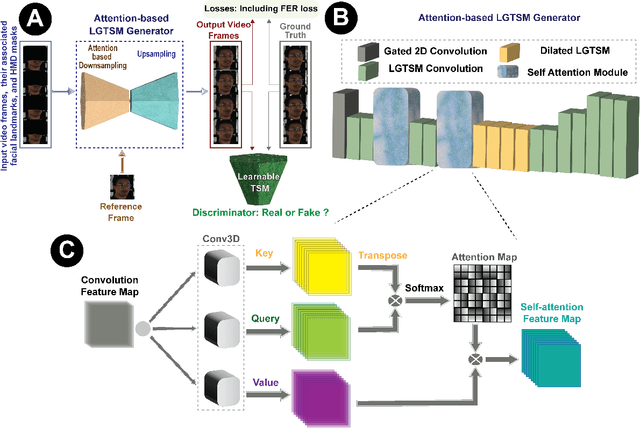

Towards Realistic Landmark-Guided Facial Video Inpainting Based on GANs

Feb 14, 2024

Abstract: Facial video inpainting plays a crucial role in a wide range of applications, including but not limited to the removal of obstructions in video conferencing and telemedicine, enhancement of facial expression analysis, privacy protection, integration of graphical overlays, and virtual makeup. This domain presents serious challenges due to the intricate nature of facial features and the inherent human familiarity with faces, which heightens the need for accurate and convincing completions. Focusing on occlusion removal in this context, we address the task of generating complete images from facial data covered by masks while ensuring both spatial and temporal coherence. Our study introduces a network for expression-based video inpainting that employs generative adversarial networks (GANs) to handle static and moving occlusions across all frames. By utilizing facial landmarks and an occlusion-free reference image, our model consistently maintains the user's identity across frames. We further enhance emotion preservation through a customized facial expression recognition (FER) loss function, ensuring detailed inpainted outputs. Our proposed framework adaptively removes occlusions from facial videos, whether they are static or dynamic across frames, while providing realistic and coherent results.

Expression-aware video inpainting for HMD removal in XR applications

Jan 25, 2024

Abstract: Head-mounted displays (HMDs) serve as indispensable devices for observing extended reality (XR) environments and virtual content. However, HMDs present an obstacle to external recording techniques because they block the upper face of the user. This limitation significantly affects social XR applications, specifically teleconferencing, where facial features and eye gaze information play a vital role in creating an immersive user experience. In this study, we propose a new network for expression-aware video inpainting for HMD removal (EVI-HRnet) based on generative adversarial networks (GANs). Our model effectively fills in missing information, guided by facial landmarks and a single occlusion-free reference image of the user. Through this reference frame, the framework and its components preserve the user's identity across frames. To further improve the realism of the inpainted output, we introduce a novel facial expression recognition (FER) loss function for emotion preservation. Our results demonstrate that the proposed framework can remove HMDs from facial videos while maintaining the subject's facial expression and identity. Moreover, the outputs exhibit temporal consistency across the inpainted frames. This lightweight framework presents a practical approach to HMD occlusion removal, with the potential to enhance various collaborative XR applications without the need for additional hardware.

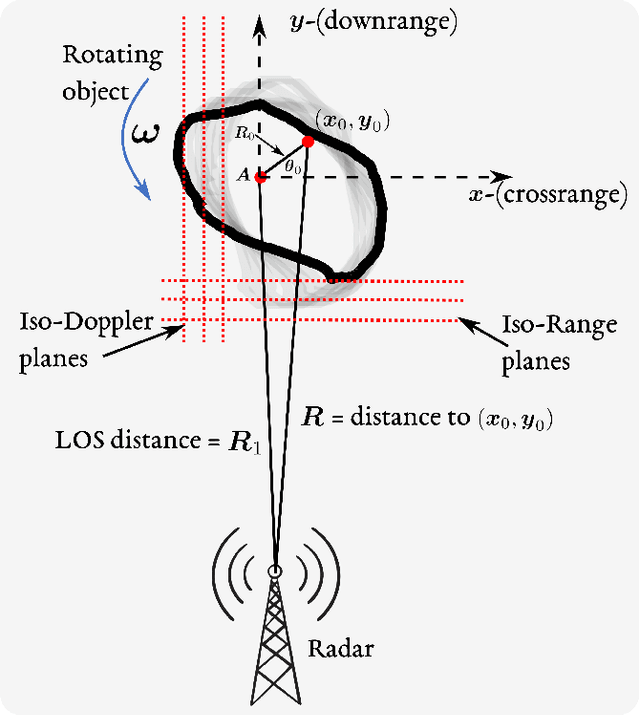

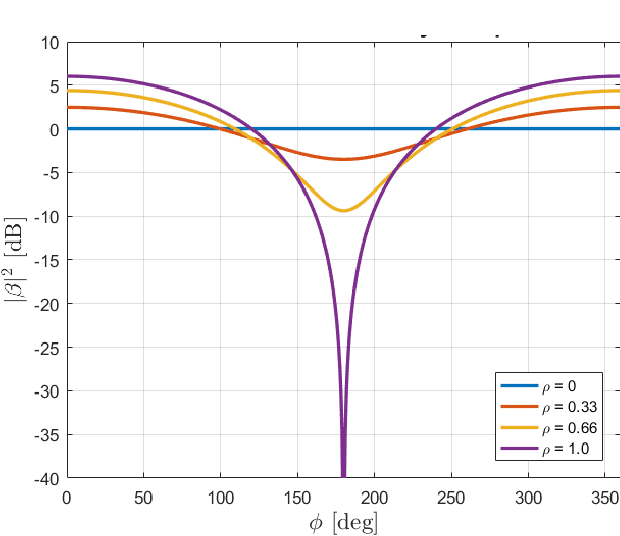

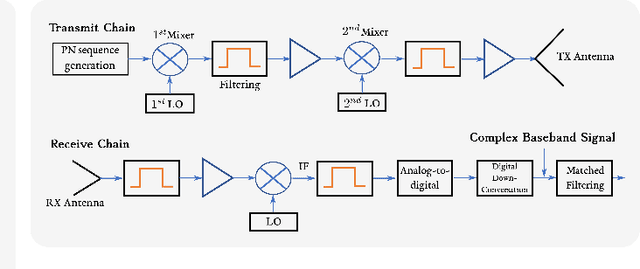

An Overview of Advances in Signal Processing Techniques for Classical and Quantum Wideband Synthetic Apertures

May 11, 2022

Abstract: Rapid developments in synthetic aperture (SA) systems, which generate a larger aperture with greater angular resolution than is inherently possible from the physical dimensions of a single sensor alone, are leading to novel research avenues in several signal processing applications. SAs may either use a mechanical positioner to move an antenna through space or deploy a distributed network of sensors. With the advent of new hardware technologies, SAs are becoming denser, and the recent opening of higher frequency bands has led to wide SA bandwidths. In general, new techniques and setups are required to harness the potential of SAs that are wide in both space and bandwidth. Herein, we provide a brief overview of emerging signal processing trends in such spatially and spectrally wideband SA systems. This guide is intended to help newcomers navigate the most critical issues in SA analysis and to support the development of new theories in the field. In particular, we cover the theoretical framework and practical underpinnings of wideband SA radar, channel sounding, sonar, radiometry, and optical applications. Apart from classical SA applications, we also discuss quantum electric-field-sensing probes in SAs, which are currently under active research but remain at a nascent stage of development.

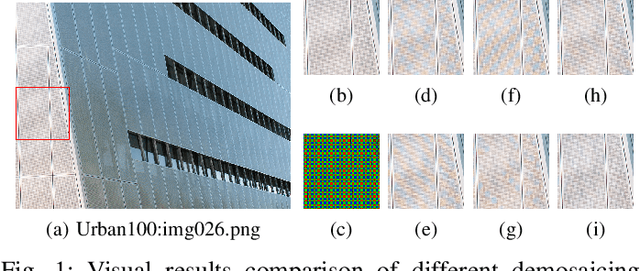

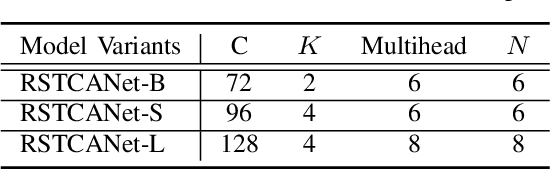

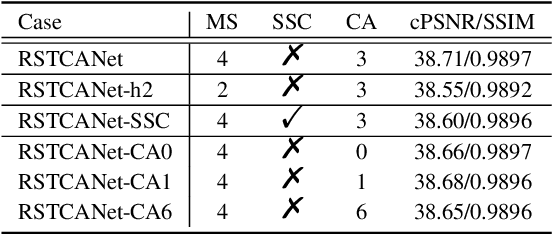

Residual Swin Transformer Channel Attention Network for Image Demosaicing

Apr 14, 2022

Abstract: Image demosaicing is the problem of interpolating full-resolution color images from raw sensor (color filter array) data. During the last decade, deep neural networks have been widely used in image restoration, and in demosaicing in particular, attaining significant performance improvements. In recent years, vision transformers have been designed and successfully used in various computer vision applications. SwinIR, a recent image restoration method based on the Swin Transformer (ST), demonstrates state-of-the-art performance with fewer parameters than neural network-based methods. Inspired by the success of SwinIR, in this paper we propose a novel Swin Transformer-based network for image demosaicing, called RSTCANet. To extract image features, RSTCANet stacks several residual Swin Transformer Channel Attention blocks (RSTCAB), introducing channel attention for every two successive ST blocks. Extensive experiments demonstrate that RSTCANet outperforms state-of-the-art image demosaicing methods while having fewer parameters.
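To make the inverse problem concrete, here is a minimal sketch of the forward step that demosaicing undoes: sampling a full RGB image through a color filter array. It assumes an RGGB Bayer layout and NumPy; the function name `bayer_mosaic` is hypothetical, and RSTCANet itself is not shown.

```python
import numpy as np

def bayer_mosaic(rgb: np.ndarray) -> np.ndarray:
    """Sample an H x W x 3 RGB image with an RGGB Bayer pattern (assumed layout).

    Returns an H x W raw image that keeps exactly one color value per pixel.
    """
    h, w, _ = rgb.shape
    raw = np.zeros((h, w), dtype=rgb.dtype)
    raw[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red at even rows, even columns
    raw[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green at even rows, odd columns
    raw[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green at odd rows, even columns
    raw[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue at odd rows, odd columns
    return raw

rgb = np.random.default_rng(0).random((4, 6, 3))
raw = bayer_mosaic(rgb)  # the only input a demosaicing network receives
```

A demosaicing method receives only `raw` and must estimate the two missing color values at every pixel; half of the samples are green, which is why green is usually the easiest channel to reconstruct.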

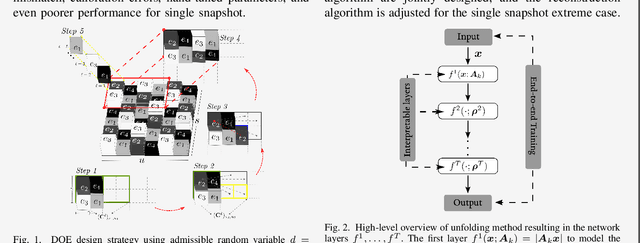

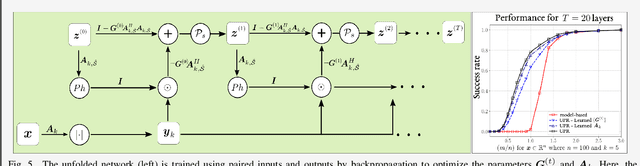

Unfolding-Aided Bootstrapped Phase Retrieval in Optical Imaging

Mar 03, 2022

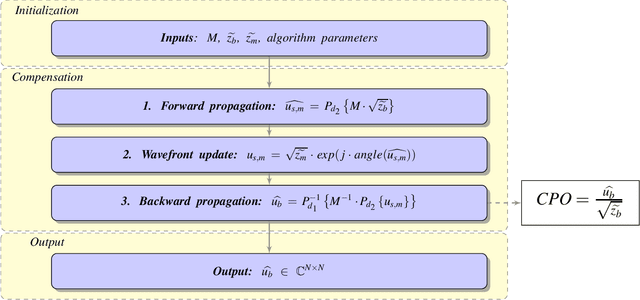

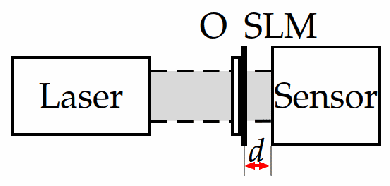

Abstract: Phase retrieval in optical imaging refers to the recovery of a complex signal from phaseless data acquired in the form of its diffraction patterns. These patterns are acquired through a system with a coherent light source that employs a diffractive optical element (DOE) to modulate the scene, resulting in coded diffraction patterns at the sensor. Recently, the hybrid approach of model-driven networks, or deep unfolding, has emerged as an effective alternative because it bounds the complexity of phase retrieval algorithms while retaining their efficacy. Additionally, such hybrid approaches have shown promise in improving the design of DOEs that satisfy theoretical uniqueness conditions. There are opportunities to exploit novel experimental setups and to resolve even more complex DOE phase retrieval applications. This paper presents an overview of algorithms and applications of deep unfolding for bootstrapped phase retrieval, regardless of whether the near, middle, or far zone is considered.
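A minimal sketch of a far-zone coded diffraction forward model, assuming the common form y_k = |F(d_k ⊙ x)|², where F is a unitary 2-D DFT and d_k are random phase-only DOE masks. The mask distribution, array sizes, and the name `coded_diffraction_patterns` are illustrative assumptions; near and middle zones would replace the FFT with a different propagation operator, and no unfolding network is shown.

```python
import numpy as np

def coded_diffraction_patterns(x: np.ndarray, masks: np.ndarray) -> np.ndarray:
    """Return K intensity patterns y_k = |F(d_k * x)|^2 (far-zone model, assumed).

    x:     (n, n) complex scene
    masks: (K, n, n) phase-only DOE modulations d_k
    """
    modulated = masks * x[None, :, :]               # apply each DOE to the scene
    spectra = np.fft.fft2(modulated, norm="ortho")  # unitary 2-D DFT over the last two axes
    return np.abs(spectra) ** 2                     # the sensor records intensity only

rng = np.random.default_rng(0)
n, K = 32, 4
x = rng.standard_normal((n, n)) * np.exp(1j * 2 * np.pi * rng.random((n, n)))
masks = np.exp(1j * 2 * np.pi * rng.random((K, n, n)))  # random phase masks (assumption)
y = coded_diffraction_patterns(x, masks)
```

Multiplying `x` by a constant phase factor leaves every pattern unchanged, which illustrates why phase retrieval recovers the signal only up to a global phase.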

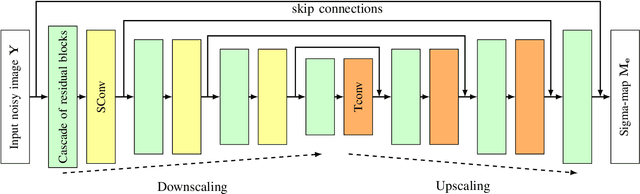

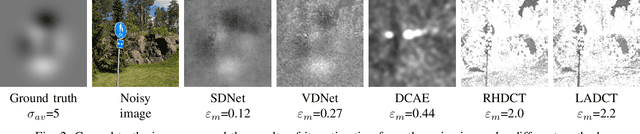

Learning-based Noise Component Map Estimation for Image Denoising

Sep 24, 2021

Abstract: This paper considers the problem of denoising images corrupted by non-stationary noise. Since in practice no a priori information about the noise is available, noise statistics must be estimated before denoising. We propose a deep convolutional neural network (CNN)-based method for estimating a map of local, patch-wise standard deviations of noise (the so-called sigma-map). It achieves state-of-the-art accuracy in sigma-map estimation for non-stationary noise, as well as in noise variance estimation for additive white Gaussian noise. Extensive denoising experiments using the estimated sigma-maps demonstrate that our method outperforms recent CNN-based blind image denoising methods by up to 6 dB in PSNR, and other state-of-the-art methods based on sigma-map estimation by up to 0.5 dB, while at the same time offering greater flexibility of use. A comparison with the ideal case, in which denoising uses the ground-truth sigma-map, shows that the difference in PSNR is within 0.1-0.2 dB for most noise levels and does not exceed 0.6 dB.
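For intuition on what the network estimates, the sketch computes a ground-truth sigma-map in the situation where the noise realization is known (as when generating synthetic training data): the standard deviation of the noise within each non-overlapping patch. The 8x8 patch size, the two-level noise field, and the helper name `sigma_map` are assumptions for illustration; the proposed method predicts this map from the noisy image alone with a CNN, which is not shown.

```python
import numpy as np

def sigma_map(noise: np.ndarray, patch: int = 8) -> np.ndarray:
    """Patch-wise standard deviation of a known noise field (hypothetical helper)."""
    h, w = noise.shape
    hp, wp = h // patch, w // patch
    # Crop to a multiple of the patch size, then view as (hp, patch, wp, patch) blocks.
    blocks = noise[: hp * patch, : wp * patch].reshape(hp, patch, wp, patch)
    return blocks.std(axis=(1, 3))

rng = np.random.default_rng(0)
# Non-stationary noise: sigma = 5 in the left half, sigma = 25 in the right half.
sigma_field = np.where(np.arange(64) < 32, 5.0, 25.0)[None, :] * np.ones((64, 1))
noise = rng.standard_normal((64, 64)) * sigma_field
smap = sigma_map(noise, patch=8)  # one sigma estimate per 8x8 patch
```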

SSR-PR: Single-shot Super-Resolution Phase Retrieval based two prior calibration tests

Aug 12, 2021

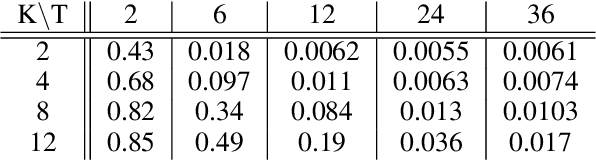

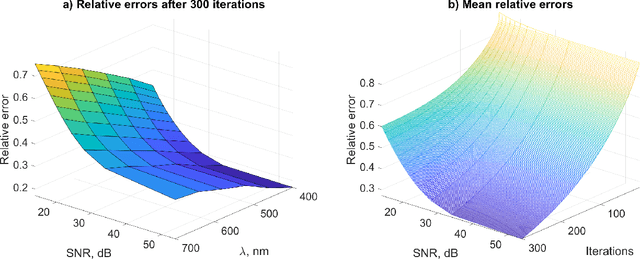

Abstract: We propose a novel approach and algorithm, based on two preliminary tests of the optical system elements, to enhance super-resolved complex-valued imaging. The approach is developed for inverse phase imaging in a single-shot lensless optical setup, where imaging is based on wavefront modulation by a single binary phase mask. The preliminary tests compensate for errors in the optical system and correct the carrying wavefront, reducing the gap between real-life experiments and computational modeling, which significantly improves imaging both qualitatively and quantitatively. The two tests observe the laser beam alone and the phase mask alone, and can be considered a preliminary system calibration. The corrected carrying wavefront is embedded into the proposed iterative Single-shot Super-Resolution Phase Retrieval (SSR-PR) algorithm. Improved upsampling of the initial diffraction pattern and a combination of sparse and deep learning-based filters achieve the super-resolved reconstructions. Simulations and physical experiments demonstrate high-quality super-resolution phase imaging. In simulations, we show that the SSR-PR algorithm corrects the errors of the proposed optical system and reconstructs phase details 4x smaller than the sensor pixel size. In a physical experiment, 2 µm thick lines of a USAF phase target were resolved; this is almost 2x smaller than the sensor pixel size and corresponds to the smallest resolvable group of the test target used. For phase bio-imaging, we provide Buccal Epithelial Cells reconstructed with computational super-resolution, at a quality comparable to that of a digital holographic system with a 40x magnification objective. Furthermore, the single-shot advantage makes it possible to record dynamic scenes, where the frame rate is limited only by the camera used. We provide an amplitude-phase video clip of a moving, living single-celled eukaryote.
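In a lensless setup of this kind, the wavefront modulated by the binary phase mask propagates freely to the sensor. One common way to model that free-space step is the angular spectrum method, sketched here as an assumption rather than as the SSR-PR propagation model; the wavelength (532 nm), pixel pitch (3.45 µm), and distance (5 mm) are hypothetical values, and neither the calibration-corrected carrying wavefront nor the SSR-PR iterations are included.

```python
import numpy as np

def angular_spectrum(u: np.ndarray, wavelength: float, dx: float, z: float) -> np.ndarray:
    """Propagate a complex field u over distance z with the angular spectrum method."""
    n, m = u.shape
    fx = np.fft.fftfreq(m, d=dx)
    fy = np.fft.fftfreq(n, d=dx)
    FX, FY = np.meshgrid(fx, fy)                   # (n, m) spatial-frequency grids
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0)  # transfer function; evanescent waves dropped
    return np.fft.ifft2(np.fft.fft2(u) * H)

rng = np.random.default_rng(0)
obj = np.exp(1j * rng.random((128, 128)))                   # phase-only object
mask = np.exp(1j * np.pi * rng.integers(0, 2, (128, 128)))  # binary phase mask, levels {0, pi}
field = angular_spectrum(mask * obj, wavelength=532e-9, dx=3.45e-6, z=5e-3)
intensity = np.abs(field) ** 2                              # what the sensor records
```

With these parameters every sampled frequency propagates, so the transfer function has unit modulus and the recorded intensity conserves the total energy of the modulated field.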

ADMM and Spectral Proximity Operators in Hyperspectral Broadband Phase Retrieval for Quantitative Phase Imaging

May 14, 2021

Abstract: A novel formulation of hyperspectral broadband phase retrieval is developed for the scenario where both the object and the modulation phase masks are spectrally varying. The proposed algorithm is based on a complex-domain version of the alternating direction method of multipliers (ADMM) and on Spectral Proximity Operators (SPO) derived for Gaussian and Poissonian observations. Computing these operators reduces to solving sets of cubic (for Gaussian) and quadratic (for Poissonian) algebraic equations. These proximity operators resolve two problems. First, the complex-domain spectral components of the signals are extracted from the total intensity observations, which are calculated as sums of the signal spectral intensities; in this way, spectral analysis of the total intensities is achieved. Second, the noisy observations are filtered by balancing the noisy intensity observations against their predicted counterparts. Whether the hyperspectral broadband phase retrieval problem can be resolved, and the spectrally varying object found, is essentially determined by the spectral properties of the object and of the image formation operators. Simulation tests demonstrate that phase retrieval in this formulation can be successfully resolved.

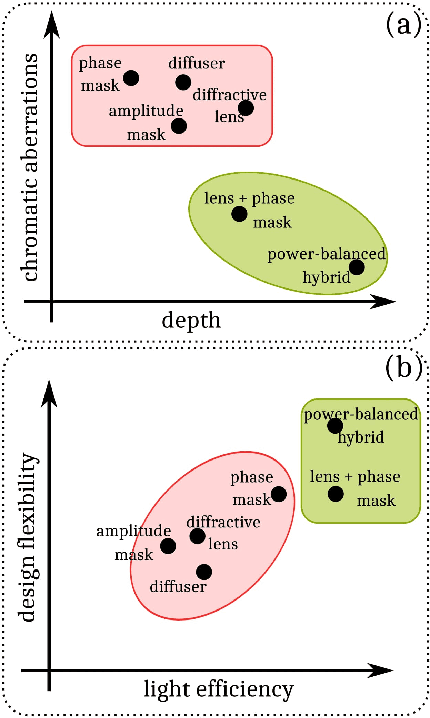

Optimized Power-Balanced Hybrid Phase-Coded Optics and Inverse Imaging for Achromatic EDoF

Mar 09, 2021

Abstract: This paper introduces the power-balanced hybrid optical imaging system, a special computational camera design in which image formation relies on a refractive lens and a Multilevel Phase Mask (MPM) acting as a diffractive optical element (DoE). The system provides a long focal depth and low chromatic aberrations thanks to the MPM, and a high concentration of light energy thanks to the refractive lens. The paper also introduces the concept of an optimal power balance between the lens and the MPM for achromatic extended-depth-of-field (EDoF) imaging. To optimize this power balance, as well as the MPM itself, using neural network techniques, we build a fully differentiable image formation model for the joint optimization of the optical and imaging parameters of the designed computational camera. Additionally, we determine an optimal Wiener-like inverse imaging optical transfer function (OTF) to reconstruct a sharp image from the defocused observation. We compare the designed system numerically and experimentally with its lensless and lens-only counterparts for the visible wavelength range of 400-700 nm and the EDoF range of 0.5-1000 m. The results demonstrate that the proposed system equipped with the optimal OTF outperforms its lensless and lens-only counterparts (even when they are used with optimized OTFs) in terms of reconstruction quality at off-focus distances.
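A Wiener-type inverse filter can be sketched as W = H* / (|H|² + ε), applied to the observation in the Fourier domain. In this minimal sketch a Gaussian OTF stands in for the hybrid lens plus MPM system and a constant regularizer ε replaces a noise-to-signal term; both are assumptions, the helper names are hypothetical, and the paper's optimized OTF is not reproduced.

```python
import numpy as np

def gaussian_otf(n: int, sigma: float) -> np.ndarray:
    """OTF of a Gaussian blur with standard deviation sigma (in pixels), stand-in system."""
    f = np.fft.fftfreq(n)
    fx, fy = np.meshgrid(f, f, indexing="ij")
    return np.exp(-2 * np.pi**2 * sigma**2 * (fx**2 + fy**2))

def wiener_inverse(observed: np.ndarray, otf: np.ndarray, reg: float = 1e-3) -> np.ndarray:
    """Regularized inverse filter W = conj(H) / (|H|^2 + reg) applied in the Fourier domain."""
    w = np.conj(otf) / (np.abs(otf) ** 2 + reg)
    return np.real(np.fft.ifft2(np.fft.fft2(observed) * w))

rng = np.random.default_rng(0)
sharp = rng.random((64, 64))
otf = gaussian_otf(64, sigma=1.0)
blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * otf))  # noise-free circular blur
restored = wiener_inverse(blurred, otf, reg=1e-6)
```

The regularizer trades sharpness against noise amplification: where |H| is small, the filter gain is capped near |H|/ε instead of diverging as 1/|H|.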

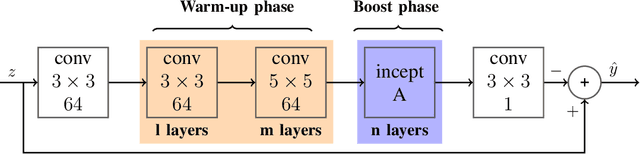

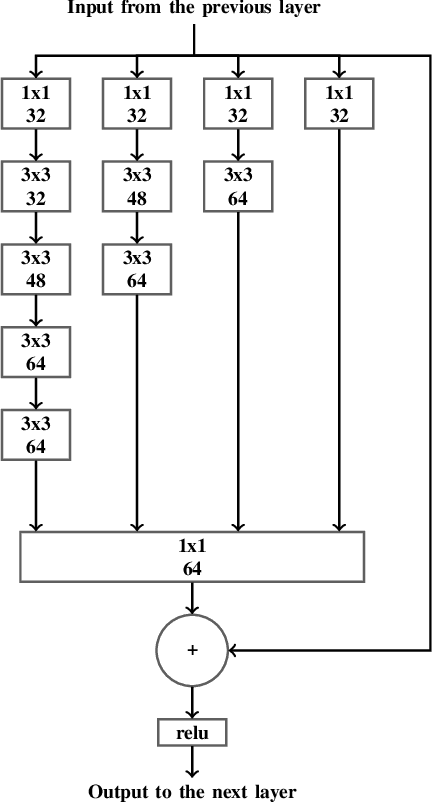

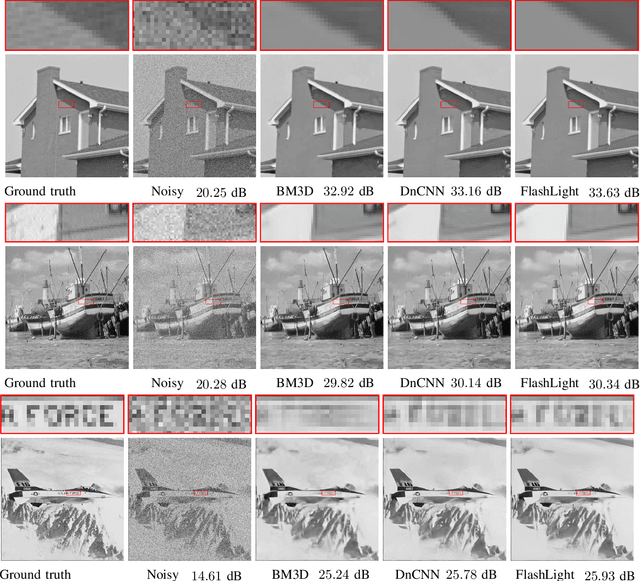

Flashlight CNN Image Denoising

Mar 02, 2020

Abstract: This paper proposes a learning-based denoising method called FlashLight CNN (FLCNN), which implements a deep neural network for image denoising. The proposed approach is based on deep residual networks and inception networks, and it is able to leverage many more parameters than residual networks alone for denoising grayscale images corrupted by additive white Gaussian noise (AWGN). FlashLight CNN demonstrates state-of-the-art performance when compared quantitatively and visually with current state-of-the-art image denoising methods.
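Grayscale denoisers in this setting are typically evaluated by adding AWGN to a clean image and reporting PSNR. The sketch shows only that degradation model and metric, not the FLCNN architecture, assuming an 8-bit peak value of 255 and a noise level of σ = 25; the helper names are hypothetical.

```python
import numpy as np

def add_awgn(clean: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Corrupt an image with additive white Gaussian noise of standard deviation sigma."""
    return clean + rng.normal(0.0, sigma, size=clean.shape)

def psnr(reference: np.ndarray, estimate: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB."""
    mse = np.mean((reference - estimate) ** 2)
    return 10.0 * np.log10(peak**2 / mse)

rng = np.random.default_rng(0)
clean = rng.uniform(0, 255, size=(256, 256))
noisy = add_awgn(clean, sigma=25.0, rng=rng)
print(f"PSNR of noisy input: {psnr(clean, noisy):.2f} dB")  # near 20*log10(255/25), about 20.17 dB
```

A denoiser's gain is then reported as the PSNR of its output against `clean`, compared with this noisy-input baseline.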
