Kangning Li

Easing Seasickness through Attention Redirection with a Mindfulness-Based Brain-Computer Interface

Jan 15, 2025

Abstract:Seasickness is a prevalent issue that adversely impacts both passenger experiences and the operational efficiency of maritime crews. While techniques that redirect attention have proven effective in alleviating motion sickness symptoms in terrestrial environments, applying similar strategies to manage seasickness poses unique challenges due to the prolonged and intense motion environment associated with maritime travel. In this study, we propose a mindfulness brain-computer interface (BCI), specifically designed to redirect attention with the aim of mitigating seasickness symptoms in real-world settings. Our system utilizes a single-channel headband to capture prefrontal EEG signals, which are then wirelessly transmitted to computing devices for the assessment of mindfulness states. The results are converted into real-time feedback as mindfulness scores and audiovisual stimuli, facilitating a shift in attentional focus from physiological discomfort to mindfulness practices. A total of 43 individuals participated in a real-world maritime experiment consisting of three sessions: a real-feedback mindfulness session, a resting session, and a pseudofeedback mindfulness session. Notably, 81.39% of participants reported that the mindfulness BCI intervention was effective, and there was a significant reduction in the severity of seasickness, as measured by the Misery Scale (MISC). Furthermore, EEG analysis revealed a decrease in the theta/beta ratio, corresponding with the alleviation of seasickness symptoms. A decrease in overall EEG band power during the real-feedback mindfulness session suggests that the mindfulness BCI fosters a more tranquil and downregulated state of brain activity. Together, this study presents a novel nonpharmacological, portable, and effective approach for seasickness intervention, with the potential to enhance the cruising experience for both passengers and crews.

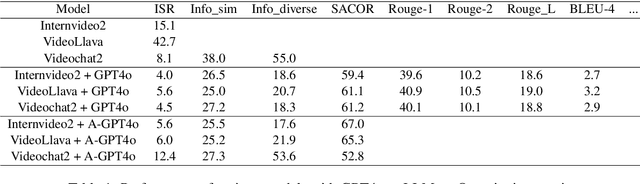

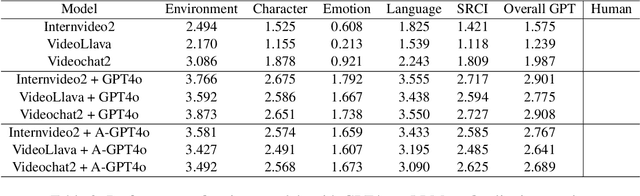

Movie2Story: A framework for understanding videos and telling stories in the form of novel text

Dec 19, 2024

Abstract:Multimodal video-to-text models have made considerable progress, primarily in generating brief descriptions of video content. However, there is still a deficiency in generating rich long-form text descriptions that integrate both video and audio. In this paper, we introduce a framework called M2S, designed to generate novel-length text by combining audio, video, and character recognition. M2S includes modules for video long-form text description and comprehension, audio-based analysis of emotion, speech rate, and character alignment, and visual-based character recognition alignment. By integrating multimodal information using the large language model GPT4o, M2S stands out in the field of multimodal text generation. We demonstrate the effectiveness and accuracy of M2S through comparative experiments and human evaluation. Additionally, the model framework has good scalability and significant potential for future research.

An Integrated Neighborhood and Scale Information Network for Open-Pit Mine Change Detection in High-Resolution Remote Sensing Images

Mar 22, 2024

Abstract:Open-pit mine change detection (CD) in high-resolution (HR) remote sensing images plays a crucial role in mineral development and environmental protection. Significant progress has been made in this field in recent years, largely due to the advancement of deep learning techniques. However, existing deep-learning-based CD methods encounter challenges in effectively integrating neighborhood and scale information, resulting in suboptimal performance. Therefore, by exploring the influence patterns of neighborhood and scale information, this paper proposes an Integrated Neighborhood and Scale Information Network (INSINet) for open-pit mine CD in HR remote sensing images. Specifically, INSINet introduces 8-neighborhood-image information to acquire a larger receptive field, improving the recognition of center image boundary regions. Drawing on techniques of skip connection, deep supervision, and attention mechanism, the multi-path deep supervised attention (MDSA) module is designed to enhance multi-scale information fusion and change feature extraction. Experimental analysis reveals that incorporating neighborhood and scale information enhances the F1 score of INSINet by 6.40%, with improvements of 3.08% and 3.32% respectively. INSINet outperforms existing methods with an Overall Accuracy of 97.69%, Intersection over Union of 71.26%, and F1 score of 83.22%. INSINet shows significance for open-pit mine CD in HR remote sensing images.

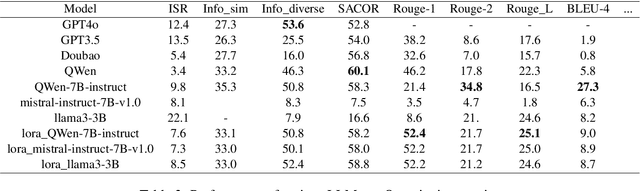

Object Detection in 3D Point Clouds via Local Correlation-Aware Point Embedding

Jan 11, 2023

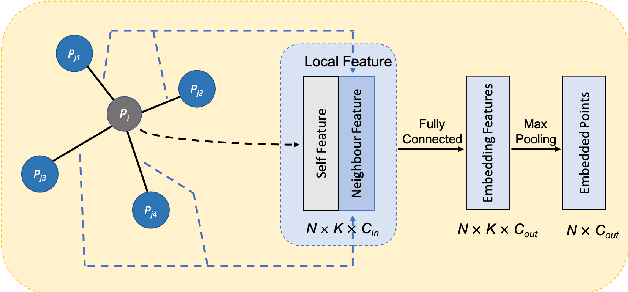

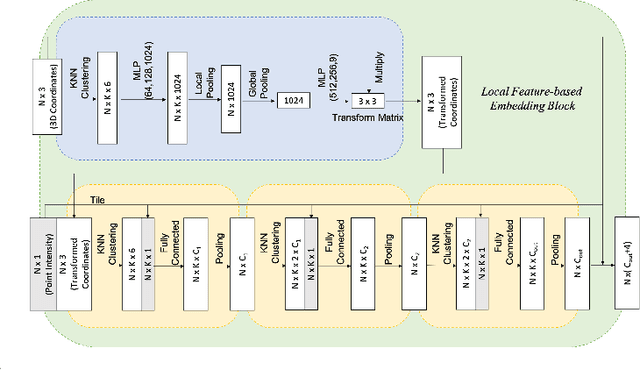

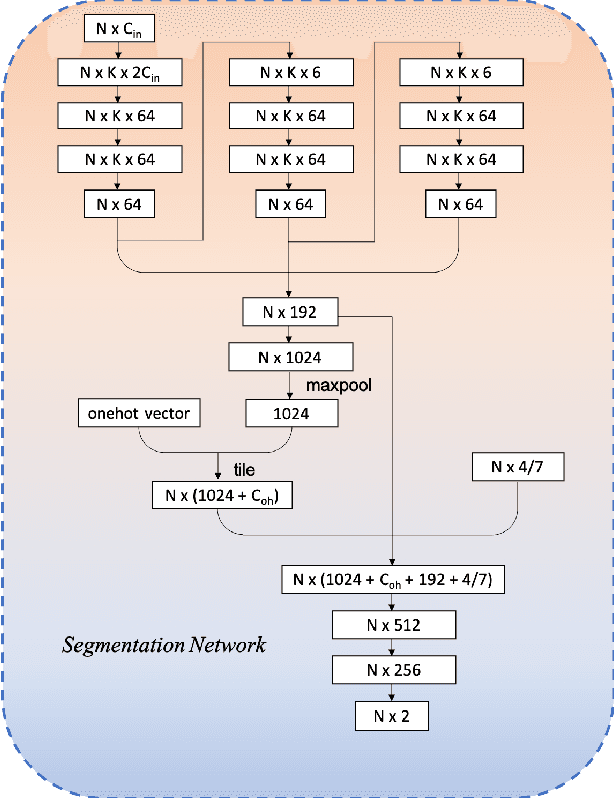

Abstract:We present an improved approach for 3D object detection in point cloud data based on the Frustum PointNet (F-PointNet). Compared to the original F-PointNet, our newly proposed method considers the point neighborhood when computing point features. The newly introduced local neighborhood embedding operation mimics the convolutional operations in 2D neural networks. Thus, the features of each point are computed not only from its own features or those of the whole point cloud, but also with respect to the features of its neighbors. Experiments show that our proposed method achieves better performance than the F-PointNet baseline on 3D object detection tasks.
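To make the idea of a local neighborhood embedding concrete, the following is a minimal sketch and not the authors' actual operation. It assumes that each point's feature vector is extended with a channel-wise max over the relative features of its k nearest neighbors, a common pattern in point cloud networks. The function name `local_neighborhood_embedding`, the parameter `k`, and the choice of max pooling are all hypothetical.

```python
from math import dist


def local_neighborhood_embedding(points, features, k=2):
    """Append a pooled summary of neighbor features to each point's features.

    Hypothetical illustration only: the abstract does not specify the
    neighbor search, the relative-feature form, or the pooling used.
    """
    embedded = []
    for i, center in enumerate(points):
        # Rank all other points by Euclidean distance to the center point.
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: dist(center, points[j]),
        )
        neighbors = others[:k]
        # Feature of each neighbor relative to the center point's feature.
        relative = [
            [fn - fc for fn, fc in zip(features[j], features[i])]
            for j in neighbors
        ]
        # Channel-wise max pooling over the neighborhood.
        pooled = [max(channel) for channel in zip(*relative)]
        # Keep the point's own feature and add the neighborhood summary.
        embedded.append(list(features[i]) + pooled)
    return embedded


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
features = [[1.0], [2.0], [4.0]]
result = local_neighborhood_embedding(points, features, k=1)
```

In this sketch the output for each point has twice as many channels as the input, so the point's own feature and its neighborhood context both reach the later layers, loosely analogous to how a 2D convolution mixes a pixel with its surroundings.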
