Kai Heinrich

Stop ordering machine learning algorithms by their explainability! A user-centered investigation of performance and explainability

Jun 20, 2022

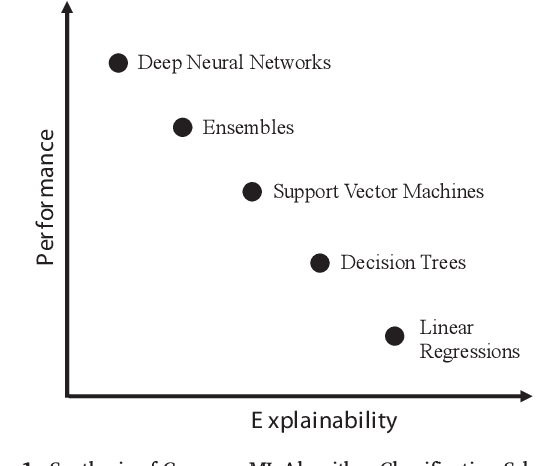

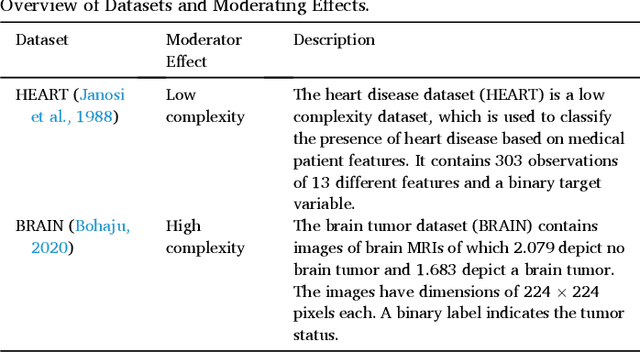

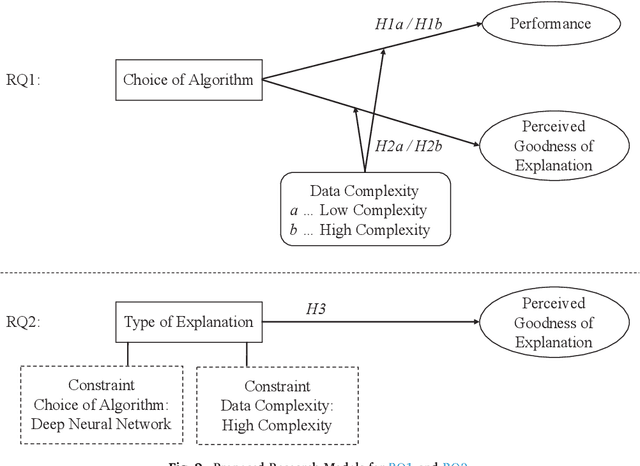

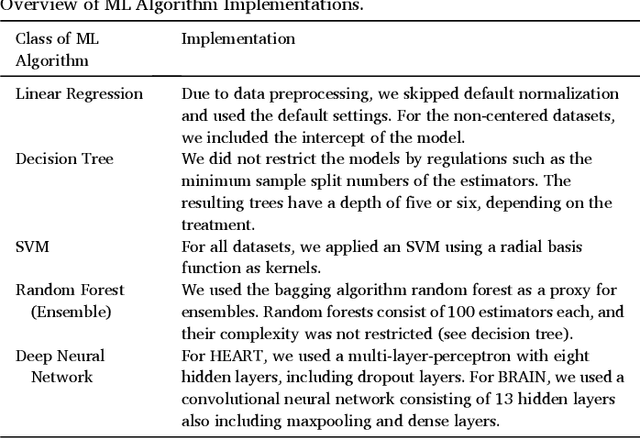

Abstract: Machine learning algorithms enable advanced decision making in contemporary intelligent systems. Research indicates that there is a tradeoff between their model performance and explainability. Machine learning models with higher performance are often based on more complex algorithms and therefore lack explainability, and vice versa. However, there is little to no empirical evidence of this tradeoff from an end user perspective. We aim to provide empirical evidence by conducting two user experiments. Using two distinct datasets, we first measure the tradeoff for five common classes of machine learning algorithms. Second, we address the problem of end user perceptions of explainable artificial intelligence augmentations aimed at increasing the understanding of the decision logic of high-performing complex models. Our results diverge from the widespread assumption of a tradeoff curve and indicate that the tradeoff between model performance and explainability is much less gradual in the end user's perception. This is a stark contrast to assumed inherent model interpretability. Further, we found the tradeoff to be situational, for example due to data complexity. Results of our second experiment show that while explainable artificial intelligence augmentations can be used to increase explainability, the type of explanation plays an essential role in end user perception.

Where Was COVID-19 First Discovered? Designing a Question-Answering System for Pandemic Situations

Apr 19, 2022

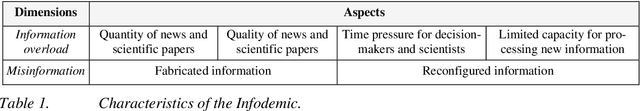

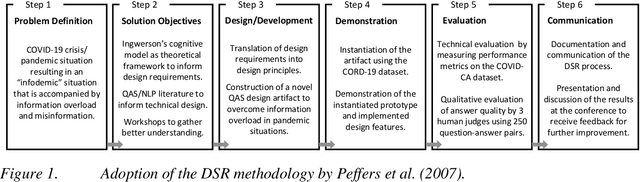

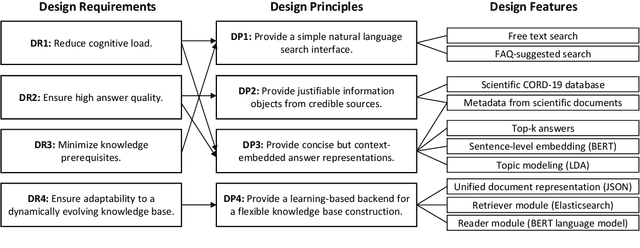

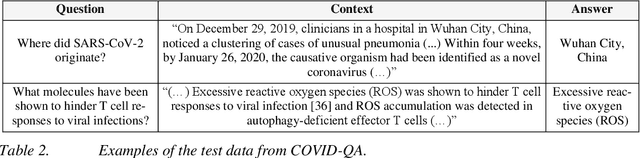

Abstract: The COVID-19 pandemic is accompanied by a massive "infodemic" that makes it hard to identify concise and credible information for COVID-19-related questions, like incubation time, infection rates, or the effectiveness of vaccines. As a novel solution, our paper is concerned with designing a question-answering system based on modern technologies from natural language processing to overcome information overload and misinformation in pandemic situations. To carry out our research, we followed a design science research approach and applied Ingwersen's cognitive model of information retrieval interaction to inform our design process from a socio-technical lens. On this basis, we derived prescriptive design knowledge in terms of design requirements and design principles, which we translated into the construction of a prototypical instantiation. Our implementation is based on the comprehensive CORD-19 dataset, and we demonstrate our artifact's usefulness by evaluating its answer quality based on a sample of COVID-19 questions labeled by biomedical experts.

A Picture is Worth a Collaboration: Accumulating Design Knowledge for Computer-Vision-based Hybrid Intelligence Systems

Apr 23, 2021

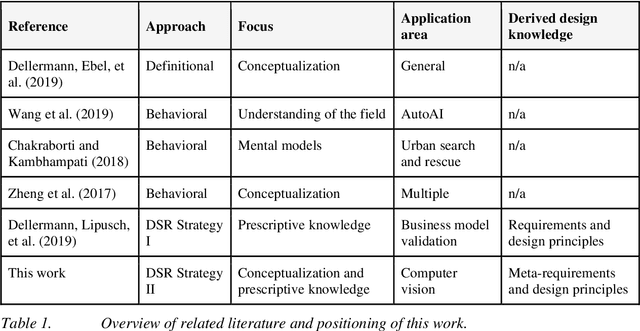

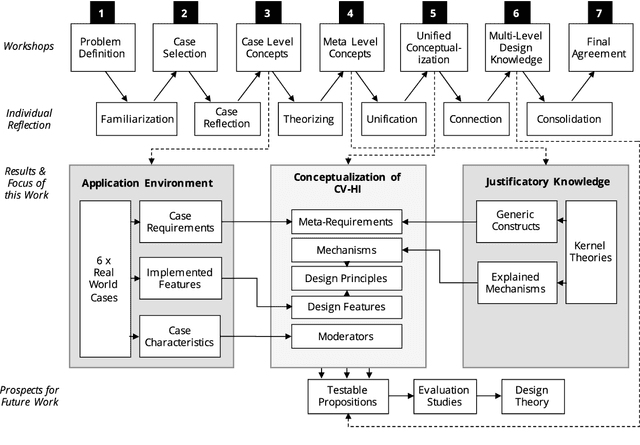

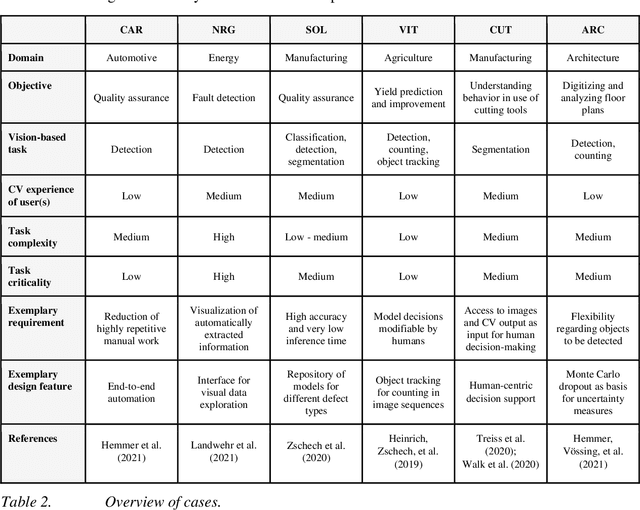

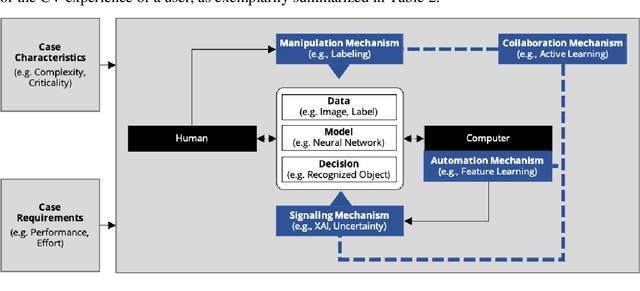

Abstract: Computer vision (CV) techniques try to mimic human capabilities of visual perception to support labor-intensive and time-consuming tasks like the recognition and localization of critical objects. Nowadays, CV increasingly relies on artificial intelligence (AI) to automatically extract useful information from images that can be utilized for decision support and business process automation. However, the focus of extant research is often exclusively on technical aspects when designing AI-based CV systems while neglecting socio-technical facets, such as trust, control, and autonomy. For this purpose, we consider the design of such systems from a hybrid intelligence (HI) perspective and aim to derive prescriptive design knowledge for CV-based HI systems. We apply a reflective, practice-inspired design science approach and accumulate design knowledge from six comprehensive CV projects. As a result, we identify four design-related mechanisms (i.e., automation, signaling, modification, and collaboration) that inform our derived meta-requirements and design principles. This can serve as a basis for further socio-technical research on CV-based HI systems.

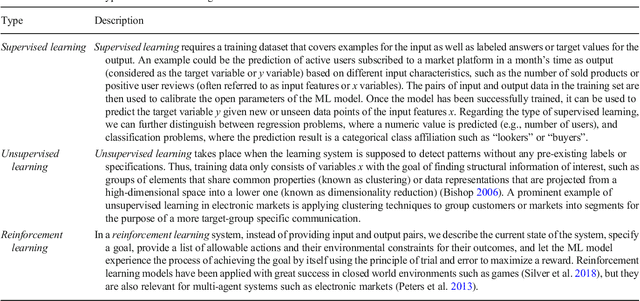

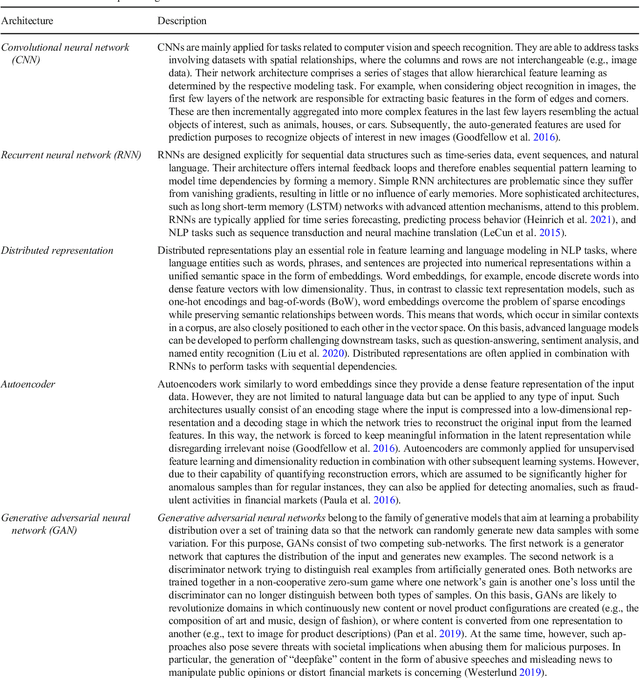

Machine learning and deep learning

Apr 14, 2021

Abstract: Today, intelligent systems that offer artificial intelligence capabilities often rely on machine learning. Machine learning describes the capacity of systems to learn from problem-specific training data to automate the process of analytical model building and solve associated tasks. Deep learning is a machine learning concept based on artificial neural networks. For many applications, deep learning models outperform shallow machine learning models and traditional data analysis approaches. In this article, we summarize the fundamentals of machine learning and deep learning to generate a broader understanding of the methodical underpinning of current intelligent systems. In particular, we provide a conceptual distinction between relevant terms and concepts, explain the process of automated analytical model building through machine learning and deep learning, and discuss the challenges that arise when implementing such intelligent systems in the field of electronic markets and networked business. These naturally go beyond technological aspects and highlight issues in human-machine interaction and artificial intelligence servitization.
