Jyrki Latokartano

AR-based interaction for safe human-robot collaborative manufacturing

Sep 06, 2019

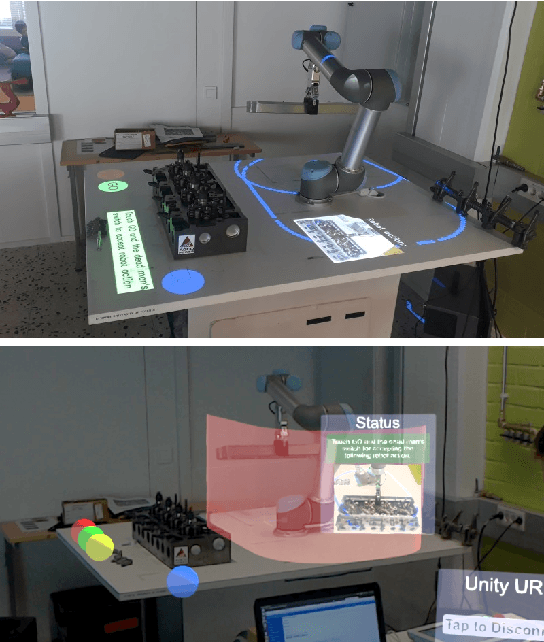

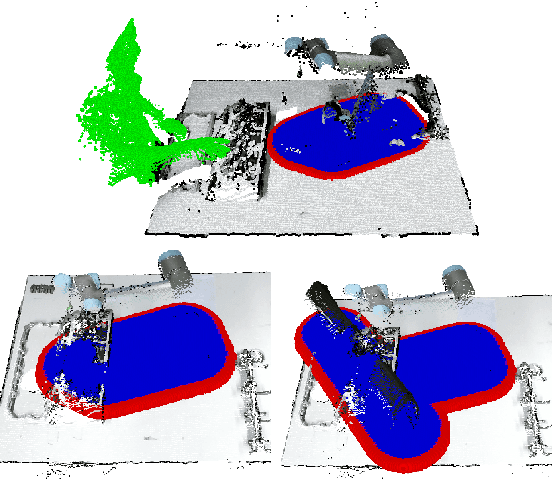

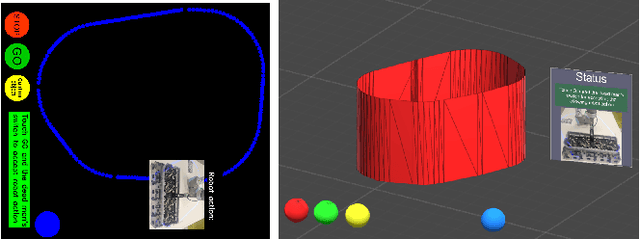

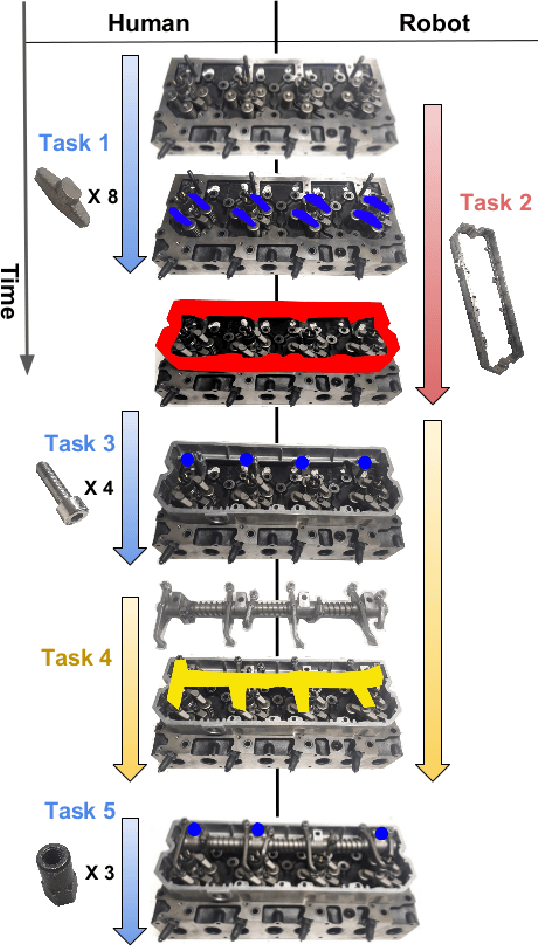

Abstract: Industrial standards define safety requirements for Human-Robot Collaboration (HRC) in industrial manufacturing. In particular, the standards require real-time monitoring and securing of the minimum protective distance between a robot and an operator. In this work, we propose a depth-sensor based model for workspace monitoring and an interactive Augmented Reality (AR) User Interface (UI) for safe HRC. The AR UI is implemented on two hardware platforms: a projector-mirror setup and wearable AR gear (HoloLens). We experiment with the workspace model and UIs in a realistic diesel motor assembly task. Compared to a baseline without interaction and workspace sharing, the AR-based interactive UIs reduce task completion time by 21-24% and robot idle time by 57-64%. However, subjective evaluations reveal that HoloLens-based AR is not yet suitable for industrial manufacturing, while the projector-mirror setup shows clear improvements in safety and work ergonomics.
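The abstract does not describe how the minimum protective distance is checked. As a rough illustration only, and not the paper's depth-sensor workspace model, the sketch below assumes that the operator and the robot are each available as a set of 3D points in metres (for example, from a depth sensor and a robot model) and reports a violation when their closest pair of points is nearer than a chosen threshold. The function names and the `protective_distance_m` parameter are hypothetical; deriving the actual protective distance from the relevant standards is outside its scope.

```python
import math
from typing import Sequence

Point = Sequence[float]  # (x, y, z) in metres; an assumed representation


def min_distance(operator_pts: Sequence[Point], robot_pts: Sequence[Point]) -> float:
    """Brute-force minimum Euclidean distance between two 3D point sets."""
    if not operator_pts or not robot_pts:
        return math.inf  # nothing to compare: no operator or no robot points
    return min(math.dist(p, q) for p in operator_pts for q in robot_pts)


def separation_ok(operator_pts: Sequence[Point], robot_pts: Sequence[Point],
                  protective_distance_m: float) -> bool:
    """True if no operator point is closer to the robot than protective_distance_m."""
    return min_distance(operator_pts, robot_pts) >= protective_distance_m


# Usage example with made-up coordinates.
robot = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.5)]
operator = [(1.0, 0.0, 0.5), (0.8, 0.2, 0.0)]
print(round(min_distance(operator, robot), 3))  # 0.825
print(separation_ok(operator, robot, 0.5))      # True
print(separation_ok(operator, robot, 1.0))      # False
```

The brute-force search costs O(N·M) distance evaluations per frame; a real-time monitor would more likely use a voxel grid or a spatial index over the depth points.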

Benchmarking 6D Object Pose Estimation for Robotics

Jun 06, 2019

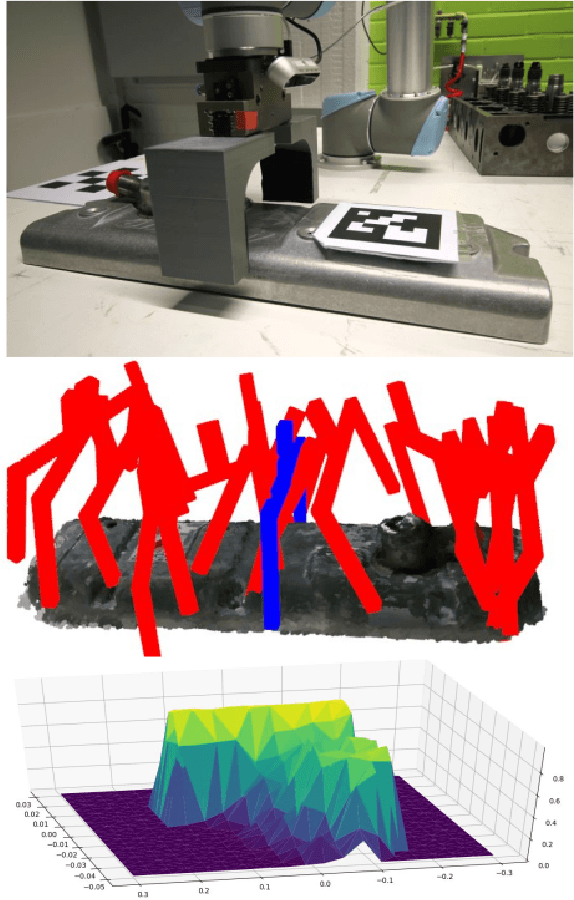

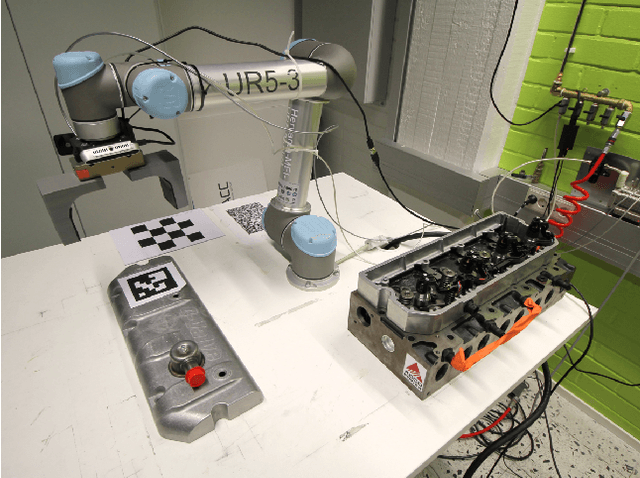

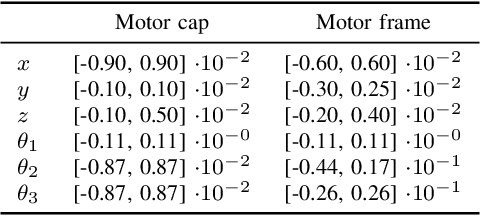

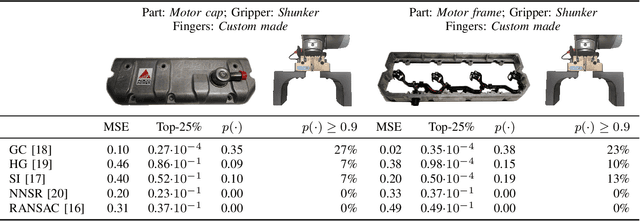

Abstract: Benchmarking 6D object pose estimation for robotics is not straightforward, as sufficient accuracy depends on many factors, e.g., the selected gripper, the dimensions, weight and material of the object, the grasping point, and the robot task itself. We formulate the problem as a successful grasp, i.e., for a fixed set of factors affecting the task, will the given pose estimate provide a sufficiently good grasp to complete the task? The successful grasp is modelled in a probabilistic framework by sampling in the pose error space, executing the task, and automatically detecting success or failure. Hours of sampling and thousands of samples are used to construct a non-parametric probability of a successful grasp given the pose residual. The framework is experimentally validated with real objects and assembly tasks, and through a comparison of several state-of-the-art point cloud based 3D pose estimation methods.
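The sampling-based estimate described in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the pose error is reduced to a single scalar magnitude, `run_trial` is a hypothetical callback standing in for executing the grasp and automatically detecting success, and the non-parametric estimator is a Gaussian-kernel (Nadaraya-Watson) regression chosen for illustration, since the abstract does not name the estimator.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def collect_samples(run_trial: Callable[[float], bool], n: int,
                    max_error: float, seed: int = 0) -> Tuple[List[float], List[bool]]:
    """Draw n pose-error magnitudes uniformly from [0, max_error] and record
    whether the (hypothetical) trial succeeded at each one."""
    rng = random.Random(seed)
    errors = [rng.uniform(0.0, max_error) for _ in range(n)]
    outcomes = [run_trial(e) for e in errors]
    return errors, outcomes


def success_probability(residual: float, errors: Sequence[float],
                        outcomes: Sequence[bool], bandwidth: float) -> float:
    """Gaussian-kernel (Nadaraya-Watson) estimate of P(success | residual)."""
    if len(errors) != len(outcomes):
        raise ValueError("errors and outcomes must have the same length")
    num = den = 0.0
    for e, ok in zip(errors, outcomes):
        # Weight each recorded trial by how close its error is to the query residual.
        w = math.exp(-0.5 * ((residual - e) / bandwidth) ** 2)
        num += w * (1.0 if ok else 0.0)
        den += w
    if den == 0.0:
        raise ValueError("no samples close enough to the query residual")
    return num / den


# Usage example: a stand-in trial that succeeds whenever the error is below 2.5 units.
errors, outcomes = collect_samples(lambda e: e < 2.5, n=2000, max_error=5.0)
for r in (0.5, 2.5, 4.5):
    print(r, round(success_probability(r, errors, outcomes, bandwidth=0.3), 2))
```

The bandwidth sets the amount of smoothing: too small and the estimate is noisy where samples are sparse, too large and it blurs the transition between successful and failed grasps. A full 6D pose residual would need either a multivariate kernel or a scalar error metric that combines translation and rotation.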
