Justin N. Wood

Transformers self-organize like newborn visual systems when trained in prenatal worlds

Jan 06, 2026

Abstract:Do transformers learn like brains? A key challenge in addressing this question is that transformers and brains are trained on fundamentally different data. Brains are initially "trained" on prenatal sensory experiences (e.g., retinal waves), whereas transformers are typically trained on large datasets that are not biologically plausible. We reasoned that if transformers learn like brains, then they should develop the same structure as newborn brains when exposed to the same prenatal data. To test this prediction, we simulated prenatal visual input using a retinal wave generator. Then, using self-supervised temporal learning, we trained transformers to adapt to those retinal waves. During training, the transformers spontaneously developed the same structure as newborn visual systems: (1) early layers became sensitive to edges, (2) later layers became sensitive to shapes, and (3) the models developed larger receptive fields across layers. The organization of newborn visual systems emerges spontaneously when transformers adapt to a prenatal visual world. This developmental convergence suggests that brains and transformers learn in common ways and follow the same general fitting principles.

Are Vision Transformers More Data Hungry Than Newborn Visual Systems?

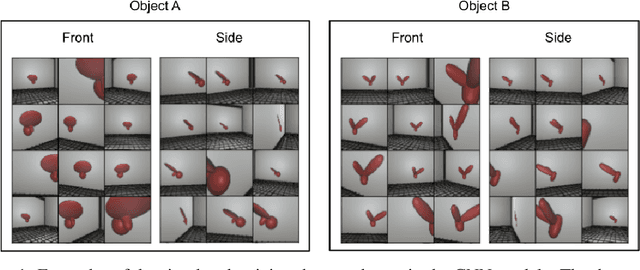

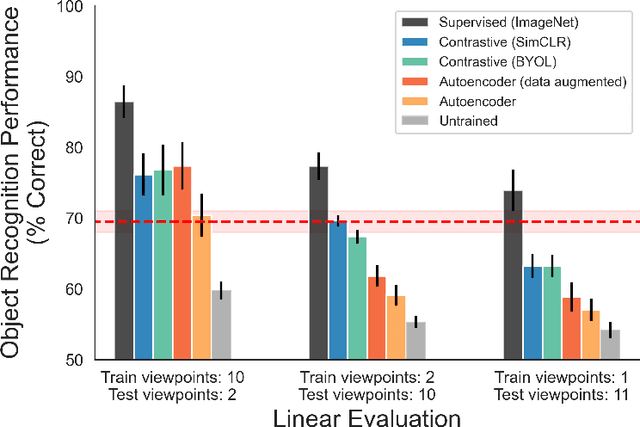

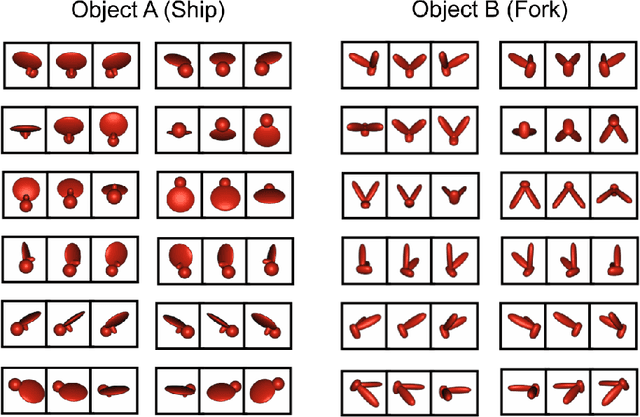

Dec 05, 2023

Abstract:Vision transformers (ViTs) are top-performing models on many computer vision benchmarks and can accurately predict human behavior on object recognition tasks. However, researchers question the value of using ViTs as models of biological learning because ViTs are thought to be more data hungry than brains, with ViTs requiring more training data to reach similar levels of performance. To test this assumption, we directly compared the learning abilities of ViTs and animals by performing parallel controlled-rearing experiments on ViTs and newborn chicks. We first raised chicks in impoverished visual environments containing a single object, then simulated the training data available in those environments by building virtual animal chambers in a video game engine. We recorded the first-person images acquired by agents moving through the virtual chambers and used those images to train self-supervised ViTs that leverage time as a teaching signal, akin to biological visual systems. When ViTs were trained through the eyes of newborn chicks, the ViTs solved the same view-invariant object recognition tasks as the chicks. Thus, ViTs were not more data hungry than newborn visual systems: both learned view-invariant object representations in impoverished visual environments. The flexible and generic attention-based learning mechanism in ViTs, combined with the embodied data streams available to newborn animals, appears sufficient to drive the development of animal-like object recognition.
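The abstract does not state which objective the ViTs used, so the following is only a minimal sketch of one common way to "leverage time as a teaching signal": a contrastive (InfoNCE-style) loss in which temporally adjacent frames are treated as positives and frames from other moments as negatives. The function name `temporal_contrastive_loss`, the pairing of frames (0,1), (2,3), ..., and the `temperature` value are all assumptions made for illustration, not details from the paper.

```python
import numpy as np

def temporal_contrastive_loss(embeddings: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE-style loss over frame embeddings ordered in time.

    Hypothetical sketch: frames (0,1), (2,3), ... are treated as positive
    pairs; every other pair's second frame acts as a negative.
    """
    # Use an even number of frames so they split cleanly into pairs.
    n = (len(embeddings) // 2) * 2
    z = embeddings[:n].astype(float)

    # L2-normalize so dot products are cosine similarities.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    anchors, positives = z[0::2], z[1::2]

    # Similarity of every anchor to every candidate frame.
    logits = anchors @ positives.T / temperature

    # Numerically stable log-softmax along each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # The correct candidate for anchor i is positive i (the diagonal).
    return float(-np.mean(np.diag(log_probs)))
```

The loss is low when adjacent frames map to nearby embeddings and distant frames map to different ones, which is the property that lets temporal continuity stand in for labels.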

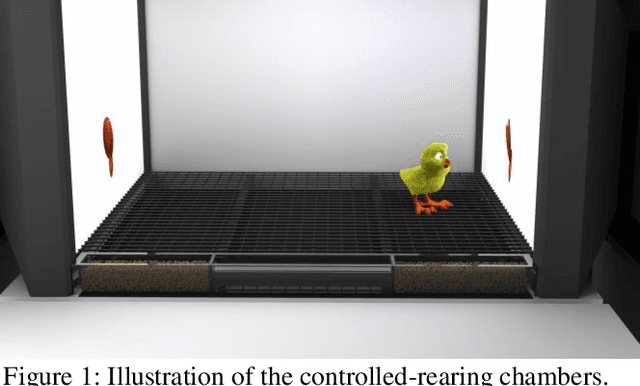

A newborn embodied Turing test for view-invariant object recognition

Jun 08, 2023

Abstract:Recent progress in artificial intelligence has renewed interest in building machines that learn like animals. Almost all of the work comparing learning across biological and artificial systems comes from studies where animals and machines received different training data, obscuring whether differences between animals and machines emerged from differences in learning mechanisms versus training data. We present an experimental approach, a "newborn embodied Turing Test," that allows newborn animals and machines to be raised in the same environments and tested with the same tasks, permitting direct comparison of their learning abilities. To make this platform, we first collected controlled-rearing data from newborn chicks, then performed "digital twin" experiments in which machines were raised in virtual environments that mimicked the rearing conditions of the chicks. We found that (1) machines (deep reinforcement learning agents with intrinsic motivation) can spontaneously develop visually guided preference behavior, akin to imprinting in newborn chicks, and (2) machines are still far from newborn-level performance on object recognition tasks. Almost all of the chicks developed view-invariant object recognition, whereas the machines tended to develop view-dependent recognition. The learning outcomes were also far more constrained in the chicks than in the machines. Ultimately, we anticipate that this approach will help researchers develop embodied AI systems that learn like newborn animals.

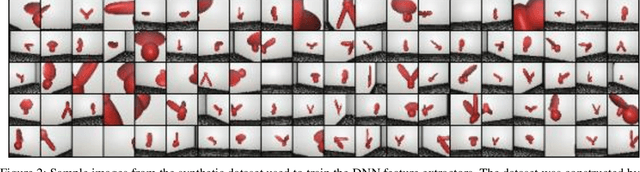

Controlled-rearing studies of newborn chicks and deep neural networks

Dec 12, 2021

Abstract:Convolutional neural networks (CNNs) can now achieve human-level performance on challenging object recognition tasks. CNNs are also the leading quantitative models in terms of predicting neural and behavioral responses in visual recognition tasks. However, there is a widely accepted critique of CNN models: unlike newborn animals, which learn rapidly and efficiently, CNNs are thought to be "data hungry," requiring massive amounts of training data to develop accurate models for object recognition. This critique challenges the promise of using CNNs as models of visual development. Here, we directly examined whether CNNs are more data hungry than newborn animals by performing parallel controlled-rearing experiments on newborn chicks and CNNs. We raised newborn chicks in strictly controlled visual environments, then simulated the training data available in that environment by constructing a virtual animal chamber in a video game engine. We recorded the visual images acquired by an agent moving through the virtual chamber and used those images to train CNNs. When CNNs received similar visual training data as chicks, the CNNs successfully solved the same challenging view-invariant object recognition tasks as the chicks. Thus, the CNNs were not more data hungry than animals: both CNNs and chicks successfully developed robust object models from training data of a single object.

Development of collective behavior in newborn artificial agents

Nov 06, 2021

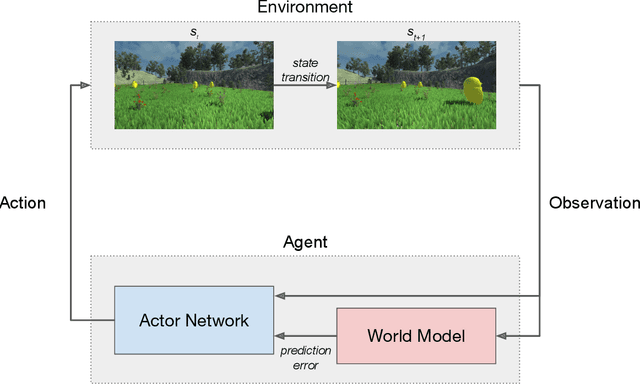

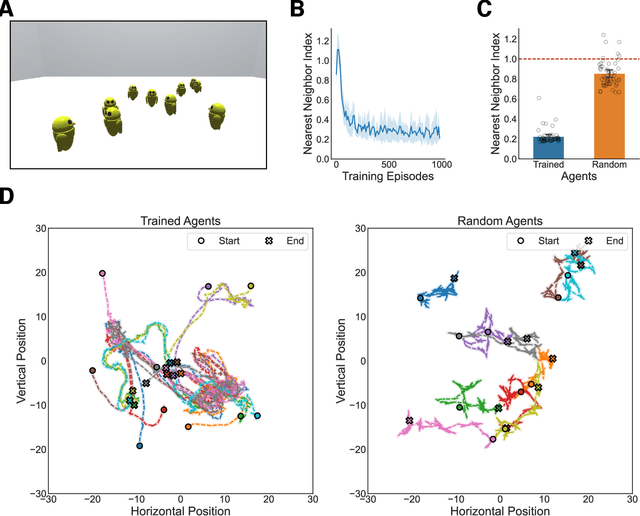

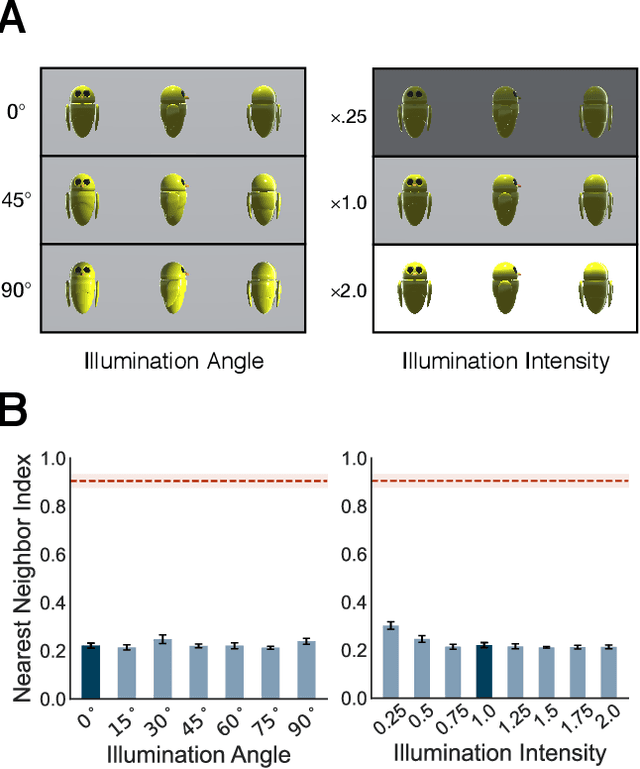

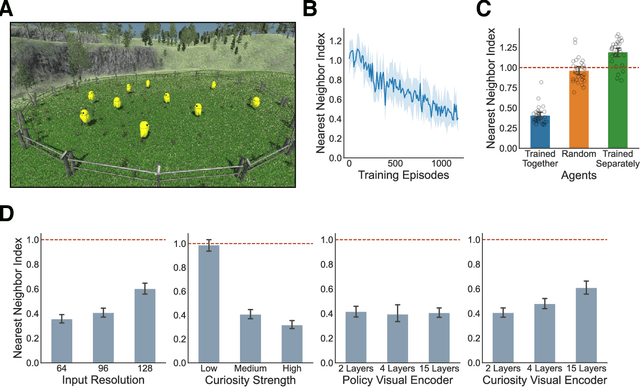

Abstract:Collective behavior is widespread across the animal kingdom. To date, however, the developmental and mechanistic foundations of collective behavior have not been formally established. What learning mechanisms drive the development of collective behavior in newborn animals? Here, we used deep reinforcement learning and curiosity-driven learning -- two learning mechanisms deeply rooted in psychological and neuroscientific research -- to build newborn artificial agents that develop collective behavior. Like newborn animals, our agents learn collective behavior from raw sensory inputs in naturalistic environments. Our agents also learn collective behavior without external rewards, using only intrinsic motivation (curiosity) to drive learning. Specifically, when we raise our artificial agents in natural visual environments with groupmates, the agents spontaneously develop ego-motion, object recognition, and a preference for groupmates, rapidly learning all of the core skills required for collective behavior. This work bridges the divide between high-dimensional sensory inputs and collective action, resulting in a pixels-to-actions model of collective animal behavior. More generally, we show that two generic learning mechanisms -- deep reinforcement learning and curiosity-driven learning -- are sufficient to learn collective behavior from unsupervised natural experience.
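The abstract says the agents learn from intrinsic motivation (curiosity) alone but does not give the formulation. One widely used formulation defines the intrinsic reward as the error of a forward model that predicts the next state's features. The sketch below assumes that formulation; `curiosity_reward` and its arguments are hypothetical names for illustration.

```python
import numpy as np

def curiosity_reward(predicted_next, actual_next) -> float:
    """Intrinsic reward as forward-model prediction error (assumed formulation).

    predicted_next: features the agent's forward model expected to see next.
    actual_next:    features actually observed after acting.
    """
    diff = np.asarray(predicted_next, dtype=float) - np.asarray(actual_next, dtype=float)
    # Half the squared error: larger surprise yields larger reward.
    return float(0.5 * np.sum(diff ** 2))
```

Under this assumption, an agent is rewarded for visiting states it cannot yet predict, so no external reward signal is needed to drive exploration.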

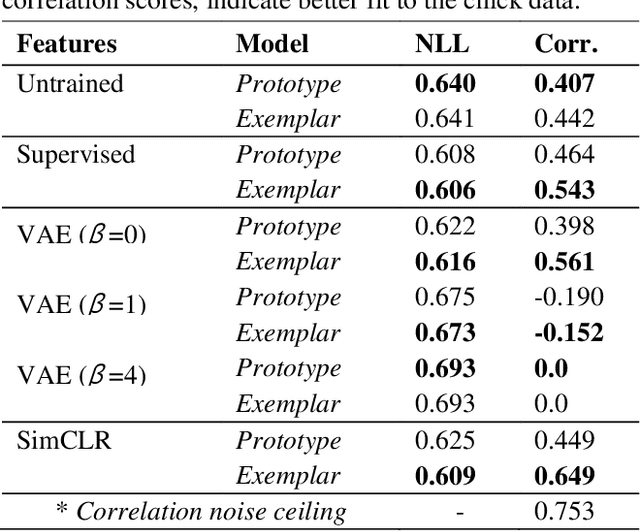

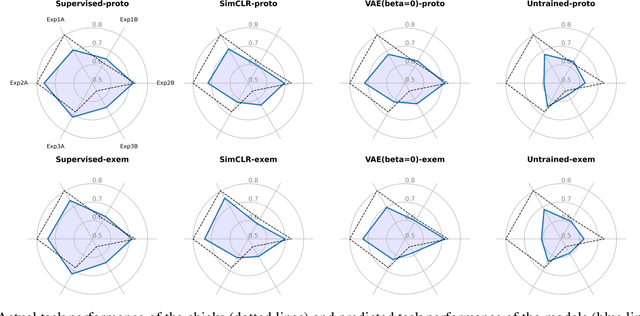

Modeling Object Recognition in Newborn Chicks using Deep Neural Networks

Jun 14, 2021

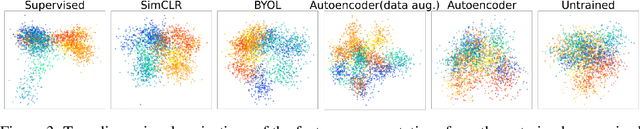

Abstract:In recent years, the brain and cognitive sciences have made great strides developing a mechanistic understanding of object recognition in mature brains. Despite this progress, fundamental questions remain about the origins and computational foundations of object recognition. What learning algorithms underlie object recognition in newborn brains? Since newborn animals learn largely through unsupervised learning, we explored whether unsupervised learning algorithms can be used to predict the view-invariant object recognition behavior of newborn chicks. Specifically, we used feature representations derived from unsupervised deep neural networks (DNNs) as inputs to cognitive models of categorization. We show that features derived from unsupervised DNNs make competitive predictions about chick behavior compared to supervised features. More generally, we argue that linking controlled-rearing studies to image-computable DNN models opens new experimental avenues for studying the origins and computational basis of object recognition in newborn animals.
