Denizhan Pak

A quantum semantic framework for natural language processing

Jun 11, 2025

Abstract: Semantic degeneracy represents a fundamental property of natural language that extends beyond simple polysemy to encompass the combinatorial explosion of potential interpretations that emerges as semantic expressions increase in complexity. Large Language Models (LLMs) and other modern NLP systems face inherent limitations precisely because they operate within natural language itself, making them subject to the same interpretive constraints imposed by semantic degeneracy. In this work, we argue using Kolmogorov complexity that as an expression's complexity grows, the likelihood of any interpreting agent (human or LLM-powered AI) recovering the single intended meaning vanishes. This computational intractability suggests the classical view that linguistic forms possess meaning in and of themselves is flawed. We alternatively posit that meaning is instead actualized through an observer-dependent interpretive act. To test this, we conducted a semantic Bell inequality test using diverse LLM agents as "computational cognitive systems" to interpret ambiguous word pairs under varied contextual settings. Across several independent experiments, we found average CHSH expectation values ranging from 1.2 to 2.8, with several runs yielding values (e.g., 2.3-2.4) that significantly violate the classical boundary ($|S| \leq 2$). This demonstrates that linguistic interpretation under ambiguity can exhibit non-classical contextuality, consistent with results from human cognition experiments. These results inherently imply that classical frequentist-based analytical approaches for natural language are necessarily lossy. Instead, we propose that Bayesian-style repeated sampling approaches can provide more practically useful and appropriate characterizations of linguistic meaning in context.
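For readers unfamiliar with the CHSH statistic mentioned in the abstract above, the following is a minimal sketch of how such a value is conventionally computed from paired binary outcomes. It is not the authors' code: the function names `correlation` and `chsh`, the encoding of each interpretation as +1 or -1, and the choice of which setting pair carries the minus sign are all assumptions made for illustration.

```python
import math
from typing import Sequence, Tuple


def correlation(pairs: Sequence[Tuple[int, int]]) -> float:
    """Mean product of paired outcomes, each assumed to be encoded as +1 or -1."""
    if not pairs:
        raise ValueError("need at least one outcome pair")
    return sum(a * b for a, b in pairs) / len(pairs)


def chsh(e_ab: float, e_ab2: float, e_a2b: float, e_a2b2: float) -> float:
    """Standard CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b').

    Which of the four setting pairs takes the minus sign is a convention;
    it is an assumption here, not something stated in the abstract.
    """
    return e_ab - e_ab2 + e_a2b + e_a2b2


if __name__ == "__main__":
    # Hypothetical correlations, chosen only to illustrate the bounds.
    c = 1 / math.sqrt(2)
    s = chsh(c, -c, c, c)
    print(f"S = {s:.3f}, exceeds classical bound: {abs(s) > 2}")
```

Any assignment of fixed, context-independent outcomes keeps $|S| \leq 2$, while the quantum (Tsirelson) limit is $2\sqrt{2} \approx 2.83$, so the 2.3 to 2.4 values reported in the abstract sit between the two bounds.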

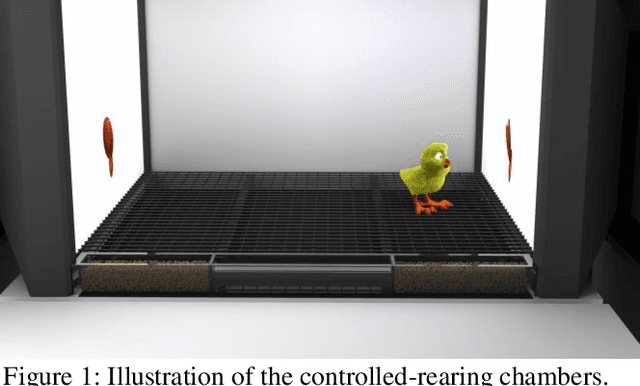

A newborn embodied Turing test for view-invariant object recognition

Jun 08, 2023

Abstract: Recent progress in artificial intelligence has renewed interest in building machines that learn like animals. Almost all of the work comparing learning across biological and artificial systems comes from studies where animals and machines received different training data, obscuring whether differences between animals and machines emerged from differences in learning mechanisms or from differences in training data. We present an experimental approach, a "newborn embodied Turing Test", that allows newborn animals and machines to be raised in the same environments and tested with the same tasks, permitting direct comparison of their learning abilities. To make this platform, we first collected controlled-rearing data from newborn chicks, then performed "digital twin" experiments in which machines were raised in virtual environments that mimicked the rearing conditions of the chicks. We found that (1) machines (deep reinforcement learning agents with intrinsic motivation) can spontaneously develop visually guided preference behavior, akin to imprinting in newborn chicks, and (2) machines are still far from newborn-level performance on object recognition tasks. Almost all of the chicks developed view-invariant object recognition, whereas the machines tended to develop view-dependent recognition. The learning outcomes were also far more constrained in the chicks than in the machines. Ultimately, we anticipate that this approach will help researchers develop embodied AI systems that learn like newborn animals.

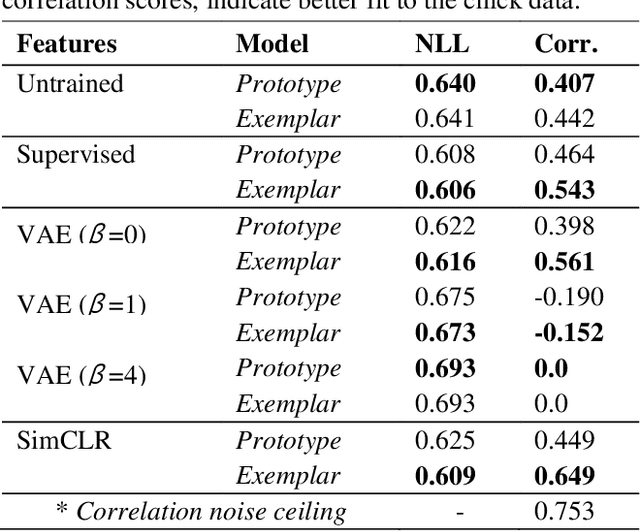

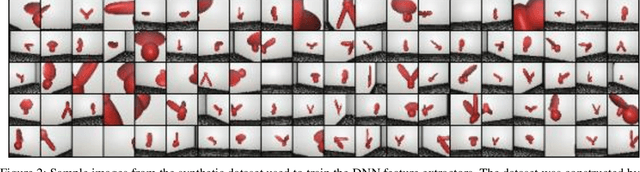

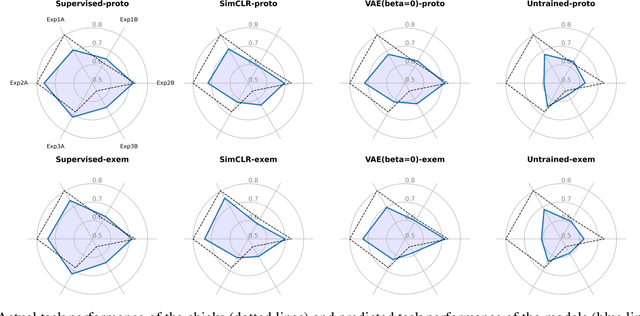

Modeling Object Recognition in Newborn Chicks using Deep Neural Networks

Jun 14, 2021

Abstract: In recent years, the brain and cognitive sciences have made great strides developing a mechanistic understanding of object recognition in mature brains. Despite this progress, fundamental questions remain about the origins and computational foundations of object recognition. What learning algorithms underlie object recognition in newborn brains? Since newborn animals learn largely through unsupervised learning, we explored whether unsupervised learning algorithms can be used to predict the view-invariant object recognition behavior of newborn chicks. Specifically, we used feature representations derived from unsupervised deep neural networks (DNNs) as inputs to cognitive models of categorization. We show that features derived from unsupervised DNNs make competitive predictions about chick behavior compared to supervised features. More generally, we argue that linking controlled-rearing studies to image-computable DNN models opens new experimental avenues for studying the origins and computational basis of object recognition in newborn animals.
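The abstract above does not say which cognitive models of categorization were used. As a rough illustration of the general idea of feeding feature vectors into such a model, here is a minimal sketch of one common family, a prototype model with exponential similarity and a ratio choice rule. The function names, the sensitivity parameter `c`, and the toy feature vectors are all hypothetical; in practice the features would come from a trained network rather than being written by hand.

```python
import math
from typing import List, Sequence


def prototype(features: Sequence[Sequence[float]]) -> List[float]:
    """Average the feature vectors of one category into a single prototype."""
    n = len(features)
    return [sum(column) / n for column in zip(*features)]


def similarity(x: Sequence[float], proto: Sequence[float], c: float = 1.0) -> float:
    """Exponentially decaying similarity in Euclidean distance (c is an assumed sensitivity)."""
    distance = math.sqrt(sum((xi - pi) ** 2 for xi, pi in zip(x, proto)))
    return math.exp(-c * distance)


def choice_probability(x: Sequence[float], proto_a: Sequence[float],
                       proto_b: Sequence[float], c: float = 1.0) -> float:
    """Probability of choosing category A over B via a ratio choice rule."""
    s_a = similarity(x, proto_a, c)
    s_b = similarity(x, proto_b, c)
    return s_a / (s_a + s_b)


if __name__ == "__main__":
    # Toy two-dimensional "features" standing in for DNN embeddings.
    imprinted = prototype([[0.0, 0.0], [0.2, 0.0]])
    novel = prototype([[1.0, 1.0], [1.2, 1.0]])
    print(choice_probability([0.1, 0.1], imprinted, novel))
```

A model of this kind yields a predicted preference for each test stimulus, which could then be compared against observed choice behavior.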
