Development of collective behavior in newborn artificial agents


Nov 06, 2021

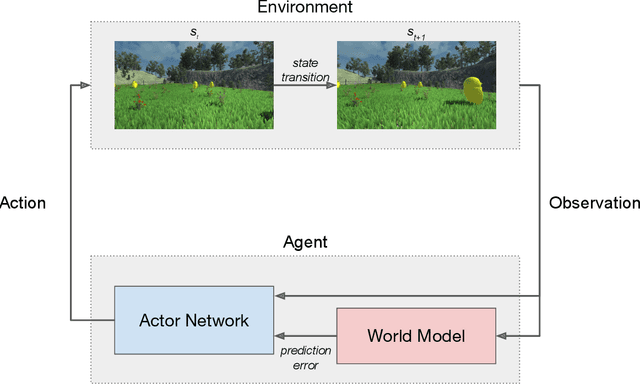

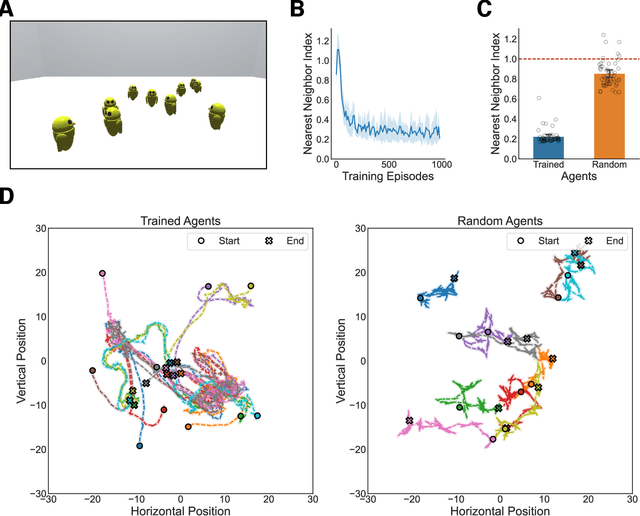

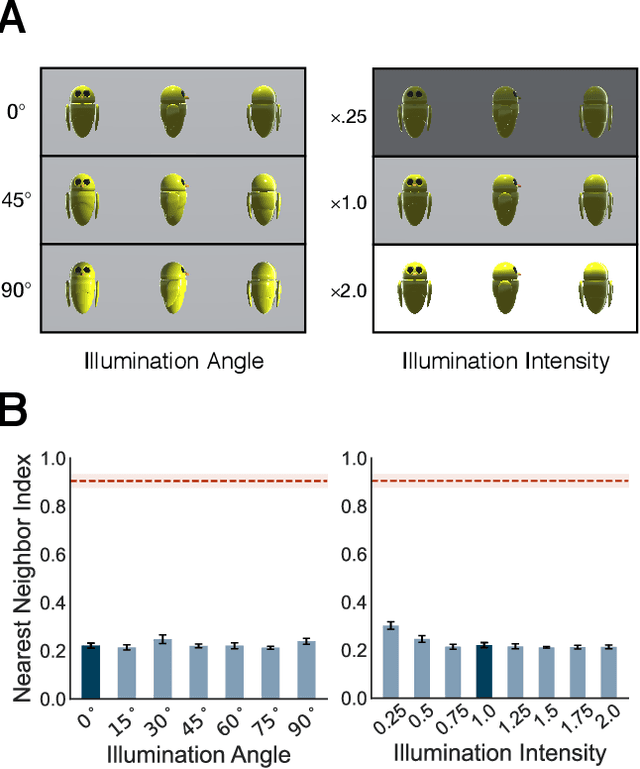

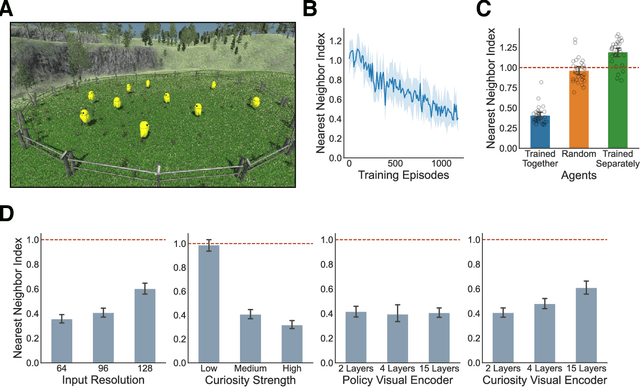

Collective behavior is widespread across the animal kingdom. To date, however, the developmental and mechanistic foundations of collective behavior have not been formally established. What learning mechanisms drive the development of collective behavior in newborn animals? Here, we used deep reinforcement learning and curiosity-driven learning -- two learning mechanisms deeply rooted in psychological and neuroscientific research -- to build newborn artificial agents that develop collective behavior. Like newborn animals, our agents learn collective behavior from raw sensory inputs in naturalistic environments. Our agents also learn collective behavior without external rewards, using only intrinsic motivation (curiosity) to drive learning. Specifically, when we raise our artificial agents in natural visual environments with groupmates, the agents spontaneously develop ego-motion, object recognition, and a preference for groupmates, rapidly learning all of the core skills required for collective behavior. This work bridges the divide between high-dimensional sensory inputs and collective action, resulting in a pixels-to-actions model of collective animal behavior. More generally, we show that two generic learning mechanisms -- deep reinforcement learning and curiosity-driven learning -- are sufficient to learn collective behavior from unsupervised natural experience.
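The abstract does not say how the curiosity signal is computed. As a rough illustration only, the sketch below assumes one common formulation of curiosity-driven learning: the intrinsic reward is the prediction error of a forward model that guesses the next observation features from the current features and action. The `ForwardModel` class, its linear form, and the toy feature vectors are hypothetical and are not taken from the paper, which works with raw pixels and deep networks.

```python
import random


class ForwardModel:
    """Hypothetical linear forward model: predicts next features from
    the current features concatenated with the action."""

    def __init__(self, feat_dim, act_dim, lr=0.1, seed=0):
        rng = random.Random(seed)
        self.lr = lr
        in_dim = feat_dim + act_dim
        # One weight row per predicted feature, small random init.
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                  for _ in range(feat_dim)]

    def predict(self, feats, action):
        x = list(feats) + list(action)
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]

    def intrinsic_reward(self, feats, action, next_feats):
        # Curiosity signal (assumed form): half the squared prediction error.
        pred = self.predict(feats, action)
        return 0.5 * sum((p - t) ** 2 for p, t in zip(pred, next_feats))

    def update(self, feats, action, next_feats):
        # One gradient-descent step on the squared prediction error.
        x = list(feats) + list(action)
        pred = self.predict(feats, action)
        for row, p, t in zip(self.w, pred, next_feats):
            err = p - t
            for j, xj in enumerate(x):
                row[j] -= self.lr * err * xj


# Toy transition, seen repeatedly: it becomes predictable, so the
# curiosity reward for it shrinks and stops motivating the agent.
model = ForwardModel(feat_dim=2, act_dim=2)
feats, action, next_feats = [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]

rewards = []
for _ in range(20):
    rewards.append(model.intrinsic_reward(feats, action, next_feats))
    model.update(feats, action, next_feats)

print(f"first reward: {rewards[0]:.4f}, last reward: {rewards[-1]:.6f}")
```

In a full agent, a reward of this kind would replace the external reward in the reinforcement learning update, so the policy is pushed toward transitions it cannot yet predict. No external reward appears anywhere in the loop, which matches the abstract's description of learning from intrinsic motivation alone.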
